The requirements for this assignment come from: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/2822

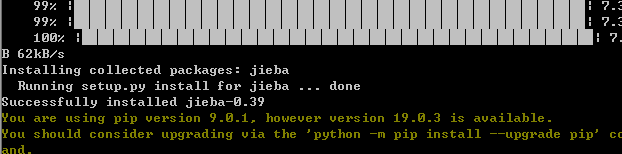

Initializing the jieba environment:

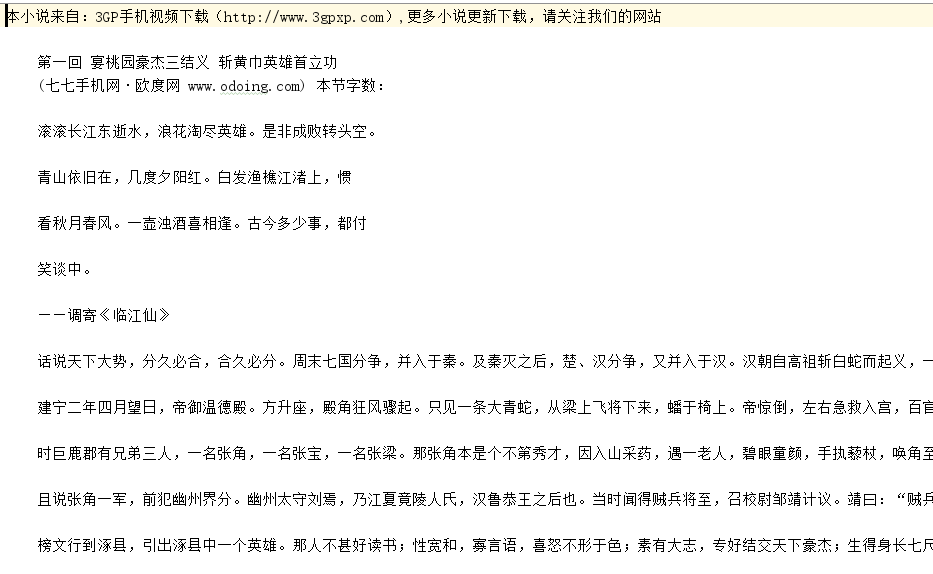

1. Download a long Chinese novel.

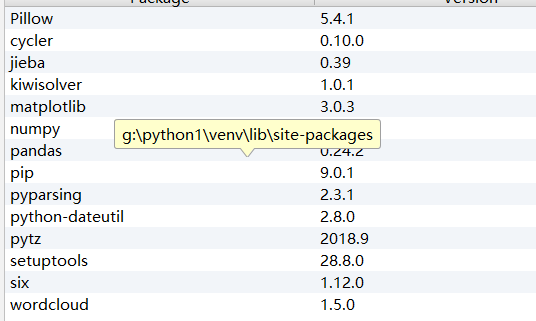

2. Read the text to be analyzed from a file.
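Chinese novels downloaded as `.txt` may be saved as GBK/GB18030 rather than UTF-8, in which case `open(..., encoding="utf-8")` raises `UnicodeDecodeError`. A minimal sketch of a reader that tries UTF-8 (with or without a BOM) first and falls back to GB18030; the function name `read_novel` and the list of encodings are assumptions, not part of the original assignment:

```python
from pathlib import Path


def read_novel(path, encodings=("utf-8-sig", "gb18030")):
    """Return the file's text, trying each encoding in turn.

    Assumption: the file is UTF-8 (BOM optional) or GBK/GB18030.
    'utf-8-sig' also strips a leading BOM if one is present.
    """
    raw = Path(path).read_bytes()
    for enc in encodings:
        try:
            return raw.decode(enc)
        except UnicodeDecodeError:
            continue  # not this encoding, try the next one
    raise ValueError(f"could not decode {path} with any of {encodings}")
```

The returned string can be used wherever the original code uses `test`.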

3. Install jieba and use it to segment the Chinese text.

```shell
pip install jieba
```

```python
import jieba

jieba.lcut(text)  # segment text and return a list of words
```

4. Update the dictionary by adding domain-specific vocabulary from the text being analyzed.

```python
jieba.add_word('天罡北斗阵')  # add words one at a time
jieba.load_userdict(word_dict)  # load a dictionary text file; word_dict is its path (one word per line)
```
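Why adding a word such as `天罡北斗阵` changes the segmentation can be illustrated with a toy dictionary-based segmenter. jieba itself builds a DAG of candidate words from its prefix dictionary, picks the most probable path by dynamic programming and falls back to an HMM for unknown words; the sketch below swaps in the much simpler forward maximum matching, and the function `fmm_segment` and the tiny dictionaries are hypothetical:

```python
def fmm_segment(text, dictionary, max_len=5):
    """Forward maximum matching: at each position take the longest
    dictionary word (up to max_len characters), else one character."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking down to one character
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in dictionary:
                words.append(piece)
                i += size
                break
    return words


base = {"布下", "北斗"}
print(fmm_segment("布下天罡北斗阵", base))
# ['布下', '天', '罡', '北斗', '阵']
print(fmm_segment("布下天罡北斗阵", base | {"天罡北斗阵"}))
# ['布下', '天罡北斗阵']
```

Without the entry the formation name is split into fragments that would be counted separately; with it, the name is counted as one word.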

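```python
# Read the novel
with open(r"..\python1\threeCountry.txt", "r", encoding="utf-8") as f:
    test = f.read()
# Custom dictionary of Three Kingdoms character names
jieba.load_userdict(r"..\python1\比较全的三国人名.txt")
# Stopword list (split() handles words separated by newlines or spaces)
with open(r"..\python1\stops_chinese.txt", "r", encoding="utf-8") as f:
    stops = f.read().split()
# Punctuation to strip from the text
ch = "《》 :,。、-!?"
```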
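The punctuation in `ch` is removed with one `str.replace` call per character. A sketch of the same removal in a single pass with `str.maketrans`/`str.translate` (the helper name `strip_punct` is hypothetical). If the novel uses full-width punctuation such as `,` or `:`, those characters need to be added to `ch` too:

```python
# Punctuation characters to delete, as in the ch string above
ch = "《》 :,。、-!?"

# Translation table mapping every character in ch to None (i.e. delete it)
table = str.maketrans("", "", ch)


def strip_punct(text):
    """Remove every character listed in ch in a single pass."""
    return text.translate(table)


print(strip_punct("《三国演义》:话说天下大势,分久必合。"))
# 三国演义话说天下大势分久必合
```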

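Conversion code (scel_to_text). It converts Sogou Pinyin `.scel` cell dictionaries into plain-text word lists, one word per line, which `jieba.load_userdict` can load: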

```python
# -*- coding: utf-8 -*-
import os
import struct

# Offset of the pinyin table
startPy = 0x1540
# Offset of the Chinese phrase table
startChinese = 0x2628
# Global pinyin table: index -> pinyin
GPy_Table = {}


# Convert raw bytes (16-bit code units) to a string, dropping NUL padding
def byte2str(data):
    pos = 0
    s = ''
    while pos < len(data):
        c = chr(struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0])
        if c != chr(0):
            s += c
        pos += 2
    return s


# Build the pinyin table
def getPyTable(data):
    data = data[4:]
    pos = 0
    while pos < len(data):
        index = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
        pos += 2
        lenPy = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
        pos += 2
        py = byte2str(data[pos:pos + lenPy])
        GPy_Table[index] = py
        pos += lenPy


# Get the pinyin of one phrase
def getWordPy(data):
    pos = 0
    ret = ''
    while pos < len(data):
        index = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
        ret += GPy_Table[index]
        pos += 2
    return ret


# Read the Chinese phrase table; returns a list of (count, pinyin, word) tuples
def getChinese(data):
    GTable = []
    pos = 0
    while pos < len(data):
        # Number of homophones sharing this pinyin
        same = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
        pos += 2
        # Length of the pinyin index table
        py_table_len = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
        pos += 2
        # Pinyin index table
        py = getWordPy(data[pos:pos + py_table_len])
        pos += py_table_len
        for _ in range(same):
            # Length of the Chinese phrase
            c_len = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
            pos += 2
            # The Chinese phrase
            word = byte2str(data[pos:pos + c_len])
            pos += c_len
            # Length of the extension data
            ext_len = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
            pos += 2
            # Word frequency (stored at the start of the extension data)
            count = struct.unpack('H', bytes([data[pos], data[pos + 1]]))[0]
            GTable.append((count, py, word))
            # Skip to the next phrase
            pos += ext_len
    return GTable


def scel2txt(file_name):
    print('-' * 60)
    with open(file_name, 'rb') as f:
        data = f.read()
    print("Dictionary name:", byte2str(data[0x130:0x338]))
    print("Dictionary type:", byte2str(data[0x338:0x540]))
    print("Description:", byte2str(data[0x540:0xd40]))
    print("Examples:", byte2str(data[0xd40:startPy]))
    getPyTable(data[startPy:startChinese])
    return getChinese(data[startChinese:])


if __name__ == '__main__':
    # Folder containing the .scel files (change to your own)
    in_path = r"F:\text"
    # Folder for the converted .txt files
    out_path = r"F:\text"
    fin = [fname for fname in os.listdir(in_path) if fname[-5:] == ".scel"]
    for f in fin:
        try:
            file_path = os.path.join(out_path, os.path.splitext(f)[0] + '.txt')
            with open(file_path, 'a+', encoding='utf-8') as file:
                for word in scel2txt(os.path.join(in_path, f)):
                    # One word per line, the format jieba.load_userdict expects
                    file.write(word[2] + '\n')
            # Delete the .scel file once it has been converted
            os.remove(os.path.join(in_path, f))
        except Exception as e:
            print(e)
```
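The `byte2str` helper above reads two bytes at a time with `struct.unpack('H', ...)`, which uses the machine's native byte order; on the usual little-endian PC that amounts to decoding the bytes as UTF-16LE. Assuming the `.scel` strings are UTF-16LE, a shorter version can use `bytes.decode` directly. Both function names below are hypothetical; `byte2str_loop` pins the byte order explicitly with `'<H'` for comparison:

```python
import struct


def byte2str_fast(data: bytes) -> str:
    """Decode UTF-16LE bytes and drop NUL padding characters.

    Assumes the .scel strings are UTF-16LE with an even byte length.
    """
    return data.decode("utf-16-le").replace("\x00", "")


def byte2str_loop(data: bytes) -> str:
    """Per-code-unit decoding with an explicit little-endian format."""
    chars = []
    for (unit,) in struct.iter_unpack("<H", data):
        if unit != 0:  # skip NUL padding
            chars.append(chr(unit))
    return "".join(chars)


raw = "三国人名".encode("utf-16-le") + b"\x00\x00"
print(byte2str_fast(raw))  # 三国人名
print(byte2str_loop(raw) == byte2str_fast(raw))  # True
```

For characters outside the Basic Multilingual Plane, `decode` also joins surrogate pairs into a single character, which the per-unit loop does not.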

5. Count word frequencies.

```python
wordict = {}
for w in tokens:
    # Skip single-character tokens
    if len(w) == 1:
        continue
    wordict[w] = wordict.get(w, 0) + 1
```
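The counting loop can also be written with `collections.Counter` from the standard library. A sketch, where `count_words` is a hypothetical helper name and the token list is made-up sample data:

```python
from collections import Counter


def count_words(tokens):
    """Count tokens, skipping single-character ones as the loop does."""
    return Counter(w for w in tokens if len(w) > 1)


tokens = ["曹操", "曰", "曹操", "孔明", "之", "孔明", "曹操"]
wordict = count_words(tokens)
print(wordict.most_common(2))  # [('曹操', 3), ('孔明', 2)]
```

`Counter` is a `dict` subclass, so later code that calls `wordict.items()` keeps working, and `most_common(n)` can replace the manual sort for the top-N step.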

7. Exclude grammatical words: pronouns, articles, conjunctions, and other stopwords.

```python
# stops: the stopword list read from stops_chinese.txt
wordlist = jieba.lcut(test)
tokens = [token for token in wordlist if token not in stops]
```
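`token not in stops` scans the whole list for every token when `stops` is a list, which gets slow over a full novel; a `set` gives average constant-time lookups. A sketch assuming the stopword file holds one word per line (`load_stopwords` and `remove_stopwords` are hypothetical helper names):

```python
def load_stopwords(path, encoding="utf-8"):
    """Read a stopword file (one word per line) into a set, skipping blank lines."""
    with open(path, encoding=encoding) as f:
        return {line.strip() for line in f if line.strip()}


def remove_stopwords(words, stops):
    """Keep only the tokens that are not in the stopword set."""
    return [w for w in words if w not in stops]


stops = {"之", "也", "曰"}
print(remove_stopwords(["玄德", "曰", "孔明", "之", "计"], stops))
# ['玄德', '孔明', '计']
```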

8. Output the 20 most frequent words and save the results to a file.

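```python
# Sort (word, count) pairs by count, descending
wordsort = sorted(wordict.items(), key=lambda x: x[1], reverse=True)
# Print the top 20 (slicing also works if there are fewer than 20 words)
for item in wordsort[:20]:
    print(item)
```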
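Step 8 also asks for the top 20 to be saved to a file. A sketch using only the standard library (`save_top_n` is a hypothetical name); `heapq.nlargest` returns the same result as sorting and slicing but avoids sorting the whole vocabulary:

```python
import csv
import heapq


def save_top_n(wordict, path, n=20):
    """Write the n most frequent (word, count) pairs to a CSV file and return them."""
    top = heapq.nlargest(n, wordict.items(), key=lambda kv: kv[1])
    # utf-8-sig writes a BOM, which helps spreadsheet programs such as Excel
    # recognise the file as UTF-8 and display the Chinese text correctly
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["word", "count"])
        writer.writerows(top)
    return top
```

Calling `save_top_n(wordict, "top20.csv")` after the counting step returns the same 20 pairs that are printed above and writes them to `top20.csv`.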

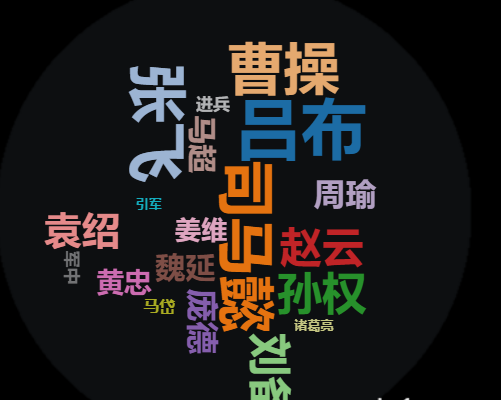

9. Generate a word cloud.

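Passing the counts to `generate_from_frequencies` makes each word's size follow its frequency. WordCloud's default font has no Chinese glyphs, so `font_path` must point to a font that does (the SimHei path below is an assumption for Windows; adjust it for your system).

```python
import matplotlib.pyplot as plt
import pandas as pd
from wordcloud import WordCloud

# Save all (word, count) pairs to a CSV file
pd.DataFrame(wordsort).to_csv('three.csv', encoding='utf-8')

# Size words by their counts; font_path is an assumed Windows font with Chinese glyphs
mywc = WordCloud(font_path=r"C:\Windows\Fonts\simhei.ttf").generate_from_frequencies(wordict)
plt.imshow(mywc)
plt.axis("off")
plt.show()
mywc.to_file(r"F:\txt\threestory.png")
```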

Full code:

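```python
# -*- coding: utf-8 -*-
import jieba
import matplotlib.pyplot as plt
import pandas as pd
from wordcloud import WordCloud

# Read the novel
with open(r"..\python1\threeCountry.txt", "r", encoding="utf-8") as f:
    test = f.read()

# Stopword list as a set for fast lookups (split() handles newlines or spaces)
with open(r"..\python1\stops_chinese.txt", "r", encoding="utf-8") as f:
    stops = set(f.read().split())

# Custom dictionary of Three Kingdoms character names
jieba.load_userdict(r"..\python1\比较全的三国人名.txt")

# Strip punctuation
ch = "《》 :,。、-!?"
for c in ch:
    test = test.replace(c, '')

# Update the dictionary with domain-specific vocabulary
jieba.add_word('哎哟不错哟')

# Chinese word segmentation, then stopword removal
wordlist = jieba.lcut(test)
tokens = [token for token in wordlist if token not in stops]

# Count word frequencies, skipping single-character tokens
wordict = {}
for w in tokens:
    if len(w) == 1:
        continue
    wordict[w] = wordict.get(w, 0) + 1

# Sort by frequency, descending
wordsort = list(wordict.items())
wordsort.sort(key=lambda x: x[1], reverse=True)

# Print the 20 most frequent words
for item in wordsort[:20]:
    print(item)

# Save all (word, count) pairs to a CSV file
pd.DataFrame(wordsort).to_csv('three.csv', encoding='utf-8')

# Word cloud sized by frequency; font_path is an assumed Windows font with Chinese glyphs
mywc = WordCloud(font_path=r"C:\Windows\Fonts\simhei.ttf").generate_from_frequencies(wordict)
plt.imshow(mywc)
plt.axis("off")
plt.show()
mywc.to_file(r"F:\txt\threestory.png")
```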