This time, let's try scraping CSDN's popular articles.

Without further ado, let's head straight to the CSDN website.

(Truth be told, Alibaba's anti-scraping measures have worn me down so much that I'm out of words...)

I. URL Analysis

Type "Python" into the search box and click Search.

This brings up all the popular blog posts about "Python", with fields such as [title, URL, view count]. Our task is to scrape these posts.

Looking at the URL of the results page, it's easy to see that `p` is the page number and `q` is the search keyword.
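To double-check which query parameter does what, the URL can be taken apart with the standard library. A minimal sketch; the sample URL follows the format used in the code of section III, and `page_and_keyword` is a hypothetical helper name:

```python
from urllib.parse import urlsplit, parse_qs

# Sample search URL in the format used later in this article (page 2, keyword "Python")
SEARCH_URL = ("https://so.csdn.net/so/search/s.do?p=2&q=Python"
              "&t=blog&viparticle=&domain=&o=&s=&u=&l=&f=&rbg=0")


def page_and_keyword(url):
    """Return (page number, keyword) read from the p and q query parameters."""
    query = parse_qs(urlsplit(url).query)  # empty parameters such as o= are dropped
    return int(query["p"][0]), query["q"][0]


print(page_and_keyword(SEARCH_URL))  # (2, 'Python')
```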

II. XPath Paths

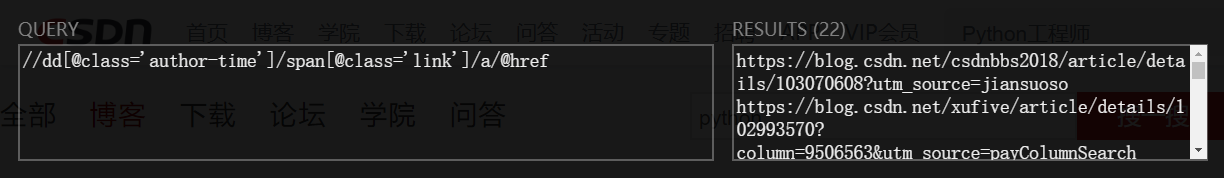

打开开发者模式,匹配我们所需信息的标签:

- 通过

//dd[@class='author-time']/span[@class='link']/a/@href匹配各个博客的URL地址;

- 通过

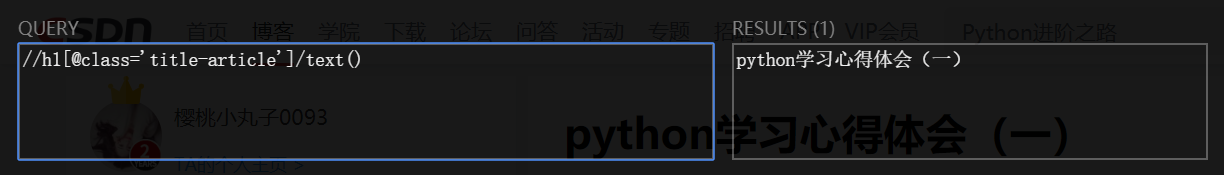

//h1[@class='title-article']/text()匹配各个博客的标题。

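Note:

If anything about these XPath expressions is unclear, you can review the post "Learning XPath Syntax and Using the lxml Module" (《XPath语法的学习与lxml模块的使用》).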
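To see what the two expressions select without hitting the network, here is a sketch against small hand-written HTML fragments (the markup is an assumption modeled on the class names above, not CSDN's real page). It swaps in the standard library's `xml.etree.ElementTree` for lxml, which only works on well-formed markup and only understands a subset of XPath (no `/@href` or `/text()` steps), so the attribute and text are read in Python instead. Real pages are not well-formed XML, which is why the article uses `lxml.etree.HTML`.

```python
import xml.etree.ElementTree as ET

# Hand-written stand-ins for a results page and a post page (assumed markup)
RESULTS_PAGE = """<html><body><dl>
  <dd class="author-time"><span class="link"><a href="https://blog.csdn.net/u1/article/details/1">u1</a></span></dd>
  <dd class="author-time"><span class="link"><a href="https://blog.csdn.net/u2/article/details/2">u2</a></span></dd>
</dl></body></html>"""
POST_PAGE = """<html><body><h1 class="title-article">Hello XPath</h1></body></html>"""


def extract_hrefs(markup):
    root = ET.fromstring(markup)
    # Same path as //dd[@class='author-time']/span[@class='link']/a, then read @href
    anchors = root.findall(".//dd[@class='author-time']/span[@class='link']/a")
    return [a.get("href") for a in anchors]


def extract_title(markup):
    root = ET.fromstring(markup)
    # Same path as //h1[@class='title-article'], then read its text()
    h1 = root.find(".//h1[@class='title-article']")
    return h1.text if h1 is not None else None


print(extract_hrefs(RESULTS_PAGE))
print(extract_title(POST_PAGE))  # Hello XPath
```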

III. Code Implementation

1. Set up the input prompts:

```python
keyword = input("Enter a keyword: ")
pn_start = int(input("Start page: "))
pn_end = int(input("End page: "))
```
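`int()` raises `ValueError` on non-numeric input, and a start page larger than the end page silently produces an empty range, so nothing gets scraped. If you want to catch both up front, here is a sketch with a hypothetical helper `parse_page_range`:

```python
def parse_page_range(start_text, end_text):
    """Turn the two prompt answers into an inclusive (start, end) page range."""
    start, end = int(start_text), int(end_text)  # ValueError on non-numeric input
    if start < 1 or end < start:
        raise ValueError("need 1 <= start page <= end page, got %d and %d" % (start, end))
    return start, end


print(parse_page_range("1", "3"))  # (1, 3)
```

It would be used as `pn_start, pn_end = parse_page_range(input("Start page: "), input("End page: "))`.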

2. Build the URLs:

```python
# Note the +1: range() excludes its end value
for pn in range(pn_start, pn_end + 1):
    url = "https://so.csdn.net/so/search/s.do?p=%s&q=%s&t=blog&viparticle=&domain=&o=&s=&u=&l=&f=&rbg=0" % (pn, keyword)
```
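One caveat with `%` formatting: the keyword goes into the URL unescaped. urllib sends the request line as ASCII, so a Chinese keyword makes the request fail with `UnicodeEncodeError`, and a keyword containing spaces or `&` would corrupt the query string. A sketch that lets `urllib.parse.urlencode` percent-encode every parameter (`build_search_url` is a hypothetical name; the parameter list is copied from the URL above):

```python
from urllib.parse import urlencode

BASE = "https://so.csdn.net/so/search/s.do"


def build_search_url(page, keyword):
    """Build a search URL with p and q percent-encoded by urlencode."""
    params = {"p": page, "q": keyword, "t": "blog", "viparticle": "", "domain": "",
              "o": "", "s": "", "u": "", "l": "", "f": "", "rbg": 0}
    return BASE + "?" + urlencode(params)


for pn in range(1, 3):
    print(build_search_url(pn, "爬虫 入门"))
```

Inside the loop above, `url = build_search_url(pn, keyword)` would replace the `%` line.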

3. Build the Request object:

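```python
import urllib.request as ur

import user_agent  # helper module supplying a desktop-browser User-Agent via get_user_agent_pc()


# Return a Request object
def getRequest(url):
    return ur.Request(
        url=url,
        headers={
            'User-Agent': user_agent.get_user_agent_pc(),
        }
    )


for pn in range(pn_start, pn_end + 1):
    url = "https://so.csdn.net/so/search/s.do?p=%s&q=%s&t=blog&viparticle=&domain=&o=&s=&u=&l=&f=&rbg=0" % (pn, keyword)
    # Build the Request object
    request = getRequest(url)
```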
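The `user_agent` module is not part of the standard library, and its source isn't shown in this article. If you don't have it, a minimal stand-in is sketched below; the User-Agent strings and the function name `get_user_agent_pc` (chosen to mirror the article's call) are assumptions. Constructing a `Request` does not touch the network, so you can inspect the header it will send:

```python
import random
import urllib.request as ur

# Hypothetical stand-in for the article's user_agent module: a few desktop UA strings
PC_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def get_user_agent_pc():
    """Return one desktop-browser User-Agent string, picked at random."""
    return random.choice(PC_USER_AGENTS)


def getRequest(url):
    return ur.Request(url=url, headers={'User-Agent': get_user_agent_pc()})


req = getRequest("https://so.csdn.net/so/search/s.do?p=1&q=Python")
# urllib normalizes header names with str.capitalize(), hence 'User-agent'
print(req.get_header('User-agent'))
print(req.full_url)
```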

4. Fetch the results page and extract the post URLs:

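```python
import lxml.etree as le

# The lines below go inside the for loop from step 3
# Open the Request object
response = ur.urlopen(request).read()
# response is bytes, which le.HTML can parse directly into an element tree
href_s = le.HTML(response).xpath("//dd[@class='author-time']/span[@class='link']/a/@href")
print(href_s)
```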

This prints the list of post URLs found on each results page.

5. Iterate over the href_s list and print each URL and title:

```python
for href in href_s:
    print(href)
    response_blog = ur.urlopen(getRequest(href)).read()
    title = le.HTML(response_blog).xpath("//h1[@class='title-article']/text()")[0]
    print(title)
```

Running it, the results do come out, but what on earth is this `list index out of range`???
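`list index out of range` is the `IndexError` raised by the `[0]`: on some pages the XPath found no `<h1 class="title-article">` and returned an empty list. A defensive sketch that avoids the crash and makes such pages easy to spot (the helper name `first_or_none` is hypothetical):

```python
def first_or_none(results):
    """Return the first XPath result, or None when nothing matched."""
    return results[0] if results else None


# An XPath query that matches nothing returns an empty list
print(first_or_none([]))                  # None
print(first_or_none(["Python crawler"]))  # Python crawler
```

In step 5 this would read `title = first_or_none(le.HTML(response_blog).xpath(...))`, followed by `if title is None: print("no title found:", href)`.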

6. Print what urlopen returns to check it:

```python
for href in href_s:
    try:
        print(href)
        response_blog = ur.urlopen(getRequest(href)).read()
        print(response_blog)
    except Exception as e:
        print(e)
```

Something was fetched, but it doesn't look like the page content we want??? Seeing this jumble of `\x1f\x8b\x08\x00`, my first thought was an "encoding" problem.
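For the record, `\x1f\x8b` is the magic number that starts every gzip stream (the `\x08` after it means the deflate method), so those bytes are a gzip-compressed body rather than mis-decoded text. A quick way to see what the server actually sent is to check for the magic number and decompress; `maybe_gunzip` is a hypothetical helper. In this article the eventual fix lay elsewhere, but decompressing the body at least shows what you are dealing with:

```python
import gzip

GZIP_MAGIC = b"\x1f\x8b"


def maybe_gunzip(body):
    """Decompress body if it starts with the gzip magic number, else return it unchanged."""
    if body[:2] == GZIP_MAGIC:
        return gzip.decompress(body)
    return body


compressed = gzip.compress(b"<html><title>demo</title></html>")
print(compressed[:4])            # b'\x1f\x8b\x08\x00'
print(maybe_gunzip(compressed))  # b'<html><title>demo</title></html>'
```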

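7. Eighty-one tribulations:

- encode and decode;
- UTF-8 and GBK;
- bytes and str;
- urlencode and unquote;
- dumps and loads;
- request and requests;
- even `accept-encoding: gzip, deflate`;
- ······
- and so on and so forth.

The result? Astonishingly, none of them was it!!! Aaaargh~ ~ ~ ~

Oh well, the bug still has to be fixed _(:3」∠❀)_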

8. A flash of inspiration! Could it be the cookie???

```python
# Return a Request object
def getRequest(url):
    return ur.Request(
        url=url,
        headers={
            'User-Agent': user_agent.get_user_agent_pc(),
            # Cookie copied from the browser's developer tools; the values belong to one session and will expire
            'Cookie': 'acw_tc=2760825115771713670314014ebaddd9cff4024b9ed3255873ddb28d85e269; acw_sc__v2=5e01b9a7ea60f5bf87d21658f23db9678e320a82; uuid_tt_dd=10_19029403540-1577171367226-613100; dc_session_id=10_1577171367226.599226; dc_tos=q3097q; Hm_lvt_6bcd52f51e9b3dce32bec4a3997715ac=1577171368; Hm_lpvt_6bcd52f51e9b3dce32bec4a3997715ac=1577171368; Hm_ct_6bcd52f51e9b3dce32bec4a3997715ac=6525*1*10_19029403540-1577171367226-613100; c-login-auto=1; firstDie=1; announcement=%257B%2522isLogin%2522%253Afalse%252C%2522announcementUrl%2522%253A%2522https%253A%252F%252Fblog.csdn.net%252Fblogdevteam%252Farticle%252Fdetails%252F103603408%2522%252C%2522announcementCount%2522%253A0%252C%2522announcementExpire%2522%253A3600000%257D',
        }
    )
```

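Run it again:

Success!!

Only then did it dawn on me that I had been heading in the wrong direction all along: the culprit was Alibaba's anti-scraping mechanism. I hate you. ╭(╯^╰)╮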
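Pasting a cookie into the source has two drawbacks: it expires, and if it carries login fields (the full code below includes `UserToken`, for example) sharing the script shares your session. One option is to read it from an environment variable at run time. In this sketch the variable name `CSDN_COOKIE` and the placeholder User-Agent are assumptions:

```python
import os
import urllib.request as ur


def getRequest(url, user_agent_string="Mozilla/5.0"):
    """Build a Request; attach a Cookie header only if CSDN_COOKIE is set."""
    headers = {'User-Agent': user_agent_string}
    cookie = os.environ.get("CSDN_COOKIE", "").strip()  # hypothetical variable name
    if cookie:
        headers['Cookie'] = cookie
    return ur.Request(url=url, headers=headers)


# Demo only: normally you would run `export CSDN_COOKIE='...'` in the shell instead
os.environ["CSDN_COOKIE"] = "acw_tc=example; dc_session_id=example"
req = getRequest("https://blog.csdn.net/")
print(req.get_header('Cookie'))  # acw_tc=example; dc_session_id=example
```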

9. Save each page to a local file:

```python
with open('blog/%s.html' % title, 'wb') as f:
    f.write(response_blog)
```
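This assumes a `blog` folder already exists next to the script and uses the title as-is for the filename. Titles often contain characters such as `/`, `?` or `:` that file names cannot hold (a `/` is even read as a subdirectory), so `open` can fail even when the download succeeded. A sketch that creates the folder and replaces the problem characters; `safe_filename`, `save_page` and the replacement rule are assumptions, not part of the original code:

```python
import os
import re
import tempfile


def safe_filename(title, max_len=100):
    """Replace characters that are invalid in Windows/Unix file names with '_'."""
    name = re.sub(r'[\\/:*?"<>|\r\n\t]', "_", title).strip()
    return (name or "untitled")[:max_len]


def save_page(folder, title, body):
    """Write body (bytes) to folder/<safe title>.html and return the path."""
    os.makedirs(folder, exist_ok=True)  # create the folder on first use
    path = os.path.join(folder, safe_filename(title) + ".html")
    with open(path, "wb") as f:
        f.write(body)
    return path


print(safe_filename("Python: what/why?"))  # Python_ what_why_
# Demo: save into a temporary directory rather than ./blog
saved = save_page(os.path.join(tempfile.mkdtemp(), "blog"), "Python: what/why?", b"<html></html>")
print(saved)
```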

10. Add exception handling with try-except:

```python
try:
    ······
    ······
except Exception as e:
    print(e)
```
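Printing an exception loses it once the console scrolls away. A sketch that also appends each failure, together with the URL that caused it, to a text file for later analysis; the helper `log_error` and the default file name `errors.txt` are assumptions:

```python
import datetime
import os
import tempfile


def log_error(url, exc, path="errors.txt"):
    """Append one line per failure: timestamp, URL, exception type and message."""
    stamp = datetime.datetime.now().isoformat(timespec="seconds")
    with open(path, "a", encoding="utf-8") as f:
        f.write("%s\t%s\t%s: %s\n" % (stamp, url, type(exc).__name__, exc))


# Demo: log to a temporary file instead of ./errors.txt
demo_path = os.path.join(tempfile.mkdtemp(), "errors.txt")
try:
    title = [][0]  # stand-in for a scraping step that fails
except Exception as e:
    print(e)  # list index out of range
    log_error("https://blog.csdn.net/example", e, demo_path)
```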

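That's all for this post. If you're interested, you can keep polishing the code yourself: for example, write the exceptions to a TXT file for later analysis, or filter the scraped results to make the data more targeted, and so on.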
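As for filtering, one simple approach (an assumption, not something the article implements) is to keep only posts whose title contains all of a set of words, ignoring case, before saving them; `title_matches` is a hypothetical helper:

```python
def title_matches(title, required_words):
    """True if every word in required_words appears in title (case-insensitive)."""
    lowered = title.lower()
    return all(word.lower() in lowered for word in required_words)


titles = ["Python crawler basics", "Java streams", "Scraping CSDN with python and lxml"]
print([t for t in titles if title_matches(t, ["python"])])
# ['Python crawler basics', 'Scraping CSDN with python and lxml']
```

In the inner loop, `if not title_matches(title, [keyword]): continue` placed before the `with open(...)` line would skip off-topic posts.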

Full code:

```python
import os
import urllib.parse
import urllib.request as ur

import lxml.etree as le

import user_agent  # helper module supplying a desktop-browser User-Agent via get_user_agent_pc()

keyword = input("Enter a keyword: ")
pn_start = int(input("Start page: "))
pn_end = int(input("End page: "))


# Return a Request object
def getRequest(url):
    return ur.Request(
        url=url,
        headers={
            'User-Agent': user_agent.get_user_agent_pc(),
            # Cookie copied from the browser; session-specific (note the login fields) and will expire
            'Cookie': 'uuid_tt_dd=10_7175678810-1573897791171-515870; dc_session_id=10_1573897791171.631189; __gads=Test; UserName=WoLykos; UserInfo=b90874fc47d447b8a78866db1bde5770; UserToken=b90874fc47d447b8a78866db1bde5770; UserNick=WoLykos; AU=A57; UN=WoLykos; BT=1575250270592; p_uid=U000000; Hm_ct_6bcd52f51e9b3dce32bec4a3997715ac=6525*1*10_7175678810-1573897791171-515870!5744*1*WoLykos; Hm_lvt_e5ef47b9f471504959267fd614d579cd=1575356971; Hm_ct_e5ef47b9f471504959267fd614d579cd=5744*1*WoLykos!6525*1*10_7175678810-1573897791171-515870; __yadk_uid=qo27C9PZzNLSwM0hXjha0zVMAtGzJ4sX; Hm_lvt_70e69f006e81d6a5cf9fa5725096dd7a=1575425024; Hm_ct_70e69f006e81d6a5cf9fa5725096dd7a=5744*1*WoLykos!6525*1*10_7175678810-1573897791171-515870; acw_tc=2760824315766522959534770e8d26ee9946cc510917f981c1d79aec141232; UM_distinctid=16f1e154f3e6fc-06a15022d8e2cb-7711a3e-e1000-16f1e154f3f80c; searchHistoryArray=%255B%2522python%2522%252C%2522Python%2522%255D; firstDie=1; Hm_lvt_6bcd52f51e9b3dce32bec4a3997715ac=1577156990,1577157028,1577167164,1577184133; announcement=%257B%2522isLogin%2522%253Atrue%252C%2522announcementUrl%2522%253A%2522https%253A%252F%252Fblog.csdn.net%252Fblogdevteam%252Farticle%252Fdetails%252F103603408%2522%252C%2522announcementCount%2522%253A0%252C%2522announcementExpire%2522%253A3600000%257D; TY_SESSION_ID=6121abc2-f0d2-404d-973b-ebf71a77c098; acw_sc__v2=5e01ee7889eda6ecf4690eab3dfd334e8301d2f6; acw_sc__v3=5e01ee7ca3cb12d33dcb15a19cdc2fe3d7735b49; dc_tos=q30jqt; Hm_lpvt_6bcd52f51e9b3dce32bec4a3997715ac=1577185014',
        }
    )


# Make sure the output folder exists before writing files into it
os.makedirs('blog', exist_ok=True)

# Note the +1: range() excludes its end value
for pn in range(pn_start, pn_end + 1):
    # quote() percent-encodes the keyword so non-ASCII or special characters are safe in the URL
    url = "https://so.csdn.net/so/search/s.do?p=%s&q=%s&t=blog&viparticle=&domain=&o=&s=&u=&l=&f=&rbg=0" % (pn, urllib.parse.quote(keyword))
    # Build the Request object
    request = getRequest(url)
    try:
        # Open the Request object
        response = ur.urlopen(request).read()
        # response is bytes, which le.HTML can parse directly into an element tree
        href_s = le.HTML(response).xpath("//dd[@class='author-time']/span[@class='link']/a/@href")
        # print(href_s)
        for href in href_s:
            try:
                print(href)
                response_blog = ur.urlopen(getRequest(href)).read()
                # print(response_blog)
                title = le.HTML(response_blog).xpath("//h1[@class='title-article']/text()")[0]
                print(title)
                with open('blog/%s.html' % title, 'wb') as f:
                    f.write(response_blog)
            except Exception as e:
                print(e)
    except Exception as e:
        # Report a failed results page instead of silently skipping it
        print(e)
```

Full code (with proxy IPs):

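```python
import os
import urllib.parse
import urllib.request as ur

import lxml.etree as le

import user_agent  # helper module supplying a desktop-browser User-Agent via get_user_agent_pc()

keyword = input('Enter a keyword: ')
pn_start = int(input('Start page: '))
pn_end = int(input('End page: '))


def getRequest(url):
    return ur.Request(
        url=url,
        headers={
            'User-Agent': user_agent.get_user_agent_pc(),
        }
    )


def getProxyOpener():
    # Fetch a fresh proxy address from the data5u dynamic proxy API
    proxy_address = ur.urlopen('http://api.ip.data5u.com/dynamic/get.html?order=d314e5e5e19b0dfd19762f98308114ba&sep=4').read().decode('utf-8').strip()
    proxy_handler = ur.ProxyHandler(
        {
            'http': proxy_address,
            # The CSDN URLs are https://, so the proxy must be registered for https as well;
            # with only 'http', these requests would go out directly, bypassing the proxy
            'https': proxy_address,
        }
    )
    return ur.build_opener(proxy_handler)


# Make sure the output folder exists before writing files into it
os.makedirs('blog', exist_ok=True)

for pn in range(pn_start, pn_end + 1):
    request = getRequest(
        'https://so.csdn.net/so/search/s.do?p=%s&q=%s&t=blog&domain=&o=&s=&u=&l=&f=&rbg=0' % (pn, urllib.parse.quote(keyword))
    )
    try:
        response = getProxyOpener().open(request).read()
        href_s = le.HTML(response).xpath('//span[@class="down fr"]/../span[@class="link"]/a/@href')
        for href in href_s:
            try:
                response_blog = getProxyOpener().open(
                    getRequest(href)
                ).read()
                title = le.HTML(response_blog).xpath('//h1[@class="title-article"]/text()')[0]
                print(title)
                with open('blog/%s.html' % title, 'wb') as f:
                    f.write(response_blog)
            except Exception as e:
                print(e)
    except Exception as e:
        # Report a failed results page instead of silently skipping it
        print(e)
```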

For my beloved girl~~