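| Class | |
| ---- | ---- |
| Experiment requirements | |
| Experiment objective | Master the implementation of the k-nearest-neighbor tree algorithm |
| Student ID | 3180701331 |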

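I. Experiment Objectives

1. Understand the principles of the naive Bayes algorithm and master its overall framework.

2. Become familiar with the common Gaussian, multinomial, and Bernoulli models.

3. Choose an appropriate probability model for the data type at hand when implementing naive Bayes.

4. Apply naive Bayes to practical problems for specific application scenarios and data.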
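For reference, the framework named in objective 1 can be summarized by the standard naive Bayes decision rule. The notation is introduced here for illustration and does not appear in the original report: $x = (x_1, \dots, x_d)$ is a feature vector with $d$ features and $c$ ranges over the class labels.

```latex
\hat{y} \;=\; \arg\max_{c} \; P(y = c) \prod_{j=1}^{d} P(x_j \mid y = c)
```

The three models in objective 2 differ only in how $P(x_j \mid y = c)$ is modeled: a Gaussian density for continuous features, a multinomial distribution for count features, and a Bernoulli distribution for binary features.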

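II. Experiment Content

1. Implement the Gaussian naive Bayes algorithm.

2. Become familiar with the naive Bayes algorithms in the sklearn library.

3. Use sklearn's naive Bayes algorithm to predict classes on the iris dataset.

4. Use the self-written naive Bayes implementation to predict classes on the iris dataset.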

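III. Report Requirements

1. Following the experiment content, describe the experimental procedure, the algorithms, and the test results.

2. Write clean code: consistent naming rules and comments.

3. Analyze the complexity of the core algorithm.

4. Consult the literature and discuss the application scenarios of the different naive Bayes variants.

5. Discuss the advantages and disadvantages of the naive Bayes algorithm.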

IV. Experiment Content and Results

In [1]:

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
%matplotlib inline
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from collections import Counter
import math

In [2]:

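# Load the data
def create_data():
    """Return features and labels for the first 100 iris samples."""
    iris = load_iris()
    df = pd.DataFrame(iris.data, columns=iris.feature_names)
    df['label'] = iris.target
    df.columns = [
        'sepal length', 'sepal width', 'petal length', 'petal width', 'label'
    ]
    # The first 100 rows contain only classes 0 (setosa) and 1 (versicolor)
    data = np.array(df.iloc[:100, :])
    # print(data)
    # All columns except the last are features; the last column is the label
    return data[:, :-1], data[:, -1]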

In [3]:

X, y = create_data()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)
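The split above passes no `random_state`, so the train/test membership, and therefore any accuracy reported later, changes from run to run. A minimal sketch of a reproducible, class-balanced split follows. The seed value `0` and the names `X_all`, `y_all`, `X_tr`, `X_te`, `y_tr`, `y_te` are arbitrary choices for this illustration and are not part of the original experiment.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()
# Same subset as create_data(): the first 100 samples (classes 0 and 1)
X_all, y_all = iris.data[:100], iris.target[:100]

X_tr, X_te, y_tr, y_te = train_test_split(
    X_all, y_all,
    test_size=0.3,
    random_state=0,   # arbitrary seed chosen for this sketch
    stratify=y_all,   # keep the 50/50 class balance in both subsets
)
print(X_tr.shape, X_te.shape)
```

Fixing the seed makes the scores comparable between the self-written model and sklearn's model across runs.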

In [4]:

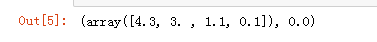

X_test[0], y_test[0]

GaussianNB: Gaussian naive Bayes
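The `NaiveBayes` class in cell In [5] estimates a mean and a standard deviation for every feature within every class and evaluates a Gaussian density with them. Written out, with notation assumed for this note ($N_c$ is the number of training samples in class $c$, and $x_{i,j}$ is feature $j$ of sample $i$), the quantities are:

```latex
\mu_{c,j} = \frac{1}{N_c} \sum_{i:\, y_i = c} x_{i,j},
\qquad
\sigma_{c,j} = \sqrt{\frac{1}{N_c} \sum_{i:\, y_i = c} \left(x_{i,j} - \mu_{c,j}\right)^2}

P(x_j \mid y = c) = \frac{1}{\sqrt{2\pi}\,\sigma_{c,j}}
\exp\!\left(-\frac{(x_j - \mu_{c,j})^2}{2\sigma_{c,j}^2}\right)
```

The standard deviation divides by $N_c$ rather than $N_c - 1$, which matches the `stdev` method in the class.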

In [5]:

class NaiveBayes:
    def __init__(self):
        # Maps each label to a list of (mean, stdev) pairs, one per feature
        self.model = None

    # Mean (expected value)
    @staticmethod
    def mean(X):
        return sum(X) / float(len(X))

    # Population standard deviation (square root of the variance)
    def stdev(self, X):
        avg = self.mean(X)
        return math.sqrt(sum([pow(x - avg, 2) for x in X]) / float(len(X)))

    # Gaussian probability density function
    def gaussian_probability(self, x, mean, stdev):
        exponent = math.exp(-(math.pow(x - mean, 2) /
                              (2 * math.pow(stdev, 2))))
        return (1 / (math.sqrt(2 * math.pi) * stdev)) * exponent

    # Summarize the training data: (mean, stdev) of each feature column
    def summarize(self, train_data):
        summaries = [(self.mean(i), self.stdev(i)) for i in zip(*train_data)]
        return summaries

    # Compute the mean and standard deviation of every feature, per class
    def fit(self, X, y):
        labels = list(set(y))
        data = {label: [] for label in labels}
        for f, label in zip(X, y):
            data[label].append(f)
        self.model = {
            label: self.summarize(value)
            for label, value in data.items()
        }
        return 'gaussianNB train done!'

    # Compute the class-conditional likelihood of input_data for each label.
    # Class priors are not included, so the classes are treated as equally likely.
    def calculate_probabilities(self, input_data):
        # summaries: {0.0: [(5.0, 0.37), (3.42, 0.40)], 1.0: [(5.8, 0.449), (2.7, 0.27)]}
        # input_data: [1.1, 2.2]
        probabilities = {}
        for label, value in self.model.items():
            probabilities[label] = 1
            for i in range(len(value)):
                mean, stdev = value[i]
                probabilities[label] *= self.gaussian_probability(
                    input_data[i], mean, stdev)
        return probabilities

    # Predict the label with the largest likelihood
    def predict(self, X_test):
        # e.g. {0.0: 2.9680340789325763e-27, 1.0: 3.5749783019849535e-26}
        label = sorted(
            self.calculate_probabilities(X_test).items(),
            key=lambda x: x[-1])[-1][0]
        return label

    # Accuracy: fraction of test samples classified correctly
    def score(self, X_test, y_test):
        right = 0
        for X, y in zip(X_test, y_test):
            label = self.predict(X)
            if label == y:
                right += 1
        return right / float(len(X_test))
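The class above multiplies raw densities and does not use class priors. With many features the product can underflow to zero, and with unbalanced classes the missing prior changes the decision. One common variant, sketched here under stated assumptions, sums log densities and adds the log prior. The function names `fit_gaussian_nb` and `predict_gaussian_nb`, the toy data, and the `1e-9` variance floor are all hypothetical choices for this sketch, not part of the original experiment.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Return {label: (log_prior, [(mean, variance), ...])} from training data."""
    rows_by_label = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_label[label].append(row)

    model = {}
    for label, rows in rows_by_label.items():
        stats = []
        for column in zip(*rows):
            mean = sum(column) / len(column)
            # Population variance plus a small floor (an assumption of this
            # sketch) so a constant feature does not cause division by zero.
            var = sum((v - mean) ** 2 for v in column) / len(column) + 1e-9
            stats.append((mean, var))
        log_prior = math.log(len(rows) / len(y))
        model[label] = (log_prior, stats)
    return model


def predict_gaussian_nb(model, x):
    """Return the label with the highest log prior plus log likelihood."""
    scores = {}
    for label, (log_prior, stats) in model.items():
        score = log_prior
        for value, (mean, var) in zip(x, stats):
            # Log of the Gaussian density for one feature
            score += -0.5 * math.log(2 * math.pi * var) - (value - mean) ** 2 / (2 * var)
        scores[label] = score
    return max(scores, key=scores.get)


# Tiny hypothetical dataset: two well-separated classes with two features
X_demo = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [5.0, 6.0], [5.2, 5.8], [4.8, 6.2]]
y_demo = [0, 0, 0, 1, 1, 1]
demo_model = fit_gaussian_nb(X_demo, y_demo)
print(predict_gaussian_nb(demo_model, [1.1, 2.1]))  # point near the class 0 cluster
print(predict_gaussian_nb(demo_model, [5.1, 5.9]))  # point near the class 1 cluster
```

For n training samples, d features, and K classes, fitting either version takes O(n·d) time and predicting one sample takes O(K·d), since every feature is visited once per class.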

In [6]:

model = NaiveBayes()

In [7]:

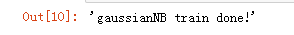

model.fit(X_train, y_train)

In [8]:

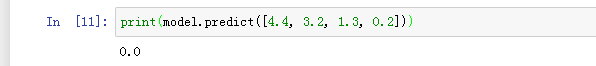

print(model.predict([4.4, 3.2, 1.3, 0.2]))

In [9]:

model.score(X_test, y_test)

In [10]:

from sklearn.naive_bayes import GaussianNB

In [11]:

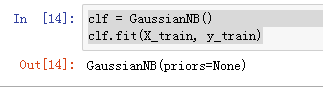

clf = GaussianNB()

clf.fit(X_train, y_train)

In [12]:

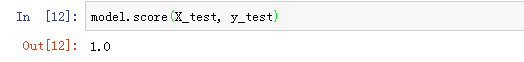

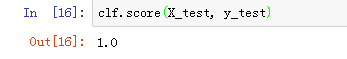

clf.score(X_test, y_test)

In [13]:

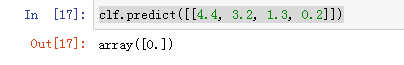

clf.predict([[4.4, 3.2, 1.3, 0.2]])

In [14]:

from sklearn.naive_bayes import BernoulliNB, MultinomialNB  # Bernoulli and multinomial models
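The report imports these two models but does not run them. As a rough illustration of when each applies, the sketch below fits `MultinomialNB` on count features and `BernoulliNB` on binary presence/absence features. The toy arrays `X_counts`, `X_binary`, and `y_toy` are made up for this example and are not experiment data.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, MultinomialNB

# Hypothetical count features (e.g. word counts per document)
X_counts = np.array([[3, 0, 1], [2, 0, 0], [0, 2, 3], [0, 3, 1]])
# Hypothetical binary features (e.g. word present / absent)
X_binary = (X_counts > 0).astype(int)
y_toy = np.array([0, 0, 1, 1])

# Multinomial model: suited to non-negative counts
mnb = MultinomialNB().fit(X_counts, y_toy)
# Bernoulli model: suited to 0/1 features
bnb = BernoulliNB().fit(X_binary, y_toy)

# The first feature occurs only in class 0, so both models should favor class 0
print(mnb.predict(np.array([[2, 0, 0]])))
print(bnb.predict(np.array([[1, 0, 0]])))
```

The iris features are continuous measurements, which is why the experiment itself uses the Gaussian model.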

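V. Experiment Summary

Advantages: the naive Bayes algorithm is logically simple and easy to implement, and its time and space costs are low. Its performance is also stable: classification accuracy varies little across datasets with different characteristics, so the model is fairly robust.

Disadvantages: in theory, when its independence assumption holds, the naive Bayes model has the lowest error rate among classification methods. In practice this is often not the case, because the model assumes that the attributes are mutually independent, and that assumption frequently fails on real data. Classification quality degrades when there are many attributes or when the attributes are strongly correlated; naive Bayes performs best when the correlation between attributes is small.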