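This transfer-learning exercise trains a classifier for 865 fish species, using the WildFish dataset:

Baidu Netdisk link: https://pan.baidu.com/s/1_kHg87LghgWT9_mVawGdYQ

Extraction code: a9pl

Import the required packages: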

import os

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from PIL import Image
from torch.utils.data import Dataset
from torchvision import datasets, models, transforms

Split the dataset into Training and Testing parts:

# Turn the dataset lists into files the loader can read

# The dataset ships with Training and Testing parts split 5:5; re-split them 8:2 here
# Dataset root (assumed here to be E:/data/wildfish/; change it to your own location)
DATA_ROOT = 'E:/data/wildfish/'
tb1 = pd.read_table('./train.txt', sep=' ', names=['path', 'label'])
tb1['path'] = DATA_ROOT + tb1['path']
tb2 = pd.read_table('./val.txt', sep=' ', names=['path', 'label'])
tb2['path'] = DATA_ROOT + tb2['path']

# Merge the two tables into one
tb = pd.concat([tb1, tb2], sort=True).reset_index(drop=True)
tb = tb.loc[:, ['path', 'label']]

# Rows whose index ends in 9 or 0 go to Testing; all other rows go to Training
train_rows = [i for i in range(tb.shape[0]) if (i % 10 != 9 and i % 10 != 0)]
test_rows = [i for i in range(tb.shape[0]) if (i % 10 == 9 or i % 10 == 0)]
train_data = tb.iloc[train_rows]
test_data = tb.iloc[test_rows]

# Save the two lists
train_data.to_csv(r'./train_path.txt', sep=' ', header=False, index=False)
test_data.to_csv(r'./test_path.txt', sep=' ', header=False, index=False)
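As a quick sanity check on the split rule above, the following sketch counts how many row indices land in each part. It reuses only the index arithmetic from the code above; the helper name `split_indices` and the row count `n_rows` are stand-ins chosen for illustration, not the real size of the dataset.

```python
def split_indices(n_rows):
    """Apply the same rule as above: indices ending in 9 or 0 are Testing."""
    train = [i for i in range(n_rows) if i % 10 not in (9, 0)]
    test = [i for i in range(n_rows) if i % 10 in (9, 0)]
    return train, test


n_rows = 1000  # hypothetical row count, used only for this illustration
train_idx, test_idx = split_indices(n_rows)

train_share = len(train_idx) / n_rows
test_share = len(test_idx) / n_rows
print(train_share, test_share)
```

Two of every ten consecutive indices go to Testing, which is where the 8:2 ratio comes from.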

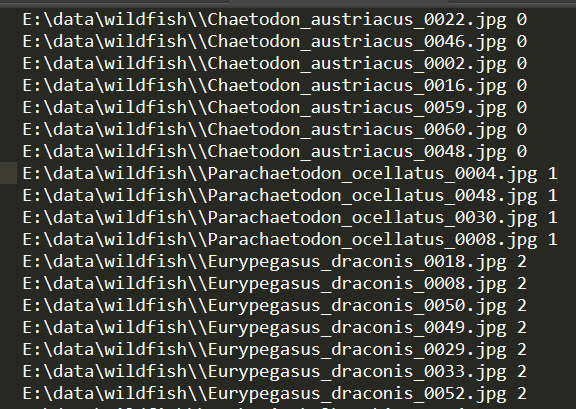

pytorch最后可读取的图片名称(以绝对路径显示)和类别名称如下图所示:

Define some hyperparameters:

# Use the GPU when one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
EPOCH = 10        # number of passes over the dataset
pre_epoch = 0     # number of passes already completed
BATCH_SIZE = 128  # batch size
LR = 0.0001       # learning rate

Preprocess the data

# Prepare the datasets and their preprocessing
transform_train = transforms.Compose([
    transforms.Resize((150, 150)),
    transforms.RandomHorizontalFlip(0.5),  # flip horizontally with probability 0.5
    transforms.RandomVerticalFlip(0.5),    # flip vertically with probability 0.5
    transforms.RandomRotation(30),
    transforms.RandomCrop(128, padding=4),
    # transforms.ColorJitter(brightness=0.5),
    # transforms.ColorJitter(contrast=0),
    transforms.ToTensor(),
    # per-channel (R, G, B) mean and standard deviation used for normalization
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
transform_test = transforms.Compose([
    transforms.Resize((128, 128)),  # resize the image
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
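To make the effect of `Normalize` concrete: `ToTensor` scales pixel values into [0, 1], and `Normalize` then computes (x - mean) / std for each channel. With mean and std both 0.5, this maps [0, 1] onto [-1, 1]. The plain-Python sketch below shows only that arithmetic; the function names `normalize` and `denormalize` are illustrative and are not part of torchvision.

```python
def normalize(x, mean=0.5, std=0.5):
    """Same per-value arithmetic as Normalize: (x - mean) / std."""
    return (x - mean) / std


def denormalize(y, mean=0.5, std=0.5):
    """Inverse mapping, useful before displaying an image."""
    return y * std + mean


for pixel in (0.0, 0.5, 1.0):
    y = normalize(pixel)
    print(pixel, '->', y, '->', denormalize(y))
```

The inverse mapping matters when previewing images, because plotting functions generally expect values in [0, 1] rather than [-1, 1].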

Put the data into a TrainLoader and a TestLoader

class MyDataset(Dataset):
    def __init__(self, txt_path, transform=None, target_transform=None):
        imgs = []
        # Each line holds an image path and an integer label separated by whitespace
        with open(txt_path, 'r', encoding='utf-8') as fh:
            for line in fh:
                words = line.rstrip().split()
                imgs.append((words[0], int(words[1])))
        self.imgs = imgs
        self.transform = transform
        self.target_transform = target_transform

    def __getitem__(self, index):
        fn, label = self.imgs[index]
        img = Image.open(fn).convert('RGB')
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            label = self.target_transform(label)
        return img, label

    def __len__(self):
        return len(self.imgs)

train_datasets = MyDataset(r'./train_path.txt', transform=transform_train)
test_datasets = MyDataset(r'./test_path.txt', transform=transform_test)

# I am on Windows 10, where I could only set num_workers to 0;
# on other systems this value can be raised to speed up training
trainloader = torch.utils.data.DataLoader(train_datasets, batch_size=BATCH_SIZE, shuffle=True, num_workers=0)
# The test set does not need shuffling
testloader = torch.utils.data.DataLoader(test_datasets, batch_size=BATCH_SIZE, shuffle=False, num_workers=0)
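`MyDataset` splits each line on whitespace, which assumes that image paths contain no spaces. If paths might contain spaces, one possible variant is to split once from the right, so that only the trailing label is separated. This is a sketch under that assumption: the helper name `parse_line` is hypothetical and the sample lines are made up.

```python
def parse_line(line):
    """Split 'path label' into (path, int(label)), allowing spaces in the path."""
    path, label = line.rstrip().rsplit(maxsplit=1)
    return path, int(label)


# Made-up sample lines; the second path contains a space
samples = ["images/a.jpg 3", "my images/b.jpg 12\n"]
parsed = [parse_line(s) for s in samples]
print(parsed)
```

If the list file was written by `to_csv` with a space separator, pandas would typically wrap such paths in quotes, so the quotes would also need stripping; this sketch assumes an unquoted file.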

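Code for previewing images; skipping it does not affect the training that follows

# Preview a few images
cnt = 0
for image, label in trainloader:
    if cnt >= 3:  # show only 3 images
        break
    print(label)  # print the labels of this batch
    img = image[0]  # plt.imshow() expects a 3-D array, so take the first image to drop the batch dimension
    img = img.numpy()  # FloatTensor to ndarray
    img = np.transpose(img, (1, 2, 0))  # move the channel dimension to the end
    img = img * 0.5 + 0.5  # undo Normalize so the values are back in [0, 1]
    # Show the image
    plt.imshow(img)
    plt.show()
    cnt += 1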

Use the pretrained VGG16 model

class VGGNet(nn.Module):
    def __init__(self, num_classes=685):  # num_classes must equal the number of classes in the label files
        super(VGGNet, self).__init__()
        net = models.vgg16(pretrained=True)  # load VGG16 with pretrained weights
        net.classifier = nn.Sequential()     # empty the original classifier; a new one is defined below
        self.features = net                  # keep the VGG16 feature extractor
        self.classifier = nn.Sequential(     # custom classification head
            # 512 * 7 * 7 is fixed by VGG16; the second argument (hidden width) can be tuned
            nn.Linear(512 * 7 * 7, 1024),
            nn.ReLU(True),
            nn.Dropout(0.3),
            nn.Linear(1024, 1024),
            nn.ReLU(True),
            nn.Dropout(0.3),
            nn.Linear(1024, num_classes),
        )

    def forward(self, x):
        x = self.features(x)       # pretrained feature extraction
        x = x.view(x.size(0), -1)
        x = self.classifier(x)     # custom classification head
        return x

net = VGGNet().to(device)
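The `512 * 7 * 7` input width of the first linear layer follows from torchvision's VGG16: its convolutional stack ends with 512 channels, and an adaptive average pool then resizes the feature map to 7 x 7 whatever the input size. The sketch below works through that arithmetic for the 128 x 128 inputs used here. It is plain arithmetic rather than a call into torchvision, and the helper name `vgg16_spatial_size` is illustrative.

```python
def vgg16_spatial_size(input_size, num_pools=5):
    """Spatial size after VGG16's five 2x2 max-pool layers.

    The 3x3 convolutions use padding 1 and keep the size unchanged,
    so only the pooling layers shrink the feature map.
    """
    size = input_size
    for _ in range(num_pools):
        size //= 2
    return size


conv_out = vgg16_spatial_size(128)     # feature map side before adaptive pooling
pooled = 7                             # side after the adaptive average pool to 7x7
flat_features = 512 * pooled * pooled  # width seen by the first nn.Linear
print(conv_out, flat_features)
```

With the standard 224 x 224 ImageNet input the convolutional stack already yields 7 x 7, so the adaptive pooling leaves it unchanged; with 128 x 128 it brings a 4 x 4 map up to 7 x 7.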

Use the pretrained ResNet18 model

class ResNet(nn.Module):
    def __init__(self, num_classes=685):  # num_classes must equal the number of classes in the label files
        super(ResNet, self).__init__()
        net = models.resnet18(pretrained=True)  # load ResNet18 with pretrained weights
        # ResNet18's own head is `fc` (it has no `classifier` attribute) and is kept here,
        # so the backbone below outputs the 1000 ImageNet scores
        self.features = net
        self.classifier = nn.Sequential(  # custom classification head
            # the input width 1000 is fixed by the backbone output; the second argument can be tuned
            nn.Linear(1000, 1000),
            nn.ReLU(True),
            nn.Dropout(0.5),
            # nn.Linear(1024, 1024),
            # nn.ReLU(True),
            # nn.Dropout(0.3),
            nn.Linear(1000, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x

net = ResNet().to(device)

Use the pretrained MobileNet V2 model

class MobileNet(nn.Module):
    def __init__(self, num_classes=685):  # num_classes must equal the number of classes in the label files
        super(MobileNet, self).__init__()
        net = models.mobilenet_v2(pretrained=True)  # load MobileNet V2 with pretrained weights
        net.classifier = nn.Sequential()            # empty the original classifier; a new one is defined below
        self.features = net                         # keep the MobileNet V2 feature extractor
        self.classifier = nn.Sequential(            # custom classification head
            # the input width 1280 is fixed by MobileNet V2; the second argument can be tuned
            nn.Linear(1280, 1000),
            nn.ReLU(True),
            nn.Dropout(0.5),
            # nn.Linear(1024, 1024),
            # nn.ReLU(True),
            # nn.Dropout(0.3),
            nn.Linear(1000, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x

net = MobileNet().to(device)

Choose the optimizer and the loss

optimizer = optim.Adam(net.parameters(), lr=LR)
criterion = nn.CrossEntropyLoss()
criterion.to(device=device)

Define two functions: one freezes the features layers so that only the FC layers are trained, and the other unfreezes the features layers so that all parameters are trained

from collections.abc import Iterable

def set_freeze_by_names(model, layer_names, freeze=True):
    # A bare string is itself iterable, so wrap it explicitly in a list
    if isinstance(layer_names, str) or not isinstance(layer_names, Iterable):
        layer_names = [layer_names]
    for name, child in model.named_children():
        if name not in layer_names:
            continue
        for param in child.parameters():
            param.requires_grad = not freeze

def freeze_by_names(model, layer_names):
    set_freeze_by_names(model, layer_names, True)

def unfreeze_by_names(model, layer_names):
    set_freeze_by_names(model, layer_names, False)

# Freeze the features layers
freeze_by_names(net, ('features',))

# Unfreeze the features layers
unfreeze_by_names(net, ('features',))
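One detail about passing layer names: in Python, `('features')` without a trailing comma is a plain string rather than a tuple, and `in` applied to a string is a substring test rather than an element test. The short sketch below illustrates the difference; the variable names are only for this illustration.

```python
single = ('features')      # parentheses alone do not make a tuple
as_tuple = ('features',)   # the trailing comma makes a one-element tuple

print(type(single).__name__, type(as_tuple).__name__)

# On a string, `in` checks for a substring; on a tuple, it checks for an element
substring_hit = 'feat' in single
element_hit = 'feat' in as_tuple
print(substring_hit, element_hit)
```

With child names such as `features` and `classifier` both forms happen to select the same layer, but a name that is a substring of another would be matched unintentionally when a bare string is passed.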

Define two lists to store the predicted y values and the true y values

y_predict = []
y_true = []

# Without this import and setting I got an error
from PIL import ImageFile
ImageFile.LOAD_TRUNCATED_IMAGES = True

Training loop

# Training
print("Start Training!")
for epoch in range(pre_epoch, EPOCH):  # number of passes over the dataset
    print('Epoch: %d' % (epoch + 1))
    net.train()
    sum_loss = 0.0
    correct = 0.0
    total = 0.0
    length = len(trainloader)
    for i, data in enumerate(trainloader, 0):
        # Prepare the data
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()

        # forward + backward
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # Print the running loss and accuracy after every batch
        sum_loss += loss.item()
        # Top-5 accuracy: a sample counts as correct if its label is among the 5 highest scores
        maxk = 5
        label_resize = labels.view(-1, 1)
        _, predicted = outputs.topk(maxk, 1, True, True)
        total += labels.size(0)
        correct += torch.eq(predicted, label_resize).cpu().sum().float().item()
        print('[epoch:%d, iter:%d] Loss: %.03f | Acc: %.3f%% '
              % (epoch + 1, (i + 1 + epoch * length), sum_loss / (i + 1), 100. * correct / total))

    # Measure the accuracy on the test set after every epoch
    print("Waiting Test!")
    net.eval()
    with torch.no_grad():
        correct = 0
        total = 0
        for data in testloader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = net(images)
            # Take the 5 highest-scoring classes (top-5)
            maxk = 5
            label_resize = labels.view(-1, 1)
            _, predicted = outputs.topk(maxk, 1, True, True)
            total += labels.size(0)
            correct += torch.eq(predicted, label_resize).cpu().sum().float().item()
            y_predict.append(predicted)
            y_true.append(labels)
        print('Test classification accuracy: %.3f%%' % (100 * correct / total))
        acc = 100. * correct / total
print("Training Finished, TotalEPOCH=%d" % EPOCH)
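The loop above counts a sample as correct when its true label is among the 5 highest-scoring classes, so the accuracy it prints is top-5 accuracy, which is more lenient than top-1. The plain-Python sketch below shows the same counting rule on made-up scores. The function name `topk_correct` and the data are hypothetical, and `k` is kept small so the example stays readable.

```python
def topk_correct(scores, labels, k=5):
    """Count samples whose true label is among the k highest-scoring classes."""
    correct = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            correct += 1
    return correct


# Made-up scores for 2 samples over 6 classes
scores = [
    [0.1, 0.5, 0.2, 0.9, 0.3, 0.0],  # ranking: 3, 1, 4, 2, 0, 5
    [0.7, 0.1, 0.6, 0.2, 0.3, 0.4],  # ranking: 0, 2, 5, 4, 3, 1
]
labels = [4, 0]

top1 = topk_correct(scores, labels, k=1)
top3 = topk_correct(scores, labels, k=3)
print(top1, top3)
```

In this toy data the first sample is wrong under top-1 but right under top-3, which is why a top-k figure is always at least as high as the top-1 figure on the same predictions.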

Save the model

torch.save(net, './model/mobileNet freeze.pth')

Load the model

net = torch.load('./model/VGG16-2 freeze.pth')

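Training procedure

I first trained for 10 epochs with the feature layers frozen, then unfroze them and trained for another 20 epochs. Each model reached roughly 98% accuracy on Training and roughly 88% on Testing (top-5 accuracy, as computed by the training code).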
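The freeze-then-unfreeze schedule can be sketched on a toy model. Everything in the block below is an assumption made for illustration: `TinyNet` is a hypothetical stand-in with the same two child names (`features` and `classifier`) as the models above, the data is random, and the epoch counts are far smaller than the 10 and 20 used in the actual runs. It is meant only to show that frozen weights stay fixed in the first phase and start moving in the second.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TinyNet(nn.Module):
    """Hypothetical stand-in with the same child names as the models above."""

    def __init__(self):
        super().__init__()
        self.features = nn.Linear(8, 4)
        self.classifier = nn.Linear(4, 3)

    def forward(self, x):
        return self.classifier(torch.relu(self.features(x)))


def set_requires_grad(module, flag):
    for p in module.parameters():
        p.requires_grad = flag


def run_epochs(model, x, y, epochs):
    # Build the optimizer over the currently trainable parameters only
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.Adam(params, lr=1e-3)
    criterion = nn.CrossEntropyLoss()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()


model = TinyNet()
x = torch.randn(16, 8)           # random stand-in inputs
y = torch.randint(0, 3, (16,))   # random stand-in labels

before = model.features.weight.detach().clone()

# Phase 1: features frozen, only the classifier is trained
set_requires_grad(model.features, False)
run_epochs(model, x, y, epochs=2)
frozen_unchanged = torch.equal(before, model.features.weight)

# Phase 2: features unfrozen, all parameters are trained
set_requires_grad(model.features, True)
run_epochs(model, x, y, epochs=2)
changed_after_unfreeze = not torch.equal(before, model.features.weight)

print(frozen_unchanged, changed_after_unfreeze)
```

This sketch rebuilds the optimizer at the start of each phase so that it holds exactly the trainable parameters; that is one possible design, not necessarily the one used for the reported results.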