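* SuperSpider: site-building data (Jianshu)

* Purpose of this project:

* Provide data for practicing web development;

* The main data includes:

* Jianshu's hot collection modules, the hot articles under each module, article details, author information, comment details, commenter information, and so on.

* Everything ends up stored in a MySQL database.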

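If you want to learn web scraping, this may be worth a look too.

A full run of the project took nearly ten hours, which gives a sense of how much data there is. It is more than enough as seed data for personal web development practice (~ ̄▽ ̄)~

The code comments are fairly detailed, so I'll go straight to the code.

Main code: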

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

import org.json.JSONArray;
import org.json.JSONObject;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

// Article, ArticleDao, User, UserDao, Comment, CommentDao, IdUtils and SpiderUtils
// are project classes, assumed here to live in the same package.

/**
 * Parses and crawls a Jianshu article page in detail.
 * The only public entry point is {@link ArticleSpider#run(String, int)}.
 *
 * author As_
 * date 2018-08-21 12:34:23
 * github https://github.com/apknet
 */
public class ArticleSpider {

    /** Four entries: view count, comment count, like count, and the id used to request the article's comments */
    private List<Integer> readComLikeList = new ArrayList<>();

    /** Article id */
    private long articleId;

    /**
     * Entry point of this class.
     * Crawls the article details and the comment details,
     * and persists the data as it goes.
     * Example key: 443219930c5b
     *
     * @param key       last segment of the article URL
     * @param flag_type index of the category the article belongs to
     */
    public void run(String key, int flag_type) {
        try {
            articleSpider(key, flag_type);

            // The id below is collected by articleSpider()
            String comUrl = String.format("https://www.jianshu.com/notes/%d/comments?comment_id=&author_only=false&since_id=0&max_id=1586510606000&order_by=desc&page=1", readComLikeList.get(3));

            comSpider(comUrl);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * Example link: https://www.jianshu.com/p/443219930c5b
     * so only the last segment is accepted as the key.
     *
     * @param key       last segment of the article URL
     * @param flag_type index of the category the article belongs to
     * @throws Exception
     */
    private void articleSpider(String key, int flag_type) throws Exception {
        String url = "https://www.jianshu.com/p/" + key;
        Document document = getDocument(url);
        IdUtils idUtils = new IdUtils();
        List<Integer> list = getReadComLikeList(document);

        articleId = idUtils.genArticleId();
        String title = getTitle(document);
        String time = getTime(document);
        String imgCover = getImgCover(document);
        String brief = getBrief(document);
        String content = getContent(document);
        String author = idUtils.genUserId();
        int type = flag_type;
        int readNum = list.get(0);
        int comNum = list.get(1);
        int likeNum = list.get(2);

        // Add the corresponding objects to the database
        Article article = new Article(articleId, title, time, imgCover, brief, content, author, type, readNum, comNum, likeNum);
        new ArticleDao().addArticle(article);

        User user = new User();
        // Reuse the id stored on the article so both rows refer to the same user
        user.setUser(author);
        user.setName(getAuthorName(document));
        user.setImgAvatar(getAuthorAvatar(document));
        new UserDao().addUser(user);
    }

    private Document getDocument(String url) throws IOException {
        return Jsoup.connect(url)
                .header("User-Agent", "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:61.0) Gecko/20100101 Firefox/61.0")
                .header("Content-Type", "application/json; charset=utf-8")
                .header("Cookie", "_m7e_session=2e930226548924f569cb27ba833346db;locale=zh-CN;default_font=font2;read_mode=day")
                .get();
    }

    private String getTitle(Document doc) {
        return doc.select("h1[class=title]").text();
    }

    private String getTime(Document doc) {
        Element element = doc.select("span[class=publish-time]").first();
        return element.text();
    }

    private String getImgCover(Document doc) {
        Element element = doc.select("img[data-original-format=image/jpeg]").first();
        // An article without a JPEG image simply has no cover
        if (element != null && element.hasAttr("data-original-src")) {
            String url = element.attr("data-original-src").trim();
            return SpiderUtils.handleUrl(url);
        }
        return null;
    }

    private String getBrief(Document doc) {
        // Elements.attr() returns "" when nothing matches, so no null check is needed
        return doc.select("meta[name=description]").attr("content");
    }

    private String getContent(Document doc) {
        Element element = doc.select("div[class=show-content-free]").first();
        // System.out.println(element.html());

        // Replace each image wrapper with the bare <img> it contains
        Elements eles = element.select("div[class=image-package]");
        for (Element ele : eles) {
            Element imgEle = ele.select("img").first();
            if (imgEle != null) {
                ele.replaceWith(imgEle);
            }
        }

        // Turn data-original-src and similar attributes into plain src, etc.
        return element.html().replaceAll("data-original-", "");
    }

    private String getAuthorAvatar(Document doc) {
        String url = doc.select("div[class=author]").select("img").attr("src");
        return SpiderUtils.handleUrl(url);
    }

    private String getAuthorName(Document doc) {
        Element element = doc.select("script[data-name=page-data]").first();
        JSONObject jsonObject = new JSONObject(element.html()).getJSONObject("note").getJSONObject("author");
        return jsonObject.getString("nickname");
    }

    private List<Integer> getReadComLikeList(Document doc) {
        Element element = doc.select("script[data-name=page-data]").first();
        JSONObject jsonObject = new JSONObject(element.html()).getJSONObject("note");

        readComLikeList.add(jsonObject.getInt("views_count"));
        readComLikeList.add(jsonObject.getInt("comments_count"));
        readComLikeList.add(jsonObject.getInt("likes_count"));
        readComLikeList.add(jsonObject.getInt("id"));

        System.out.println(readComLikeList);
        return readComLikeList;
    }

    /**
     * Comment spider. Covers:
     * comment floor, time, content, commenter, replies to the comment, and so on.
     * See {@link Comment} for details.
     *
     * @param url
     * @throws Exception
     */
    private void comSpider(String url) throws Exception {
        URLConnection connection = new URL(url).openConnection();

        // The Accept header is required; without it the request fails with 406
        connection.setRequestProperty("Accept", "*/*");

        StringBuilder str = new StringBuilder();
        // try-with-resources closes the reader even if reading fails
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(connection.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                str.append(line);
            }
        }

        System.out.println(str.toString());

        JSONObject jb = new JSONObject(str.toString());

        boolean hasComment = jb.getBoolean("comment_exist");
        if (hasComment) {
            int pages = jb.getInt("total_pages");
            if (pages > 20) {
                // Some hot articles have thousands of comments; no need to crawl them all
                pages = 20;
            }
            int curPage = jb.getInt("page");

            JSONArray jsonArray = jb.getJSONArray("comments");
            for (int n = 0; n < jsonArray.length(); n++) {
                JSONObject comment = jsonArray.getJSONObject(n);
                JSONArray comChildren = comment.getJSONArray("children");
                JSONObject userObj = comment.getJSONObject("user");
                IdUtils idUtils = new IdUtils();
                CommentDao commentDao = new CommentDao();
                UserDao userDao = new UserDao();

                int commentId = idUtils.genCommentId();
                String userId = idUtils.genUserId();

                System.out.println(comment.toString());
                // Comment content
                String content = comment.getString("compiled_content");
                String time = comment.getString("created_at");
                int floor = comment.getInt("floor");
                int likeNum = comment.getInt("likes_count");
                int parentId = 0;

                // Commenter information
                String name = userObj.getString("nickname");
                String avatar = userObj.getString("avatar");

                Comment newCom = new Comment(commentId, articleId, content, time, floor, likeNum, parentId, userId, avatar, name);
                commentDao.addComment(newCom);

                User user = new User();
                user.setUser(userId);
                user.setName(name);
                user.setImgAvatar(avatar);

                userDao.addUser(user);

                // Crawl the replies to this comment
                for (int m = 0; m < comChildren.length(); m++) {
                    JSONObject child = comChildren.getJSONObject(m);

                    Comment childCom = new Comment();
                    childCom.setId(idUtils.genCommentId());
                    childCom.setArticleId(articleId);
                    childCom.setComment(child.getString("compiled_content"));
                    childCom.setTime(child.getString("created_at"));
                    childCom.setParentId(child.getInt("parent_id"));
                    childCom.setFloor(floor);
                    childCom.setNameAuthor(child.getJSONObject("user").getString("nickname"));

                    commentDao.addComment(childCom);
                }
            }

            // Automatic paging: only the first page fans out to the remaining pages
            if (curPage == 1) {
                for (int i = 2; i <= pages; i++) {
                    System.out.println("page-------------------> " + i);
                    int index = url.indexOf("page=");
                    String sub_url = url.substring(0, index + 5);
                    String nextPage = sub_url + i;
                    comSpider(nextPage);
                }
            }
        }
    }
}
```
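The paging step at the end of `comSpider` can also be looked at on its own. Below is a minimal sketch of that idea, assuming (as in the URL built by `run`) that `page=` is the last query parameter. The class `CommentPageUrls`, its method names, and the note id `123` in the example URL are hypothetical and not part of the original project; the cap of 20 pages is taken from the code above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper, not part of the original project. */
class CommentPageUrls {

    /** Same cap as comSpider: hot articles can have thousands of comments. */
    static final int MAX_PAGES = 20;

    /** Replaces the value of the trailing "page=" parameter with the given page. */
    static String withPage(String url, int page) {
        int index = url.lastIndexOf("page=");
        if (index < 0) {
            throw new IllegalArgumentException("URL has no page parameter: " + url);
        }
        // Assumes "page=" is the last parameter, so everything after it is dropped
        return url.substring(0, index + "page=".length()) + page;
    }

    /** Builds the URLs for pages 2..min(totalPages, MAX_PAGES). */
    static List<String> remainingPages(String firstPageUrl, int totalPages) {
        List<String> urls = new ArrayList<>();
        int last = Math.min(totalPages, MAX_PAGES);
        for (int page = 2; page <= last; page++) {
            urls.add(withPage(firstPageUrl, page));
        }
        return urls;
    }

    public static void main(String[] args) {
        // Made-up note id, for illustration only
        String first = "https://www.jianshu.com/notes/123/comments?order_by=desc&page=1";
        for (String url : remainingPages(first, 3)) {
            System.out.println(url);
        }
    }
}
```

Building the list of page URLs up front would let the caller loop over them instead of having `comSpider` call itself, which is arguably easier to follow, though the recursive version works the same way for this page count.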

And the module spider:

```java
import java.io.IOException;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

// ArticleSpider, Module, ModuleDao and SpiderUtils are project classes,
// assumed here to live in the same package.

/**
 * Module spider (a module is a Jianshu collection).
 * Entry: https://www.jianshu.com/recommendations/collections
 * Example module: https://www.jianshu.com/c/V2CqjW
 * Example article: https://www.jianshu.com/p/443219930c5b
 * So this spider often passes around just the key at the end of a link
 * (such as 'V2CqjW' or '443219930c5b').
 *
 * @author As_
 * @date 2018-08-21 12:31:35
 * @github https://github.com/apknet
 *
 */
public class ModuleSpider {

    public void run() {
        try {
            List<String> moduleList = getModuleList("https://www.jianshu.com/recommendations/collections");

            for (int i = 0; i < moduleList.size(); i++) {
                String key = moduleList.get(i);
                int moduleIndex = i + 1;

                // Crawl 10 pages of articles for each module
                System.out.println(moduleIndex + "." + key);
                for (int page = 1; page < 11; page++) {
                    String moduleUrl = String.format("https://www.jianshu.com/c/%s?order_by=top&page=%d", key, page);

                    List<String> articleList = getArticleList(moduleUrl);

                    for (String articlekey : articleList) {
                        new ArticleSpider().run(articlekey, moduleIndex);
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * Returns the module keys.
     *
     * @param url
     * @return
     * @throws IOException
     * @throws SQLException
     */
    private List<String> getModuleList(String url) throws IOException, SQLException {
        List<String> moduleList = new ArrayList<>();
        int i = 0;

        Document document = Jsoup.connect(url).get();
        Element container = document.selectFirst("div[id=list-container]");
        Elements modules = container.children();
        for (Element ele : modules) {
            String relUrl = ele.select("a[target=_blank]").attr("href");
            String moduleKey = relUrl.substring(relUrl.indexOf("/c/") + 3);
            moduleList.add(moduleKey);

            // System.out.println(moduleKey);
            // The data crawled below is used to create a Module object and persist it

            String name = ele.select("h4[class=name]").text();
            String brief = ele.selectFirst("p[class=collection-description]").text();
            String imgcover = SpiderUtils.handleUrl(ele.selectFirst("img[class=avatar-collection]").attr("src"));

            String articleNu = ele.select("a[target=_blank]").get(1).text();
            int articleNum = Integer.parseInt(articleNu.substring(0, articleNu.indexOf("篇")));

            String userNu = ele.select("div[class=count]").text().replace(articleNu, "");
            // The figure is treated as thousands (hence * 1000); fall back to 0 if no number is found
            Matcher matcher = Pattern.compile("(\\d+(?:\\.\\d+)?)").matcher(userNu);
            int userNum = matcher.find() ? (int) (Float.parseFloat(matcher.group(1)) * 1000) : 0;

            Module module = new Module((++i), name, brief, imgcover, userNum, articleNum, "apknet");
            new ModuleDao().addModule(module);

            System.out.println(name + "------------------>");
        }
        return moduleList;
    }

    /**
     * Example article link: https://www.jianshu.com/p/443219930c5b
     * so each String in the returned List is the key at the end of the URL.
     *
     * @param url
     * @return
     */
    private List<String> getArticleList(String url) {
        System.out.println("getArticleList: --------------------->");

        List<String> keyList = new ArrayList<>();

        Document document;
        try {
            document = Jsoup.connect(url).get();
        } catch (Exception e) {
            e.printStackTrace();
            // Without a page there is nothing to parse
            return keyList;
        }

        Element ulElement = document.select("ul[class=note-list]").first();
        if (ulElement == null) {
            return keyList;
        }

        Elements elements = ulElement.select("li[id]");
        for (Element ele : elements) {
            String relUrl = ele.select("a[class=title]").attr("href");
            String key = relUrl.substring(relUrl.indexOf("/p/") + 3);
            keyList.add(key);
            System.out.println(key);
        }

        return keyList;
    }
}
```
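`getModuleList` pulls the follower count out of the count text with a regular expression and scales it by 1000. As a rough illustration of that parsing step in isolation, here is a small sketch. It assumes the text contains a number that may or may not have a decimal part and may or may not carry a `K` suffix; the exact wording on the page is not shown in this post, so the sample strings are made up. The class `FollowerCount` is hypothetical and not part of the original project.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper, not part of the original project. */
class FollowerCount {

    // A number with an optional decimal part, optionally followed directly by K or k
    private static final Pattern COUNT = Pattern.compile("(\\d+(?:\\.\\d+)?)([Kk])?");

    /** Returns the parsed count, or -1 if the text contains no number. */
    static int parse(String text) {
        Matcher matcher = COUNT.matcher(text);
        if (!matcher.find()) {
            return -1;
        }
        double value = Double.parseDouble(matcher.group(1));
        if (matcher.group(2) != null) {
            // Only scale when the suffix is actually present
            value *= 1000;
        }
        // Round instead of truncating, so floating-point noise cannot drop a unit
        return (int) Math.round(value);
    }

    public static void main(String[] args) {
        // Made-up sample strings, for illustration only
        System.out.println(parse("123.4K followers"));
        System.out.println(parse("56 followers"));
    }
}
```

Returning -1 for text without a number is just one possible convention; the point is that the caller gets a value it can check rather than an exception from a missing regex group.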

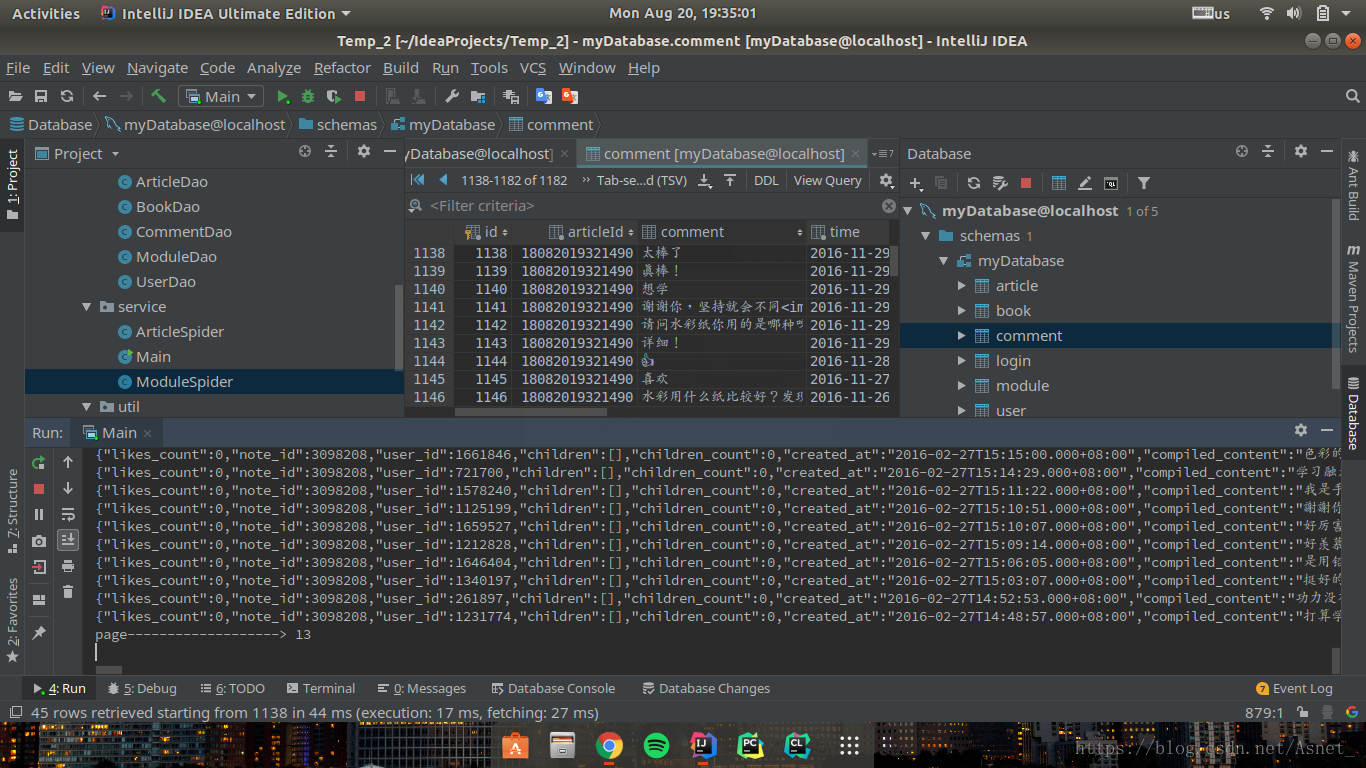

最后附上实验截图:

完整项目地址: