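1. Extract all news items from one news list page, wrapped in a function.

2. Get the total number of news articles and compute the total number of list pages.

3. Fetch the details of every news item on every list page.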
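Steps 2 and 3 reduce to a ceiling division and a list of page URLs. Below is a minimal sketch. It assumes 10 articles per list page, which is the figure the scraper's `getPageN` also uses. It also assumes that page 1 is the section index and pages 2 onward are named `<i>.html`. `parse_count`, `total_pages`, and `list_page_urls` are hypothetical helper names.

```python
import re
from typing import List


def parse_count(label: str) -> int:
    """Turn a counter label such as '995条' into the integer 995."""
    match = re.search(r"\d+", label)
    if match is None:
        raise ValueError(f"no number in {label!r}")
    return int(match.group())


def total_pages(article_count: int, per_page: int = 10) -> int:
    """Pages needed to show article_count items, per_page at a time (ceiling division)."""
    if article_count <= 0:
        return 0
    return (article_count + per_page - 1) // per_page


def list_page_urls(base: str, pages: int) -> List[str]:
    """Page 1 is the index itself; pages 2..pages are assumed to be '<i>.html'."""
    return [base] + ["{}{}.html".format(base, i) for i in range(2, pages + 1)]


base = "http://news.gzcc.cn/html/xiaoyuanxinwen/"
pages = total_pages(parse_count("995条"))
print(pages)                        # 100
print(list_page_urls(base, 3)[-1])  # http://news.gzcc.cn/html/xiaoyuanxinwen/3.html
```

The ceiling form matters when the count is an exact multiple of the page size. For 1000 articles, `1000 // 10 + 1` gives 101 pages, one of them empty, whereas `total_pages(1000)` gives 100.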

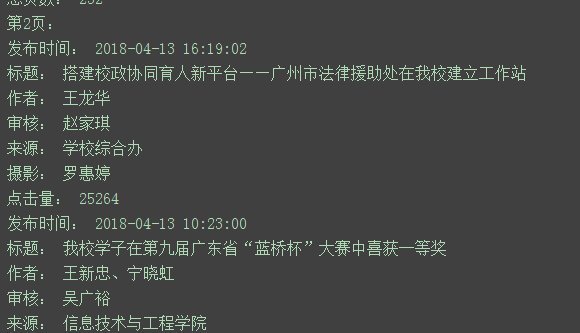

```python
# -*- coding: UTF-8 -*-
import re
from datetime import datetime

import requests
from bs4 import BeautifulSoup

url = "http://news.gzcc.cn/html/xiaoyuanxinwen/"


def getClickCount(newsUrl):
    # Article id: the digits right before ".html" in the detail-page URL
    newId = re.search(r'(\d+)\.html', newsUrl).group(1)
    clickUrl = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newId)
    # The API answers with a JavaScript snippet; the count is in the last .html('...') call
    return int(requests.get(clickUrl).text.split('.html')[-1].lstrip("('").rstrip("');"))


def getField(info, label):
    # Token written right after `label` (e.g. '作者:'), or 'none' if the label is missing.
    # Slicing off the label is safer than lstrip(), which strips a set of characters.
    pos = info.find(label)
    if pos == -1:
        return 'none'
    return info[pos:].split()[0][len(label):]


def getNewDetail(newsUrl):
    resd = requests.get(newsUrl)
    resd.encoding = 'utf-8'
    soupd = BeautifulSoup(resd.text, 'html.parser')
    title = soupd.select('.show-title')[0].text
    info = soupd.select('.show-info')[0].text
    # The info line starts with '发布时间:YYYY-MM-DD HH:MM:SS' (24 characters)
    t = info[0:24].replace('发布时间:', '')
    dt = datetime.strptime(t, '%Y-%m-%d %H:%M:%S')
    au = getField(info, '作者:')
    review = getField(info, '审核:')
    source = getField(info, '来源:')
    pf = getField(info, '摄影:')
    content = soupd.select('#content')[0].text.strip()  # article body (not printed)
    click = getClickCount(newsUrl)
    print("Published:", dt)
    print("Title:", title)
    print("Author:", au)
    print("Reviewer:", review)
    print("Source:", source)
    print("Photographer:", pf)
    print("Clicks:", click)


def getListPage(ListPageUrl):
    res = requests.get(ListPageUrl)
    res.encoding = 'utf-8'
    soupl = BeautifulSoup(res.text, 'html.parser')
    # Walk the <li> items of the page just fetched; news entries carry a .news-list-title
    for news in soupl.select('li'):
        if len(news.select('.news-list-title')) > 0:
            a = news.select('a')[0].attrs['href']
            getNewDetail(a)


def getPageN():
    # '.a1' holds the article total, e.g. '995条'; each list page shows 10 articles
    resn = requests.get(url)
    resn.encoding = 'utf-8'
    soupn = BeautifulSoup(resn.text, 'html.parser')
    count = int(soupn.select('.a1')[0].text.rstrip('条'))
    return (count + 9) // 10  # ceiling division


firstPageUrl = "http://news.gzcc.cn/html/xiaoyuanxinwen/"
print('Page 1:')
getListPage(firstPageUrl)

# Print the total number of pages, then crawl pages 2..n
n = getPageN()
print('Total pages:', n)
for i in range(2, n + 1):
    pageUrl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
    print('Page {}:'.format(i))
    getListPage(pageUrl)
```
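The detail-page parsing above depends on fixed string positions and on how the click-count response is shaped. Below is a more defensive sketch that rests on several assumptions:

- Each value in the info line is written directly after its label, which is what the original slicing expects.
- Detail URLs end in `<digits>.html`.
- The counter API returns JavaScript whose last `.html('<n>')` call holds the hit count. This shape is inferred from the `split('.html')` parsing in `getClickCount`, not from any API documentation.

All helper names and sample strings are hypothetical.

```python
import re
from datetime import datetime


def extract_field(info: str, label: str, default: str = "none") -> str:
    """Value written directly after `label` (e.g. '作者:') up to the next whitespace."""
    pos = info.find(label)
    if pos == -1:
        return default
    rest = info[pos + len(label):]
    if not rest or rest[0].isspace():
        return default  # label present but nothing directly after it
    return rest.split()[0]


def parse_pubtime(info: str) -> datetime:
    """Find the first 'YYYY-MM-DD HH:MM:SS' stamp in the info line."""
    match = re.search(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}", info)
    if match is None:
        raise ValueError("no timestamp found")
    return datetime.strptime(match.group(), "%Y-%m-%d %H:%M:%S")


def news_id(news_url: str) -> str:
    """Digits immediately before '.html', e.g. '.../xiaoyuanxinwen_0401/9183.html' -> '9183'."""
    match = re.search(r"(\d+)\.html$", news_url)
    if match is None:
        raise ValueError(f"no article id in {news_url!r}")
    return match.group(1)


def parse_hits(js_text: str) -> int:
    """Number inside the last .html('<n>') call of the counter script."""
    values = re.findall(r"\.html\('(\d+)'\)", js_text)
    if not values:
        raise ValueError("no counter value found")
    return int(values[-1])


info = "发布时间:2018-04-01 11:57:00 作者:作者甲 审核:李四 来源:宣传部"
print(extract_field(info, "作者:"))   # 作者甲
print("作者:作者甲".lstrip("作者:"))   # 甲  (lstrip removes a character set, not a prefix)
print(parse_pubtime(info))             # 2018-04-01 11:57:00
```

The second `print` shows why `lstrip()` is risky for labels. Any author name that begins with one of the label's characters loses those characters. If the real pages use a full-width colon (`：`), pass that form as `label`.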

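4. Pick a topic you are interested in, crawl data about it, and run a word-segmentation analysis on the text. The topic must not duplicate another student's.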
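Once the text has been segmented (the script for this task uses `jieba.lcut`), the analysis step is a frequency count over the tokens. Below is a minimal sketch using `collections.Counter`. It takes an already-segmented token list so that it runs without jieba. The stop-word set and the sample tokens are illustrative assumptions, not the author's lists.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Illustrative stop words only; a real analysis would load a fuller list from a file.
STOPWORDS: Set[str] = {"的", "了", "是", "在", "都", "说", "还", "将", "为"}


def top_keywords(tokens: Iterable[str], k: int = 5,
                 stopwords: Set[str] = STOPWORDS) -> List[Tuple[str, int]]:
    """k most frequent tokens, skipping stop words and tokens with no letters or digits."""
    counts = Counter(
        tok for tok in tokens
        if tok not in stopwords and any(ch.isalnum() for ch in tok)
    )
    return counts.most_common(k)


# Tokens as jieba.lcut() might return them for a short entertainment-news sentence
tokens = ["电影", "的", "导演", "，", "电影", "票房", "\u3000", "电影", "导演"]
print(top_keywords(tokens, 2))   # [('电影', 3), ('导演', 2)]
```

The `isalnum()` test drops punctuation and full-width spaces without listing each one. `most_common` keeps ties in first-seen order and returns fewer pairs when the text has fewer than `k` distinct words, so it cannot raise an `IndexError`.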

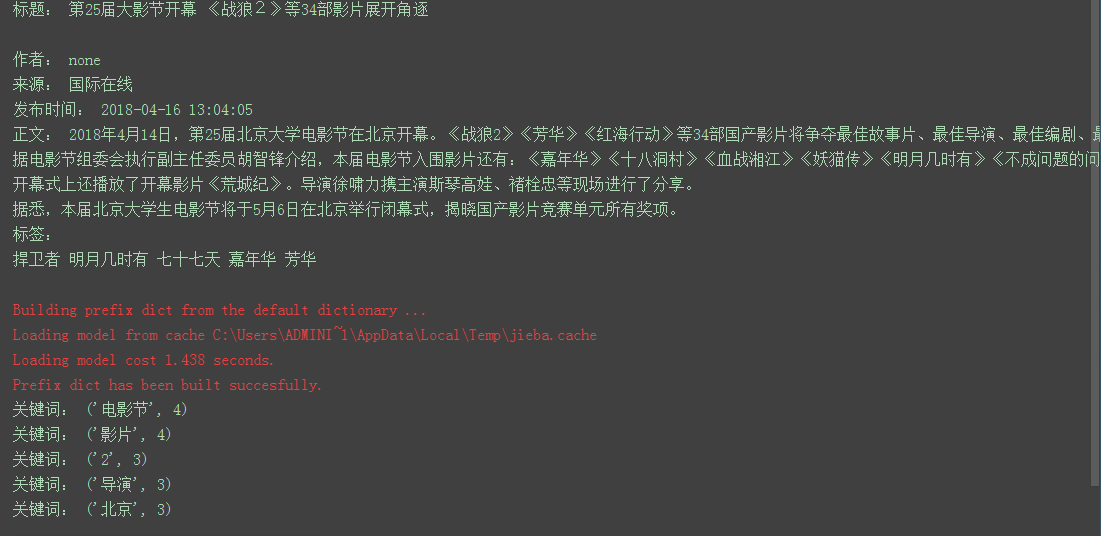

```python
# -*- coding: UTF-8 -*-
from collections import Counter
from datetime import datetime
from urllib.parse import urljoin

import jieba
import requests
from bs4 import BeautifulSoup


def stripLabel(text, label):
    # Remove an exact leading label such as '来源:' (lstrip() would strip a character set)
    text = text.strip()
    return text[len(label):].strip() if text.startswith(label) else text


def getKeyWords(text):
    # Delete punctuation before segmentation
    for s in '''一!“”,。?、;’"',.、 : ''':
        text = text.replace(s, '')
    newsList = jieba.lcut(text)
    # Stop words and leftover symbols that should not count as keywords
    exclude = {',', '。', '?', '“', '”', ' ', '\u3000', ':', '这', '走', '最佳', '好',
               '《', '》', '为', '将', '都', '说', '了', '的', '是', '还', '在',
               '发布', '什么', '因为'}
    counts = Counter(w for w in newsList if w not in exclude)
    # most_common() returns fewer items instead of failing when the text is short
    for word, freq in counts.most_common(5):
        print('Keyword:', (word, freq))


def getNewDetail(newsUrl):
    resd = requests.get(newsUrl)
    resd.encoding = 'utf-8'
    soupd = BeautifulSoup(resd.text, 'html.parser')
    title = soupd.select('h1')[0].text
    info = soupd.select('.xinf-le')[0].text
    dt = datetime.strptime(soupd.select('#pubtime')[0].text.strip(), '%Y-%m-%d %H:%M:%S')
    source = stripLabel(soupd.select('#source')[0].text, '来源:')
    biaoqian = stripLabel(soupd.select('.fenx-bq')[0].text, '标签:')  # article tags
    if '作者:' in info:
        au = info[info.find('作者:'):].split()[0][len('作者:'):]
    else:
        au = 'none'
    content = soupd.select('#Content')[0].text.strip()
    print("Title:", title)
    print("Author:", au)
    print("Source:", source)
    print("Published:", dt)
    print("Body:", content)
    print("Tags:", biaoqian)
    getKeyWords(content)


# Crawl every headline in the '.yaowen-xinwen' blocks of the entertainment front page
ListPageUrl = "http://ent.chinadaily.com.cn/"
res = requests.get(ListPageUrl)
res.encoding = 'gbk'
soupn = BeautifulSoup(res.text, 'html.parser')
for news in soupn.select('.yaowen-xinwen'):
    # Resolve the href against the front page; works for relative and absolute links
    a = urljoin(ListPageUrl, news.a.attrs['href'])
    getNewDetail(a)
```
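This crawler decodes the front page as `gbk` but every article page as `utf-8`. If the site actually serves one encoding throughout, one of those hard-coded settings will produce mojibake.

Below is a stdlib-only sketch of a fallback decoder. It assumes the raw bytes come from `res.content`. `decode_html` and its candidate order are hypothetical choices. UTF-8 is tried first because it rejects most non-UTF-8 byte sequences, so a clean decode is reasonable evidence that the page really is UTF-8. GBK is the fallback.

```python
from typing import Sequence, Tuple


def decode_html(raw: bytes, candidates: Sequence[str] = ("utf-8", "gbk")) -> Tuple[str, str]:
    """Decode raw page bytes with the first candidate encoding that succeeds.

    Returns (text, encoding_used); raises ValueError if no candidate fits.
    """
    for enc in candidates:
        try:
            return raw.decode(enc), enc
        except UnicodeDecodeError:
            continue  # try the next candidate
    raise ValueError("none of {} could decode the page".format(list(candidates)))


sample = "中文".encode("gbk")
print(decode_html(sample))   # ('中文', 'gbk')
```

Inside the crawler this would read `text, enc = decode_html(res.content)`. `requests` also provides `Response.apparent_encoding`, a detector-based guess, as an alternative to a fixed candidate list.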