Official guide: https://tensorflow.google.cn/tfx/guide/serving

Step 1: Save the model in pb (SavedModel) format

    # Give every model input an explicit name
    # NER demo: https://github.com/buppt/ChineseNER
    self.input_x = tf.placeholder(tf.int32, shape=[None, None], name='input_x')
    self.input_y = tf.placeholder(tf.int32, shape=[None, None], name='input_y')
    self.seq_length = tf.placeholder(tf.int32, shape=[None], name='sequence_length')
    self.keep_pro = tf.placeholder(tf.float32, name='drop_out')
    self.global_step = tf.Variable(0, trainable=False, name='global_step')

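    # Add a signature when saving the model (TF 1.x API)
    import os
    import shutil
    import tensorflow as tf

    def save_model(self, sess, input, seq_length, keep_pro, logit, transition_params):
        # The trailing numeric directory ("1") is the model version
        model_output_path = 'output/model/1/'
        # SavedModelBuilder refuses to write into an existing directory, so remove it first
        if os.path.exists(model_output_path):
            shutil.rmtree(model_output_path)
        # Simpler alternative that exports only a single input:
        # tf.saved_model.simple_save(sess,
        #                            model_output_path,
        #                            inputs={"input": input},
        #                            outputs={"logit": logit,
        #                                     "transition_params": transition_params})
        builder = tf.saved_model.builder.SavedModelBuilder(model_output_path)
        # Every placeholder needed at inference time must appear in the signature inputs
        signature = tf.saved_model.predict_signature_def(
            inputs={"input": input,
                    "sequence_length": seq_length,
                    "drop_out": keep_pro},
            outputs={"logit": logit,
                     "transition_params": transition_params})
        builder.add_meta_graph_and_variables(sess=sess,
                                             tags=['serve'],
                                             signature_def_map={'predict': signature})
        builder.save()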
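The save path above ends in the numeric directory `1`, which TensorFlow Serving treats as the model version. Before mounting the parent directory into the container, it can help to confirm that the layout is what the server expects. The following is a minimal sketch using only the standard library; the helper name `find_servable_versions` is hypothetical, and it only checks for the binary `saved_model.pb` file, not the `variables/` directory.

```python
import os


def find_servable_versions(model_base_path):
    """Return the sorted numeric version directories that contain a saved_model.pb."""
    versions = []
    for entry in os.listdir(model_base_path):
        version_dir = os.path.join(model_base_path, entry)
        # Only sub-directories with an integer name count as model versions.
        if not (entry.isdigit() and os.path.isdir(version_dir)):
            continue
        # This sketch only looks for the binary SavedModel file.
        if os.path.isfile(os.path.join(version_dir, "saved_model.pb")):
            versions.append(int(entry))
    return sorted(versions)
```

For the export in Step 1, calling `find_servable_versions('output/model')` should list version 1 once `builder.save()` has finished. An empty list suggests the export did not complete or the wrong directory is being checked.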

Step 2: Run the model

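- Pull the tensorflow/serving Docker image and start a container

      # Download the TensorFlow Serving Docker image
      docker pull tensorflow/serving
      # Start the TensorFlow Serving container and open the gRPC (8500) and REST API (8501) ports
      # -p  port mapping, host port:container port
      # -v  volume mapping, local path:target path inside the container
      # -e  environment variable; MODEL_NAME must match the directory name in the volume target (/models/ner)
      docker run -p 8501:8501 -p 8500:8500 -v /D/04_project/tf_tools/tf_serving/ner:/models/ner -e MODEL_NAME=ner -t tensorflow/serving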

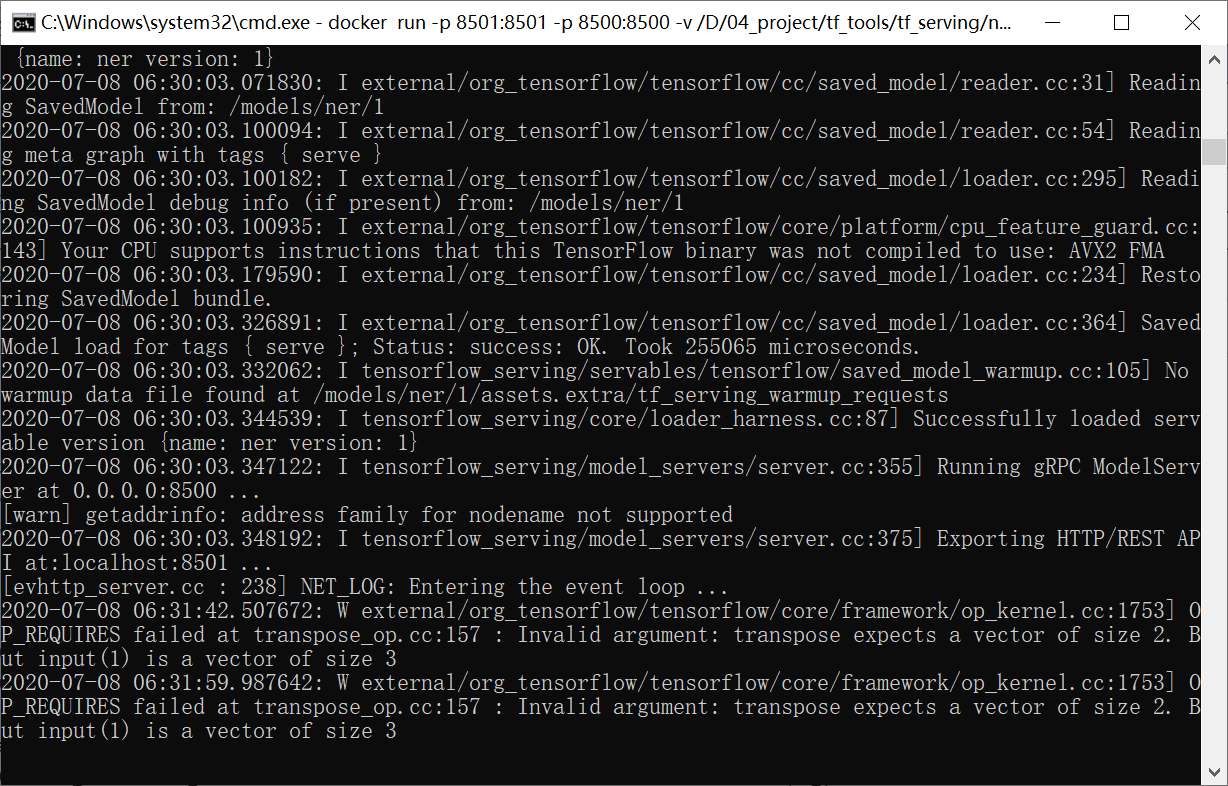

On success, the container prints output like the following:

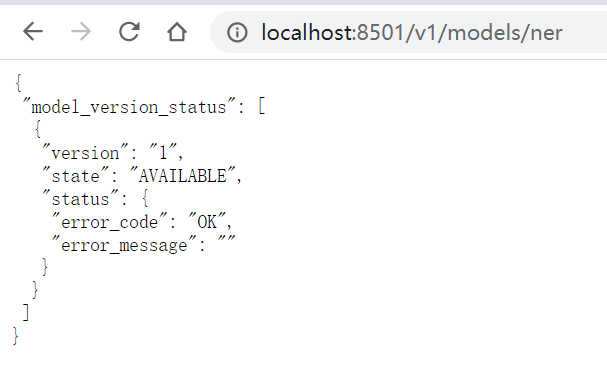

Check the status and metadata of the model served by the TF Serving container:

http://localhost:8501/v1/models/ner

http://localhost:8501/v1/models/ner/metadata

The result looks like this:

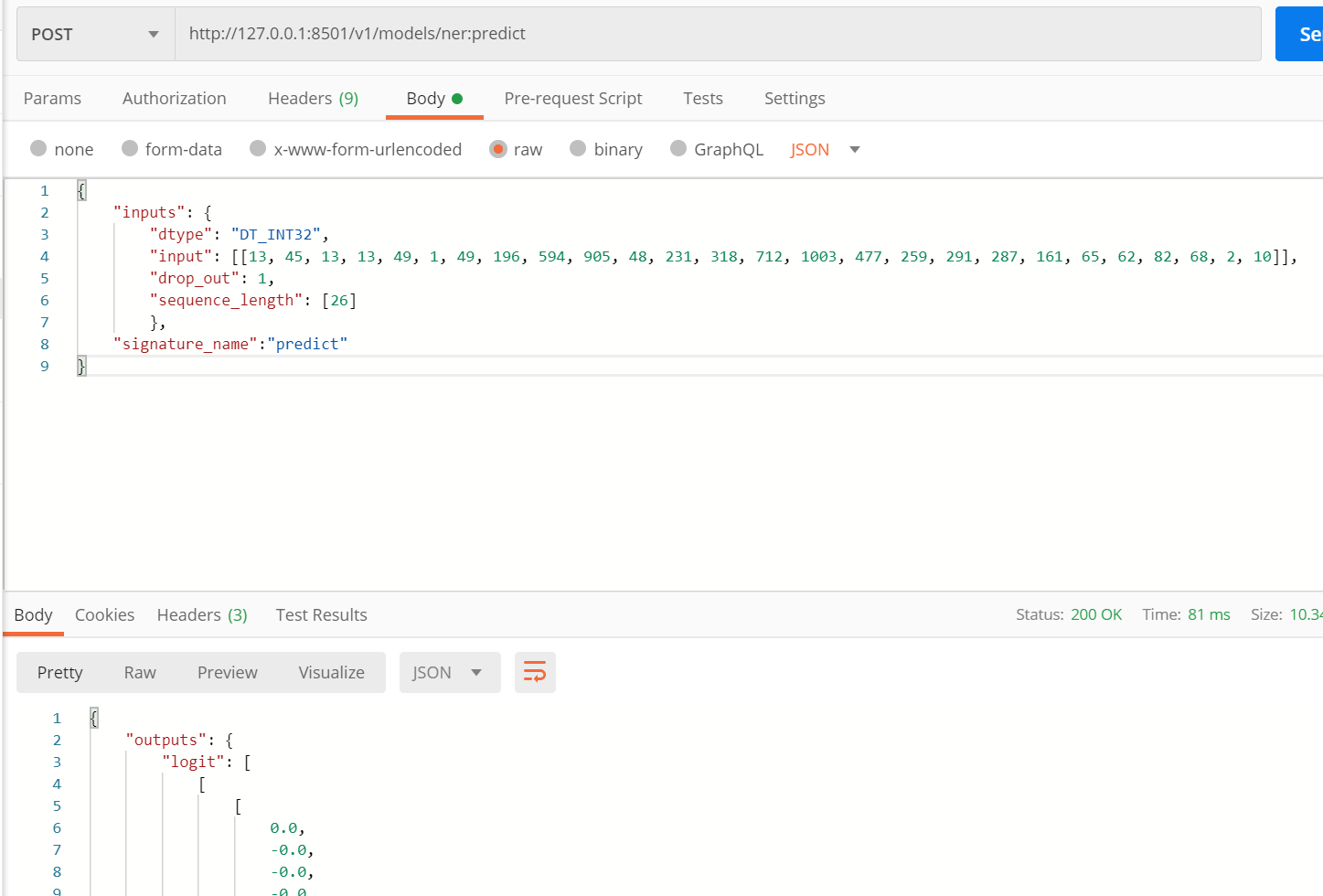
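The same two endpoints can also be queried from a script, and the metadata response can be used to confirm that the exported signature exposes every input the model needs. The sketch below uses only the standard library. The helper names are hypothetical, and the nesting `metadata -> signature_def -> signature_def -> <key> -> inputs` is an assumption about the metadata response layout that should be checked against the actual output shown above.

```python
import json
import urllib.request

# Input keys taken from the signature defined in Step 1.
EXPECTED_INPUTS = ("input", "sequence_length", "drop_out")


def model_status_url(model_name, host="localhost", port=8501):
    """Build the REST status URL for a served model."""
    return "http://{}:{}/v1/models/{}".format(host, port, model_name)


def model_metadata_url(model_name, host="localhost", port=8501):
    """Build the REST metadata URL for a served model."""
    return model_status_url(model_name, host, port) + "/metadata"


def fetch_json(url, timeout=5.0):
    """GET a URL and decode the JSON body (requires a running container)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def missing_signature_inputs(metadata, signature_key="predict",
                             expected=EXPECTED_INPUTS):
    """Return the expected input keys that the served signature does not expose."""
    # Assumed layout of the metadata response; adjust if the real output differs.
    signature_defs = (metadata.get("metadata", {})
                              .get("signature_def", {})
                              .get("signature_def", {}))
    inputs = signature_defs.get(signature_key, {}).get("inputs", {})
    return [name for name in expected if name not in inputs]
```

With the container running, `missing_signature_inputs(fetch_json(model_metadata_url("ner")))` should return an empty list if all three inputs were added to the signature. In the status response, a version reported in the `AVAILABLE` state indicates that the model loaded.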

The model can then be called through either gRPC (port 8500) or the REST API (port 8501).
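As an illustration of the REST route, here is a minimal sketch of a predict request built with the standard library. It uses the columnar `inputs` format, because the three inputs have different shapes, and sets `signature_name` to `predict`, because the signature in Step 1 was registered under that key rather than the default `serving_default`. Several details are assumptions: the token ids are made up, padding with id 0 is a guess at the vocabulary convention, and sending the keep probability as a bare scalar `1.0` (no dropout at inference) is inferred from the placeholder name `keep_pro`.

```python
import json
import urllib.request

PREDICT_URL = "http://localhost:8501/v1/models/ner:predict"


def build_predict_payload(batch_token_ids, pad_id=0, keep_prob=1.0):
    """Pad a batch of token-id lists and wrap it in a columnar predict request."""
    if not batch_token_ids:
        raise ValueError("batch_token_ids must contain at least one sequence")
    lengths = [len(ids) for ids in batch_token_ids]
    max_len = max(lengths)
    # Right-pad every sequence to the longest one in the batch.
    padded = [list(ids) + [pad_id] * (max_len - len(ids))
              for ids in batch_token_ids]
    return {
        "signature_name": "predict",
        "inputs": {
            "input": padded,
            "sequence_length": lengths,
            "drop_out": keep_prob,
        },
    }


def post_predict(payload, url=PREDICT_URL, timeout=10.0):
    """POST the payload to TF Serving and decode the JSON reply."""
    body = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `post_predict(build_predict_payload([[3, 5, 7], [2]]))` should return a JSON object whose `outputs` field holds `logit` and `transition_params`. Turning those into tag sequences still requires CRF decoding on the client side.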

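Notes:

- Every input the graph needs at inference time must be added to the signature; otherwise the server reports a missing tensor.

For example, if the sequence_length input is left out, the error is: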

"error": "You must feed a value for placeholder tensor 'sequence_length' with dtype int32 and shape [?] [[{{node sequence_length}}]]"