DataX needs Python 2.x and JDK 1.8 to run.

Here I build on top of a Python 2.7 image.

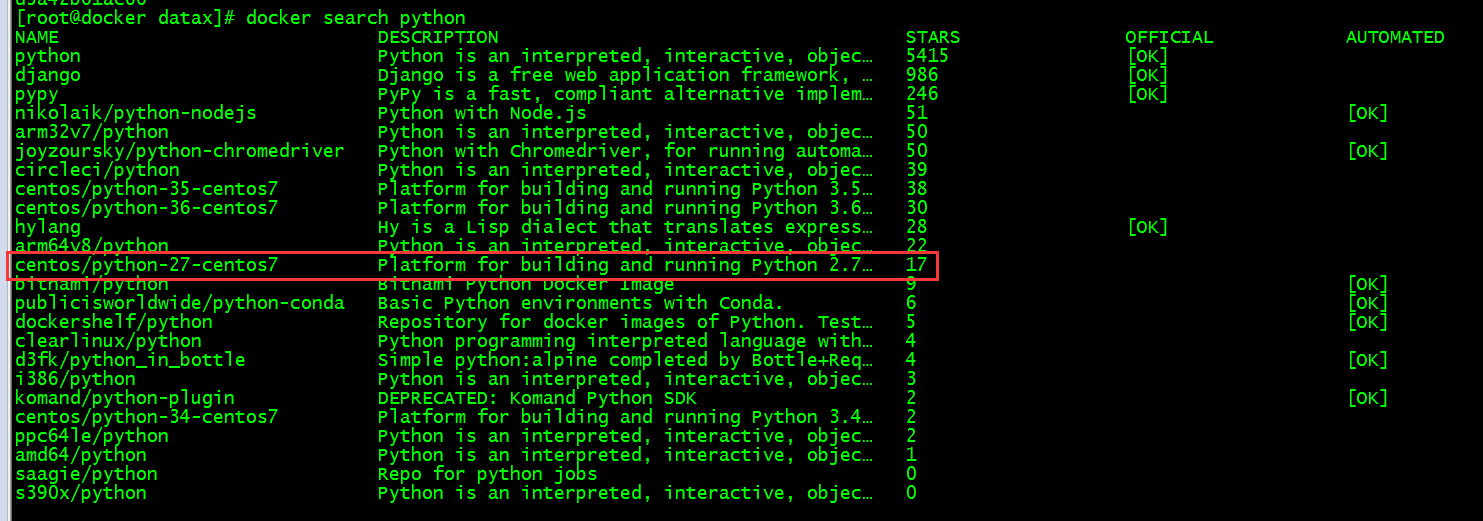

Pull the Python 2.7 image to the local virtual machine:

docker pull centos/python-27-centos7

Upload the JDK 1.8 tarball and the DataX installation package to the local server.
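Before building, it can help to confirm that both archives are actually in the build directory, because `ADD` only sees files inside the build context. The sketch below is a minimal check; the function name `missing_archives` is hypothetical, and the two file names are the ones used in the Dockerfile in this post.

```shell
# Print the name of every archive that is missing from a directory
# (default: the current directory). Prints nothing if both are present.
missing_archives() {
  dir=${1:-.}
  for f in jdk-8u221-linux-x64.tar.gz datax.tar.gz; do
    [ -f "$dir/$f" ] || echo "$f"
  done
}

# Check the current directory.
missing_archives .
```

An empty result means both archives are in place and the build can proceed.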

Create a Dockerfile in the current directory (the one that holds the two archives):

FROM centos/python-27-centos7

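# Install the JDK (ADD extracts a local tar archive automatically)

ADD jdk-8u221-linux-x64.tar.gz /opt/local/

ENV JAVA_HOME=/opt/local/jdk1.8.0_221

ENV PATH=$JAVA_HOME/bin:$PATH

# Add datax.tar.gz and extract it into /opt/local

ADD datax.tar.gz /opt/local/

# Set the working directory; optional, since it can also be set at run time with docker run -w

WORKDIR /opt/local/datax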

ENTRYPOINT ["bash"]

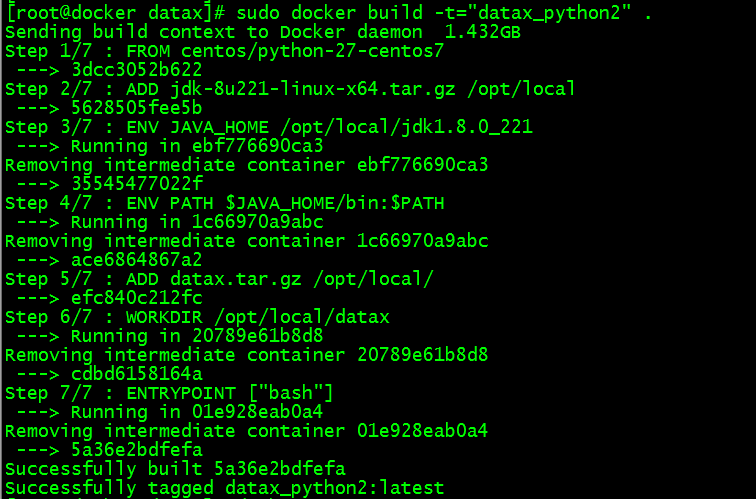

Build the image with docker build:

sudo docker build -t="datax_python2" .

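Because the build context is `.` and no `-f` option is given, this command must be run in the directory that contains the Dockerfile.

The -t option gives the image a name (tag).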
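If you would rather not change directories first, the Dockerfile and the build context can be passed explicitly with `-f` and a path. The following is only a sketch: the function name `build_image` and the `DOCKER` override variable are assumptions of this example, and depending on your setup you may still need `sudo` as in the command above.

```shell
# DOCKER can be overridden (for example DOCKER=podman). This variable is an
# assumption of the sketch, not something the original setup defines.
DOCKER="${DOCKER:-docker}"

# Build the datax_python2 image from a given context directory
# (default: the current directory), using the Dockerfile inside it.
build_image() {
  ctx=${1:-.}
  "$DOCKER" build -t datax_python2 -f "$ctx/Dockerfile" "$ctx"
}
```

Calling `build_image /path/to/context` from any directory should then be equivalent to running the build inside that directory.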

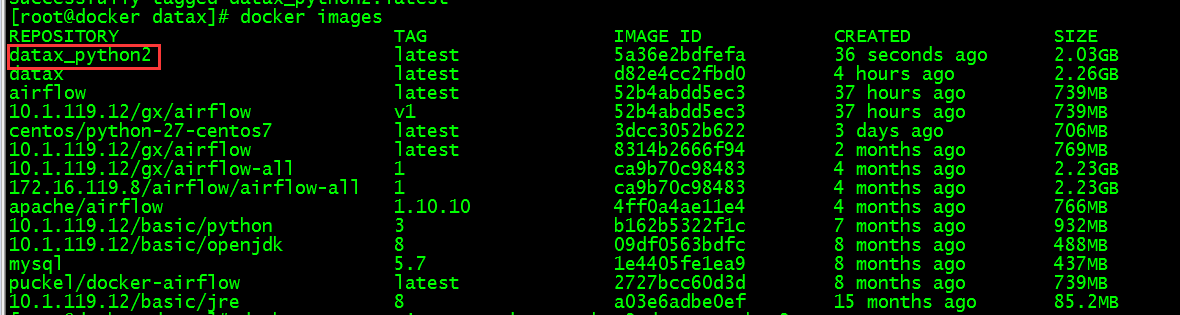

List the local images with `docker images`; the newly created image should appear.

Create a container from the image:

docker run -t -i --name dataxpython2 datax_python2

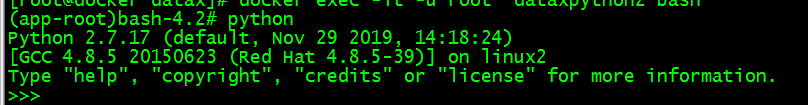

Check the Python environment:

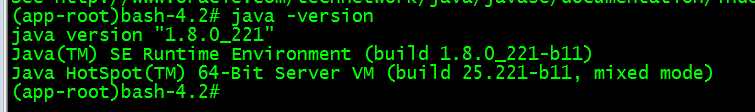

Check the JDK environment:
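Both checks can also be run without opening an interactive shell. Since the image's ENTRYPOINT is `bash`, any arguments after the image name are handed to bash, so `-c '...'` runs a one-off command. This is a sketch under those assumptions; the function name `check_runtime_versions` and the `DOCKER` variable are hypothetical.

```shell
# DOCKER can be overridden (for example DOCKER=podman). This variable is an
# assumption of the sketch, not something the original setup defines.
DOCKER="${DOCKER:-docker}"

# Print the Python and Java versions from a throwaway container.
# --rm removes the container again once the command has finished.
check_runtime_versions() {
  "$DOCKER" run --rm datax_python2 -c 'python --version && java -version'
}
```

Run `check_runtime_versions` after the image has been built; a Python 2.7.x line and a Java 1.8.0_221 line would indicate that both runtimes are on the PATH.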

Run DataX's bundled self-test job:

python /opt/local/datax/bin/datax.py /opt/local/datax/job/job.json

(app-root)bash-4.2# python /opt/local/datax/bin/datax.py /opt/local/datax/job/job.json
DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.
2020-08-14 15:46:23.899 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl
2020-08-14 15:46:23.909 [main] INFO Engine - the machine info =>
osInfo: Oracle Corporation 1.8 25.221-b11
jvmInfo: Linux amd64 3.10.0-957.el7.x86_64
cpu num: 1
totalPhysicalMemory: -0.00G
freePhysicalMemory: -0.00G
maxFileDescriptorCount: -1
currentOpenFileDescriptorCount: -1
GC Names [Copy, MarkSweepCompact]
MEMORY_NAME | allocation_size | init_size
Eden Space | 273.06MB | 273.06MB
Code Cache | 240.00MB | 2.44MB
Survivor Space | 34.13MB | 34.13MB
Compressed Class Space | 1,024.00MB | 0.00MB
Metaspace | -0.00MB | 0.00MB
Tenured Gen | 682.69MB | 682.69MB
2020-08-14 15:46:23.933 [main] INFO Engine -
{
  "content":[
    {
      "reader":{
        "name":"streamreader",
        "parameter":{
          "column":[
            { "type":"string", "value":"DataX" },
            { "type":"long", "value":19890604 },
            { "type":"date", "value":"1989-06-04 00:00:00" },
            { "type":"bool", "value":true },
            { "type":"bytes", "value":"test" }
          ],
          "sliceRecordCount":100000
        }
      },
      "writer":{
        "name":"streamwriter",
        "parameter":{
          "encoding":"UTF-8",
          "print":false
        }
      }
    }
  ],
  "setting":{
    "errorLimit":{
      "percentage":0.02,
      "record":0
    },
    "speed":{
      "byte":10485760
    }
  }
}
2020-08-14 15:46:23.957 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
2020-08-14 15:46:23.958 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2020-08-14 15:46:23.958 [main] INFO JobContainer - DataX jobContainer starts job.
2020-08-14 15:46:23.960 [main] INFO JobContainer - Set jobId = 0
2020-08-14 15:46:23.990 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
2020-08-14 15:46:23.995 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .
2020-08-14 15:46:23.995 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .
2020-08-14 15:46:23.995 [job-0] INFO JobContainer - jobContainer starts to do split ...
2020-08-14 15:46:23.996 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 10485760 bytes.
2020-08-14 15:46:23.997 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks.
2020-08-14 15:46:23.997 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks.
2020-08-14 15:46:24.025 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
2020-08-14 15:46:24.036 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2020-08-14 15:46:24.041 [job-0] INFO JobContainer - Running by standalone Mode.
2020-08-14 15:46:24.067 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.
2020-08-14 15:46:24.073 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.
2020-08-14 15:46:24.074 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
2020-08-14 15:46:24.102 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2020-08-14 15:46:24.312 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[219]ms
2020-08-14 15:46:24.313 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.
2020-08-14 15:46:34.076 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.083s | All Task WaitReaderTime 0.094s | Percentage 100.00%
2020-08-14 15:46:34.076 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.
2020-08-14 15:46:34.076 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.
2020-08-14 15:46:34.076 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.
2020-08-14 15:46:34.076 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.
2020-08-14 15:46:34.077 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/local/datax/hook
2020-08-14 15:46:34.078 [job-0] INFO JobContainer -
[total cpu info] =>
averageCpu | maxDeltaCpu | minDeltaCpu
-1.00% | -1.00% | -1.00%
[total gc info] =>
NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime
Copy | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
MarkSweepCompact | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
2020-08-14 15:46:34.078 [job-0] INFO JobContainer - PerfTrace not enable!
2020-08-14 15:46:34.078 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.083s | All Task WaitReaderTime 0.094s | Percentage 100.00%
2020-08-14 15:46:34.079 [job-0] INFO JobContainer -
任务启动时刻 : 2020-08-14 15:46:23
任务结束时刻 : 2020-08-14 15:46:34
任务总计耗时 : 10s
任务平均流量 : 253.91KB/s
记录写入速度 : 10000rec/s
读出记录总数 : 100000
读写失败总数 : 0

The test succeeded!
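Rather than reading the whole log by eye, the success line can be checked with `grep`. The sketch below assumes the log format shown in this post ("DataX jobId [0] completed successfully.") and that the output was saved to a file; the function name `datax_job_succeeded` is hypothetical.

```shell
# Return 0 if a saved DataX log contains the success line, non-zero otherwise.
datax_job_succeeded() {
  grep -q 'DataX jobId \[[0-9]*\] completed successfully' "$1"
}

# Demo with the success line copied from the run above.
log=$(mktemp)
printf '%s\n' '2020-08-14 15:46:34.076 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.' > "$log"
if datax_job_succeeded "$log"; then
  echo "job succeeded"
else
  echo "job failed"
fi
rm -f "$log"
```

For a real run, the output could be captured first, for example by appending `| tee run.log` to the datax.py command, and then checked with `datax_job_succeeded run.log`.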