前面讲过了怎么通过mapreduce把mysql的一张表的数据放到另外一张表中,这次讲的是把mysql的数据读取到hdfs里面去

具体怎么搭建环境我这里就不多说了。参考

通过mapreduce把mysql的一张表的数据导到另外一张表中

也在eclipse里面创建一个mapreduce工程

具体的实现代码

package com.gong.mrmysql; import java.io.DataInput; import java.io.DataOutput; import java.io.IOException; import java.sql.PreparedStatement; import java.sql.ResultSet; import java.sql.SQLException; import java.util.Iterator; import org.apache.hadoop.filecache.DistributedCache; import org.apache.hadoop.fs.FileSystem; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.io.Writable; import org.apache.hadoop.mapred.FileOutputFormat; import org.apache.hadoop.mapred.JobClient; import org.apache.hadoop.mapred.JobConf; import org.apache.hadoop.mapred.MapReduceBase; import org.apache.hadoop.mapred.Mapper; import org.apache.hadoop.mapred.OutputCollector; import org.apache.hadoop.mapred.Reducer; import org.apache.hadoop.mapred.Reporter; import org.apache.hadoop.mapred.lib.IdentityReducer; import org.apache.hadoop.mapred.lib.db.DBConfiguration; import org.apache.hadoop.mapred.lib.db.DBInputFormat; import org.apache.hadoop.mapred.lib.db.DBOutputFormat; import org.apache.hadoop.mapred.lib.db.DBWritable; /** * Function: 测试 mr 与 mysql 的数据交互,此测试用例将一个表中的数据复制到另一张表中 * 实际当中,可能只需要从 mysql 读,或者写到 mysql 中。 * date: 2013-7-29 上午2:34:04 <br/> * @author june */ public class Mysql2Mr { // DROP TABLE IF EXISTS `hadoop`.`studentinfo`; // CREATE TABLE studentinfo ( // id INTEGER NOT NULL PRIMARY KEY, // name VARCHAR(32) NOT NULL); public static class StudentinfoRecord implements Writable, DBWritable { int id; String name; //构造方法 public StudentinfoRecord() { } //Writable接口是对数据流进行操作的,所以输入是DataInput类对象 public void readFields(DataInput in) throws IOException { this.id = in.readInt(); //输入流中的读取下一个整数,并返回 this.name = Text.readString(in); } public String toString() { return new String(this.id + " " + this.name); } //DBWritable负责对数据库进行操作,所以输出格式是PreparedStatement //PreparedStatement接口继承并扩展了Statement接口,用来执行动态的SQL语句,即包含参数的SQL语句 @Override public void write(PreparedStatement stmt) throws SQLException { stmt.setInt(1, this.id); stmt.setString(2, this.name); } //DBWritable负责对数据库进行操作,输入格式是ResultSet // ResultSet接口类似于一张数据表,用来暂时存放从数据库查询操作所获得的结果集 @Override public void readFields(ResultSet result) throws SQLException { this.id = result.getInt(1); this.name = result.getString(2); } //Writable接口是对数据流进行操作的,所以输出是DataOutput类对象 @Override public void write(DataOutput out) throws IOException { out.writeInt(this.id); Text.writeString(out, this.name); } } // 记住此处是静态内部类,要不然你自己实现无参构造器,或者等着抛异常: // Caused by: java.lang.NoSuchMethodException: DBInputMapper.<init>() // http://stackoverflow.com/questions/7154125/custom-mapreduce-input-format-cant-find-constructor // 网上脑残式的转帖,没见到一个写对的。。。 public static class DBInputMapper extends MapReduceBase implements Mapper<LongWritable, StudentinfoRecord, LongWritable, Text> { public void map(LongWritable key, StudentinfoRecord value, OutputCollector<LongWritable, Text> collector, Reporter reporter) throws IOException { collector.collect(new LongWritable(value.id), new Text(value.toString())); } } public static class MyReducer extends MapReduceBase implements Reducer<LongWritable, Text, StudentinfoRecord, Text> { @Override public void reduce(LongWritable key, Iterator<Text> values, OutputCollector<StudentinfoRecord, Text> output, Reporter reporter) throws IOException { String[] splits = values.next().toString().split(" "); StudentinfoRecord r = new StudentinfoRecord(); r.id = Integer.parseInt(splits[0]); r.name = splits[1]; output.collect(r, new Text(r.name)); } } public static void main(String[] args) throws IOException { JobConf conf = new JobConf(Mysql2Mr.class); DistributedCache.addFileToClassPath(new Path("hdfs://192.168.241.13:9000/mysqlconnector/mysql-connector-java-5.1.38-bin.jar"), conf); conf.setMapOutputKeyClass(LongWritable.class); conf.setMapOutputValueClass(Text.class); conf.setOutputKeyClass(LongWritable.class); conf.setOutputValueClass(Text.class); // conf.setOutputFormat(DBOutputFormat.class); conf.setInputFormat(DBInputFormat.class); // mysql to hdfs conf.set("fs.defaultFS", "hdfs://192.168.241.13:9000");//在配置文件conf中指定所用的文件系统---HDFS conf.setReducerClass(IdentityReducer.class); Path outPath = new Path("hdfs://192.168.241.13:9000/student/out1"); FileSystem.get(conf).delete(outPath, true); FileOutputFormat.setOutputPath(conf, outPath); DBConfiguration.configureDB(conf, "com.mysql.jdbc.Driver", "jdbc:mysql://192.168.241.13:3306/mrtest", "root", "543116"); String[] fields = { "id", "name" }; // 从 t 表读数据 DBInputFormat.setInput(conf, StudentinfoRecord.class, "t", null, "id", fields); // mapreduce 将数据输出到 t2 表 //DBOutputFormat.setOutput(conf, "t2", "id", "name"); // FileOutputFormat.setOutputPath(conf, new Path("hdfs://192.168.241.13:9000/student/out1")); conf.setMapperClass(org.apache.hadoop.mapred.lib.IdentityMapper.class); conf.setMapperClass(DBInputMapper.class); // conf.setReducerClass(MyReducer.class); JobClient.runJob(conf); } }

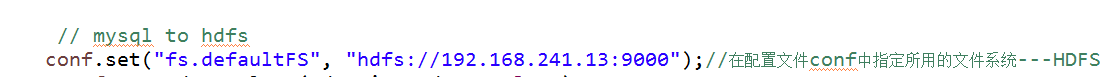

特别要主要的是在主函数里面添加这么一句话

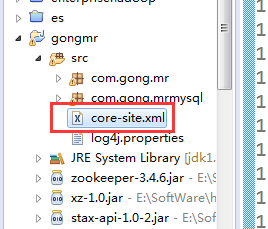

如果不添加这句话的话就不能识别你的hdfs路径了,除了这个方法之外还,不想添加这句话的话还可以把集群的core-site.xml文件直接拷贝一份放到工程的src目录下

这样也是可以的

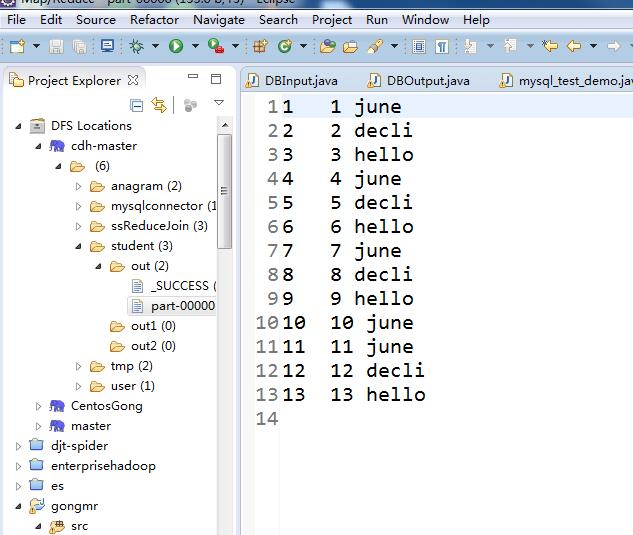

运行程序

可以看到hdfs的文件上面已经有mysql数据库表的内容了