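Installing K8S in China without bypassing the firewall, Part 1: Installing Docker

Installing K8S in China without bypassing the firewall, Part 2: Installing Kubernetes

Installing K8S in China without bypassing the firewall, Part 3: Installing kubernetes-dashboard with Helm

Installing K8S in China without bypassing the firewall, Part 4: Problems encountered during installation and how to solve them

This article follows the blog post by "青蛙小白" step by step, and the whole process went without problems: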

https://blog.frognew.com/2019/07/kubeadm-install-kubernetes-1.15.html

3 Installing kubernetes-dashboard with Helm

3.1 Installing Helm

$ curl -O https://get.helm.sh/helm-v2.14.1-linux-amd64.tar.gz

$ tar -zxvf helm-v2.14.1-linux-amd64.tar.gz

$ cd linux-amd64/

$ cp helm /usr/local/bin/
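The download steps above hard-code one release and one platform. As a minimal sketch, the URL could be assembled from its parts so the same commands can be reused for another release. The function name helm_url and its parameters are hypothetical helpers; only the v2.14.1 linux-amd64 URL is confirmed by the steps above, and other combinations are assumed to follow the same naming pattern.

```shell
#!/bin/sh
# Hypothetical helper: build the Helm tarball URL from version, OS and arch.
# Only the v2.14.1 / linux / amd64 combination is taken from this article;
# other combinations are assumed to follow the same naming pattern.
helm_url() {
    version="$1"
    os="${2:-linux}"
    arch="${3:-amd64}"
    printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$version" "$os" "$arch"
}

# Print the URL used in this article.
helm_url v2.14.1
```

It could then be used as curl -O "$(helm_url v2.14.1)" in place of the fixed URL.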

Create the file helm-rbac.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

Create the service account tiller that tiller will use, and bind a suitable role to it:

$ kubectl create -f helm-rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

Deploy tiller with helm:

$ helm init --service-account tiller --skip-refresh

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

By default, tiller is deployed in the kube-system namespace of the k8s cluster:

$ kubectl get pod -n kube-system -l app=helm

NAME READY STATUS RESTARTS AGE

tiller-deploy-c4fd4cd68-dwkhv 1/1 Running 0 83s

$ helm version

Client: &version.Version{SemVer:"v2.14.1", GitCommit:"5270352a09c7e8b6e8c9593002a73535276507c0", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.14.1", GitCommit:"5270352a09c7e8b6e8c9593002a73535276507c0", GitTreeState:"clean"}

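Note that this step needs network access to gcr.io and kubernetes-charts.storage.googleapis.com. If they cannot be reached, run helm init --service-account tiller --tiller-image /tiller:v2.13.1 --skip-refresh, with the address of a private image registry in front of /tiller, to use the tiller image from that registry. For example: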

helm init --service-account tiller --tiller-image gcr.azk8s.cn/kubernetes-helm/tiller:v2.14.1 --skip-refresh

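What if you have already run helm init without this option? Use "kubectl edit deployment tiller-deploy -n kube-system" to replace the default gcr image source. The same approach works for any other image hosted on gcr.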
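The image substitution described above is a plain prefix swap, which can be sketched as a small shell function. The name mirror_image is a hypothetical helper. Only the gcr.io to gcr.azk8s.cn mapping is taken from the helm init example above; references from any other registry are printed unchanged rather than guessed at.

```shell
#!/bin/sh
# Hypothetical helper: rewrite a gcr.io image reference to the gcr.azk8s.cn mirror.
# Only the gcr.io -> gcr.azk8s.cn mapping comes from this article; any other
# registry is printed unchanged.
mirror_image() {
    case "$1" in
        gcr.io/*) printf 'gcr.azk8s.cn/%s\n' "${1#gcr.io/}" ;;
        *)        printf '%s\n' "$1" ;;
    esac
}

# Print the mirrored reference for the tiller image used in this article.
mirror_image gcr.io/kubernetes-helm/tiller:v2.14.1
```

The printed reference can be passed to --tiller-image, or pasted into the image field when editing the tiller-deploy deployment.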

Finally, on node1, point the Helm chart repository at the mirror provided by Azure:

$ helm repo add stable http://mirror.azure.cn/kubernetes/charts

"stable" has been added to your repositories

$ helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts

local http://127.0.0.1:8879/charts

3.2 Deploying Nginx Ingress with Helm

We use kub1 (192.168.15.174) as the edge node and label it accordingly:

$ kubectl label node kub1 node-role.kubernetes.io/edge=

node/kub1 labeled

$ kubectl get node

NAME STATUS ROLES AGE VERSION

kub1 Ready edge,master 6h43m v1.15.2

kub2 Ready <none> 6h36m v1.15.2

The values file ingress-nginx.yaml for the stable/nginx-ingress chart is as follows:

controller:
  replicaCount: 1
  hostNetwork: true
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - nginx-ingress
              - key: component
                operator: In
                values:
                  - controller
          topologyKey: kubernetes.io/hostname
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: PreferNoSchedule
defaultBackend:
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: PreferNoSchedule

Install nginx-ingress:

$ helm repo update

$ helm install stable/nginx-ingress \
    -n nginx-ingress \
    --namespace ingress-nginx \
    -f ingress-nginx.yaml

If a request to http://192.168.15.174 returns default backend, the deployment is complete.

If the backend pod cannot find its image, handle it the same way as the missing tiller image above.
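The check against http://192.168.15.174 can also be scripted. The sketch below is only an illustration: is_default_backend is a hypothetical helper that reads a response body on stdin, and the canned body "default backend - 404" is an assumption about what the default backend returns, since the text above only says that the response contains default backend.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the response body read from stdin
# mentions the default backend.
is_default_backend() {
    grep -q 'default backend'
}

# Example with a canned body (assumed wording). On a live cluster the body
# would come from: curl -s http://192.168.15.174
if printf 'default backend - 404\n' | is_default_backend; then
    echo "ingress controller is answering"
fi
```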

3.3 Deploying the dashboard with Helm

kubernetes-dashboard.yaml:

image:
  repository: k8s.gcr.io/kubernetes-dashboard-amd64
  tag: v1.10.1
ingress:
  enabled: true
  hosts:
    - k8s.frognew.com
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  tls:
    - secretName: frognew-com-tls-secret
      hosts:
        - k8s.frognew.com
nodeSelector:
  node-role.kubernetes.io/edge: ''
tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: PreferNoSchedule
rbac:
  clusterAdminRole: true

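Note the hosts options above. Because I was testing on a LAN, I simply deleted both hosts entries; after the install, accessing the dashboard by IP works just the same.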

$ helm install stable/kubernetes-dashboard \
    -n kubernetes-dashboard \
    --namespace kube-system \
    -f kubernetes-dashboard.yaml

$ kubectl -n kube-system get secret | grep kubernetes-dashboard-token

kubernetes-dashboard-token-5d5b2 kubernetes.io/service-account-token 3 4h24m

$ kubectl describe -n kube-system secret/kubernetes-dashboard-token-5d5b2

Name: kubernetes-dashboard-token-5d5b2

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 82c89647-1a1c-450f-b2bb-8753de12f104

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi01ZDViMiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjgyYzg5NjQ3LTFhMWMtNDUwZi1iMmJiLTg3NTNkZTEyZjEwNCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.UF2Fnq-SnqM3oAIwJFvXsW64SAFstfHiagbLoK98jWuyWDPoYyPQvdB1elRsJ8VWSzAyTyvNw2MD9EgzfDdd9_56yWGNmf4Jb6prbA43PE2QQHW69kLiA6seP5JT9t4V_zpjnhpGt0-hSfoPvkS4aUnJBllldCunRGYrxXq699UDt1ah4kAmq5MqhH9l_9jMtcPwgpsibBgJY-OD8vElITv63fP4M16DFtvig9u0EnIwhAGILzdLSkfwBJzLvC_ukii_2A9e-v2OZBlTXYgNQ1MnS7CvU8mu_Ycoxqs0r1kZ4MjlNOUOt6XFjaN8BlPwfEPf2VNx0b1ZgZv-euQQtA

Log in with the token above in the dashboard login window.

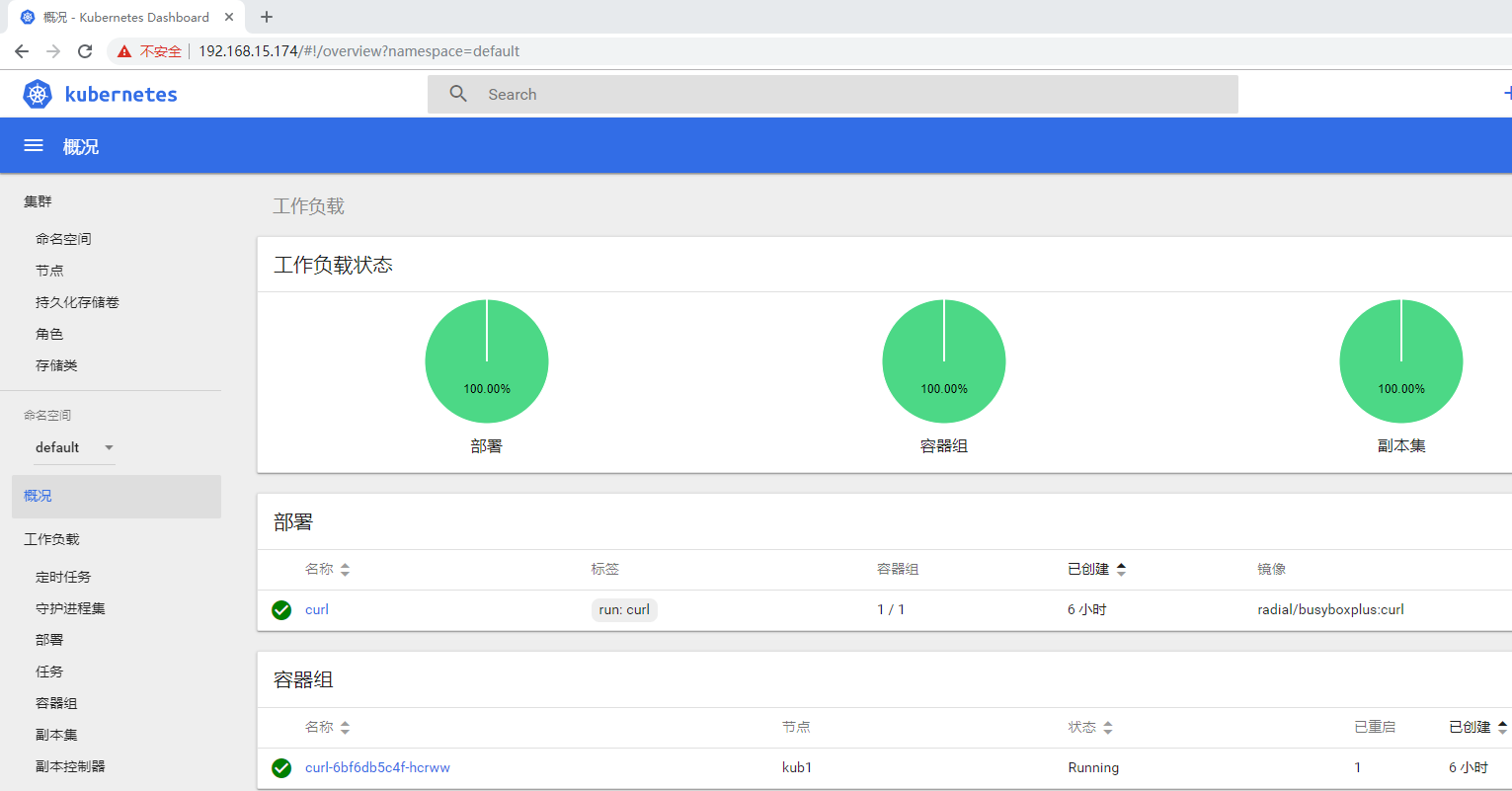

Access URL: https://192.168.15.174. Choose the token login method and paste in the token above.
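Copying the token by hand out of the describe output is error-prone because of its length. A minimal sketch of pulling it out with awk follows; extract_token is a hypothetical helper, and the sample input only mimics the shape of the Data section shown above, with the token replaced by a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the "token:" field from
# `kubectl describe secret` output read on stdin.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Canned sample in the shape of the Data section above; the token value
# here is a placeholder, not a real token.
printf 'ca.crt: 1025 bytes\nnamespace: 11 bytes\ntoken: EXAMPLE.TOKEN.VALUE\n' | extract_token
```

On the cluster, the same function could be fed with kubectl describe -n kube-system secret/kubernetes-dashboard-token-5d5b2 | extract_token.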

3.4 Deploying metrics-server with Helm

metrics-server.yaml:

args:
  - --logtostderr
  - --kubelet-insecure-tls
  - --kubelet-preferred-address-types=InternalIP
nodeSelector:
  node-role.kubernetes.io/edge: ''
tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: PreferNoSchedule

$ helm install stable/metrics-server \
    -n metrics-server \
    --namespace kube-system \
    -f metrics-server.yaml

Use the following commands to get basic metrics for the cluster nodes and pods:

$ kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

kub1 433m 5% 2903Mi 37%

kub2 101m 1% 1446Mi 18%

$ kubectl top pod -n kube-system

NAME CPU(cores) MEMORY(bytes)

coredns-5c98db65d4-7n4gm 7m 14Mi

coredns-5c98db65d4-s5zfr 7m 14Mi

etcd-kub1 49m 72Mi

kube-apiserver-kub1 61m 219Mi

kube-controller-manager-kub1 36m 47Mi

kube-flannel-ds-amd64-mssbt 5m 17Mi

kube-flannel-ds-amd64-pb4dz 5m 15Mi

kube-proxy-hc4kh 1m 17Mi

kube-proxy-rp4cx 1m 18Mi

kube-scheduler-kub1 3m 15Mi

kubernetes-dashboard-77f9fd6985-ctwmc 1m 23Mi

metrics-server-75bfbbbf76-6blkn 4m 17Mi

tiller-deploy-7dd9d8cd47-ztl7w 1m 12Mi
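Once metrics-server is running, the kubectl top node output shown earlier can be filtered in a script. As a rough sketch, the function below lists nodes whose MEMORY% is above a threshold; nodes_over_mem is a hypothetical helper, and it assumes the five-column layout shown in the output above.

```shell
#!/bin/sh
# Hypothetical helper: list nodes whose MEMORY% exceeds a threshold.
# Reads `kubectl top node` output on stdin; $1 is the threshold in percent.
# Assumes the five-column layout shown in this article.
nodes_over_mem() {
    awk -v limit="$1" 'NR > 1 {
        pct = $5
        sub(/%/, "", pct)            # strip the trailing percent sign
        if (pct + 0 > limit + 0) print $1
    }'
}

# Sample rows copied from the kubectl top node output in this article.
printf '%s\n' \
    'NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%' \
    'kub1 433m 5% 2903Mi 37%' \
    'kub2 101m 1% 1446Mi 18%' | nodes_over_mem 30
```

On the cluster this would be run as kubectl top node | nodes_over_mem 30.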