环境

虚拟机:VMware 10

Linux版本:CentOS-6.5-x86_64

客户端:Xshell4

FTP:Xftp4

jdk8

hadoop-3.1.1

apache-hive-3.1.1

一、Hive 参数

1、Hive 参数类型

hive当中的参数、变量,都是以命名空间开头;

通过${}方式进行引用,其中system、env下的变量必须以前缀开头;

在Hive CLI查看参数

#显示所有参数 hive>set; #查看单个参数 hive> set hive.cli.print.header; hive.cli.print.header=false

2、Hive参数设置方式

(1)、修改配置文件 ${HIVE_HOME}/conf/hive-site.xml 这会使所有客户端都生效

(2)、启动hive cli时,通过--hiveconf key=value的方式进行设置 这只会在当前客户端生效

例:

[root@PCS102 ~]# hive --hiveconf hive.cli.print.header=true hive> set hive.cli.print.header; hive.cli.print.header=true hive>

(3)、进入cli之后,通过使用set命令设置 这只会在当前客户端生效

hive> set hive.cli.print.header; hive.cli.print.header=false hive> select * from wc; OK hadoop 2 hbase 1 hello 2 name 3 world 1 zookeeper 1 Time taken: 2.289 seconds, Fetched: 6 row(s) hive> set hive.cli.print.header=true; hive> set hive.cli.print.header; hive.cli.print.header=true hive> select * from wc; OK wc.word wc.totalword hadoop 2 hbase 1 hello 2 name 3 world 1 zookeeper 1 Time taken: 2.309 seconds, Fetched: 6 row(s) hive>

(4)使用.hiverc文件设置

当前用户家目录(例:root用户:家目录是/root)下的.hiverc文件

如果没有,可直接创建该文件,将需要设置的参数写到该文件中,hive启动运行时,会加载改文件中的配置。

[root@PCS102 ~]# vi ~/.hiverc set hive.cli.print.header=true :wq

[root@PCS102 ~]# ll -a|grep hive -rw-r--r--. 1 root root 5562 Feb 15 15:01 .hivehistory -rw-r--r--. 1 root root 31 Feb 15 15:03 .hiverc

另外:

.hivehistory 文件记录hive历史操作命令集

#重新登录 可以发现配置生效了 影响当前linux用户登录的客户端

[root@PCS102 ~]# hive hive> set hive.cli.print.header; hive.cli.print.header=true hive>

二、动态分区

参数设置:

开启支持动态分区

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nostrict;

默认:strict(至少有一个分区列是静态分区)

其他参数

set hive.exec.max.dynamic.partitions.pernode;

每一个执行mr节点上,允许创建的动态分区的最大数量(100)

set hive.exec.max.dynamic.partitions;

所有执行mr节点上,允许创建的所有动态分区的最大数量(1000)

set hive.exec.max.created.files;

所有的mr job允许创建的文件的最大数量(100000)

数据 /root/data:

1,小明1,18,boy,lol-book-movie,beijing:shangxuetang-shanghai:pudong 2,小明2,20,man,lol-book-movie,beijing:shangxuetang-shanghai:pudong 3,小明3,21,boy,lol-book-movie,beijing:shangxuetang-shanghai:pudong 4,小明4,21,man,lol-book-movie,beijing:shangxuetang-shanghai:pudong 5,小明5,21,boy,lol-book-movie,beijing:shangxuetang-shanghai:pudong 6,小明6,21,man,lol-book-movie,beijing:shangxuetang-shanghai:pudong

1、原始表

hive> CREATE TABLE psn21( > id INT, > name STRING, > age INT, > sex string, > likes ARRAY<STRING>, > address MAP<STRING,STRING> > ) > ROW FORMAT DELIMITED > FIELDS TERMINATED BY ',' > COLLECTION ITEMS TERMINATED BY '-' > MAP KEYS TERMINATED BY ':' > LINES TERMINATED BY ' '; OK Time taken: 0.183 seconds hive> LOAD DATA LOCAL INPATH '/root/data' INTO TABLE psn21; Loading data to table default.psn21 OK Time taken: 0.248 seconds hive> select * from psn21; OK psn21.id psn21.name psn21.age psn21.sex psn21.likes psn21.address 1 小明1 18 boy ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 2 小明2 20 man ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 3 小明3 21 boy ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 4 小明4 21 man ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 5 小明5 21 boy ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 6 小明6 21 man ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} Time taken: 0.113 seconds, Fetched: 6 row(s) hive>

2、分区表

hive> CREATE TABLE psn22( > id INT, > name STRING, > likes ARRAY<STRING>, > address MAP<STRING,STRING> > ) > partitioned by (age int,sex string) > ROW FORMAT DELIMITED > FIELDS TERMINATED BY ',' > COLLECTION ITEMS TERMINATED BY '-' > MAP KEYS TERMINATED BY ':' > LINES TERMINATED BY ' '; OK Time taken: 0.045 seconds

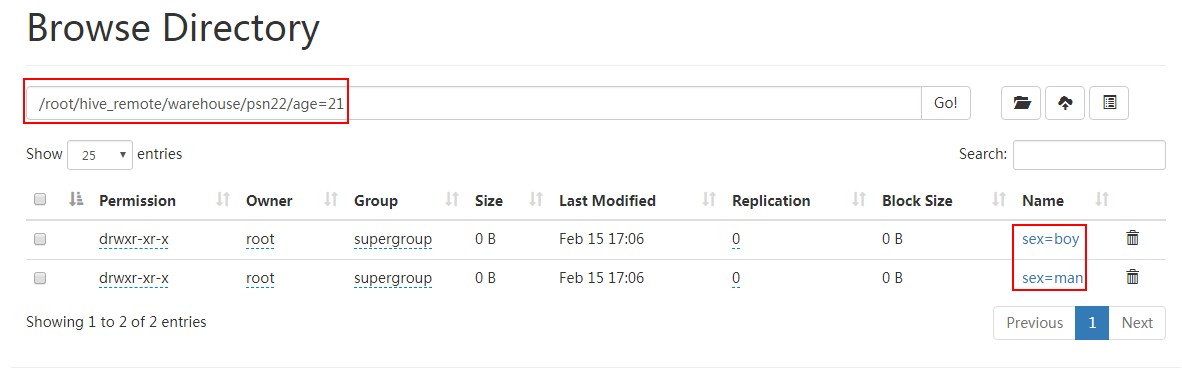

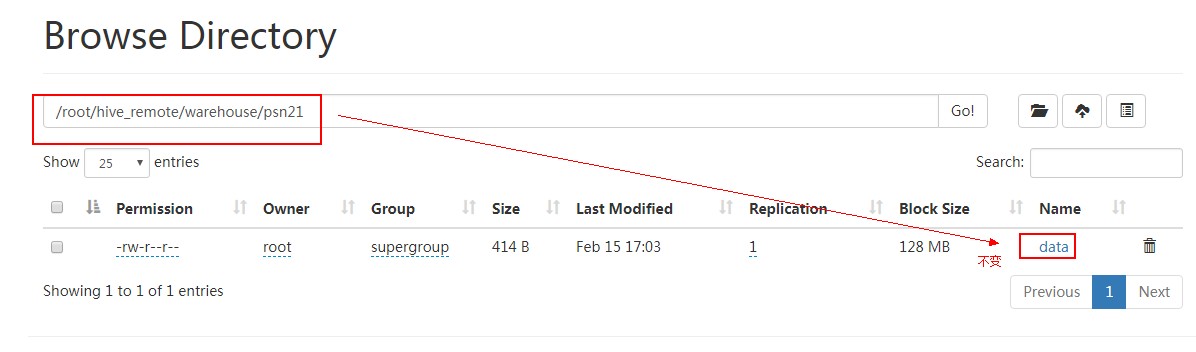

3、原始表数据导入分区表(注意psn21下数据不变)

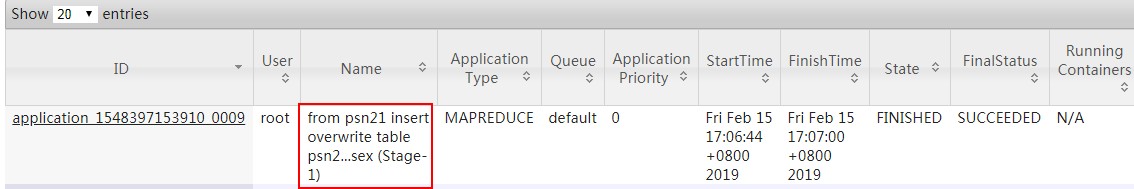

hive> from psn21 > insert overwrite table psn22 partition(age, sex) > select id, name,likes, address,age, sex distribute by age, sex; Query ID = root_20190215170643_7aeb9dae-62d5-49fe-ab37-022446f6a004 Total jobs = 1 Launching Job 1 out of 1 Number of reduce tasks not specified. Estimated from input data size: 1 In order to change the average load for a reducer (in bytes): set hive.exec.reducers.bytes.per.reducer=<number> In order to limit the maximum number of reducers: set hive.exec.reducers.max=<number> In order to set a constant number of reducers: set mapreduce.job.reduces=<number> Starting Job = job_1548397153910_0009, Tracking URL = http://PCS102:8088/proxy/application_1548397153910_0009/ Kill Command = /usr/local/hadoop-3.1.1/bin/mapred job -kill job_1548397153910_0009 Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1 2019-02-15 17:06:50,930 Stage-1 map = 0%, reduce = 0% 2019-02-15 17:06:55,069 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.86 sec 2019-02-15 17:07:00,206 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.26 sec MapReduce Total cumulative CPU time: 6 seconds 260 msec Ended Job = job_1548397153910_0009 Loading data to table default.psn22 partition (age=null, sex=null) Time taken to load dynamic partitions: 0.482 seconds Time taken for adding to write entity : 0.001 seconds MapReduce Jobs Launched: Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 6.26 sec HDFS Read: 13250 HDFS Write: 599 SUCCESS Total MapReduce CPU Time Spent: 6 seconds 260 msec OK id name likes address age sex Time taken: 18.572 seconds hive>

查看该分区下数据:

[root@PCS102 ~]# hdfs dfs -cat /root/hive_remote/warehouse/psn22/age=21/sex=boy/* 5,小明5,lol-book-movie,beijing:shangxuetang-shanghai:pudong 3,小明3,lol-book-movie,beijing:shangxuetang-shanghai:pudong [root@PCS102 ~]#

全部分区数据:

hive> select * from psn22; OK psn22.id psn22.name psn22.likes psn22.address psn22.age psn22.sex 1 小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 18 boy 2 小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20 man 5 小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 21 boy 3 小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 21 boy 6 小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 21 man 4 小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 21 man Time taken: 0.141 seconds, Fetched: 6 row(s) hive>

三、分桶

1、分桶

分桶表是对列值取哈希值的方式,将不同数据放到不同文件中存储。

对于hive中每一个表、分区都可以进一步进行分桶。

由列的哈希值除以桶的个数来决定每条数据划分在哪个桶中。

适用场景:数据抽样( sampling )、map-join

2、开启支持分桶

set hive.enforce.bucketing=true;

默认:false;设置为true之后,mr运行时会根据bucket的个数自动分配reduce task个数。

(用户也可以通过mapred.reduce.tasks自己设置reduce任务个数,但分桶时不推荐使用)

注意:一次作业产生的桶(文件数量)和reduce task个数一致。

3、桶表 抽样查询

select * from bucket_table tablesample(bucket 1 out of 4 on columns);

TABLESAMPLE语法:

TABLESAMPLE(BUCKET x OUT OF y)

x:表示从哪个bucket开始抽取数据

y:必须为该表总bucket数的倍数或因子 (Y表示相隔多少个桶再次抽取)

举例:

当表总bucket数为32时

(1)TABLESAMPLE(BUCKET 2 OUT OF 4),抽取哪些数据?

数据个数:32/4=8份

桶号:2,6(2+4),10(6+4),14(10+4),18(14+4),22(18+4),26(22+4),30(26+4)

(2)TABLESAMPLE(BUCKET 3 OUT OF 8),抽取哪些数据?

数据个数:32/8=4份

桶号:3,11(3+8),19(11+8),27(19+8)

(3)TABLESAMPLE(BUCKET 3 OUT OF 256),抽取哪些数据?

数据个数:32/256=1/8份

桶号:3, 一个桶取1/8即可

4、分桶案例

原始表:

CREATE TABLE psn31 ( id INT, name STRING, age INT) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

数据/root/data2:

1,tom,11 2,cat,22 3,dog,33 4,hive,44 5,hbase,55 6,mr,66 7,alice,77 8,scala,88

数据导入:

hive>load data local inpath '/root/data2' into table psn31;

创建分桶表

CREATE TABLE psnbucket ( id INT, name STRING, age INT) CLUSTERED BY (age) INTO 4 BUCKETS ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

数据分桶预测:

age%4

1,tom,11 --3 2,cat,22 --2 3,dog,33 --1 4,hive,44 --0 5,hbase,55 --3 6,mr,66 --2 7,alice,77 --1 8,scala,88 --0

加载数据 执行MR任务 表目录下有四个文件(桶表不能通过load的方式直接加载数据,只能从另一张表中插入数据):

hive>insert into table psnbucket select id, name, age from psn31;

看一下每个桶文件内的数据是否和预测一样:

[root@PCS102 ~]# hdfs dfs -cat /root/hive_remote/warehouse/psnbucket/000000_0 8,scala,88 4,hive,44 [root@PCS102 ~]# hdfs dfs -cat /root/hive_remote/warehouse/psnbucket/000001_0 7,alice,77 3,dog,33 [root@PCS102 ~]# hdfs dfs -cat /root/hive_remote/warehouse/psnbucket/000002_0 6,mr,66 2,cat,22 [root@PCS102 ~]# hdfs dfs -cat /root/hive_remote/warehouse/psnbucket/000003_0 5,hbase,55 1,tom,11

数据抽样:结果跟之前版本预期不一样 很奇怪 为什么不是取00001_0里的数据?

hive> select id, name, age from psnbucket tablesample(bucket 2 out of 4 on age); OK id name age 6 mr 66 1 tom 11 Time taken: 0.184 seconds, Fetched: 2 row(s) hive>