This part covers the code of the Evaluation Tools for the PoseTrack dataset: https://github.com/leonid-pishchulin/poseval.git

Step 1: Start with README.md

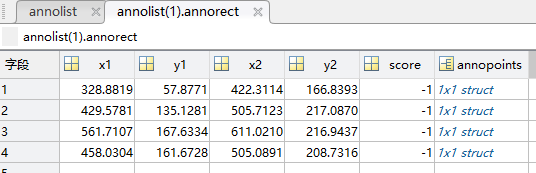

Predictions must be saved separately for each video (video_pred_1.mat, video_pred_2.mat, ...), and their internal data layout must match that of the GT. The final JSON format is:

```
{
  "annolist": [
    {
      "image": [
        {
          "name": "images/bonn_5sec/000342_mpii/00000001.jpg"
        }
      ],
      "annorect": [
        {
          "x1": [625],
          "y1": [94],
          "x2": [681],
          "y2": [178],
          "score": [0.9],
          "track_id": [0],
          "annopoints": [
            {
              "point": [
                {
                  "id": [0],
                  "x": [394],
                  "y": [173],
                },
                { ... }
              ]
            }
          ]
        },
        { ... }
      ],
    },
    { ... }
  ]
}
```
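It is worth catching format mistakes before running evaluate.py. One way is a small check that walks a prediction dict and confirms that the fields shown above are present. The helper below, check_prediction_annolist, is hypothetical and not part of poseval. Besides the README fields, it also requires a per-keypoint "score": the assignGTmulti code shown later asserts that every predicted keypoint has one.

```python
def check_prediction_annolist(data):
    """Return a list of problems found in a prediction dict (empty list = looks OK).

    Hypothetical helper, not part of poseval: it checks the README fields that
    the evaluation code reads, plus the per-keypoint "score" that
    assignGTmulti asserts on.
    """
    if "annolist" not in data:
        return ["missing top-level 'annolist'"]
    problems = []
    for f, frame in enumerate(data["annolist"]):
        image = frame.get("image", [])
        if not image or "name" not in image[0]:
            problems.append(f"frame {f}: missing image name")
        for r, rect in enumerate(frame.get("annorect", [])):
            annopoints = rect.get("annopoints", [])
            if not annopoints or "point" not in annopoints[0]:
                # assignGTmulti silently drops such poses, so flag them here
                problems.append(f"frame {f}, pose {r}: no keypoints")
                continue
            for p, point in enumerate(annopoints[0]["point"]):
                for key in ("id", "x", "y", "score"):
                    if key not in point:
                        problems.append(f"frame {f}, pose {r}, point {p}: missing '{key}'")
    return problems


# The README example as shown, with a single keypoint and no keypoint score
example = {
    "annolist": [
        {
            "image": [{"name": "images/bonn_5sec/000342_mpii/00000001.jpg"}],
            "annorect": [
                {
                    "x1": [625], "y1": [94], "x2": [681], "y2": [178],
                    "score": [0.9], "track_id": [0],
                    "annopoints": [{"point": [{"id": [0], "x": [394], "y": [173]}]}],
                }
            ],
        }
    ]
}
print(check_prediction_annolist(example))  # ["frame 0, pose 0, point 0: missing 'score'"]
```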

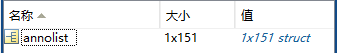

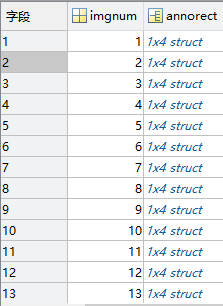

In other words, the data stored in MATLAB should have the following format:

Then convert it to JSON with poseval-master/matlab/mat2json.m, and run poseval-master/py/evaluate.py --args.. to obtain the evaluation results.
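If the predictions are produced in Python rather than MATLAB, the mat2json.m step can be skipped by writing the same structure straight to JSON. This is a minimal sketch that assumes one JSON file per video, named after the matching GT file (check the README for the exact naming rule). save_video_predictions is a hypothetical helper, not part of poseval.

```python
import json
import os
import tempfile


def save_video_predictions(pred_dir, video_name, frames):
    """Write one video's predictions as {"annolist": frames} to pred_dir/video_name.json.

    Hypothetical helper: `frames` is a list of per-frame dicts in the README
    format (one entry per GT frame, in the same order). `video_name` is assumed
    to match the basename of the corresponding GT file.
    """
    os.makedirs(pred_dir, exist_ok=True)
    path = os.path.join(pred_dir, video_name + ".json")
    with open(path, "w") as f:
        json.dump({"annolist": frames}, f)
    return path


# Usage: write one frame for a hypothetical video and read it back
with tempfile.TemporaryDirectory() as pred_dir:
    frames = [{"image": [{"name": "images/bonn_5sec/000342_mpii/00000001.jpg"}],
               "annorect": []}]
    path = save_video_predictions(pred_dir, "000342_mpii", frames)
    with open(path) as f:
        loaded = json.load(f)
```

Writing JSON directly avoids a MATLAB dependency. The structure must still match the README format exactly, because evaluate.py only sees the JSON.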

Step 2: A walkthrough of the three scripts involved: evaluate.py, eval_helpers.py, and evaluateAP.py.

First, evaluate.py passes the command-line arguments to eval_helpers.load_data_dir(argv):

```python
gtFramesAll, prFramesAll = eval_helpers.load_data_dir(argv)
```

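Each frame in the returned prFramesAll holds the predictions for one image to be evaluated: the predicted poses with their score and annopoints, plus a seq_id that identifies the video the frame belongs to. The number of predicted frames must equal the number of GT frames, i.e. len(gt) == len(pr).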
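A simplified loader can illustrate the per-video pairing and the len(gt) == len(pr) requirement. This is only a sketch of the idea, not eval_helpers.load_data_dir itself. It assumes every GT file in gt_dir has a prediction file with the same basename in pred_dir, and it skips any track-ID handling or cleanup the real function may do. load_pairs is a hypothetical name.

```python
import glob
import json
import os
import tempfile


def load_pairs(gt_dir, pred_dir):
    """Simplified sketch: concatenate GT and prediction frames over all videos.

    Assumes every GT file gt_dir/<name>.json has a prediction file
    pred_dir/<name>.json with the same basename.
    """
    gt_frames_all, pr_frames_all = [], []
    gt_paths = sorted(glob.glob(os.path.join(gt_dir, "*.json")))
    for seq_id, gt_path in enumerate(gt_paths):
        pred_path = os.path.join(pred_dir, os.path.basename(gt_path))
        if not os.path.exists(pred_path):
            raise IOError("Prediction file " + pred_path + " does not exist")
        with open(gt_path) as f:
            gt = json.load(f)["annolist"]
        with open(pred_path) as f:
            pr = json.load(f)["annolist"]
        # one prediction frame per GT frame is required: len(gt) == len(pr)
        if len(pr) != len(gt):
            raise ValueError(f"{pred_path}: {len(pr)} prediction frames vs {len(gt)} GT frames")
        for frame in gt + pr:
            frame["seq_id"] = seq_id  # which video the frame came from
        gt_frames_all += gt
        pr_frames_all += pr
    return gt_frames_all, pr_frames_all


# Usage: one hypothetical video with two frames on both sides, then a mismatch
frame = {"image": [{"name": "a.jpg"}], "annorect": []}
with tempfile.TemporaryDirectory() as gt_dir, tempfile.TemporaryDirectory() as pred_dir:
    for d in (gt_dir, pred_dir):
        with open(os.path.join(d, "video_1.json"), "w") as f:
            json.dump({"annolist": [frame, frame]}, f)
    gtFramesAll, prFramesAll = load_pairs(gt_dir, pred_dir)

    # drop one predicted frame: the length check should now fail
    with open(os.path.join(pred_dir, "video_1.json"), "w") as f:
        json.dump({"annolist": [frame]}, f)
    try:
        load_pairs(gt_dir, pred_dir)
        mismatch_detected = False
    except ValueError:
        mismatch_detected = True
```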

Next comes the actual evaluation:

```python
apAll, preAll, recAll = evaluateAP(gtFramesAll, prFramesAll)
```

The code in evaluateAP.py is as follows:

```python
import eval_helpers


def evaluateAP(gtFramesAll, prFramesAll):

    distThresh = 0.5

    # assign predicted poses to GT poses
    # scoresAll[nJoints][len(gtFrames)]: each entry starts as np.zeros([0, 0], dtype=np.float32)
    scoresAll, labelsAll, nGTall, _ = eval_helpers.assignGTmulti(gtFramesAll, prFramesAll, distThresh)

    # compute average precision (AP), precision and recall per part
    apAll, preAll, recAll = computeMetrics(scoresAll, labelsAll, nGTall)

    return apAll, preAll, recAll
```

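Inside assignGTmulti, each predicted keypoint adds one entry to each of two containers:

`scoresAll[i][imgidx] = np.append(scoresAll[i][imgidx], s[i])` appends the score of the i-th keypoint of a predicted pose in image imgidx.

`labelsAll[i][imgidx] = np.append(labelsAll[i][imgidx], m[i])` appends that keypoint's label. m[i] = 1 if the predicted pose is assigned to a GT pose and its i-th keypoint lies within distThresh of that GT joint (distance normalized by head size); otherwise m[i] = 0. Both entries are added only for keypoints that were actually predicted (hasPr is True for joint i).

`nGTall` has shape nJoints x len(gtFrames). For every image, it stores the number of annotated GT instances of each joint.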
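The label rule can be condensed into a short sketch that mirrors the "assign predicted poses to GT poses" loop of assignGTmulti. collect_scores_labels is a hypothetical name. It assumes score, hasPr, match and prToGT have already been computed as in that function, where prToGT[g] is the index of the prediction assigned to GT pose g, or -1.

```python
import numpy as np


def collect_scores_labels(score, hasPr, match, prToGT, scoresAll, labelsAll, imgidx):
    """Append (score, label) for every predicted joint of one image (hypothetical helper).

    score, hasPr: (nPr, nJoints); match: (nPr, nGT, nJoints); prToGT: (nGT,), -1 = no prediction.
    """
    n_pr, n_joints = hasPr.shape
    for p in range(n_pr):
        assigned = np.flatnonzero(prToGT == p)  # GT pose this prediction is assigned to, if any
        if assigned.size:
            m = match[p, assigned[0], :]  # True where the joint is within the distance threshold
        else:
            m = np.zeros(n_joints, dtype=bool)  # unassigned prediction: every joint is an FP
        for i in range(n_joints):
            if hasPr[p, i]:  # only joints that were actually predicted are counted
                scoresAll[i][imgidx] = np.append(scoresAll[i][imgidx], score[p, i])
                labelsAll[i][imgidx] = np.append(labelsAll[i][imgidx], m[i])


# Toy image: 2 predictions, 1 GT pose, 2 joints (made-up values)
n_joints = 2
scoresAll = {i: {0: np.zeros(0)} for i in range(n_joints)}
labelsAll = {i: {0: np.zeros(0)} for i in range(n_joints)}
score = np.array([[0.9, 0.7], [0.6, np.nan]])
hasPr = np.array([[True, True], [True, False]])
match = np.array([[[True, False]], [[True, False]]])  # shape (2, 1, 2)
prToGT = np.array([0])  # the single GT pose is assigned to prediction 0
collect_scores_labels(score, hasPr, match, prToGT, scoresAll, labelsAll, imgidx=0)
```

Prediction 1 is not assigned, so its joint 0 is recorded with label 0 even though it lies close to the GT joint. Its joint 1 was never predicted and is not recorded at all.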

Next, the computeMetrics function:

```python
import numpy as np

import eval_helpers


def computeMetrics(scoresAll, labelsAll, nGTall):
    # one row per joint plus a final row for the mean over all joints
    apAll = np.zeros((nGTall.shape[0] + 1, 1))
    recAll = np.zeros((nGTall.shape[0] + 1, 1))
    preAll = np.zeros((nGTall.shape[0] + 1, 1))
    # iterate over joints
    for j in range(nGTall.shape[0]):
        scores = np.zeros([0, 0], dtype=np.float32)
        labels = np.zeros([0, 0], dtype=np.int8)
        # iterate over images: pool this joint's scores and labels
        for imgidx in range(nGTall.shape[1]):
            scores = np.append(scores, scoresAll[j][imgidx])
            labels = np.append(labels, labelsAll[j][imgidx])
        # compute recall/precision values
        nGT = sum(nGTall[j, :])
        precision, recall, scoresSortedIdxs = eval_helpers.computeRPC(scores, labels, nGT)
        if (len(precision) > 0):
            apAll[j] = eval_helpers.VOCap(recall, precision) * 100
            # last point of the curve, i.e. after all predictions are counted
            preAll[j] = precision[len(precision) - 1] * 100
            recAll[j] = recall[len(recall) - 1] * 100
    # last row: average over joints
    apAll[nGTall.shape[0]] = apAll[:nGTall.shape[0], 0].mean()
    recAll[nGTall.shape[0]] = recAll[:nGTall.shape[0], 0].mean()
    preAll[nGTall.shape[0]] = preAll[:nGTall.shape[0], 0].mean()

    return apAll, preAll, recAll
```
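The per-joint work in computeMetrics can be reproduced on toy data. The steps are: pool one joint's scores and labels over all images, rank by score, and accumulate precision and recall. compute_rpc below sketches what eval_helpers.computeRPC is expected to return, namely precision and recall at every rank with recall normalized by nGT. It was written from that description, not copied from poseval. The toy scoresAll, labelsAll and nGTall values are made up.

```python
import numpy as np


def compute_rpc(scores, labels, n_gt):
    """Precision/recall at each rank after sorting predictions by descending score."""
    order = np.argsort(-np.asarray(scores, dtype=np.float64), kind="stable")
    tp = np.cumsum(np.asarray(labels)[order] == 1)  # true positives among the top-k
    k = np.arange(1, len(order) + 1)                # number of predictions kept
    precision = tp / k                              # TP / (TP + FP)
    recall = tp / n_gt                              # TP / (TP + FN), where TP + FN = nGT
    return precision, recall, order


# Toy data for joint 0 over two images
scoresAll = {0: {0: np.array([0.9, 0.4]), 1: np.array([0.8])}}
labelsAll = {0: {0: np.array([1, 0]), 1: np.array([1])}}
nGTall = np.array([[2, 1]])  # 2 annotated joints in image 0, 1 in image 1

j = 0
scores = np.concatenate([scoresAll[j][i] for i in range(nGTall.shape[1])])
labels = np.concatenate([labelsAll[j][i] for i in range(nGTall.shape[1])])
precision, recall, _ = compute_rpc(scores, labels, nGTall[j, :].sum())
print(precision[-1] * 100, recall[-1] * 100)  # what preAll[j] and recAll[j] would hold
```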

This involves precision and recall. If you are not familiar with them, see this post on evaluation metrics for detection and pose estimation: http://blog.csdn.net/xiaojiajia007/article/details/78746149

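Precision = TP / (TP + FP)

Recall = TP / (TP + FN)

In this evaluation, a TP is a predicted keypoint with label 1, an FP is a predicted keypoint with label 0, and TP + FN is the number of annotated GT joints (nGT in computeMetrics).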
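Here is a worked example with hypothetical counts. One joint has 4 annotated GT instances and 5 predicted keypoints, 3 of them labeled 1. Then TP = 3, FP = 2 and FN = 1, so precision = 3/5 and recall = 3/4.

```python
def precision_recall(labels, n_gt):
    """labels: 1/0 for each predicted keypoint; n_gt: annotated GT joints (= TP + FN)."""
    tp = sum(1 for lab in labels if lab == 1)
    fp = len(labels) - tp  # predictions labeled 0
    fn = n_gt - tp         # GT joints that no prediction hit
    return tp / (tp + fp), tp / (tp + fn)


p, r = precision_recall([1, 1, 0, 1, 0], n_gt=4)
print(p, r)  # 0.6 0.75
```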

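AP measures how well the learned model performs on each class (here, each joint), while mAP measures its performance over all classes. Once the per-class APs are known, mAP is simply their mean. In computeMetrics, this mean is stored in the extra last row of apAll.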
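AP for one joint is the area under its precision/recall curve. The sketch below uses the all-point interpolated, VOC-style AP, which is what the name eval_helpers.VOCap suggests. Treat this as an assumption rather than a copy of poseval's implementation. mAP is then the plain mean over joints, as in the last row that computeMetrics appends (poseval additionally scales by 100). The two curves are hypothetical.

```python
import numpy as np


def voc_ap(recall, precision):
    """All-point interpolated AP (VOC style) from recall/precision arrays ordered by rank."""
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # make precision non-increasing from right to left (upper envelope)
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # sum rectangle areas at the points where recall changes
    idx = np.where(mrec[1:] != mrec[:-1])[0] + 1
    return float(np.sum((mrec[idx] - mrec[idx - 1]) * mpre[idx]))


# Hypothetical per-joint curves (precision/recall at each rank)
ap_joint0 = voc_ap(np.array([1 / 3, 2 / 3, 2 / 3]), np.array([1.0, 1.0, 2 / 3]))
ap_joint1 = voc_ap(np.array([0.5, 1.0]), np.array([1.0, 0.5]))
mAP = np.mean([ap_joint0, ap_joint1])  # mean of per-joint APs
```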

Finally, here is the annotated code of eval_helpers.assignGTmulti(gtFrames, prFrames, distThresh):

```python
import numpy as np

# Joint, getPointGTbyID and getHeadSize are defined elsewhere in eval_helpers.py


def assignGTmulti(gtFrames, prFrames, distThresh):
    assert (len(gtFrames) == len(prFrames))

    nJoints = Joint().count
    # part detection scores
    scoresAll = {}
    # positive / negative labels
    labelsAll = {}
    # number of annotated GT joints per image
    nGTall = np.zeros([nJoints, len(gtFrames)])
    for pidx in range(nJoints):
        scoresAll[pidx] = {}
        labelsAll[pidx] = {}
        for imgidx in range(len(gtFrames)):
            scoresAll[pidx][imgidx] = np.zeros([0, 0], dtype=np.float32)
            labelsAll[pidx][imgidx] = np.zeros([0, 0], dtype=np.int8)

    # GT track IDs
    trackidxGT = []
    # prediction track IDs
    trackidxPr = []
    # number of GT poses in each image
    nGTPeople = np.zeros((len(gtFrames), 1))
    # number of predicted poses in each image
    nPrPeople = np.zeros((len(gtFrames), 1))

    # container to save info for computing MOT metrics
    motAll = {}

    for imgidx in range(len(gtFrames)):
        # distance between predicted and GT joints
        dist = np.full((len(prFrames[imgidx]["annorect"]), len(gtFrames[imgidx]["annorect"]), nJoints), np.inf)
        # score of the predicted joint
        score = np.full((len(prFrames[imgidx]["annorect"]), nJoints), np.nan)
        # body joint prediction exist
        hasPr = np.zeros((len(prFrames[imgidx]["annorect"]), nJoints), dtype=bool)
        # body joint is annotated
        hasGT = np.zeros((len(gtFrames[imgidx]["annorect"]), nJoints), dtype=bool)

        trackidxGT = []
        trackidxPr = []
        idxsPr = []
        # keep only predicted poses that actually contain keypoints
        for ridxPr in range(len(prFrames[imgidx]["annorect"])):
            if (("annopoints" in prFrames[imgidx]["annorect"][ridxPr].keys()) and
                    ("point" in prFrames[imgidx]["annorect"][ridxPr]["annopoints"][0].keys())):
                idxsPr += [ridxPr]
        prFrames[imgidx]["annorect"] = [prFrames[imgidx]["annorect"][ridx] for ridx in idxsPr]

        nPrPeople[imgidx, 0] = len(prFrames[imgidx]["annorect"])
        nGTPeople[imgidx, 0] = len(gtFrames[imgidx]["annorect"])
        # iterate over GT poses
        for ridxGT in range(len(gtFrames[imgidx]["annorect"])):
            # GT pose
            rectGT = gtFrames[imgidx]["annorect"][ridxGT]
            if ("track_id" in rectGT.keys()):
                trackidxGT += [rectGT["track_id"][0]]
            pointsGT = []
            if len(rectGT["annopoints"]) > 0:
                pointsGT = rectGT["annopoints"][0]["point"]
            # iterate over all possible body joints
            for i in range(nJoints):
                # GT joint in LSP format
                ppGT = getPointGTbyID(pointsGT, i)
                if len(ppGT) > 0:
                    hasGT[ridxGT, i] = True

        # iterate over predicted poses
        for ridxPr in range(len(prFrames[imgidx]["annorect"])):
            # predicted pose
            rectPr = prFrames[imgidx]["annorect"][ridxPr]
            if ("track_id" in rectPr.keys()):
                trackidxPr += [rectPr["track_id"][0]]
            pointsPr = rectPr["annopoints"][0]["point"]
            for i in range(nJoints):
                # predicted joint in LSP format
                ppPr = getPointGTbyID(pointsPr, i)
                if len(ppPr) > 0:
                    assert "score" in ppPr.keys(), "keypoint score is missing"
                    score[ridxPr, i] = ppPr["score"][0]
                    hasPr[ridxPr, i] = True

        if len(prFrames[imgidx]["annorect"]) and len(gtFrames[imgidx]["annorect"]):
            # predictions and GT are present
            # iterate over GT poses to fill dist (nPr x nGT x nJoints)
            for ridxGT in range(len(gtFrames[imgidx]["annorect"])):
                # one GT pose in this image
                rectGT = gtFrames[imgidx]["annorect"][ridxGT]
                # compute reference distance as head size
                headSize = getHeadSize(rectGT["x1"][0], rectGT["y1"][0],
                                       rectGT["x2"][0], rectGT["y2"][0])
                pointsGT = []
                if len(rectGT["annopoints"]) > 0:
                    pointsGT = rectGT["annopoints"][0]["point"]
                # iterate over predicted poses
                for ridxPr in range(len(prFrames[imgidx]["annorect"])):
                    # predicted pose
                    rectPr = prFrames[imgidx]["annorect"][ridxPr]
                    pointsPr = rectPr["annopoints"][0]["point"]
                    # iterate over all possible body joints
                    for i in range(nJoints):
                        # GT joint
                        ppGT = getPointGTbyID(pointsGT, i)
                        # predicted joint
                        ppPr = getPointGTbyID(pointsPr, i)
                        # compute distance between predicted and GT joint locations
                        if hasPr[ridxPr, i] and hasGT[ridxGT, i]:
                            pointGT = [ppGT["x"][0], ppGT["y"][0]]
                            pointPr = [ppPr["x"][0], ppPr["y"][0]]
                            dist[ridxPr, ridxGT, i] = np.linalg.norm(np.subtract(pointGT, pointPr)) / headSize

            dist = np.array(dist)
            hasGT = np.array(hasGT)

            # number of annotated joints of each GT pose
            nGTp = np.sum(hasGT, axis=1)
            # True where the head-size-normalized distance is within the threshold
            match = dist <= distThresh
            # pck: nPr x nGT, number of matched joints per (prediction, GT) pair
            pck = 1.0 * np.sum(match, axis=2)
            for i in range(hasPr.shape[0]):
                for j in range(hasGT.shape[0]):
                    if nGTp[j] > 0:
                        # fraction of GT pose j's annotated joints matched by prediction i
                        pck[i, j] = pck[i, j] / nGTp[j]

            # preserve best GT match only
            idx = np.argmax(pck, axis=1)
            val = np.max(pck, axis=1)
            for ridxPr in range(pck.shape[0]):
                for ridxGT in range(pck.shape[1]):
                    if (ridxGT != idx[ridxPr]):
                        pck[ridxPr, ridxGT] = 0
            # for each GT pose, the prediction with the highest remaining PCK (-1 = none)
            prToGT = np.argmax(pck, axis=0)
            val = np.max(pck, axis=0)
            prToGT[val == 0] = -1

            # info to compute MOT metrics
            mot = {}
            for i in range(nJoints):
                mot[i] = {}

            # copy hasGT, hasPr and dist into mot, keeping distances only for matched joints
            for i in range(nJoints):
                # indices of GT poses that have joint i annotated
                ridxsGT = np.argwhere(hasGT[:, i] == True)
                ridxsGT = ridxsGT.flatten().tolist()
                ridxsPr = np.argwhere(hasPr[:, i] == True)
                ridxsPr = ridxsPr.flatten().tolist()
                #mot[i]["trackidxGT"] = [trackidxGT[idx] for idx in ridxsGT]
                #mot[i]["trackidxPr"] = [trackidxPr[idx] for idx in ridxsPr]
                mot[i]["ridxsGT"] = np.array(ridxsGT)
                mot[i]["ridxsPr"] = np.array(ridxsPr)
                mot[i]["dist"] = np.full((len(ridxsGT), len(ridxsPr)), np.nan)
                for iPr in range(len(ridxsPr)):
                    for iGT in range(len(ridxsGT)):
                        if (match[ridxsPr[iPr], ridxsGT[iGT], i]):
                            mot[i]["dist"][iGT, iPr] = dist[ridxsPr[iPr], ridxsGT[iGT], i]

            # assign predicted poses to GT poses
            for ridxPr in range(hasPr.shape[0]):
                if (ridxPr in prToGT):  # pose matches to GT
                    # GT pose that matches the predicted pose
                    ridxGT = np.argwhere(prToGT == ridxPr)
                    assert(ridxGT.size == 1)
                    ridxGT = ridxGT[0, 0]
                    s = score[ridxPr, :]
                    # squeeze removes singleton dimensions from the array shape
                    m = np.squeeze(match[ridxPr, ridxGT, :])
                    hp = hasPr[ridxPr, :]
                    for i in range(len(hp)):
                        if (hp[i]):
                            scoresAll[i][imgidx] = np.append(scoresAll[i][imgidx], s[i])
                            labelsAll[i][imgidx] = np.append(labelsAll[i][imgidx], m[i])
                else:  # no matching to GT
                    s = score[ridxPr, :]
                    m = np.zeros([match.shape[2], 1], dtype=bool)
                    hp = hasPr[ridxPr, :]
                    for i in range(len(hp)):
                        if (hp[i]):
                            scoresAll[i][imgidx] = np.append(scoresAll[i][imgidx], s[i])
                            labelsAll[i][imgidx] = np.append(labelsAll[i][imgidx], m[i])
        else:
            if not len(gtFrames[imgidx]["annorect"]):
                # No GT available. All predictions are false positives
                for ridxPr in range(hasPr.shape[0]):
                    s = score[ridxPr, :]
                    m = np.zeros([nJoints, 1], dtype=bool)
                    hp = hasPr[ridxPr, :]
                    for i in range(len(hp)):
                        if hp[i]:
                            scoresAll[i][imgidx] = np.append(scoresAll[i][imgidx], s[i])
                            labelsAll[i][imgidx] = np.append(labelsAll[i][imgidx], m[i])
            # nothing can be matched here; still define mot so motAll never gets a stale or undefined entry
            mot = {}
            for i in range(nJoints):
                ridxsGT = np.argwhere(hasGT[:, i]).flatten()
                ridxsPr = np.argwhere(hasPr[:, i]).flatten()
                mot[i] = {"ridxsGT": ridxsGT, "ridxsPr": ridxsPr,
                          "dist": np.full((len(ridxsGT), len(ridxsPr)), np.nan)}

        # save number of GT joints
        for ridxGT in range(hasGT.shape[0]):
            hg = hasGT[ridxGT, :]
            for i in range(len(hg)):
                nGTall[i, imgidx] += hg[i]

        motAll[imgidx] = mot

    return scoresAll, labelsAll, nGTall, motAll
```
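The core of assignGTmulti is its matching step. Joint distances are normalized by head size and thresholded at distThresh. They are then turned into a per-pair PCKh score and reduced to at most one prediction per GT pose. The self-contained sketch below reproduces only that step on plain arrays, under these assumptions:

- Joints are given as (nPoses, nJoints, 2) arrays with NaN for missing joints.
- Head size is 0.6 times the head-box diagonal (check getHeadSize in your copy of eval_helpers.py).
- match_poses and its argument layout are hypothetical.

```python
import numpy as np


def match_poses(pr_joints, gt_joints, gt_head_boxes, dist_thresh=0.5):
    """Sketch of the matching step in assignGTmulti (hypothetical helper).

    pr_joints: (nPr, nJoints, 2); gt_joints: (nGT, nJoints, 2); NaN marks a missing joint.
    gt_head_boxes: (nGT, 4) as x1, y1, x2, y2. The 0.6 head-size factor is an assumption.
    Returns match (nPr, nGT, nJoints) and prToGT (nGT,), where -1 means no prediction.
    """
    n_pr, n_gt = len(pr_joints), len(gt_joints)
    assert n_pr > 0 and n_gt > 0  # assignGTmulti only matches when both are present
    head_size = 0.6 * np.linalg.norm(gt_head_boxes[:, 2:] - gt_head_boxes[:, :2], axis=1)
    # dist[p, g, i]: distance of joint i normalized by the GT head size
    diff = pr_joints[:, None, :, :] - gt_joints[None, :, :, :]
    dist = np.linalg.norm(diff, axis=3) / head_size[None, :, None]
    dist = np.where(np.isnan(dist), np.inf, dist)  # missing joints never match (inf, as in the original)
    match = dist <= dist_thresh
    # PCKh per (prediction, GT) pair: matched joints / annotated joints of the GT pose
    n_annotated = np.sum(~np.isnan(gt_joints[..., 0]), axis=1)
    pck = match.sum(axis=2) / np.maximum(n_annotated, 1)[None, :]
    # preserve each prediction's best GT only
    best_gt = np.argmax(pck, axis=1)
    keep = np.zeros(pck.shape, dtype=bool)
    keep[np.arange(n_pr), best_gt] = True
    pck = np.where(keep, pck, 0.0)
    # each GT pose takes the prediction with the highest remaining PCKh
    prToGT = np.argmax(pck, axis=0)
    prToGT[np.max(pck, axis=0) == 0] = -1
    return match, prToGT


# Two GT poses (the second has joint 1 missing) and two predictions, in swapped order
gt = np.array([[[10.0, 10.0], [20.0, 10.0]],
               [[100.0, 100.0], [np.nan, np.nan]]])
boxes = np.array([[0.0, 0.0, 30.0, 40.0],        # diagonal 50 -> head size 30
                  [90.0, 90.0, 120.0, 130.0]])   # diagonal 50 -> head size 30
pr = np.array([[[101.0, 100.0], [50.0, 50.0]],
               [[11.0, 10.0], [21.0, 12.0]]])
match, prToGT = match_poses(pr, gt, boxes)
```

In assignGTmulti terms, prToGT[g] == p means that prediction p is assigned to GT pose g, and match[p, g, i] becomes the label of joint i. Predictions that are not assigned get label 0 for every joint.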