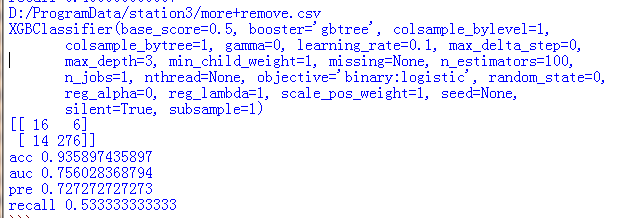

此次我做的实验是二分类问题,输出precision,recall,accuracy,auc

# -*- coding: utf-8 -*- #from sklearn.neighbors import import numpy as np from pandas import read_csv import pandas as pd import sys import importlib from sklearn.neighbors import KNeighborsClassifier from sklearn.ensemble import GradientBoostingClassifier from sklearn import svm from sklearn import cross_validation from sklearn.metrics import hamming_loss from sklearn import metrics importlib.reload(sys) from sklearn.linear_model import LogisticRegression from imblearn.combine import SMOTEENN from sklearn.tree import DecisionTreeClassifier from sklearn.ensemble import RandomForestClassifier #92% from sklearn import tree from xgboost.sklearn import XGBClassifier from sklearn.linear_model import SGDClassifier from sklearn import neighbors from sklearn.naive_bayes import BernoulliNB import matplotlib as mpl import matplotlib.pyplot as plt from sklearn.metrics import confusion_matrix from numpy import mat def metrics_result(actual, predict): print('准确度:{0:.3f}'.format(metrics.accuracy_score(actual, predict))) print('精密度:{0:.3f}'.format(metrics.precision_score(actual, predict,average='weighted'))) print('召回:{0:0.3f}'.format(metrics.recall_score(actual, predict,average='weighted'))) print('f1-score:{0:.3f}'.format(metrics.f1_score(actual, predict,average='weighted'))) print('auc:{0:.3f}'.format(metrics.roc_auc_score(test_y, predict)))

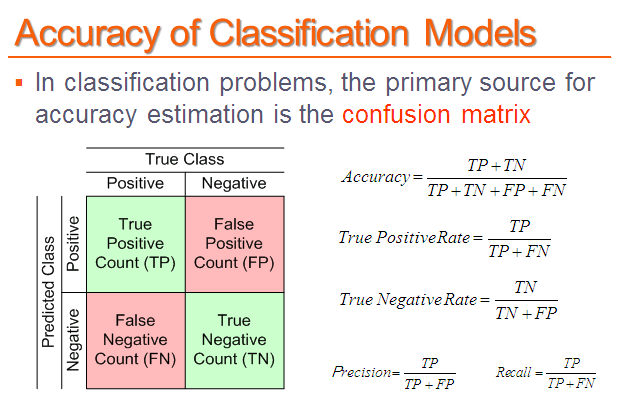

输出混淆矩阵

matr=confusion_matrix(test_y,predict) matr=mat(matr) conf=np.matrix([[0,0],[0,0]]) conf[0,0]=matr[1,1] conf[1,0]=matr[1,0] conf[0,1]=matr[0,1] conf[1,1]=matr[0,0] print(conf)

全代码:

# -*- coding: utf-8 -*- #from sklearn.neighbors import import numpy as np from pandas import read_csv import pandas as pd import sys import importlib from sklearn.neighbors import KNeighborsClassifier from sklearn.ensemble import GradientBoostingClassifier from sklearn import svm from sklearn import cross_validation from sklearn.metrics import hamming_loss from sklearn import metrics importlib.reload(sys) from sklearn.linear_model import LogisticRegression from imblearn.combine import SMOTEENN from sklearn.tree import DecisionTreeClassifier from sklearn.ensemble import RandomForestClassifier #92% from sklearn import tree from xgboost.sklearn import XGBClassifier from sklearn.linear_model import SGDClassifier from sklearn import neighbors from sklearn.naive_bayes import BernoulliNB import matplotlib as mpl import matplotlib.pyplot as plt from sklearn.metrics import confusion_matrix from numpy import mat def metrics_result(actual, predict): print('准确度:{0:.3f}'.format(metrics.accuracy_score(actual, predict))) print('精密度:{0:.3f}'.format(metrics.precision_score(actual, predict,average='weighted'))) print('召回:{0:0.3f}'.format(metrics.recall_score(actual, predict,average='weighted'))) print('f1-score:{0:.3f}'.format(metrics.f1_score(actual, predict,average='weighted'))) print('auc:{0:.3f}'.format(metrics.roc_auc_score(test_y, predict))) '''分类0-1''' root1="D:/ProgramData/station3/10.csv" root2="D:/ProgramData/station3/more+average2.csv" root3="D:/ProgramData/station3/new_10.csv" root4="D:/ProgramData/station3/more+remove.csv" root5="D:/ProgramData/station3/new_10 2.csv" root6="D:/ProgramData/station3/new10.csv" root7="D:/ProgramData/station3/no_-999.csv" root=root4 data1 = read_csv(root) #数据转化为数组 data1=data1.values print(root) time=1 accuracy=[] aucc=[] pre=[] recall=[] for i in range(time): train, test= cross_validation.train_test_split(data1, test_size=0.2, random_state=i) test_x=test[:,:-1] test_y=test[:,-1] train_x=train[:,:-1] train_y=train[:,-1] # ============================================================================= # print(train_x.shape) # print(train_y.shape) # print(test_x.shape) # print(test_y.shape) # print(type(train_x)) # ============================================================================= #X_Train=train_x #Y_Train=train_y X_Train, Y_Train = SMOTEENN().fit_sample(train_x, train_y) #clf = RandomForestClassifier() #82 #clf = LogisticRegression() #82 #penalty=’l2’, dual=False, tol=0.0001, C=1.0, fit_intercept=True, intercept_scaling=1, class_weight=None, random_state=None, solver=’liblinear’, max_iter=100, multi_class=’ovr’, verbose=0, warm_start=False, n_jobs=1 #clf=svm.SVC() clf= XGBClassifier() #from sklearn.ensemble import RandomForestClassifier #92% #clf = DecisionTreeClassifier() #clf = GradientBoostingClassifier() #clf=neighbors.KNeighborsClassifier() #clf=BernoulliNB() print(clf) clf.fit(X_Train, Y_Train) predict=clf.predict(test_x) matr=confusion_matrix(test_y,predict) matr=mat(matr) conf=np.matrix([[0,0],[0,0]]) conf[0,0]=matr[1,1] conf[1,0]=matr[1,0] conf[0,1]=matr[0,1] conf[1,1]=matr[0,0] print(conf) #a=metrics_result(test_y, predict) #a=metrics_result(test_y,predict) '''accuracy''' aa=metrics.accuracy_score(test_y, predict) #print(metrics.accuracy_score(test_y, predict)) accuracy.append(aa) '''auc''' bb=metrics.roc_auc_score(test_y, predict, average=None) aucc.append(bb) '''precision''' cc=metrics.precision_score(test_y, predict, average=None) pre.append(cc[1]) # ============================================================================= # print('cc') # print(type(cc)) # print(cc[1]) # print('cc') # ============================================================================= '''recall''' dd=metrics.recall_score(test_y, predict, average=None) #print(metrics.recall_score(test_y, predict,average='weighted')) recall.append(dd[1]) f=open('D:ProgramDatastation3predict.txt', 'w') for i in range(len(predict)): f.write(str(predict[i])) f.write(' ') f.write("写好了") f.close() f=open('D:ProgramDatastation3y_.txt', 'w') for i in range(len(predict)): f.write(str(test_y[i])) f.write(' ') f.write("写好了") f.close() # ============================================================================= # f=open('D:/ProgramData/station3/predict.txt', 'w') # for i in range(len(predict)): # f.write(str(predict[i])) # f.write(' ') # f.write("写好了") # f.close() # # f=open('D:/ProgramData/station3/y.txt', 'w') # for i in range(len(test_y)): # f.write(str(test_y[i])) # f.write(' ') # f.write("写好了") # f.close() # # ============================================================================= # ============================================================================= # print('调用函数auc:', metrics.roc_auc_score(test_y, predict, average='micro')) # # fpr, tpr, thresholds = metrics.roc_curve(test_y.ravel(),predict.ravel()) # auc = metrics.auc(fpr, tpr) # print('手动计算auc:', auc) # #绘图 # mpl.rcParams['font.sans-serif'] = u'SimHei' # mpl.rcParams['axes.unicode_minus'] = False # #FPR就是横坐标,TPR就是纵坐标 # plt.plot(fpr, tpr, c = 'r', lw = 2, alpha = 0.7, label = u'AUC=%.3f' % auc) # plt.plot((0, 1), (0, 1), c = '#808080', lw = 1, ls = '--', alpha = 0.7) # plt.xlim((-0.01, 1.02)) # plt.ylim((-0.01, 1.02)) # plt.xticks(np.arange(0, 1.1, 0.1)) # plt.yticks(np.arange(0, 1.1, 0.1)) # plt.xlabel('False Positive Rate', fontsize=13) # plt.ylabel('True Positive Rate', fontsize=13) # plt.grid(b=True, ls=':') # plt.legend(loc='lower right', fancybox=True, framealpha=0.8, fontsize=12) # plt.title(u'大类问题一分类后的ROC和AUC', fontsize=17) # plt.show() # ============================================================================= sum_acc=0 sum_auc=0 sum_pre=0 sum_recall=0 for i in range(time): sum_acc+=accuracy[i] sum_auc+=aucc[i] sum_pre+=pre[i] sum_recall+=recall[i] acc1=sum_acc*1.0/time auc1=sum_auc*1.0/time pre1=sum_pre*1.0/time recall1=sum_recall*1.0/time print("acc",acc1) print("auc",auc1) print("pre",pre1) print("recall",recall1) # ============================================================================= # # data1 = read_csv(root2) #数据转化为数组 # data1=data1.values # # # accuracy=[] # auc=[] # pre=[] # recall=[] # for i in range(30): # train, test= cross_validation.train_test_split(data1, test_size=0.2, random_state=i) # test_x=test[:,:-1] # test_y=test[:,-1] # train_x=train[:,:-1] # train_y=train[:,-1] # X_Train, Y_Train = SMOTEENN().fit_sample(train_x, train_y) # # #clf = RandomForestClassifier() #82 # clf = LogisticRegression() #82 # #clf=svm.SVC() # #clf= XGBClassifier() # #from sklearn.ensemble import RandomForestClassifier #92% # #clf = DecisionTreeClassifier() # #clf = GradientBoostingClassifier() # # #clf=neighbors.KNeighborsClassifier() 65.25% # #clf=BernoulliNB() # clf.fit(X_Train, Y_Train) # predict=clf.predict(test_x) # # '''accuracy''' # aa=metrics.accuracy_score(test_y, predict) # accuracy.append(aa) # # '''auc''' # aa=metrics.roc_auc_score(test_y, predict) # auc.append(aa) # # '''precision''' # aa=metrics.precision_score(test_y, predict,average='weighted') # pre.append(aa) # # '''recall''' # aa=metrics.recall_score(test_y, predict,average='weighted') # recall.append(aa) # # # sum_acc=0 # sum_auc=0 # sum_pre=0 # sum_recall=0 # for i in range(30): # sum_acc+=accuracy[i] # sum_auc+=auc[i] # sum_pre+=pre[i] # sum_recall+=recall[i] # # acc1=sum_acc*1.0/30 # auc1=sum_auc*1.0/30 # pre1=sum_pre*1.0/30 # recall1=sum_recall*1.0/30 # print("more 的 acc:", acc1) # print("more 的 auc:", auc1) # print("more 的 precision:", pre1) # print("more 的 recall:", recall1) # # ============================================================================= #X_train, X_test, y_train, y_test = cross_validation.train_test_split(X_Train,Y_Train, test_size=0.2, random_state=i)

输出结果:

UEditor的使用方法

MVC 生成PDf表格并插入图片

简单行列转换记录

此数据库没有有效所有者“的解决,我很受用

ASP.NET MVC3 使用kindeditor编辑器获取不到值

c#读写文件

VB 中Sub和Function的区别

问题集

hadoop2.7.2运行例子时报错