Reference video: Morvan Python (莫烦python) https://mofanpy.com/tutorials/python-basic/multiprocessing/why/

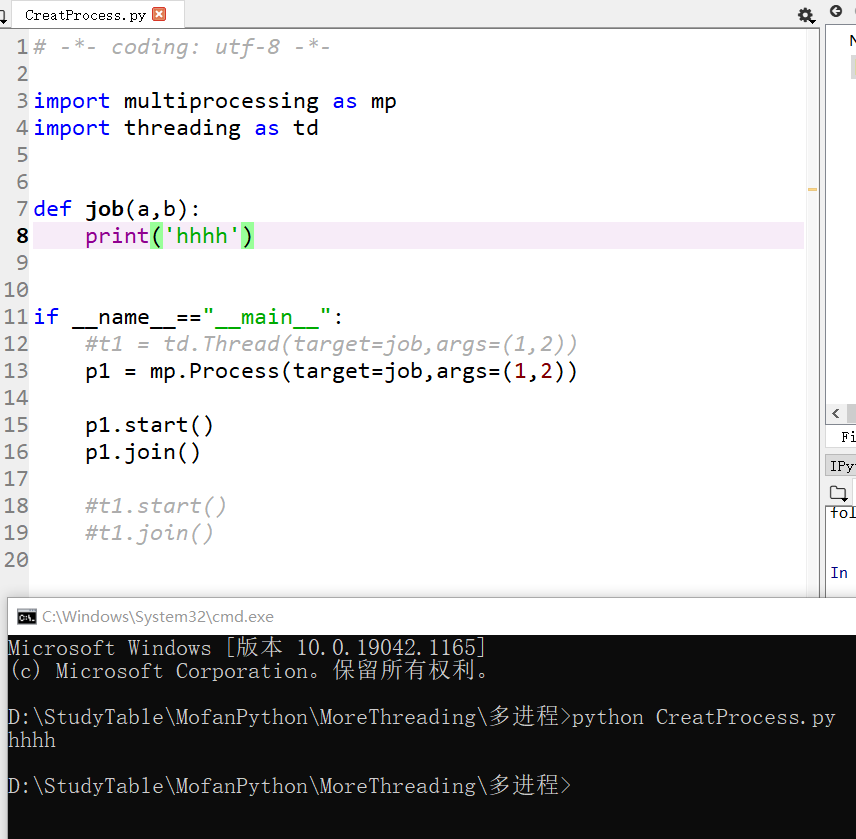

1. Creating a process

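```python
# -*- coding: utf-8 -*-
import multiprocessing as mp
import threading as td


def job(a, b):
    print('hhhh')


# The __main__ guard is required when processes are started with "spawn"
# (the default on Windows and macOS)
if __name__ == "__main__":
    # A thread is created the same way:
    # t1 = td.Thread(target=job, args=(1, 2))
    p1 = mp.Process(target=job, args=(1, 2))  # target function and its arguments
    p1.start()  # launch the child process
    p1.join()   # wait for it to finish
    # t1.start()
    # t1.join()
```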
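The following is a minimal sketch that extends the example above to several processes. The helper `run_workers` and the process names are illustrative and do not come from the tutorial. It starts every process before joining any of them, because calling `join()` right after each `start()` would make the processes run one after another. `Process.exitcode` is `0` when the target returned normally.

```python
import multiprocessing as mp
import os


def job(a, b):
    # Runs in the child process; os.getpid() differs from the parent's PID
    print(f"child pid={os.getpid()}, a+b={a + b}")


def run_workers(n):
    """Start n processes, wait for all of them, and return their exit codes."""
    procs = [mp.Process(target=job, args=(i, i * 10), name=f"worker-{i}")
             for i in range(n)]
    for p in procs:
        p.start()  # start all first so they can run concurrently
    for p in procs:
        p.join()   # then wait for each one to finish
    return [p.exitcode for p in procs]  # 0 means the child exited normally


if __name__ == "__main__":
    print("parent pid:", os.getpid())
    print("exit codes:", run_workers(3))
```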

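2. Getting results from a process with a Queue

The return value of a process's target function is not passed back to the parent. Instead, each process puts its result on a `multiprocessing.Queue`, and the parent reads it with `get()`.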

```python
# -*- coding: utf-8 -*-
import multiprocessing as mp


def job(q):
    res = 0
    for i in range(10):
        res += i + i**2 + i**3
    q.put(res)  # send the result back to the parent through the queue


if __name__ == "__main__":
    q = mp.Queue()
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    res1 = q.get()
    res2 = q.get()
    print("res1", res1)
    print("res2", res2)
```

res1 2355

res2 2355
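Both results above are 2355 because both processes run the same job. In general, though, `Queue` items arrive in completion order, not in the order the processes were started. The sketch below tags each result with a worker id; the `worker_id` and `n` parameters are hypothetical additions. It also drains the queue before calling `join()`. The `multiprocessing` docs warn that a process which has put items on a queue may not terminate until those items are consumed, so joining first can deadlock when the results are large.

```python
import multiprocessing as mp


def job(worker_id, n, q):
    # Same formula as above; n is a hypothetical parameter for the loop size
    res = sum(i + i**2 + i**3 for i in range(n))
    q.put((worker_id, res))  # tag the result so the parent knows who sent it


def collect(sizes):
    q = mp.Queue()
    procs = [mp.Process(target=job, args=(wid, n, q))
             for wid, n in enumerate(sizes)]
    for p in procs:
        p.start()
    # Drain the queue before join(): a child that has put data on a Queue
    # may not terminate until that data has been consumed.
    results = dict(q.get() for _ in procs)
    for p in procs:
        p.join()
    return [results[wid] for wid in range(len(sizes))]  # back in start order


if __name__ == "__main__":
    print(collect([10, 5]))  # [2355, 140]
```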

3. Multithreading vs. multiprocessing performance

```python
# -*- coding: utf-8 -*-
import multiprocessing as mp
import threading as td
import time
from queue import Queue


def job(q):
    res = 0
    for i in range(10000000):
        res += i + i**2 + i**3
    q.put(res)  # queue


def multicore():
    # Two processes; results come back through a multiprocessing Queue
    q = mp.Queue()
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    res1 = q.get()
    res2 = q.get()
    print("multiprocess:", res1 + res2)


def multithread():
    # Two threads; the thread-safe queue.Queue is enough inside one process
    q = Queue()
    t1 = td.Thread(target=job, args=(q,))
    t2 = td.Thread(target=job, args=(q,))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    res1 = q.get()
    res2 = q.get()
    print("multithread:", res1 + res2)


def normal():
    # Sequential baseline: the same work done twice in one thread
    res = 0
    for _ in range(2):
        for i in range(10000000):
            res += i + i**2 + i**3
    print("normal:", res)


if __name__ == "__main__":
    st = time.time()
    normal()
    st1 = time.time()
    print('normal time:', st1 - st)
    multithread()
    st2 = time.time()
    print('multithread time:', st2 - st1)
    multicore()
    print('multicore time:', time.time() - st2)
```

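The output below was produced by a run that called the threaded version twice and never ran the process-based version. That is why the third result is labelled `multithread:` and why the "multicore time" is really a second multithreading timing. The first two timings still show the expected effect. In CPython the GIL lets only one thread execute Python bytecode at a time, so two threads running this CPU-bound loop are no faster than the sequential run.

normal: 4999999666666716666660000000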

normal time: 13.963502645492554

multithread: 4999999666666716666660000000

multithread time: 14.728559017181396

multithread: 4999999666666716666660000000

multicore time: 13.920192003250122

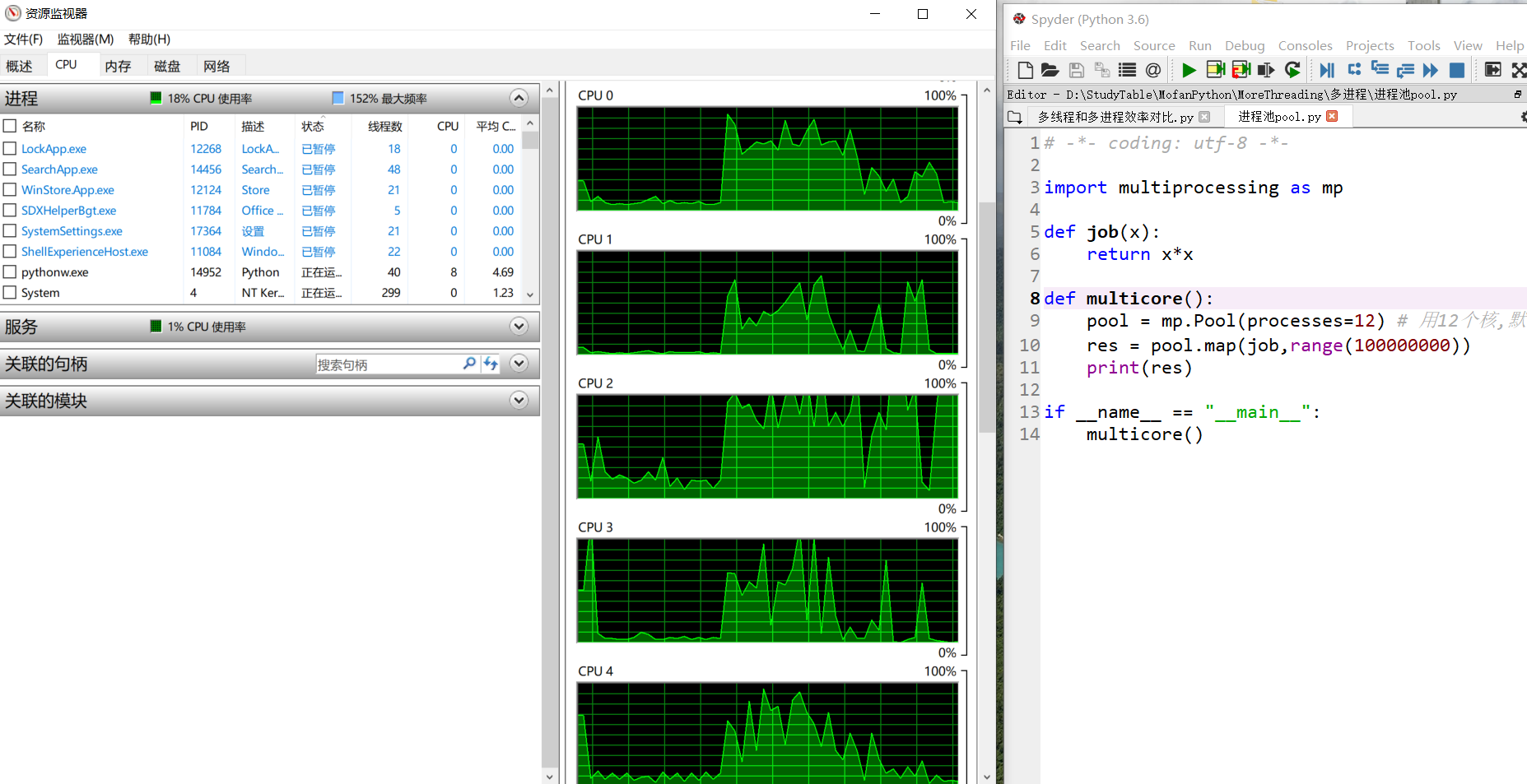
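Here is a compact version of the same comparison, sketched with `multiprocessing.Pool` and `multiprocessing.pool.ThreadPool` instead of hand-made processes and threads. The `N` value and the `timed`/`compare` helpers are assumptions, chosen so that the sketch finishes quickly. On a machine with at least two idle cores, the process pool typically takes about half the sequential time, while the thread pool gives no speedup. Exact numbers depend on the machine, so none are shown.

```python
import multiprocessing as mp
import time
from multiprocessing.pool import ThreadPool

N = 2_000_000  # smaller than the 10_000_000 above so the sketch runs quickly


def job(n):
    # CPU-bound pure-Python loop, same formula as above
    res = 0
    for i in range(n):
        res += i + i**2 + i**3
    return res


def timed(label, fn):
    start = time.perf_counter()
    total = fn()
    print(f"{label}: {total} ({time.perf_counter() - start:.2f} s)")
    return total


def compare(n=N):
    normal = timed("normal", lambda: job(n) + job(n))
    with ThreadPool(2) as tp:  # two threads in this process
        threads = timed("multithread", lambda: sum(tp.map(job, [n, n])))
    with mp.Pool(2) as pp:     # two worker processes
        procs = timed("multiprocess", lambda: sum(pp.map(job, [n, n])))
    return normal, threads, procs


if __name__ == "__main__":
    compare()
```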

4. Process pool (Pool)

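```python
# -*- coding: utf-8 -*-
import multiprocessing as mp


def job(x):
    return x * x


def multicore():
    # Use 12 processes; by default Pool uses all cores (os.cpu_count())
    with mp.Pool(processes=12) as pool:
        res = pool.map(job, range(10))  # blocks; results come back in input order
    print(res)


if __name__ == "__main__":
    multicore()
```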
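`pool.map` builds the full result list before returning, which gets expensive for very large inputs. The sketch below uses `Pool.imap` instead, which yields results lazily in input order, and passes a `chunksize` to batch tasks. The `sum_of_squares` helper and the chunk size of 10,000 are assumptions, not tuned values.

```python
import multiprocessing as mp


def job(x):
    return x * x


def sum_of_squares(n, chunksize=10_000):
    # imap yields results lazily and in input order, so the n results are
    # never held in one list; chunksize batches tasks to cut IPC overhead
    with mp.Pool() as pool:  # processes=None -> os.cpu_count() workers
        return sum(pool.imap(job, range(n), chunksize=chunksize))


if __name__ == "__main__":
    print(sum_of_squares(1_000_000))
```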

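```python
# -*- coding: utf-8 -*-
import multiprocessing as mp


def job(x):
    return x * x


def multicore():
    with mp.Pool() as pool:
        # One process: each apply_async call computes one result in one process
        res = pool.apply_async(job, (2,))
        print(res.get())  # get() blocks until the result is ready
        # Many processes: submit several calls, spread across the pool's workers
        multi_res = [pool.apply_async(job, (i,)) for i in range(10)]
        print([res.get() for res in multi_res])


if __name__ == "__main__":
    multicore()
```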

4

[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
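If a task raises an exception inside a worker, `AsyncResult.get()` re-raises that exception in the parent. The sketch below shows this. The negative-input check, the 4-process pool and the 10-second timeout are illustrative assumptions.

```python
import multiprocessing as mp


def job(x):
    if x < 0:
        raise ValueError(f"negative input: {x}")
    return x * x


def run(inputs):
    results, errors = [], []
    with mp.Pool(processes=4) as pool:
        pending = [pool.apply_async(job, (x,)) for x in inputs]  # submit all
        for r in pending:
            try:
                # get() waits for this task; an exception raised in the
                # worker is re-raised here in the parent process
                results.append(r.get(timeout=10))
            except ValueError as exc:
                errors.append(str(exc))
    return results, errors


if __name__ == "__main__":
    print(run([1, 2, -3, 4]))  # ([1, 4, 16], ['negative input: -3'])
```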

5. Shared memory

```python
# -*- coding: utf-8 -*-
import multiprocessing as mp

value = mp.Value('d', 1)          # type code: 'i' means int, 'd' means double (float) ...
array = mp.Array('i', [1, 2, 3])  # can only be one-dimensional
```
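The two lines above only create the shared objects. They become useful once they are passed to child processes as arguments. In the sketch below, two processes write disjoint halves of an `Array`, and a third process updates a `Value` through its built-in lock. The `fill`, `scale` and `demo` helpers are hypothetical. Because `Array` is one-dimensional, a 2-D table would have to be stored flattened, for example at index `row * ncols + col`.

```python
import multiprocessing as mp


def fill(arr, start, stop):
    # Each worker writes a disjoint slice, so no lock is needed here
    for i in range(start, stop):
        arr[i] = i * i


def scale(val, factor):
    with val.get_lock():  # Value carries its own lock (lock=True by default)
        val.value *= factor


def demo():
    arr = mp.Array('i', 6)    # six ints, zero-initialised
    val = mp.Value('d', 1.0)  # one shared double
    procs = [
        mp.Process(target=fill, args=(arr, 0, 3)),
        mp.Process(target=fill, args=(arr, 3, 6)),
        mp.Process(target=scale, args=(val, 2.5)),
    ]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return arr[:], val.value  # arr[:] copies the shared array into a list


if __name__ == "__main__":
    print(demo())  # ([0, 1, 4, 9, 16, 25], 2.5)
```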

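6. Lock

Without a lock, multiple processes can race on the same variable in shared memory. `v.value += num` is a read, an addition and a write, and two processes can interleave these steps so that one update overwrites the other.

Without a lock: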

```python
# -*- coding: utf-8 -*-
import multiprocessing as mp
import time


def job(v, num):
    for _ in range(10):
        time.sleep(0.1)
        v.value += num
        print(v.value)


def multicore():
    v = mp.Value('i', 0)
    p1 = mp.Process(target=job, args=(v, 1))
    p2 = mp.Process(target=job, args=(v, 3))
    p1.start()
    p2.start()
    p1.join()
    p2.join()


if __name__ == "__main__":
    multicore()
```

With a lock:

```python
# -*- coding: utf-8 -*-
import multiprocessing as mp
import time


def job(v, num, l):
    with l:  # acquire the lock; it is released when the block exits
        for _ in range(10):
            time.sleep(0.1)
            v.value += num
            print(v.value)


def multicore():
    l = mp.Lock()  # one lock shared by both processes
    v = mp.Value('i', 0)
    p1 = mp.Process(target=job, args=(v, 1, l))
    p2 = mp.Process(target=job, args=(v, 3, l))
    p1.start()
    p2.start()
    p1.join()
    p2.join()


if __name__ == "__main__":
    multicore()
```

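The output below alternates between the two processes (+1, +3, +1, +3, ...). That interleaving can only come from the version without a lock. With the lock held for the whole loop, one process finishes all ten additions before the other starts. The expected output is then 1, 2, ..., 10 followed by 13, 16, ..., 40, or 3, 6, ..., 30 followed by 31, 32, ..., 40 if `p2` gets the lock first.

```text
1
4
5
8
9
12
13
16
17
20
21
24
25
28
29
32
33
36
37
40
```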
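Holding the lock around the whole loop, as above, makes the two processes run one after the other. The sketch below is a finer-grained alternative, assuming that only the update itself needs protecting. It locks each `+=` with the lock that `mp.Value` creates by default (`get_lock()`), so the processes can interleave without losing updates. The `add` and `total` names and the 1000 iterations are illustrative.

```python
import multiprocessing as mp


def add(v, num, times):
    for _ in range(times):
        # get_lock() returns the lock mp.Value creates by default; holding it
        # makes the read-modify-write of v.value atomic
        with v.get_lock():
            v.value += num


def total(times=1000):
    v = mp.Value('i', 0)
    p1 = mp.Process(target=add, args=(v, 1, times))
    p2 = mp.Process(target=add, args=(v, 3, times))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    return v.value


if __name__ == "__main__":
    print(total())  # 4000: 1000 * 1 + 1000 * 3, no increments lost
```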