1. Troubleshooting K8S clusters deployed with kubeadm

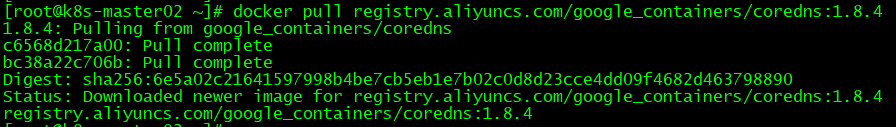

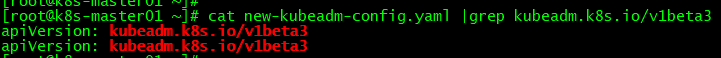

Problem 1: kubeadm fails to initialize master01

[root@k8s-master01 ~]# kubeadm init --config=new-kubeadm-config.yaml --upload-certs |tee kubeadm-init.log

W0819 14:05:46.581489 81698 strict.go:47] unknown configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta3", Kind:"InitConfiguration"} for scheme definitions in "k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/scheme/scheme.go:31" and "k8s.io/kubernetes/cmd/kubeadm/app/componentconfigs/scheme.go:28"

no kind "InitConfiguration" is registered for version "kubeadm.k8s.io/v1beta3" in scheme "k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/scheme/scheme.go:31"

To see the stack trace of this error execute with --v=5 or higher

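Cause: the apiVersion in the YAML file is wrong. kubeadm.k8s.io/v1beta3 only exists from kubeadm v1.22 onward, so the installed (older) kubeadm has no InitConfiguration registered for it.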

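Solution: change apiVersion in the YAML file to a version the installed kubeadm supports, for example kubeadm.k8s.io/v1beta2 for kubeadm v1.15 to v1.21. Running "kubeadm config print init-defaults" prints a default config with the apiVersion that the installed kubeadm expects.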
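The version check can be sketched as a small shell function. This is a hypothetical helper, not part of kubeadm, and the version ranges in it are assumptions stated in the comments (v1beta1 from 1.13, v1beta2 from 1.15, v1beta3 from 1.22); for very new releases, check the kubeadm configuration reference.

```shell
# Hypothetical helper (not part of kubeadm): map a kubeadm version string such
# as "v1.20.0" (the format printed by `kubeadm version -o short`) to a config
# apiVersion that release should accept. Assumed ranges: v1beta1 for 1.13-1.14,
# v1beta2 for 1.15-1.21, v1beta3 from 1.22 on.
kubeadm_api_version() {
  local ver="${1#v}"          # "1.20.0"
  local major="${ver%%.*}"    # "1"
  local rest="${ver#*.}"      # "20.0"
  local minor="${rest%%.*}"   # "20"
  if [ "$major" != "1" ] || [ "$minor" -lt 13 ]; then
    echo "unsupported kubeadm version: $1" >&2
    return 1
  fi
  if [ "$minor" -ge 22 ]; then
    echo "kubeadm.k8s.io/v1beta3"
  elif [ "$minor" -ge 15 ]; then
    echo "kubeadm.k8s.io/v1beta2"
  else
    echo "kubeadm.k8s.io/v1beta1"
  fi
}
```

On a master node it could be used as kubeadm_api_version "$(kubeadm version -o short)" before editing new-kubeadm-config.yaml.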

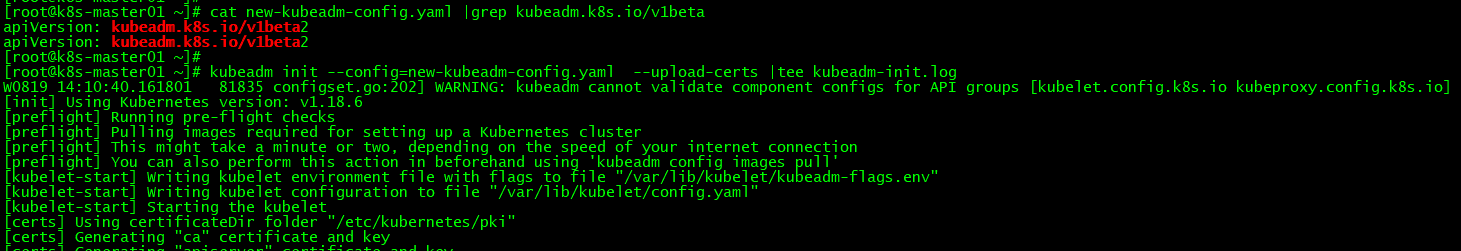

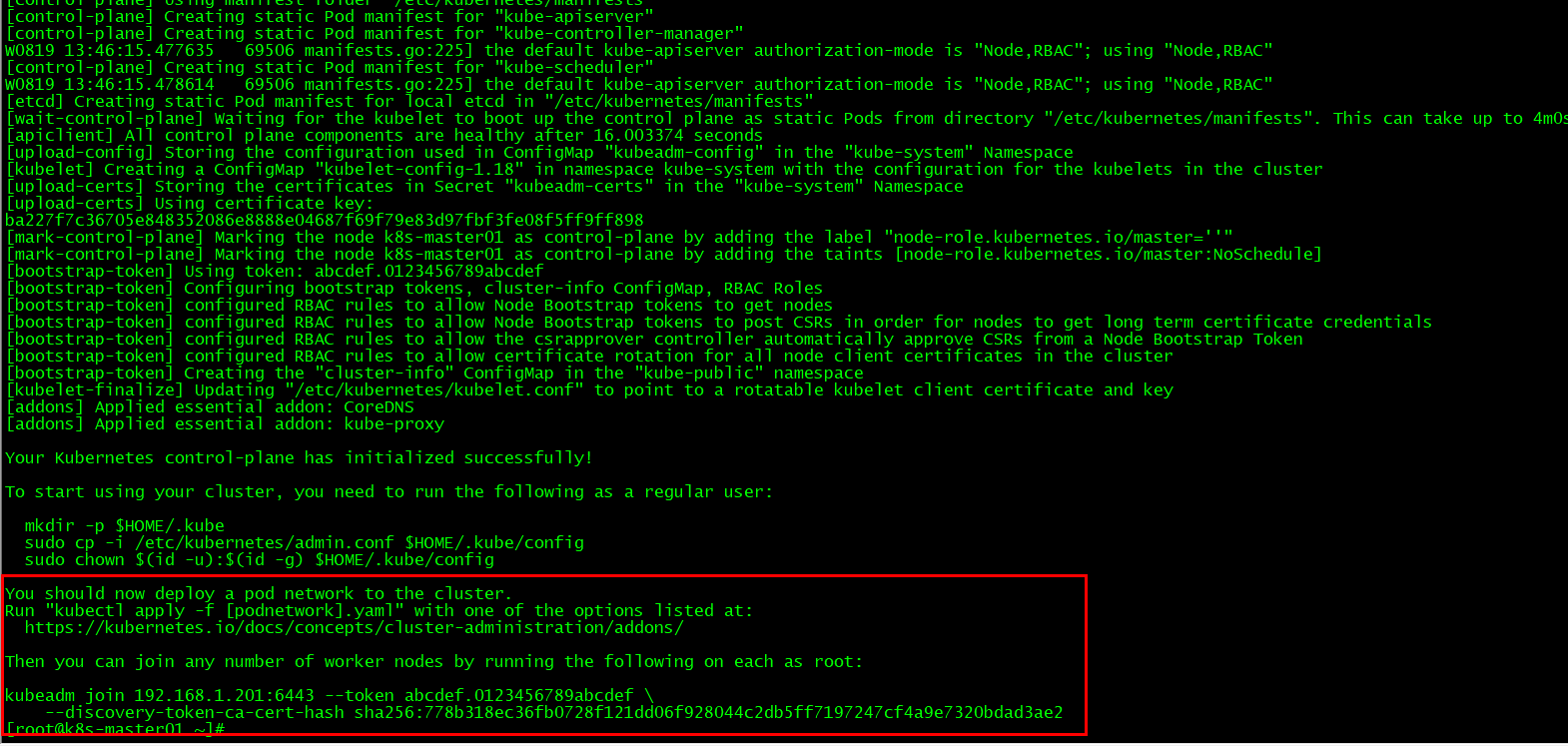

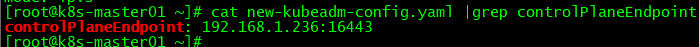

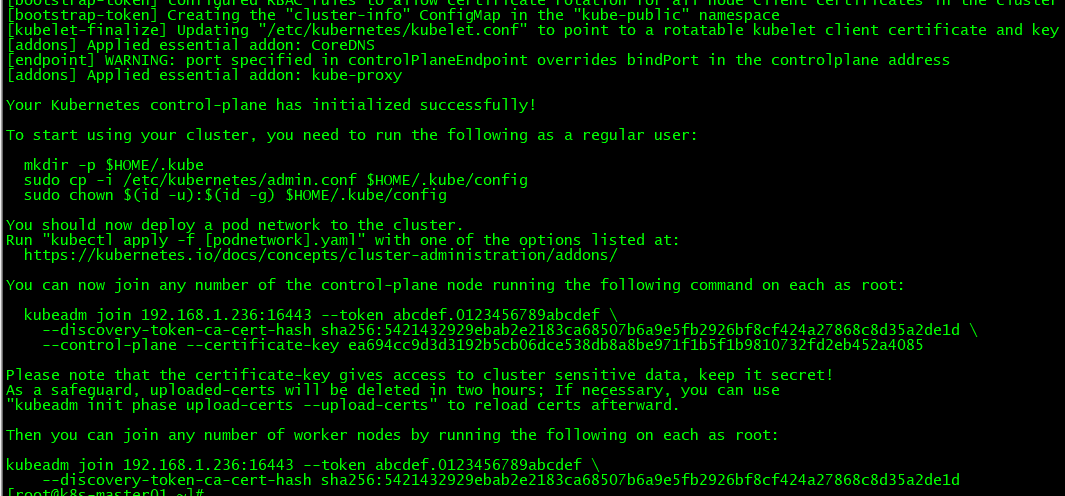

Problem 2: kubeadm initializes master01 successfully, but the join command for additional master nodes is not printed

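Cause: the YAML file used to initialize the master is incomplete: it does not set controlPlaneEndpoint, the stable address shared by all control-plane nodes.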

Solution: in a YAML file that matches your K8S version, add "controlPlaneEndpoint: 192.168.1.236:16443" and initialize again.
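A minimal sketch of such a config, written from the shell. The function name is hypothetical, and the file assumes kubeadm v1.22 or later (apiVersion kubeadm.k8s.io/v1beta3; older releases need the version discussed in Problem 1). A full kubeadm-config.yaml usually also contains an InitConfiguration document, which is omitted here.

```shell
# Hypothetical helper: write a minimal ClusterConfiguration that sets
# controlPlaneEndpoint, so that `kubeadm init --upload-certs` also prints the
# join command for additional control-plane nodes.
# Assumes kubeadm >= 1.22 (apiVersion kubeadm.k8s.io/v1beta3).
write_cluster_config() {
  local file="$1" endpoint="$2" k8s_version="$3"
  cat > "$file" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: ${k8s_version}
controlPlaneEndpoint: "${endpoint}"
EOF
}
```

For example: write_cluster_config new-kubeadm-config.yaml 192.168.1.236:16443 v1.22.0, then kubeadm init --config=new-kubeadm-config.yaml --upload-certs.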

Problem 3: when deploying a K8S cluster with kubeadm, a node hangs and then fails while joining the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.1.201:6443 --token 1qo7ms.7atall1jcecf10qz --discovery-token-ca-cert-hash sha256:d1d102ceb6241a3617777f6156cd4e86dc9f9edd9e1d6d73266d6ca7f6280890

[preflight] Running pre-flight checks

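Cause: after the master node was initialized, some components were unhealthy (see below), and the clocks of the cluster nodes were not synchronized.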

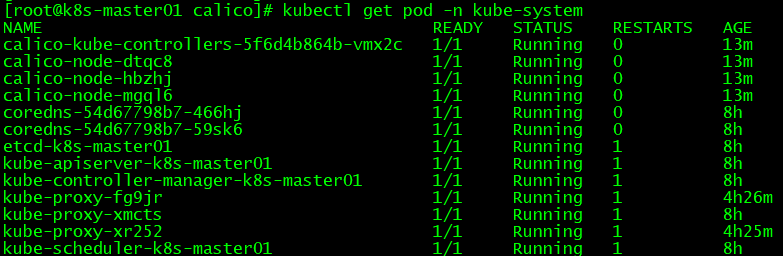

[root@k8s-master01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-54d67798b7-28w5q 0/1 Pending 0 3m39s

coredns-54d67798b7-sxqpm 0/1 Pending 0 3m39s

etcd-k8s-master01 1/1 Running 0 3m53s

kube-apiserver-k8s-master01 1/1 Running 0 3m53s

kube-controller-manager-k8s-master01 1/1 Running 0 3m53s

kube-proxy-rvj6w 0/1 CrashLoopBackOff 5 3m40s

kube-scheduler-k8s-master01 1/1 Running 0 3m53s
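To pick out the unhealthy pods in output like the above, a short awk filter is enough. This is a hypothetical helper that assumes the default kubectl get pod columns without -A (NAME READY STATUS RESTARTS AGE); it prints every pod that is not Running or not fully ready.

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin and print the
# pods that are not Running or whose READY count is incomplete.
# Assumed columns: NAME READY STATUS RESTARTS AGE (no -A / namespace column).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")                        # READY is "ready/total"
    if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
  }'
}
```

Usage: kubectl get pod -n kube-system | unhealthy_pods. For the output above it would list the two Pending coredns pods and the kube-proxy pod in CrashLoopBackOff.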

Solution: synchronize the clocks, re-initialize the master node, and then join the other nodes again.
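Before re-initializing, the clock offset between two nodes can be checked. The sketch below is a hypothetical helper that only compares two Unix timestamps against a tolerance; collecting the remote timestamp over SSH (as in the usage line) assumes password-less SSH between nodes, and SSH latency adds a little to the measured skew.

```shell
# Hypothetical helper: succeed if two Unix timestamps (from `date +%s` on two
# nodes) differ by at most MAX_SKEW seconds (default 1). Prints the skew.
clock_skew_ok() {
  local a="$1" b="$2" max="${3:-1}"
  local diff=$(( a > b ? a - b : b - a ))
  echo "skew=${diff}s"
  [ "$diff" -le "$max" ]
}
```

For example: clock_skew_ok "$(date +%s)" "$(ssh 192.168.1.204 date +%s)" 1.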

### Run the following on all nodes

ntpdate ntp1.aliyun.com;hwclock --systohc

date;hwclock

kubeadm reset -f;ipvsadm --clear;rm -rf $HOME/.kube

### Initialize the first node

kubeadm init --config=new-kubeadm-config.yaml --upload-certs |tee kubeadm-init.log

### Join the other nodes to the cluster

Problem 3: when deploying a K8S cluster with kubeadm, a node fails to join the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.1.201:6443 --token 2g9k0a.tsm6xe31rdb7jbo8 --discovery-token-ca-cert-hash sha256:d1d102ceb6241a3617777f6156cd4e86dc9f9edd9e1d6d73266d6ca7f6280890

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

To see the stack trace of this error execute with --v=5 or higher

Cause: the token has expired (kubeadm bootstrap tokens expire after 24 hours by default).

Solution: generate a new token that never expires (--ttl 0) and print the matching join command:

[root@k8s-master01 ~]# kubeadm token create --ttl 0 --print-join-command

W0819 12:00:27.541838 7855 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

kubeadm join 192.168.1.201:6443 --token 6xyv8a.cueltqmpe9qa8nxu --discovery-token-ca-cert-hash sha256:bd78dfd370e47dfca742b5f6934c21014792168fa4dc19c9fa63bfdd87270097
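When scripting the join on many nodes, the token and CA hash can be pulled out of the printed join command. A minimal sketch with a hypothetical helper; the only rule it checks is the bootstrap token format [a-z0-9]{6}.[a-z0-9]{16}, so an expired token will still pass (kubeadm token list on the master shows the TTL and expiry).

```shell
# Hypothetical helper: extract the token and CA cert hash from the line printed
# by `kubeadm token create --print-join-command`, and check that the token has
# the bootstrap-token format [a-z0-9]{6}.[a-z0-9]{16}.
parse_join_command() {
  local line="$1" token="" hash="" prev="" word
  for word in $line; do                  # word splitting is intended here
    case "$prev" in
      --token) token="$word" ;;
      --discovery-token-ca-cert-hash) hash="$word" ;;
    esac
    prev="$word"
  done
  if ! [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "invalid or missing token" >&2
    return 1
  fi
  echo "token=$token"
  echo "hash=$hash"
}
```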

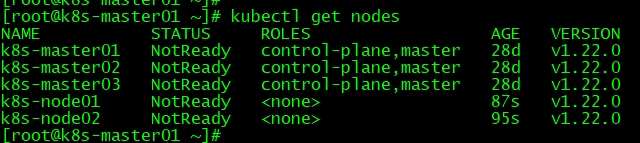

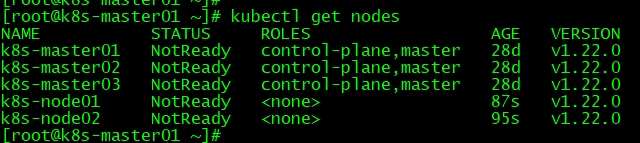

Problem 3: when deploying a K8S cluster with kubeadm, all nodes are NotReady, the coredns pods are Pending, and only the kube-proxy of the initial master node is Running

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 35m v1.22.0

k8s-master02 NotReady control-plane,master 32m v1.22.0

k8s-master03 NotReady control-plane,master 22m v1.22.0

k8s-node01 NotReady node 32m v1.22.0

k8s-node02 NotReady node 32m v1.22.0

[root@k8s-master01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7d89d9b6b8-ddjjp 0/1 Pending 0 35m

coredns-7d89d9b6b8-sbd8k 0/1 Pending 0 35m

etcd-k8s-master01 1/1 Running 3 (18m ago) 35m

etcd-k8s-master02 1/1 Running 0 32m

etcd-k8s-master03 0/1 Running 1 (18m ago) 22m

kube-apiserver-k8s-master01 0/1 Running 2 34m

kube-apiserver-k8s-master02 0/1 Running 0 32m

kube-apiserver-k8s-master03 0/1 Running 0 22m

kube-controller-manager-k8s-master01 1/1 Running 4 (19m ago) 34m

kube-controller-manager-k8s-master02 1/1 Running 0 32m

kube-controller-manager-k8s-master03 1/1 Running 0 22m

kube-proxy-6wxkt 0/1 Error 8 (21m ago) 32m

kube-proxy-9286f 1/1 Running 8 (21m ago) 32m

kube-proxy-h9jcv 0/1 CrashLoopBackOff 5 (19m ago) 22m

kube-proxy-v944j 0/1 Error 8 (21m ago) 32m

kube-proxy-zfrrk 0/1 Error 8 (23m ago) 35m

kube-scheduler-k8s-master01 1/1 Running 3 (32m ago) 35m

kube-scheduler-k8s-master02 1/1 Running 1 (19m ago) 32m

kube-scheduler-k8s-master03 1/1 Running 0 22m

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 35m

Cause: no network (CNI) plugin has been installed.

Solution: install a network plugin such as flannel or calico.
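Whether a network plugin has finished installing on a node can be checked by looking for its CNI config file. A minimal sketch with a hypothetical helper, assuming the default CNI config directory /etc/cni/net.d; the kubelet keeps a node NotReady until a network config appears there.

```shell
# Hypothetical helper: report whether a CNI network config exists on this node.
# Network plugins such as flannel or calico write their config into the CNI
# conf dir (default /etc/cni/net.d); until then the node stays NotReady.
cni_configured() {
  local dir="${1:-/etc/cni/net.d}" f
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    if [ -e "$f" ]; then
      echo "found $f"
      return 0
    fi
  done
  echo "no CNI config in $dir" >&2
  return 1
}
```

Running cni_configured on each node after applying the plugin manifest shows which nodes still lack a config (calico, for example, writes 10-calico.conflist).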

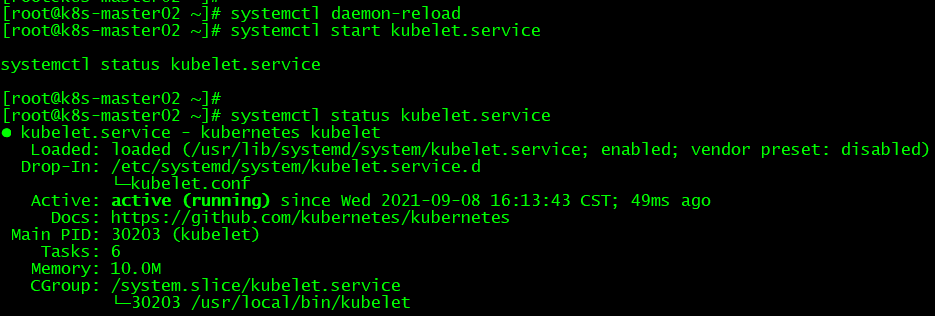

Problem 4: when deploying a K8S cluster with kubeadm, deploying calico fails

[root@k8s-master01 calico]# kubectl get pod -A -o wide | grep calico

kube-system calico-kube-controllers-cdd5755b9-phg94 0/1 Running 0 6m5s 192.168.1.203 k8s-master03 <none> <none>

kube-system calico-node-cb6c6 0/1 CrashLoopBackOff 5 (99s ago) 6m5s 192.168.1.202 k8s-master02 <none> <none>

kube-system calico-node-knr8g 0/1 CrashLoopBackOff 5 (78s ago) 6m5s 192.168.1.203 k8s-master03 <none> <none>

kube-system calico-node-l4fp9 0/1 CrashLoopBackOff 5 (98s ago) 6m5s 192.168.1.204 k8s-node01 <none> <none>

kube-system calico-node-mwqlt 0/1 CrashLoopBackOff 5 (96s ago) 6m5s 192.168.1.205 k8s-node02 <none> <none>

kube-system calico-node-zjkz6 0/1 CrashLoopBackOff 5 (93s ago) 6m5s 192.168.1.201 k8s-master01 <none> <none>

Cause: the calico-node YAML file has a problem.

[root@k8s-master01 ~]# kubectl describe pod calico-node-cb6c6 -n kube-system

Name: calico-node-cb6c6

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: k8s-master02/192.168.1.202

Start Time: Tue, 14 Sep 2021 17:14:28 +0800

Labels: controller-revision-hash=b5f966fbb

k8s-app=calico-node

pod-template-generation=1

Annotations: <none>

Status: Running

IP: 192.168.1.202

IPs:

IP: 192.168.1.202

Controlled By: DaemonSet/calico-node

Init Containers:

install-cni:

Container ID: docker://3e7d03495a0daa469b0f6b15acb451fa228c8d97a7d41fda0642d733cb21de9c

Image: registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3

Image ID: docker-pullable://registry.cn-beijing.aliyuncs.com/dotbalo/cni@sha256:a559d264c7a75a7528560d11778dba2d3b55c588228aed99be401fd2baa9b607

Port: <none>

Host Port: <none>

Command:

/install-cni.sh

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 14 Sep 2021 17:14:51 +0800

Finished: Tue, 14 Sep 2021 17:14:51 +0800

Ready: True

Restart Count: 0

Environment:

CNI_CONF_NAME: 10-calico.conflist

CNI_NETWORK_CONFIG: <set to the key 'cni_network_config' of config map 'calico-config'> Optional: false

ETCD_ENDPOINTS: <set to the key 'etcd_endpoints' of config map 'calico-config'> Optional: false

CNI_MTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false

SLEEP: false

Mounts:

/calico-secrets from etcd-certs (rw)

/host/etc/cni/net.d from cni-net-dir (rw)

/host/opt/cni/bin from cni-bin-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-tfw5v (ro)

flexvol-driver:

Container ID: docker://c8fe47bd4805c83fa3fad054470c3f3eb9dbccc1e64455cae085f050b11897c1

Image: registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3

Image ID: docker-pullable://registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol@sha256:6bd1246d0ea1e573a6a050902995b1666ec0852339e5bda3051f583540361b55

Port: <none>

Host Port: <none>

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 14 Sep 2021 17:14:56 +0800

Finished: Tue, 14 Sep 2021 17:14:56 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/host/driver from flexvol-driver-host (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-tfw5v (ro)

Containers:

calico-node:

Container ID: docker://7e209f5b426027b10aa3460b4bab2b003ad4a853f46e24353f119211abb42cee

Image: registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3

Image ID: docker-pullable://registry.cn-beijing.aliyuncs.com/dotbalo/node@sha256:988095dbe39d2066b1964aafaa4a302a1286b149a4a80c9a1eb85544f2a0cdd0

Port: <none>

Host Port: <none>

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Error

Exit Code: 1

Started: Tue, 14 Sep 2021 17:21:43 +0800

Finished: Tue, 14 Sep 2021 17:21:45 +0800

Ready: False

Restart Count: 6

Requests:

cpu: 250m

Liveness: exec [/bin/calico-node -felix-live -bird-live] delay=10s timeout=1s period=10s #success=1 #failure=6

Readiness: exec [/bin/calico-node -felix-ready -bird-ready] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

ETCD_ENDPOINTS: <set to the key 'etcd_endpoints' of config map 'calico-config'> Optional: false

ETCD_CA_CERT_FILE: <set to the key 'etcd_ca' of config map 'calico-config'> Optional: false

ETCD_KEY_FILE: <set to the key 'etcd_key' of config map 'calico-config'> Optional: false

ETCD_CERT_FILE: <set to the key 'etcd_cert' of config map 'calico-config'> Optional: false

CALICO_K8S_NODE_REF: (v1:spec.nodeName)

CALICO_NETWORKING_BACKEND: <set to the key 'calico_backend' of config map 'calico-config'> Optional: false

CLUSTER_TYPE: k8s,bgp

IP: autodetect

CALICO_IPV4POOL_IPIP: Always

CALICO_IPV4POOL_VXLAN: Never

FELIX_IPINIPMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false

FELIX_VXLANMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false

FELIX_WIREGUARDMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false

CALICO_IPV4POOL_CIDR: 172.168.0.0/12

CALICO_DISABLE_FILE_LOGGING: true

FELIX_DEFAULTENDPOINTTOHOSTACTION: ACCEPT

FELIX_IPV6SUPPORT: false

FELIX_LOGSEVERITYSCREEN: info

FELIX_HEALTHENABLED: true

Mounts:

/calico-secrets from etcd-certs (rw)

/lib/modules from lib-modules (ro)

/run/xtables.lock from xtables-lock (rw)

/var/lib/calico from var-lib-calico (rw)

/var/run/calico from var-run-calico (rw)

/var/run/nodeagent from policysync (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-tfw5v (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

lib-modules:

Type: HostPath (bare host directory volume)

Path: /lib/modules

HostPathType:

var-run-calico:

Type: HostPath (bare host directory volume)

Path: /var/run/calico

HostPathType:

var-lib-calico:

Type: HostPath (bare host directory volume)

Path: /var/lib/calico

HostPathType:

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

cni-bin-dir:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni-net-dir:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

etcd-certs:

Type: Secret (a volume populated by a Secret)

SecretName: calico-etcd-secrets

Optional: false

policysync:

Type: HostPath (bare host directory volume)

Path: /var/run/nodeagent

HostPathType: DirectoryOrCreate

flexvol-driver-host:

Type: HostPath (bare host directory volume)

Path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

HostPathType: DirectoryOrCreate

kube-api-access-tfw5v:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: :NoSchedule op=Exists

:NoExecute op=Exists

CriticalAddonsOnly op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 7m19s default-scheduler Successfully assigned kube-system/calico-node-cb6c6 to k8s-master02

Normal Pulling 7m19s kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3"

Normal Pulled 6m57s kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3" in 22.396538132s

Normal Created 6m56s kubelet Created container install-cni

Normal Started 6m56s kubelet Started container install-cni

Normal Pulling 6m55s kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3"

Normal Pulling 6m51s kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3"

Normal Pulled 6m51s kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3" in 3.78191146s

Normal Created 6m51s kubelet Created container flexvol-driver

Normal Started 6m51s kubelet Started container flexvol-driver

Normal Pulled 6m12s kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3" in 39.059498113s

Warning Unhealthy 6m7s (x4 over 6m12s) kubelet Readiness probe failed: calico/node is not ready: BIRD is not ready: Failed to stat() nodename file: stat /var/lib/calico/nodename: no such file or directory

Normal Created 5m49s (x3 over 6m12s) kubelet Created container calico-node

Normal Started 5m49s (x3 over 6m12s) kubelet Started container calico-node

Normal Pulled 5m49s (x2 over 6m10s) kubelet Container image "registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3" already present on machine

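Warning BackOff 2m10s (x22 over 6m7s) kubelet Back-off restarting failed container

The pod details show that the calico-node container exits with code 1 and is restarted over and over (CrashLoopBackOff); the readiness probe also fails because /var/lib/calico/nodename was never written.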

[root@k8s-master01 ~]# kubectl logs calico-node-cb6c6 -n kube-system

2021-09-14 09:21:43.819 [INFO][8] startup/startup.go 349: Early log level set to info

2021-09-14 09:21:43.819 [INFO][8] startup/startup.go 369: Using HOSTNAME environment (lowercase) for node name

2021-09-14 09:21:43.819 [INFO][8] startup/startup.go 377: Determined node name: k8s-master02

2021-09-14 09:21:43.819 [INFO][8] startup/startup.go 109: Skipping datastore connection test

2021-09-14 09:21:43.830 [INFO][8] startup/startup.go 460: Building new node resource Name="k8s-master02"

2021-09-14 09:21:45.832 [ERROR][8] startup/startup.go 149: failed to query kubeadm's config map error=Get "https://10.96.0.1:443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=2s": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

2021-09-14 09:21:45.832 [WARNING][8] startup/startup.go 1264: Terminating

Calico node failed to start

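The pod log shows that calico-node could not read kubeadm's config map: the request to the kubernetes Service at 10.96.0.1:443 timed out, so calico-node failed to start.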

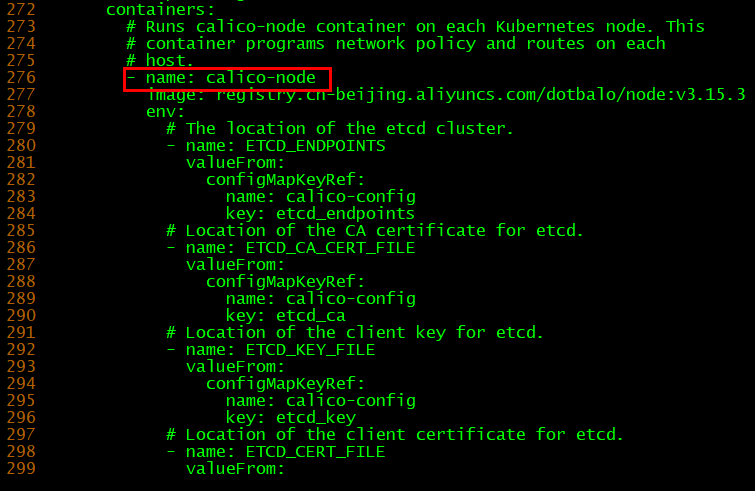

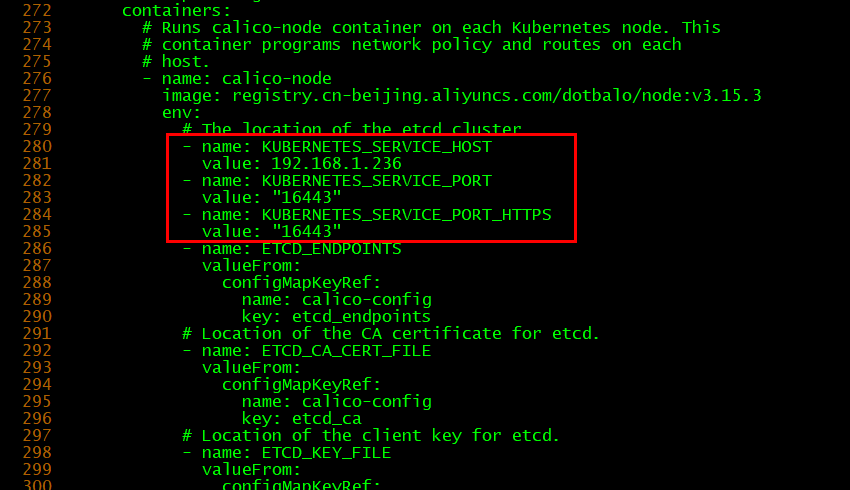

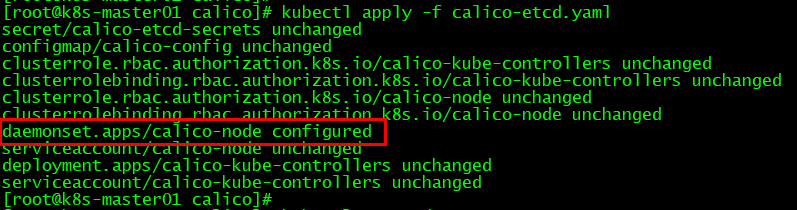

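Solution: by default, calico-node reaches the apiserver through port 443 of the kubernetes Service (10.96.0.1), which is unreachable here. Add the apiserver's IP address and port to the calico-node environment in the YAML file, using the variables KUBERNETES_SERVICE_HOST, KUBERNETES_SERVICE_PORT and KUBERNETES_SERVICE_PORT_HTTPS.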
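The three entries can be generated from the shell and pasted under env: of the calico-node container. This is a hypothetical helper; the host and port are assumptions and must be an apiserver address reachable from every node, for example the controlPlaneEndpoint 192.168.1.236:16443 from Problem 2 or 192.168.1.201:6443. The output is not indented, so adjust it to the surrounding YAML.

```shell
# Hypothetical helper: print env entries that make calico-node talk to the
# apiserver directly instead of via the kubernetes Service (10.96.0.1:443).
calico_apiserver_env() {
  local host="$1" port="$2"
  cat <<EOF
- name: KUBERNETES_SERVICE_HOST
  value: "${host}"
- name: KUBERNETES_SERVICE_PORT
  value: "${port}"
- name: KUBERNETES_SERVICE_PORT_HTTPS
  value: "${port}"
EOF
}
```

For example: calico_apiserver_env 192.168.1.236 16443. Values set explicitly in the container's env take precedence over the Service variables that Kubernetes injects automatically.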

Problem 5: when deploying a K8S cluster with kubeadm, calico is installed successfully, but coredns stays in ContainerCreating

[root@k8s-master01 .kube]# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-cdd5755b9-phg94 0/1 Running 0 73m 192.168.1.203 k8s-master03 <none> <none>

kube-system calico-node-5d6xr 1/1 Running 0 50m 192.168.1.202 k8s-master02 <none> <none>

kube-system calico-node-k7qmf 1/1 Running 0 50m 192.168.1.204 k8s-node01 <none> <none>

kube-system calico-node-p47mm 1/1 Running 0 50m 192.168.1.201 k8s-master01 <none> <none>

kube-system calico-node-v7q76 1/1 Running 0 50m 192.168.1.203 k8s-master03 <none> <none>

kube-system calico-node-wjw4d 1/1 Running 0 50m 192.168.1.205 k8s-node02 <none> <none>

kube-system coredns-7d89d9b6b8-ddjjp 0/1 ContainerCreating 0 92m <none> k8s-master03 <none> <none>

kube-system coredns-7d89d9b6b8-sbd8k 0/1 ContainerCreating 0 92m <none> k8s-master03 <none> <none>

Cause analysis:

[root@k8s-master01 ~]# kubectl logs coredns-7d89d9b6b8-ddjjp -n kube-system

Error from server (BadRequest): container "coredns" in pod "coredns-7d89d9b6b8-ddjjp" is waiting to start: ContainerCreating

The log shows only that the container is still being created.

[root@k8s-master01 ~]# kubectl describe pod coredns-7d89d9b6b8-ddjjp -n kube-system

Name: coredns-7d89d9b6b8-ddjjp

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: k8s-master03/192.168.1.203

Start Time: Tue, 14 Sep 2021 17:14:54 +0800

Labels: k8s-app=kube-dns

pod-template-hash=7d89d9b6b8

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/coredns-7d89d9b6b8

Containers:

coredns:

Container ID:

Image: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4

Image ID:

Ports: 53/UDP, 53/TCP, 9153/TCP

Host Ports: 0/UDP, 0/TCP, 0/TCP

Args:

-conf

/etc/coredns/Corefile

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5

Readiness: http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/coredns from config-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-7m9rx (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns

Optional: false

kube-api-access-7m9rx:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly op=Exists

node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SandboxChanged 6m21s (x2093 over 136m) kubelet Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 82s (x2154 over 136m) kubelet (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "089f15d2961982244b2ce122b738afc70e29fa17eb79a4e7b88a35ce5be4868b" network for pod "coredns-7d89d9b6b8-ddjjp": networkPlugin cni failed to set up pod "coredns-7d89d9b6b8-ddjjp_kube-system" network: Get "https://[10.96.0.1]:443/api/v1/namespaces/kube-system": dial tcp 10.96.0.1:443: connect: connection refused

The pod details show that the pod was scheduled (PodScheduled is True), but its sandbox cannot be created: the CNI plugin cannot connect to the apiserver through the kubernetes Service at 10.96.0.1:443 (connection refused).
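Whether the Service IP (or the apiserver itself) accepts connections from a node can be tested without curl or nc. A minimal sketch with a hypothetical helper that relies on bash's /dev/tcp redirection and the coreutils timeout command; it distinguishes reachable from unreachable but not "refused" from "timed out".

```shell
# Hypothetical helper: test whether HOST:PORT accepts TCP connections, using
# bash's /dev/tcp redirection. Returns non-zero on "connection refused" or
# when the timeout (seconds, default 2) expires.
tcp_reachable() {
  local host="$1" port="$2" secs="${3:-2}"
  if timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} reachable"
    return 0
  fi
  echo "${host}:${port} unreachable" >&2
  return 1
}
```

Comparing tcp_reachable 10.96.0.1 443 with tcp_reachable 192.168.1.201 6443 on k8s-master03 separates a broken Service path (kube-proxy rules) from an apiserver that is down.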

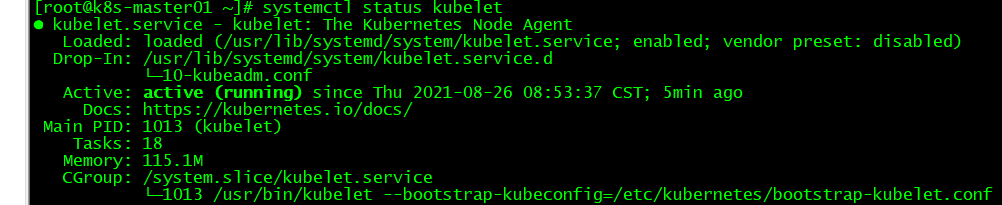

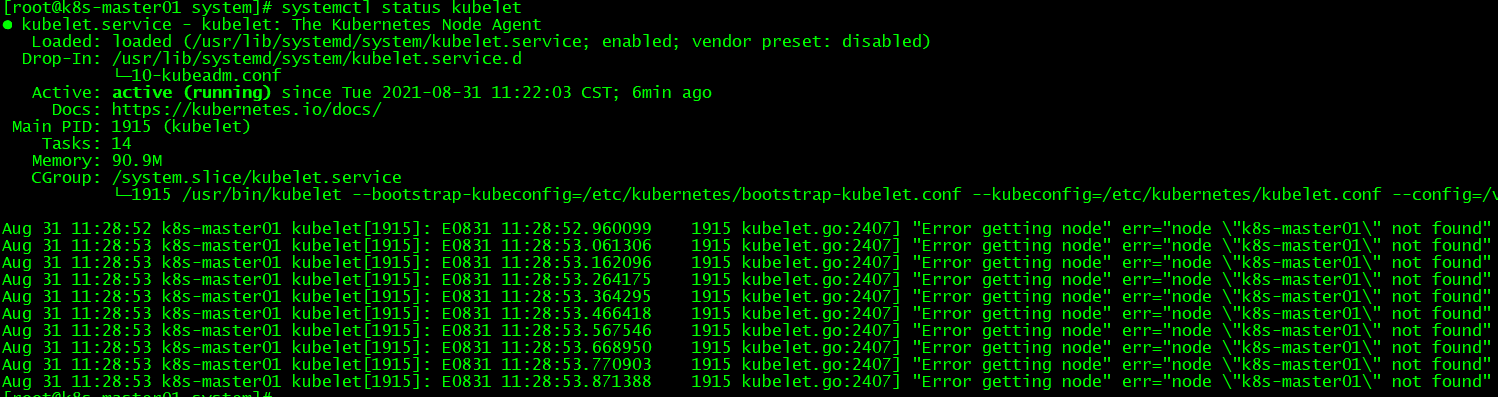

[root@k8s-master01 .kube]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Tue 2021-09-14 16:56:06 CST; 1h 42min ago

Docs: https://kubernetes.io/docs/

Main PID: 28526 (kubelet)

Tasks: 14

Memory: 78.6M

CGroup: /system.slice/kubelet.service

└─28526 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --net...

Sep 14 18:37:41 k8s-master01 kubelet[28526]: I0914 18:37:41.703532 28526 scope.go:110] "RemoveContainer" containerID="a9e6b2c2df1bd9530394a4077af6c159830efd7e2b2d9156cbf1580086bf6d99"

Sep 14 18:37:41 k8s-master01 kubelet[28526]: E0914 18:37:41.703788 28526 pod_workers.go:747] "Error syncing pod, skipping" err="failed to "StartContainer" for "kube-proxy" with ...

Sep 14 18:37:52 k8s-master01 kubelet[28526]: I0914 18:37:52.704002 28526 scope.go:110] "RemoveContainer" containerID="a9e6b2c2df1bd9530394a4077af6c159830efd7e2b2d9156cbf1580086bf6d99"

Sep 14 18:37:52 k8s-master01 kubelet[28526]: E0914 18:37:52.704281 28526 pod_workers.go:747] "Error syncing pod, skipping" err="failed to "StartContainer" for "kube-proxy" with ...

Sep 14 18:38:07 k8s-master01 kubelet[28526]: I0914 18:38:07.704831 28526 scope.go:110] "RemoveContainer" containerID="a9e6b2c2df1bd9530394a4077af6c159830efd7e2b2d9156cbf1580086bf6d99"

Sep 14 18:38:07 k8s-master01 kubelet[28526]: E0914 18:38:07.705058 28526 pod_workers.go:747] "Error syncing pod, skipping" err="failed to "StartContainer" for "kube-proxy" with ...

Sep 14 18:38:22 k8s-master01 kubelet[28526]: I0914 18:38:22.705015 28526 scope.go:110] "RemoveContainer" containerID="a9e6b2c2df1bd9530394a4077af6c159830efd7e2b2d9156cbf1580086bf6d99"

Sep 14 18:38:22 k8s-master01 kubelet[28526]: E0914 18:38:22.705279 28526 pod_workers.go:747] "Error syncing pod, skipping" err="failed to "StartContainer" for "kube-proxy" with ...

Sep 14 18:38:34 k8s-master01 kubelet[28526]: I0914 18:38:34.709678 28526 scope.go:110] "RemoveContainer" containerID="a9e6b2c2df1bd9530394a4077af6c159830efd7e2b2d9156cbf1580086bf6d99"

Sep 14 18:38:34 k8s-master01 kubelet[28526]: E0914 18:38:34.711197 28526 pod_workers.go:747] "Error syncing pod, skipping" err="failed to "StartContainer" for "kube-proxy" with ...

Hint: Some lines were ellipsized, use -l to show in full.

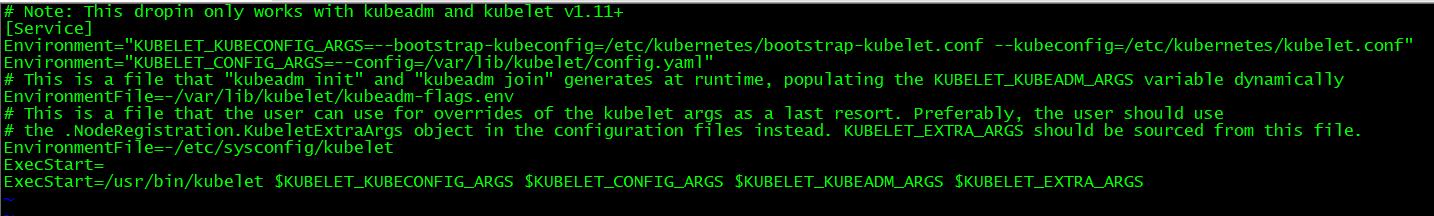

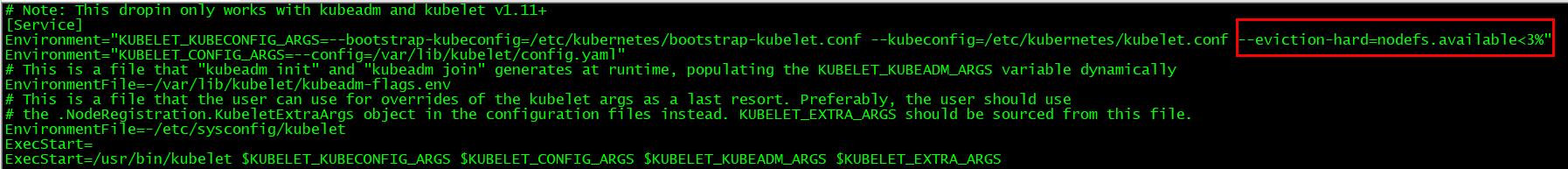

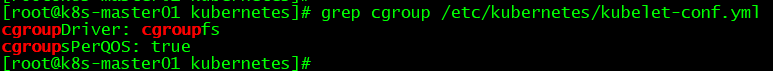

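Solution: modify the kubelet service configuration (see the screenshots above). Note that the kubelet log shows kube-proxy repeatedly failing to start; because kube-proxy programs the rules behind the 10.96.0.1 Service IP, this is a likely reason for the connection refused error.

Problem 5: when deploying a K8S cluster with kubeadm, deploying flannel fails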

kube-flannel-ds-amd64-8cqqz 0/1 CrashLoopBackOff 3 84s 192.168.66.10 k8s-master01 <none> <none>

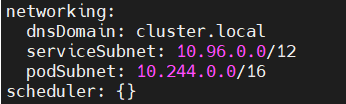

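Cause: the log shows that registering the network failed because no pod CIDR was assigned to the node. The YAML file used to initialize the master node did not set a pod subnet.

kubectl logs kube-flannel-ds-amd64-8cqqz -n kube-system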

I0602 01:53:54.021093 1 main.go:514] Determining IP address of default interface

I0602 01:53:54.022514 1 main.go:527] Using interface with name ens33 and address 192.168.66.10

I0602 01:53:54.022619 1 main.go:544] Defaulting external address to interface address (192.168.66.10)

I0602 01:53:54.030311 1 kube.go:126] Waiting 10m0s for node controller to sync

I0602 01:53:54.030555 1 kube.go:309] Starting kube subnet manager

I0602 01:53:55.118656 1 kube.go:133] Node controller sync successful

I0602 01:53:55.118754 1 main.go:244] Created subnet manager: Kubernetes Subnet Manager - k8s-master01

I0602 01:53:55.118765 1 main.go:247] Installing signal handlers

I0602 01:53:55.119057 1 main.go:386] Found network config - Backend type: vxlan

I0602 01:53:55.119146 1 vxlan.go:120] VXLAN config: VNI=1 Port=0 GBP=false DirectRouting=false

E0602 01:53:55.119470 1 main.go:289] Error registering network: failed to acquire lease: node "k8s-master01" pod cidr not assigned

I0602 01:53:55.119506 1 main.go:366] Stopping shutdownHandler...

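Solution: set networking.podSubnet in kubeadm-config.yaml (it must match the Network in flannel's net-conf.json, 10.244.0.0/16 by default) and re-initialize the master node.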
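Before re-initializing, the config file can be checked for the pod subnet. A minimal sketch with a hypothetical helper; it uses simple line-based parsing (it assumes podSubnet sits on its own line, as kubeadm configs are usually written) and assumes flannel's stock network 10.244.0.0/16 unless another value is passed.

```shell
# Hypothetical helper: check that a kubeadm config sets networking.podSubnet to
# the network flannel expects (10.244.0.0/16 in the stock kube-flannel.yml).
check_pod_subnet() {
  local file="$1" expected="${2:-10.244.0.0/16}" actual
  actual=$(awk -F': *' '$1 ~ /^ *podSubnet$/ {gsub(/"/, "", $2); print $2}' "$file")
  if [ "$actual" = "$expected" ]; then
    echo "podSubnet OK: $actual"
  else
    echo "podSubnet is '${actual:-<unset>}', expected $expected" >&2
    return 1
  fi
}
```

After re-initializing, kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}' should print one CIDR per node.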

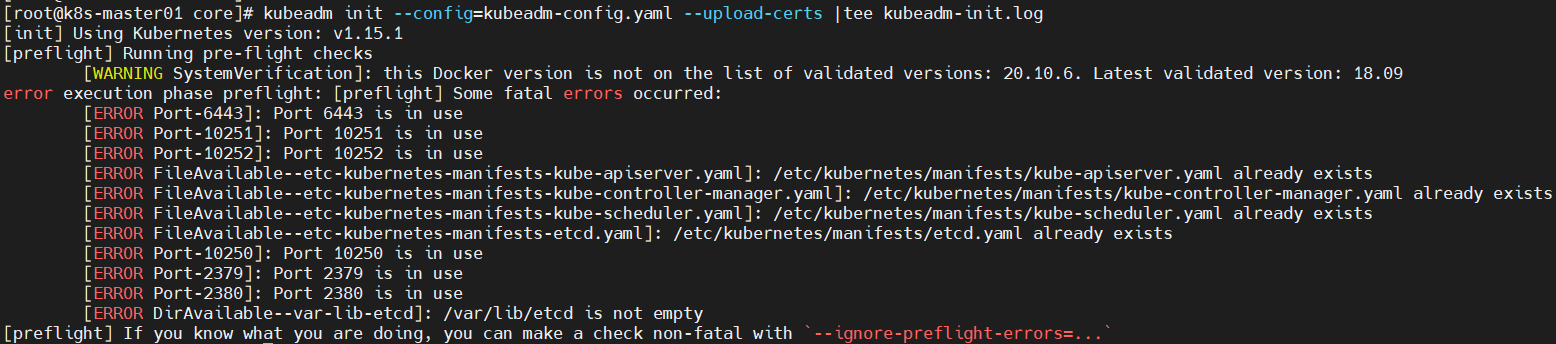

Problem 6: initializing the master node of the K8S cluster fails

[init] Using Kubernetes version: v1.15.1

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 18.09

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-6443]: Port 6443 is in use

[ERROR Port-10251]: Port 10251 is in use

[ERROR Port-10252]: Port 10252 is in use

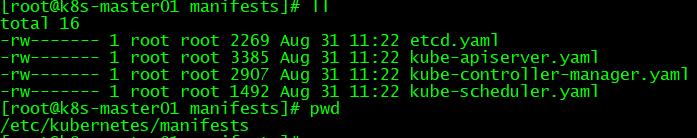

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR Port-2379]: Port 2379 is in use

[ERROR Port-2380]: Port 2380 is in use

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

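Cause: the master node has already been initialized, so the ports are held by the running control-plane components, and the manifests and etcd data already exist.

Solution: reset with kubeadm, then initialize again.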

kubeadm reset

kubeadm init --config=kubeadm-config.yaml --upload-certs |tee kubeadm-init.log
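To confirm which of the ports from the preflight errors are actually taken, the output of ss -ltn can be filtered. A minimal sketch with a hypothetical helper; the port list is copied from the errors above, and the parsing assumes the usual ss column layout with the local address in column 4.

```shell
# Hypothetical helper: read `ss -ltn` output on stdin and print which ports
# needed by a kubeadm control-plane node are already listening.
kubeadm_port_conflicts() {
  awk -v ports="6443 10250 10251 10252 2379 2380" '
    BEGIN { n = split(ports, p, " "); for (i = 1; i <= n; i++) want[p[i]] = 1 }
    NR > 1 {
      addr = $4                      # Local Address:Port column
      sub(/.*:/, "", addr)           # keep only the port after the last ":"
      if ((addr in want) && !(addr in seen)) { seen[addr] = 1; print addr }
    }'
}
```

Usage: ss -ltn | kubeadm_port_conflicts. An empty result after kubeadm reset means the ports have been released.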

Problem 7: after kubeadm reset succeeds, do related files need to be deleted?

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0602 10:20:53.656954 76680 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

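Cause: not applicable.

Solution: yes. As the reset output says, clean up the iptables rules, the IPVS tables and $HOME/.kube/config manually; otherwise leftovers can cause problems after the master node is initialized again.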
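The manual steps printed by kubeadm reset can be collected into one function. A minimal sketch with hypothetical helper names; DRY_RUN=1 only prints the commands. Be aware that, as in the reset output, it flushes all iptables rules on the host, not only the ones Kubernetes created.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Manual cleanup that `kubeadm reset` says it does NOT perform.
# WARNING: flushes ALL iptables rules and IPVS tables on this host.
post_reset_cleanup() {
  local home_dir="${1:-$HOME}"
  run_or_echo iptables -F &&
    run_or_echo iptables -t nat -F &&
    run_or_echo iptables -t mangle -F &&
    run_or_echo iptables -X || return 1
  # Only relevant if kube-proxy ran in IPVS mode; skipped when ipvsadm is absent.
  if [ "${DRY_RUN:-0}" = 1 ] || command -v ipvsadm >/dev/null 2>&1; then
    run_or_echo ipvsadm --clear || return 1
  fi
  # Stale kubeconfig that still points at the old cluster CA
  run_or_echo rm -f "${home_dir}/.kube/config"
}
```

Running DRY_RUN=1 post_reset_cleanup first shows exactly what would be executed.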

Problem 8: after the master node is initialized successfully, viewing node information fails

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

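Cause: after the master node was initialized, the admin kubeconfig (/etc/kubernetes/admin.conf) was not copied to $HOME/.kube/config, so kubectl most likely still uses an old config whose CA does not match the new cluster.

Solution: copy the config file: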

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config
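To confirm this diagnosis, the CA embedded in the kubeconfig can be compared with the cluster CA. A minimal sketch with a hypothetical helper; it assumes certificate-authority-data is on a single line and that kubeadm embeds the PEM of /etc/kubernetes/pki/ca.crt byte for byte (if not, compare openssl fingerprints instead).

```shell
# Hypothetical helper: check whether the CA embedded in a kubeconfig
# (certificate-authority-data, base64-encoded PEM) equals the cluster CA file.
# A mismatch typically produces "x509: certificate signed by unknown authority".
kubeconfig_ca_matches() {
  local kubeconfig="${1:-$HOME/.kube/config}" ca="${2:-/etc/kubernetes/pki/ca.crt}" data
  data=$(awk '$1 == "certificate-authority-data:" {print $2; exit}' "$kubeconfig")
  if [ -z "$data" ]; then
    echo "no certificate-authority-data in $kubeconfig" >&2
    return 1
  fi
  if printf '%s' "$data" | base64 -d | cmp -s - "$ca"; then
    echo "CA matches $ca"
  else
    echo "CA in $kubeconfig differs from $ca" >&2
    return 1
  fi
}
```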

Problem 9: a master node fails to join the K8S cluster

[root@k8s-master02 ~]# kubeadm join 192.168.1.201:6443 --token 6xyv8a.cueltqmpe9qa8nxu --discovery-token-ca-cert-hash sha256:bd78dfd370e47dfca742b5f6934c21014792168fa4dc19c9fa63bfdd87270097 \

> --control-plane --certificate-key b464a8d23d3313c4c0bb5b65648b039cb9b1177dddefbf46e2e296899d0e4516

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

error execution phase preflight:

One or more conditions for hosting a new control plane instance is not satisfied.

unable to add a new control plane instance a cluster that doesn't have a stable controlPlaneEndpoint address

Please ensure that:

* The cluster has a stable controlPlaneEndpoint address.

* The certificates that must be shared among control plane instances are provided.

To see the stack trace of this error execute with --v=5 or higher

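Cause: the error message reports that the cluster has no stable controlPlaneEndpoint address (see Problem 2); in addition, the certificates shared by control-plane nodes must be available on the joining node.

Solution: share the certificates.

########## Run the following on the other master nodes ##########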

mkdir -p /etc/kubernetes/pki/etcd/

########## Run the following on master01 ##########

cd /etc/kubernetes/pki/

scp ca.* front-proxy-ca.* sa.* 192.168.1.202:/etc/kubernetes/pki/

scp ca.* front-proxy-ca.* sa.* 192.168.1.203:/etc/kubernetes/pki/

########## Run the following on the other master nodes ##########

kubeadm join 192.168.1.201:6443 --token 6xyv8a.cueltqmpe9qa8nxu --discovery-token-ca-cert-hash sha256:bd78dfd370e47dfca742b5f6934c21014792168fa4dc19c9fa63bfdd87270097 --control-plane --certificate-key b464a8d23d3313c4c0bb5b65648b039cb9b1177dddefbf46e2e296899d0e4516
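The copy step on master01 can be written as a loop over the other masters. A minimal sketch with hypothetical helper names, assuming root SSH access as in the transcript. The file list follows the manual certificate distribution list in the kubeadm HA guide, which also includes etcd/ca.crt and etcd/ca.key for stacked etcd. When --upload-certs and --certificate-key are used instead, kubeadm downloads these files itself, but the uploaded certificates are deleted after two hours.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Copy the certificates that all control-plane nodes must share from this
# node (master01) to each host given as an argument.
copy_control_plane_certs() {
  local pki=/etc/kubernetes/pki host
  for host in "$@"; do
    run_or_echo ssh "root@${host}" mkdir -p "${pki}/etcd" || return 1
    run_or_echo scp "${pki}/ca.crt" "${pki}/ca.key" "${pki}/sa.key" "${pki}/sa.pub" \
      "${pki}/front-proxy-ca.crt" "${pki}/front-proxy-ca.key" "root@${host}:${pki}/" || return 1
    run_or_echo scp "${pki}/etcd/ca.crt" "${pki}/etcd/ca.key" "root@${host}:${pki}/etcd/" || return 1
  done
}
```

For example: DRY_RUN=1 copy_control_plane_certs 192.168.1.202 192.168.1.203 to review the commands, then run it again without DRY_RUN.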

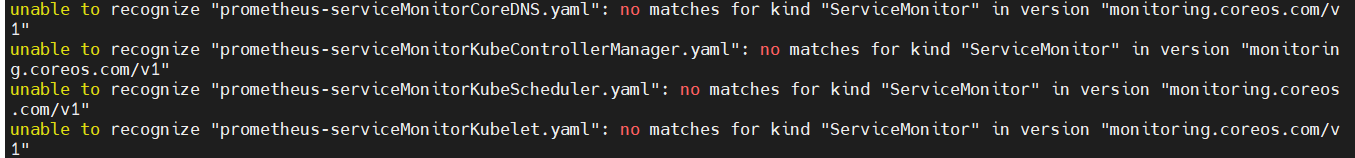

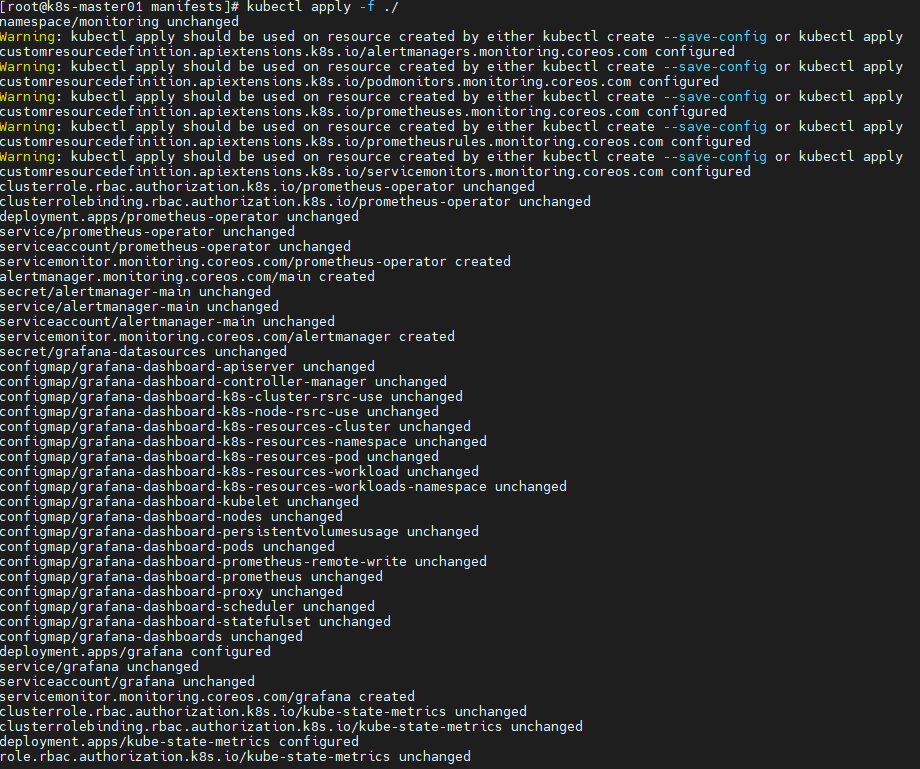

Problem 10: deploying Prometheus fails

unable to recognize "0prometheus-operator-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "alertmanager-alertmanager.yaml": no matches for kind "Alertmanager" in version "monitoring.coreos.com/v1"

unable to recognize "alertmanager-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "grafana-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "kube-state-metrics-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "node-exporter-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-prometheus.yaml": no matches for kind "Prometheus" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-rules.yaml": no matches for kind "PrometheusRule" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitorApiserver.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitorCoreDNS.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitorKubeControllerManager.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitorKubeScheduler.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

unable to recognize "prometheus-serviceMonitorKubelet.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"

Cause: not determined. A likely explanation is that the CustomResourceDefinitions (ServiceMonitor, Prometheus, Alertmanager, PrometheusRule) were created in the same run and were not yet registered by the apiserver when the resources that use them were submitted.

Solution: run the apply command again.
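Re-running the command can be automated with a small retry loop. A minimal sketch with a hypothetical helper; the attempt count and delay are arbitrary. An alternative, used in the kube-prometheus instructions, is to apply the CRDs first and wait with kubectl wait --for condition=Established --all CustomResourceDefinition before applying the rest.

```shell
# Hypothetical helper: rerun a command until it succeeds, up to ATTEMPTS times,
# sleeping DELAY seconds between tries.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    echo "attempt $i/$attempts failed: $*" >&2
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, in the manifests directory: retry 10 5 kubectl apply -f .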

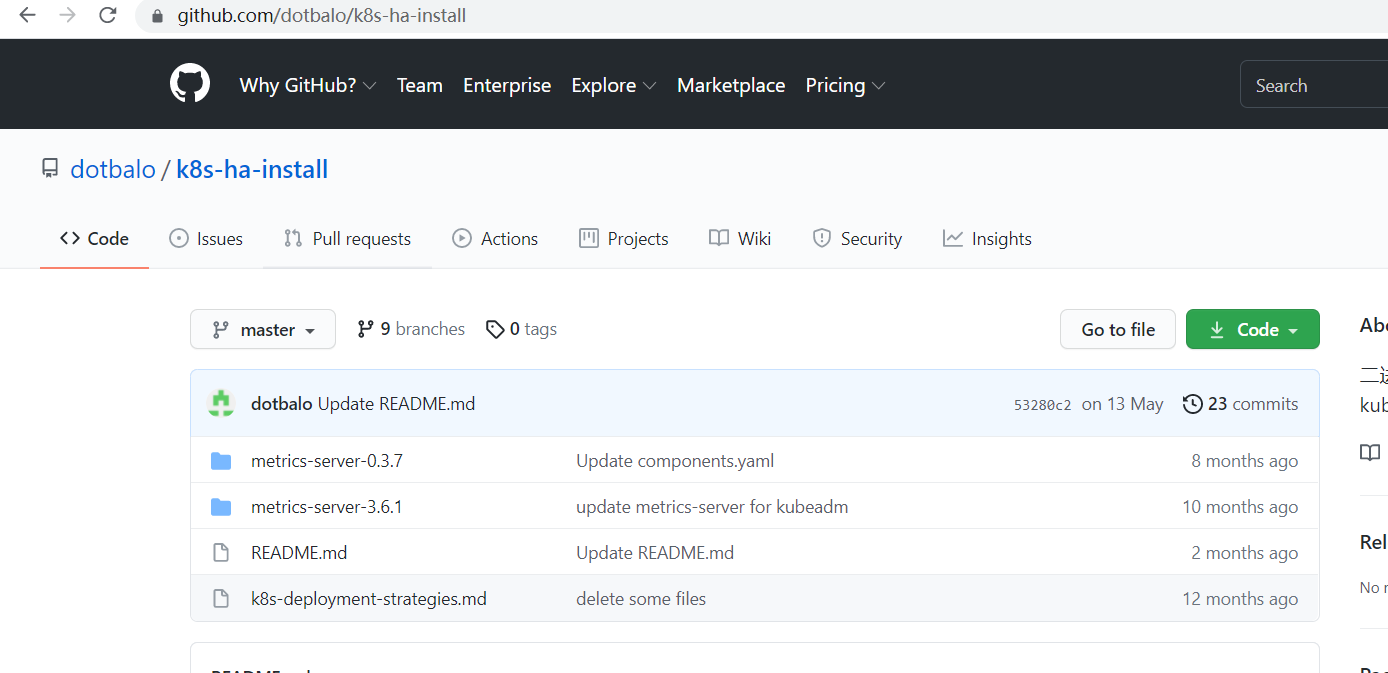

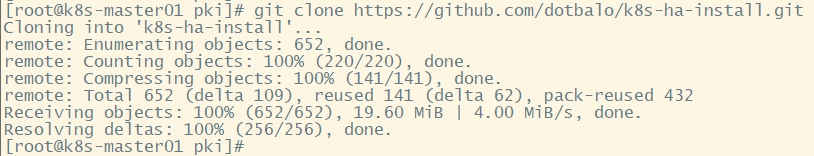

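Problem 7: the K8S high-availability installation package cannot be downloaded with git, although the repository can be downloaded directly in a browser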

[root@k8s-master01 install-k8s-v1.17]# git clone https://github.com/dotbalo/k8s-ha-install.git

Cloning into 'k8s-ha-install'...

error: RPC failed; result=35, HTTP code = 0

fatal: The remote end hung up unexpectedly

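Cause: presumably the git buffer is too small.

Solution: increase the git buffer:

git config --global http.postBuffer 100M

Problem 8: the K8S high-availability installation package cannot be downloaded with git, although the repository can be downloaded directly in a browser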

[root@k8s-master01 install-k8s-v1.17]# git clone https://github.com/dotbalo/k8s-ha-install.git

Cloning into 'k8s-ha-install'...

fatal: unable to access 'https://github.com/dotbalo/k8s-ha-install.git/': Empty reply from server

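Cause: unknown.

Solution: download the repository manually and upload it to the server.

Problem 9: listing the branches of the K8S repository fails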

[root@k8s-master01 k8s-ha-install-master]# git branch -a

fatal: Not a git repository (or any of the parent directories): .git

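Cause: the directory has no local .git repository (an archive downloaded in the browser contains only the files).

Solution: initialize git. Note that this only creates an empty repository without the remote branches (see Problem 10).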

[root@k8s-master01 k8s-ha-install-master]# git init

Initialized empty Git repository in /root/install-k8s-v1.17/k8s-ha-install-master/.git/

[root@k8s-master01 k8s-ha-install-master]# git branch -a

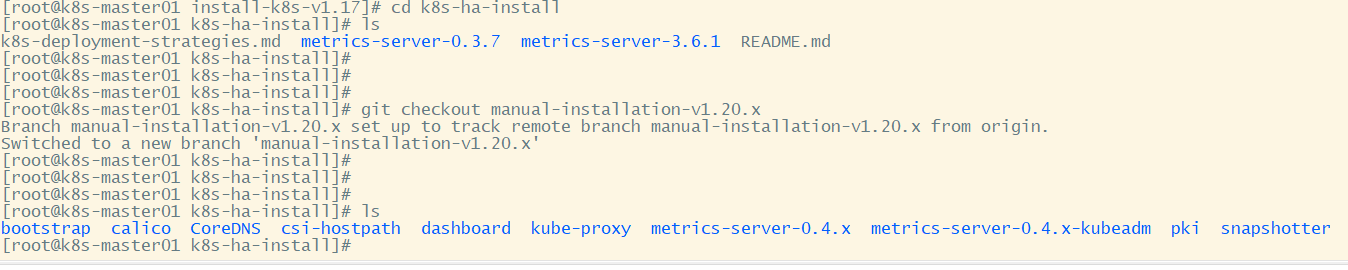

Problem 10: switching branches fails

[root@k8s-master01 k8s-ha-install-master]# git checkout manual-installation-v1.20.x

error: pathspec 'manual-installation-v1.20.x' did not match any file(s) known to git.

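Cause: the branch does not exist in the local repository.

Solution: the repository must be obtained with git clone. A ZIP file downloaded in the browser cannot be used, and neither could git-cloned files that were packed into an archive and extracted again.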

git clone https://github.com/dotbalo/k8s-ha-install.git

[root@k8s-master01 k8s-ha-install]# git checkout manual-installation-v1.20.x

Branch manual-installation-v1.20.x set up to track remote branch manual-installation-v1.20.x from origin.

Switched to a new branch 'manual-installation-v1.20.x'

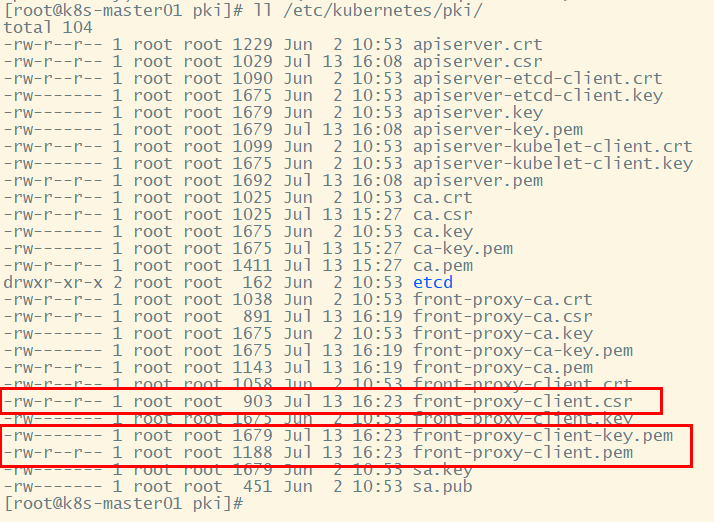

Problem 11: generating the apiserver aggregation (front-proxy) certificate appears to fail

[root@k8s-master01 pki]# cfssl gencert \

> -ca=/etc/kubernetes/pki/front-proxy-ca.pem \

> -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \

> -config=ca-config.json \

> -profile=kubernetes \

> front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

2021/07/13 16:23:31 [INFO] generate received request

2021/07/13 16:23:31 [INFO] received CSR

2021/07/13 16:23:31 [INFO] generating key: rsa-2048

2021/07/13 16:23:31 [INFO] encoded CSR

2021/07/13 16:23:31 [INFO] signed certificate with serial number 723055319564838988438867282088171857759978642034

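2021/07/13 16:23:31 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements")

Cause: this is only a warning. The CSR has no hosts field, so the certificate is unsuitable for websites, but this does not affect communication between the apiserver and other components.

Solution: the warning can be ignored.

Problem 12: After installing the K8S components from binaries, kubectl version prints an error?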

[root@k8s-master01 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.0", GitCommit:"af46c47ce925f4c4ad5cc8d1fca46c7b77d13b38", GitTreeState:"clean", BuildDate:"2020-12-08T17:59:43Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

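Cause: kubectl is installed correctly but cannot connect to the Kubernetes apiserver; without a kubeconfig it falls back to localhost:8080.

Solution: generate the certificates and configure the components (including the kubeconfig used by kubectl).

Problem 13: etcd fails to start after configuring certificates in a binary K8S installation?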

[root@k8s-master02 ~]# systemctl status etcd

● etcd.service - Etcd Service

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: activating (start) since Wed 2021-07-21 08:51:42 CST; 1min 13s ago

Docs: https://coreos.com/etcd/docs/latest/

Main PID: 1424 (etcd)

Tasks: 9

Memory: 33.1M

CGroup: /system.slice/etcd.service

└─1424 /usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Jul 21 08:52:55 k8s-master02 etcd[1424]: rejected connection from "192.168.0.107:38222" (error "remote error: tls: bad certificate", ServerName "")

Jul 21 08:52:55 k8s-master02 etcd[1424]: rejected connection from "192.168.0.107:38224" (error "remote error: tls: bad certificate", ServerName "")

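Cause: TLS authentication failed. A likely cause is a faulty CSR file used to create the certificate, so that the node IP was not included in the hosts list.

Solution: regenerate the certificates; see Problem 26 for the detailed procedure.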

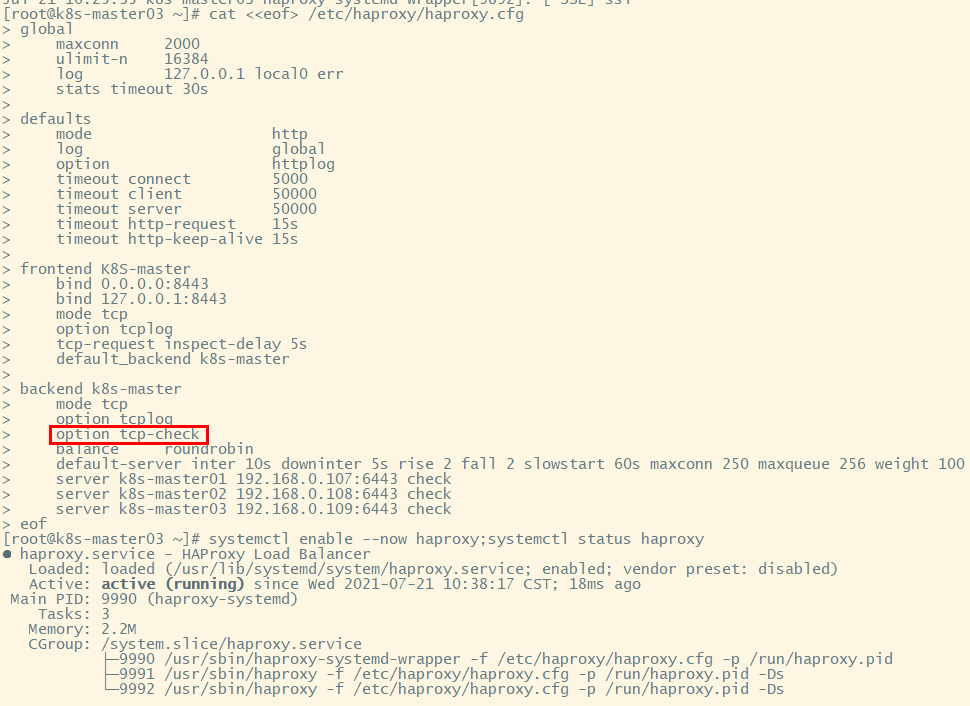

Problem 14: haproxy fails to start?

[root@k8s-master01 pki]# systemctl enable --now haproxy;systemctl status haproxy

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Wed 2021-07-21 10:25:35 CST; 3ms ago

Process: 2255 ExecStart=/usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid $OPTIONS (code=exited, status=1/FAILURE)

Main PID: 2255 (code=exited, status=1/FAILURE)

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [ALERT] 201/102535 (2256) : parsing [/etc/haproxy/haproxy.cfg:59] : 'server 192.168.0.107:6443' : invalid address...in 'check'

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [ALERT] 201/102535 (2256) : parsing [/etc/haproxy/haproxy.cfg:60] : 'server 192.168.0.108:6443' : invalid address...in 'check'

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [ALERT] 201/102535 (2256) : parsing [/etc/haproxy/haproxy.cfg:61] : 'server 192.168.0.109:6443' : invalid address...in 'check'

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [ALERT] 201/102535 (2256) : Error(s) found in configuration file : /etc/haproxy/haproxy.cfg

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [WARNING] 201/102535 (2256) : config : frontend 'GLOBAL' has no 'bind' directive. Please declare it as a backend ... intended.

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: [ALERT] 201/102535 (2256) : Fatal errors found in configuration.

Jul 21 10:25:35 k8s-master01 systemd[1]: haproxy.service: main process exited, code=exited, status=1/FAILURE

Jul 21 10:25:35 k8s-master01 haproxy-systemd-wrapper[2255]: haproxy-systemd-wrapper: exit, haproxy RC=1

Jul 21 10:25:35 k8s-master01 systemd[1]: Unit haproxy.service entered failed state.

Jul 21 10:25:35 k8s-master01 systemd[1]: haproxy.service failed.

Hint: Some lines were ellipsized, use -l to show in full.

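Cause: configuration error. The log shows haproxy parsing 'check' as the server address, which happens when a server line has no name before the address (the syntax is server <name> <address>[:port] [params]).

Solution: add option tcp-check to the backend, and make sure each server line gives a name before the address, for example server <name> 192.168.0.107:6443 check.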

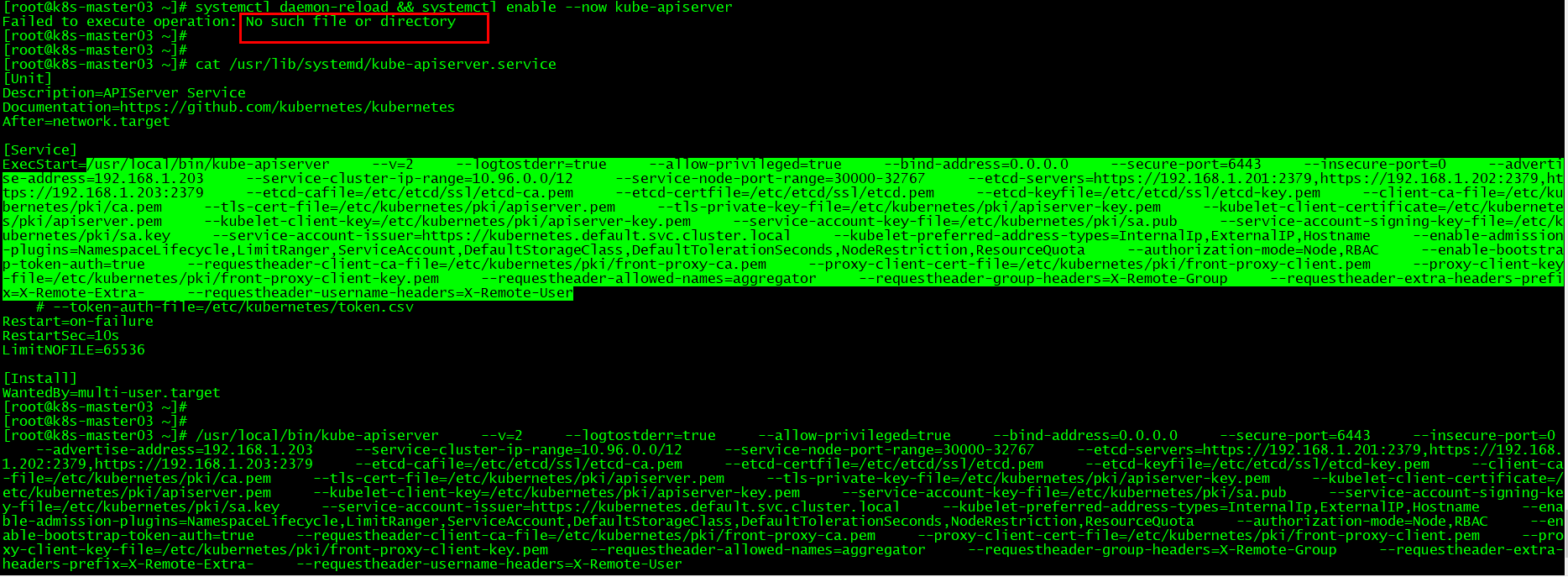
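The authoritative check is haproxy's own configuration test, haproxy -c -f /etc/haproxy/haproxy.cfg. As a rough sketch of what went wrong in the log above, the hypothetical bash helper below flags server lines whose third field is not an address. It assumes backends are written as IPv4 addresses with optional ports, as in this document; hostnames used as addresses would be reported falsely.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flag haproxy "server" lines without a name.
# haproxy expects: server <name> <address>[:port] [params...]
# For "server 192.168.0.107:6443 check", haproxy takes
# "192.168.0.107:6443" as the name and "check" as the address.
# Assumption: addresses are IPv4 with an optional port.
find_unnamed_servers() {
  awk '
    $1 == "server" && $3 !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+(:[0-9]+)?$/ {
      print FILENAME ":" FNR ": " $0
    }' "$1"
}
```

Usage: find_unnamed_servers /etc/haproxy/haproxy.cfg prints each suspicious line with its line number, matching the line numbers in the [ALERT] messages.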

Problem 15: kube-apiserver fails to start?

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

Failed to execute operation: No such file or directory

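Cause: a configuration problem; etcd was not working properly. (The same systemctl message appears in Problem 28, where the cause was a misplaced service file.)

Solution: fix the configuration.

Problem 16: The kubelet service fails to start?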

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Fri 2021-08-13 12:15:11 CST; 948ms ago

Docs: https://kubernetes.io/docs/

Process: 5600 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 5600 (code=exited, status=1/FAILURE)

Aug 13 12:15:11 k8s-master02 systemd[1]: Unit kubelet.service entered failed state.

Aug 13 12:15:11 k8s-master02 systemd[1]: kubelet.service failed.

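Cause: SELinux was not disabled, and the node had not been initialized yet. Until kubeadm init or kubeadm join runs, kubelet keeps restarting because it has no configuration.

Solution: disable SELinux, then initialize the node.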
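A minimal sketch for the persistent part of disabling SELinux, assuming bash, GNU sed, and the CentOS/RHEL config file /etc/selinux/config; the function name disable_selinux_config is hypothetical. The path is a parameter so the edit can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# The pattern ^SELINUX= does not touch the SELINUXTYPE= line.
# The file change only takes effect after a reboot; on the node, run
# "setenforce 0" as well to switch the running system to permissive.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  grep '^SELINUX=' "$cfg"
}
```

Usage on the node: setenforce 0; disable_selinux_config, after which kubeadm init (or kubeadm join) can be run.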

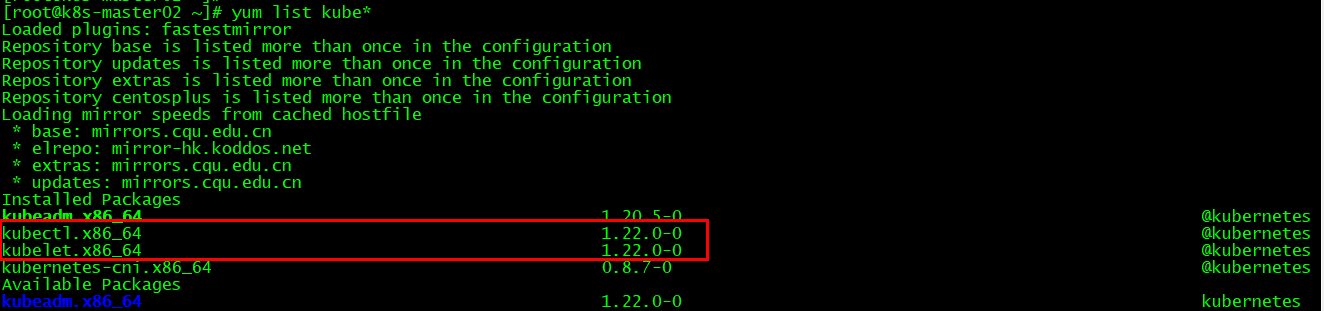

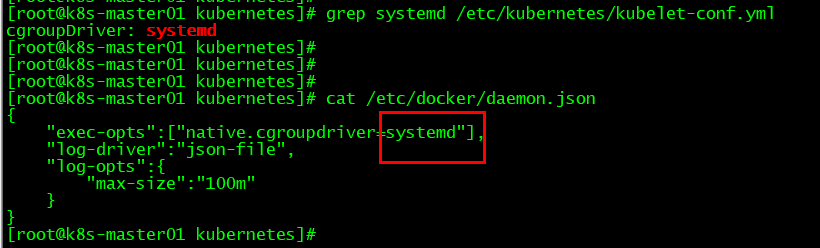

Problem 17: K8S master node initialization fails?

[root@k8s-master01 ~]# kubeadm init --control-plane-endpoint "k8s-master01-lb:16443" --upload-certs

I0813 13:49:07.164695 10196 version.go:254] remote version is much newer: v1.22.0; falling back to: stable-1.20

[init] Using Kubernetes version: v1.20.10

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR KubeletVersion]: the kubelet version is higher than the control plane version. This is not a supported version skew and may lead to a malfunctional cluster. Kubelet version: "1.22.0" Control plane version: "1.20.10"

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

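Cause: the component versions do not match: the installed kubelet (1.22.0) is newer than the control plane version (1.20.10) selected by kubeadm.

Solution: reinstall kubeadm, kubelet and kubectl with matching versions: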

yum -y remove kubectl kubelet && yum -y install kubectl-1.20.5-0 kubelet-1.20.5-0 kubeadm-1.20.5-0
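Before running kubeadm init again, the rule from the preflight error (kubelet must not be newer than the control plane) can be checked locally. A minimal sketch, assuming bash and GNU sort with -V; the helper names are hypothetical, and this covers only the one rule shown in the error, not the full version-skew policy.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: kubelet version must be <= control plane version.
version_le() {
  # True if $1 <= $2 using GNU sort's version ordering.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

check_kubelet_skew() {
  local kubelet="${1#v}" control_plane="${2#v}"   # strip leading "v"
  if version_le "$kubelet" "$control_plane"; then
    echo "ok: kubelet $kubelet <= control plane $control_plane"
  else
    echo "error: kubelet $kubelet is newer than control plane $control_plane"
    return 1
  fi
}
```

Usage: check_kubelet_skew "$(kubelet --version | awk '{print $2}')" v1.20.10, where kubelet --version prints a line such as "Kubernetes v1.20.5".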

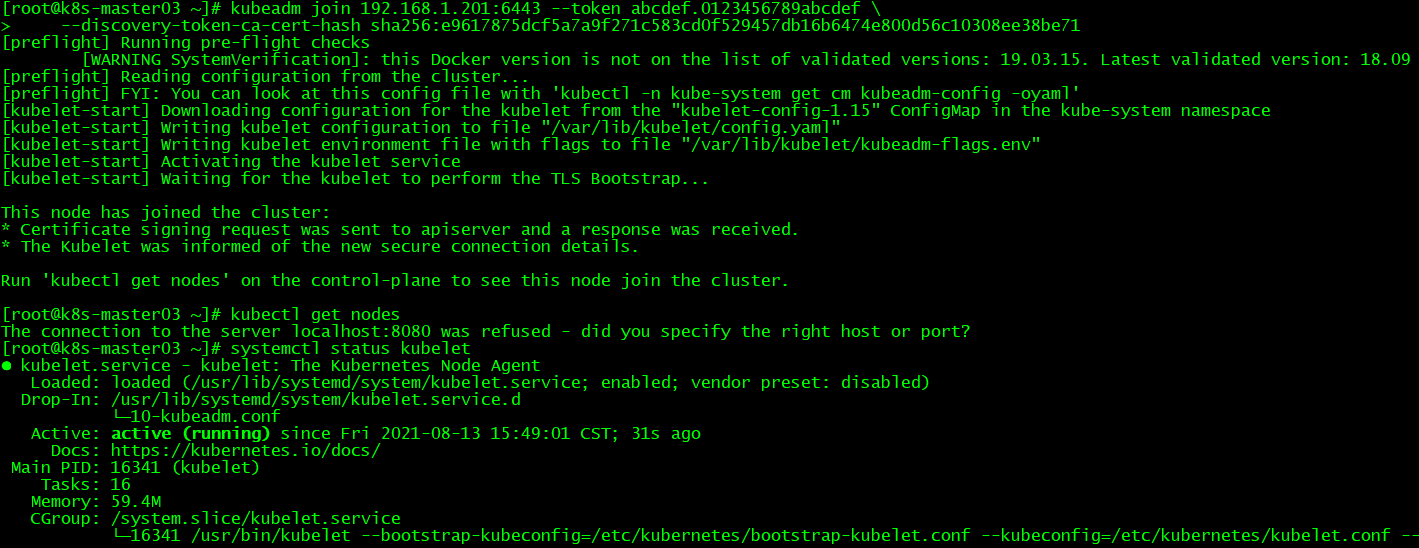

Problem 18: After initializing a kubeadm cluster, node status cannot be queried?

[root@k8s-master01 ~]# kubectl get node

The connection to the server localhost:8080 was refused - did you specify the right host or port?

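Cause: kubectl cannot communicate with the K8S cluster because no kubeconfig has been set up.

Solution: create the .kube directory, which holds kubectl's configuration and cache files for talking to the K8S cluster, and copy admin.conf into it: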

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Problem 20: The calico installation reports an error?

[root@k8s-master01 calico]# kubectl describe pod calico-node-dtqc8 -n kube-system

Name: calico-node-dtqc8

Namespace: kube-system

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned kube-system/calico-node-dtqc8 to k8s-master01

Normal Pulling 10m kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3"

Normal Pulled 10m kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3" in 44.529354646s

Normal Created 10m kubelet Created container install-cni

Normal Started 10m kubelet Started container install-cni

Normal Pulling 10m kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3"

Normal Pulled 9m45s kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3" in 27.031642012s

Normal Created 9m45s kubelet Created container flexvol-driver

Normal Started 9m44s kubelet Started container flexvol-driver

Normal Pulling 9m43s kubelet Pulling image "registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3"

Normal Pulled 8m42s kubelet Successfully pulled image "registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3" in 1m1.78614215s

Normal Created 8m41s kubelet Created container calico-node

Normal Started 8m41s kubelet Started container calico-node

Warning Unhealthy 8m39s kubelet Readiness probe failed: calico/node is not ready: BIRD is not ready: Error querying BIRD: unable to connect to BIRDv4 socket: dial unix /var/run/calico/bird.ctl: connect: connection refused

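Cause: unknown. The readiness probe failed two seconds after the calico-node container started.

Solution: no impact has been observed so far; the warning can be ignored.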

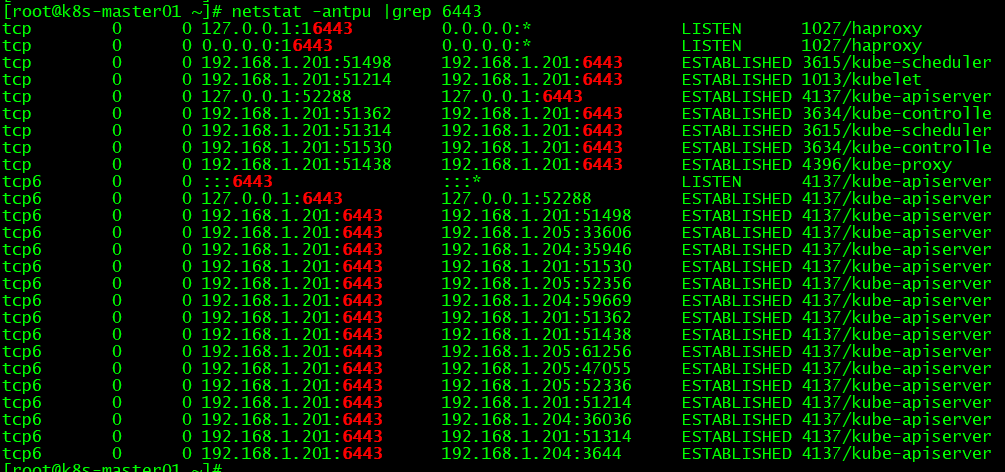

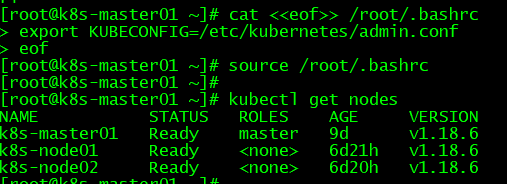

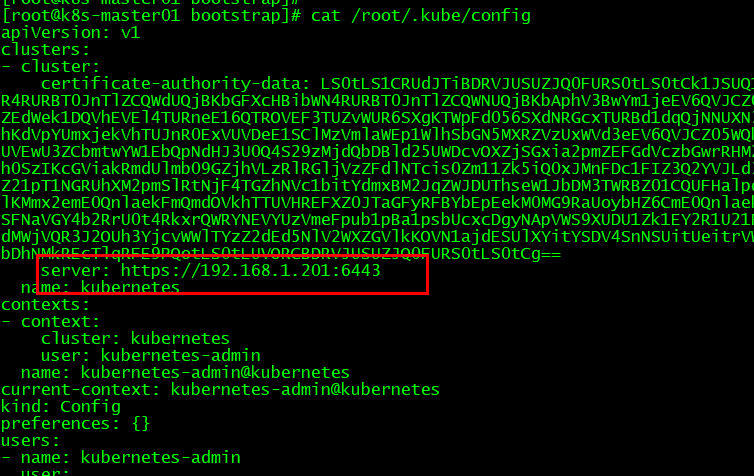

Problem 21: kubectl cannot be used?

[root@k8s-master01 ~]# kubectl get nodes

The connection to the server 192.168.1.201:6443 was refused - did you specify the right host or port?

Cause: the KUBECONFIG environment variable is not set.

Solution:

cat <<EOF >> /root/.bashrc
export KUBECONFIG=/etc/kubernetes/admin.conf
EOF

source /root/.bashrc
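Running the heredoc above twice appends the export line twice. A minimal idempotent variant, assuming bash; the helper name append_once is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line
# is not already present (grep -x matches whole lines, -F literally).
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage: append_once 'export KUBECONFIG=/etc/kubernetes/admin.conf' /root/.bashrc && source /root/.bashrc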

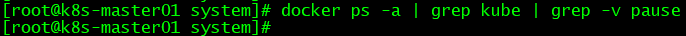

Problem 22: Initialization of the first master node fails?

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

Cause: unknown.

Solution: not yet resolved.

Problem 23: helm install of ingress-nginx fails?

[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx .

Error: cannot re-use a name that is still in use

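Cause: after a failed installation, helm still had a record of the release name ingress-nginx.

Solution: delete the record, then install again.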

[root@k8s-master01 ingress-nginx]# helm uninstall ingress-nginx -n ingress-nginx

Error: uninstall: Release not loaded: ingress-nginx: release: not found


[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx .

NAME: ingress-nginx

LAST DEPLOYED: Tue Aug 31 16:00:45 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export POD_NAME=$(kubectl --namespace ingress-nginx get pods -o jsonpath="{.items[0].metadata.name}" -l "app=ingress-nginx,component=,release=ingress-nginx")

kubectl --namespace ingress-nginx port-forward $POD_NAME 8080:80

echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  namespace: foo
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - backend:
              serviceName: exampleService
              servicePort: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls

Problem 24: helm install of ingress-nginx fails; what does debug mode show?

[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx . --debug

install.go:148: [debug] Original chart version: ""

install.go:165: [debug] CHART PATH: /root/ingress-nginx

Error: could not get apiVersions from Kubernetes: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

helm.go:76: [debug] unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

could not get apiVersions from Kubernetes

helm.sh/helm/v3/pkg/action.(*Configuration).getCapabilities

/home/circleci/helm.sh/helm/pkg/action/action.go:114

helm.sh/helm/v3/pkg/action.(*Install).Run

/home/circleci/helm.sh/helm/pkg/action/install.go:195

main.runInstall

/home/circleci/helm.sh/helm/cmd/helm/install.go:209

main.newInstallCmd.func1

/home/circleci/helm.sh/helm/cmd/helm/install.go:115

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:826

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:914

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:864

main.main

/home/circleci/helm.sh/helm/cmd/helm/helm.go:75

runtime.main

/usr/local/go/src/runtime/proc.go:203

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357

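Cause: a faulty APIService is registered (here metrics.k8s.io/v1beta1, whose backend cannot handle requests).

Solution: delete the faulty APIService.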
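To find which APIService is faulty, the AVAILABLE column of kubectl get apiservice can be filtered. A minimal sketch, assuming bash and the default column layout NAME, SERVICE, AVAILABLE, AGE; the helper name is hypothetical, and it only prints delete commands for review instead of running them. Deleting v1beta1.metrics.k8s.io removes the metrics API until metrics-server is installed again.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get apiservice" output on stdin and
# print a delete command for every APIService whose AVAILABLE column
# (third field, e.g. "False (MissingEndpoints)") is not "True".
unavailable_apiservices() {
  awk 'NR > 1 && $3 != "True" { print "kubectl delete apiservice " $1 }'
}
```

Usage: kubectl get apiservice | unavailable_apiservices, then run the printed commands after checking them.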

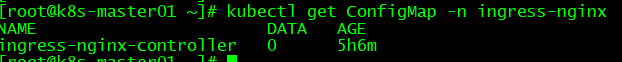

Problem 25: helm install of ingress-nginx still fails; what does debug mode show this time?

[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx . --debug

install.go:148: [debug] Original chart version: ""

install.go:165: [debug] CHART PATH: /root/ingress-nginx

Error: rendered manifests contain a resource that already exists. Unable to continue with install: existing resource conflict: kind: ConfigMap, namespace: ingress-nginx, name: ingress-nginx-controller

helm.go:76: [debug] existing resource conflict: kind: ConfigMap, namespace: ingress-nginx, name: ingress-nginx-controller

rendered manifests contain a resource that already exists. Unable to continue with install

helm.sh/helm/v3/pkg/action.(*Install).Run

/home/circleci/helm.sh/helm/pkg/action/install.go:242

main.runInstall

/home/circleci/helm.sh/helm/cmd/helm/install.go:209

main.newInstallCmd.func1

/home/circleci/helm.sh/helm/cmd/helm/install.go:115

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:826

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:914

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/cobra@v0.0.5/command.go:864

main.main

/home/circleci/helm.sh/helm/cmd/helm/helm.go:75

runtime.main

/usr/local/go/src/runtime/proc.go:203

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357

[root@k8s-master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx . --debug

install.go:148: [debug] Original chart version: ""

install.go:165: [debug] CHART PATH: /root/ingress-nginx

Error: rendered manifests contain a resource that already exists. Unable to continue with install: existing resource conflict: kind: ServiceAccount, namespace: ingress-nginx, name: ingress-nginx

...

Error: rendered manifests contain a resource that already exists. Unable to continue with install: existing resource conflict: kind: ClusterRole, namespace: , name: ingress-nginx

helm.go:76: [debug] existing resource conflict: kind: ClusterRole, namespace: , name: ingress-nginx

rendered manifests contain a resource that already exists. Unable to continue with install

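...

Cause: the ConfigMap and other resources from the earlier installation already exist.

Solution: delete the ConfigMap and the other existing resources: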

kubectl delete ConfigMap ingress-nginx-controller -n ingress-nginx

kubectl delete ServiceAccount ingress-nginx -n ingress-nginx

kubectl delete ClusterRole ingress-nginx

kubectl delete ClusterRoleBinding ingress-nginx

kubectl delete Role ingress-nginx -n ingress-nginx

kubectl delete RoleBinding ingress-nginx -n ingress-nginx

kubectl delete svc ingress-nginx-controller ingress-nginx-controller-admission -n ingress-nginx

kubectl delete DaemonSet ingress-nginx-controller -n ingress-nginx

kubectl delete ValidatingWebhookConfiguration ingress-nginx-admission
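Because helm reports one conflicting resource per attempt, the list above was built up run by run. A minimal sketch, assuming bash and the exact "existing resource conflict: kind: ..., namespace: ..., name: ..." wording shown in the debug output; the helper name is hypothetical. It turns collected error lines into kubectl delete commands for review, omitting -n for cluster-scoped kinds whose namespace is empty.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert helm "existing resource conflict" lines
# (read from stdin) into kubectl delete commands, without running them.
conflicts_to_delete_cmds() {
  sed -n 's/.*existing resource conflict: kind: \([^,]*\), namespace: \([^,]*\), name: \([^ ]*\).*/\1|\2|\3/p' |
    sort -u |
    while IFS='|' read -r kind ns name; do
      if [ -n "$ns" ]; then
        echo "kubectl delete $kind $name -n $ns"
      else
        # Empty namespace: cluster-scoped resource such as ClusterRole.
        echo "kubectl delete $kind $name"
      fi
    done
}
```

Usage: helm install ingress-nginx -n ingress-nginx . --debug 2>&1 | conflicts_to_delete_cmds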

Problem 26: The etcd cluster fails to start?

[root@k8s-master01 ~]# systemctl status etcd

● etcd.service - Etcd Service

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: activating (start) since Mon 2021-09-06 20:03:22 CST; 47s ago

Docs: https://coreos.com/etcd/docs/latest/

Main PID: 1497 (etcd)

Tasks: 8

Memory: 23.2M

CGroup: /system.slice/etcd.service

└─1497 /usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Sep 06 20:04:07 k8s-master01 etcd[1497]: raft2021/09/06 20:04:07 INFO: c05e87400fe17139 is starting a new election at term 928

Sep 06 20:04:07 k8s-master01 etcd[1497]: raft2021/09/06 20:04:07 INFO: c05e87400fe17139 became candidate at term 929

Sep 06 20:04:07 k8s-master01 etcd[1497]: raft2021/09/06 20:04:07 INFO: c05e87400fe17139 received MsgVoteResp from c05e87400fe17139 at term 929

Sep 06 20:04:07 k8s-master01 etcd[1497]: raft2021/09/06 20:04:07 INFO: c05e87400fe17139 [logterm: 1, index: 3] sent MsgVote request to 208ae89f369427bb at term 929

Sep 06 20:04:07 k8s-master01 etcd[1497]: raft2021/09/06 20:04:07 INFO: c05e87400fe17139 [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 929

Sep 06 20:04:09 k8s-master01 etcd[1497]: raft2021/09/06 20:04:09 INFO: c05e87400fe17139 is starting a new election at term 929

Sep 06 20:04:09 k8s-master01 etcd[1497]: raft2021/09/06 20:04:09 INFO: c05e87400fe17139 became candidate at term 930

Sep 06 20:04:09 k8s-master01 etcd[1497]: raft2021/09/06 20:04:09 INFO: c05e87400fe17139 received MsgVoteResp from c05e87400fe17139 at term 930

Sep 06 20:04:09 k8s-master01 etcd[1497]: raft2021/09/06 20:04:09 INFO: c05e87400fe17139 [logterm: 1, index: 3] sent MsgVote request to 208ae89f369427bb at term 930

Sep 06 20:04:09 k8s-master01 etcd[1497]: raft2021/09/06 20:04:09 INFO: c05e87400fe17139 [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 930

[root@k8s-master02 ~]# systemctl status etcd

● etcd.service - Etcd Service

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: activating (auto-restart) (Result: timeout) since Mon 2021-09-06 20:06:34 CST; 6s ago

Docs: https://coreos.com/etcd/docs/latest/

Process: 1531 ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml (code=killed, signal=TERM)

Main PID: 1531 (code=killed, signal=TERM)

Sep 06 20:06:34 k8s-master02 systemd[1]: Failed to start Etcd Service.

Sep 06 20:06:34 k8s-master02 systemd[1]: Unit etcd.service entered failed state.

Sep 06 20:06:34 k8s-master02 systemd[1]: etcd.service failed.

[root@k8s-master03 ~]# systemctl status etcd

● etcd.service - Etcd Service

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Mon 2021-09-06 20:13:50 CST; 1s ago

Docs: https://coreos.com/etcd/docs/latest/

Process: 1781 ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml (code=killed, signal=TERM)

Main PID: 1781 (code=killed, signal=TERM)

Sep 06 20:13:49 k8s-master03 etcd[1781]: raft2021/09/06 20:13:49 INFO: d2cb62beee1ff93f became candidate at term 1247

Sep 06 20:13:49 k8s-master03 etcd[1781]: raft2021/09/06 20:13:49 INFO: d2cb62beee1ff93f received MsgVoteResp from d2cb62beee1ff93f at term 1247

Sep 06 20:13:49 k8s-master03 etcd[1781]: raft2021/09/06 20:13:49 INFO: d2cb62beee1ff93f [logterm: 1, index: 3] sent MsgVote request to 208ae89f369427bb at term 1247

Sep 06 20:13:49 k8s-master03 etcd[1781]: raft2021/09/06 20:13:49 INFO: d2cb62beee1ff93f [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 1247

Sep 06 20:13:49 k8s-master03 etcd[1781]: health check for peer 208ae89f369427bb could not connect: dial tcp 192.168.1.208:2380: i/o timeout

Sep 06 20:13:49 k8s-master03 etcd[1781]: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: i/o timeout

Sep 06 20:13:49 k8s-master03 etcd[1781]: health check for peer 208ae89f369427bb could not connect: dial tcp 192.168.1.208:2380: i/o timeout

Sep 06 20:13:49 k8s-master03 etcd[1781]: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: i/o timeout

Sep 06 20:13:50 k8s-master03 etcd[1781]: publish error: etcdserver: request timed out

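Cause: there were problems with the certificates and the configuration, and the high-availability services were running; in addition, etcd2 and etcd3 had not started, so etcd1 failed to start as well. A three-member etcd cluster needs at least two members to elect a leader, which matches the repeated elections in the logs.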

Sep 6 20:46:33 k8s-master02 Keepalived_vrrp[1036]: /etc/keepalived/check_apiserver.sh exited with status 2

Sep 6 20:46:33 k8s-master02 etcd: raft2021/09/06 20:46:33 INFO: 208ae89f369427bb is starting a new election at term 2231

Sep 6 20:46:33 k8s-master02 etcd: raft2021/09/06 20:46:33 INFO: 208ae89f369427bb became candidate at term 2232

Sep 6 20:46:33 k8s-master02 etcd: raft2021/09/06 20:46:33 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2232

Sep 6 20:46:33 k8s-master02 etcd: raft2021/09/06 20:46:33 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2232

Sep 6 20:46:33 k8s-master02 etcd: raft2021/09/06 20:46:33 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2232

Sep 6 20:46:34 k8s-master02 etcd: raft2021/09/06 20:46:34 INFO: 208ae89f369427bb is starting a new election at term 2232

Sep 6 20:46:34 k8s-master02 etcd: raft2021/09/06 20:46:34 INFO: 208ae89f369427bb became candidate at term 2233

Sep 6 20:46:34 k8s-master02 etcd: raft2021/09/06 20:46:34 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2233

Sep 6 20:46:34 k8s-master02 etcd: raft2021/09/06 20:46:34 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2233

Sep 6 20:46:34 k8s-master02 etcd: raft2021/09/06 20:46:34 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2233

Sep 6 20:46:36 k8s-master02 etcd: raft2021/09/06 20:46:36 INFO: 208ae89f369427bb is starting a new election at term 2233

Sep 6 20:46:36 k8s-master02 etcd: raft2021/09/06 20:46:36 INFO: 208ae89f369427bb became candidate at term 2234

Sep 6 20:46:36 k8s-master02 etcd: raft2021/09/06 20:46:36 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2234

Sep 6 20:46:36 k8s-master02 etcd: raft2021/09/06 20:46:36 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2234

Sep 6 20:46:36 k8s-master02 etcd: raft2021/09/06 20:46:36 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2234

Sep 6 20:46:37 k8s-master02 etcd: raft2021/09/06 20:46:37 INFO: 208ae89f369427bb is starting a new election at term 2234

Sep 6 20:46:37 k8s-master02 etcd: raft2021/09/06 20:46:37 INFO: 208ae89f369427bb became candidate at term 2235

Sep 6 20:46:37 k8s-master02 etcd: raft2021/09/06 20:46:37 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2235

Sep 6 20:46:37 k8s-master02 etcd: raft2021/09/06 20:46:37 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2235

Sep 6 20:46:37 k8s-master02 etcd: raft2021/09/06 20:46:37 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2235

Sep 6 20:46:38 k8s-master02 etcd: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: connect: no route to host

Sep 6 20:46:38 k8s-master02 etcd: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: connect: no route to host

Sep 6 20:46:38 k8s-master02 etcd: health check for peer d2cb62beee1ff93f could not connect: dial tcp 192.168.1.209:2380: connect: no route to host

Sep 6 20:46:38 k8s-master02 etcd: health check for peer d2cb62beee1ff93f could not connect: dial tcp 192.168.1.209:2380: connect: no route to host

Sep 6 20:46:38 k8s-master02 Keepalived_vrrp[1036]: /etc/keepalived/check_apiserver.sh exited with status 2

Sep 6 20:46:39 k8s-master02 etcd: raft2021/09/06 20:46:39 INFO: 208ae89f369427bb is starting a new election at term 2235

Sep 6 20:46:39 k8s-master02 etcd: raft2021/09/06 20:46:39 INFO: 208ae89f369427bb became candidate at term 2236

Sep 6 20:46:39 k8s-master02 etcd: raft2021/09/06 20:46:39 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2236

Sep 6 20:46:39 k8s-master02 etcd: raft2021/09/06 20:46:39 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2236

Sep 6 20:46:39 k8s-master02 etcd: raft2021/09/06 20:46:39 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2236

Sep 6 20:46:40 k8s-master02 etcd: publish error: etcdserver: request timed out

Sep 6 20:46:41 k8s-master02 etcd: raft2021/09/06 20:46:41 INFO: 208ae89f369427bb is starting a new election at term 2236

Sep 6 20:46:41 k8s-master02 etcd: raft2021/09/06 20:46:41 INFO: 208ae89f369427bb became candidate at term 2237

Sep 6 20:46:41 k8s-master02 etcd: raft2021/09/06 20:46:41 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2237

Sep 6 20:46:41 k8s-master02 etcd: raft2021/09/06 20:46:41 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2237

Sep 6 20:46:41 k8s-master02 etcd: raft2021/09/06 20:46:41 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2237

Sep 6 20:46:42 k8s-master02 etcd: raft2021/09/06 20:46:42 INFO: 208ae89f369427bb is starting a new election at term 2237

Sep 6 20:46:42 k8s-master02 etcd: raft2021/09/06 20:46:42 INFO: 208ae89f369427bb became candidate at term 2238

Sep 6 20:46:42 k8s-master02 etcd: raft2021/09/06 20:46:42 INFO: 208ae89f369427bb received MsgVoteResp from 208ae89f369427bb at term 2238

Sep 6 20:46:42 k8s-master02 etcd: raft2021/09/06 20:46:42 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to c05e87400fe17139 at term 2238

Sep 6 20:46:42 k8s-master02 etcd: raft2021/09/06 20:46:42 INFO: 208ae89f369427bb [logterm: 1, index: 3] sent MsgVote request to d2cb62beee1ff93f at term 2238

Sep 6 20:46:43 k8s-master02 etcd: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: i/o timeout

Sep 6 20:46:43 k8s-master02 etcd: health check for peer c05e87400fe17139 could not connect: dial tcp 192.168.1.207:2380: i/o timeout

Sep 6 20:46:43 k8s-master02 etcd: health check for peer d2cb62beee1ff93f could not connect: dial tcp 192.168.1.209:2380: i/o timeout

Sep 6 20:46:43 k8s-master02 etcd: health check for peer d2cb62beee1ff93f could not connect: dial tcp 192.168.1.209:2380: i/o timeout

Sep 6 20:46:43 k8s-master02 Keepalived_vrrp[1036]: /etc/keepalived/check_apiserver.sh exited with status 2

Sep 6 20:46:44 k8s-master02 etcd: raft2021/09/06 20:46:44 INFO: 208ae89f369427bb is starting a new election at term 2238

Sep 6 21:22:49 k8s-master03 etcd: raft2021/09/06 21:22:49 INFO: 9eb1f144b60ad8c6 became candidate at term 64

Sep 6 21:22:49 k8s-master03 etcd: raft2021/09/06 21:22:49 INFO: 9eb1f144b60ad8c6 received MsgVoteResp from 9eb1f144b60ad8c6 at term 64

Sep 6 21:22:49 k8s-master03 etcd: raft2021/09/06 21:22:49 INFO: 9eb1f144b60ad8c6 [logterm: 1, index: 3] sent MsgVote request to 7f890ac3cab00125 at term 64

Sep 6 21:22:49 k8s-master03 etcd: raft2021/09/06 21:22:49 INFO: 9eb1f144b60ad8c6 [logterm: 1, index: 3] sent MsgVote request to ef155f75af77a2e6 at term 64

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37322" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37324" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37330" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37332" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37338" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37340" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37346" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:49 k8s-master03 etcd: rejected connection from "192.168.1.202:37348" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37354" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37356" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37364" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37362" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37370" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37372" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37380" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37382" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: health check for peer 7f890ac3cab00125 could not connect: dial tcp 192.168.1.201:2380: connect: connection refused

Sep 6 21:22:50 k8s-master03 etcd: health check for peer ef155f75af77a2e6 could not connect: x509: certificate is valid for 127.0.0.1, 192.168.1.206, 192.168.1.207, 192.168.1.208, not 192.168.1.202

Sep 6 21:22:50 k8s-master03 etcd: health check for peer 7f890ac3cab00125 could not connect: dial tcp 192.168.1.201:2380: connect: connection refused

Sep 6 21:22:50 k8s-master03 etcd: health check for peer ef155f75af77a2e6 could not connect: x509: certificate is valid for 127.0.0.1, 192.168.1.206, 192.168.1.207, 192.168.1.208, not 192.168.1.202

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37388" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 etcd: rejected connection from "192.168.1.202:37390" (error "remote error: tls: bad certificate", ServerName "")

Sep 6 21:22:50 k8s-master03 systemd: etcd.service start operation timed out. Terminating.

Sep 6 21:22:50 k8s-master03 systemd: Failed to start Etcd Service.

Sep 6 21:22:50 k8s-master03 systemd: Unit etcd.service entered failed state.

Sep 6 21:22:50 k8s-master03 systemd: etcd.service failed.

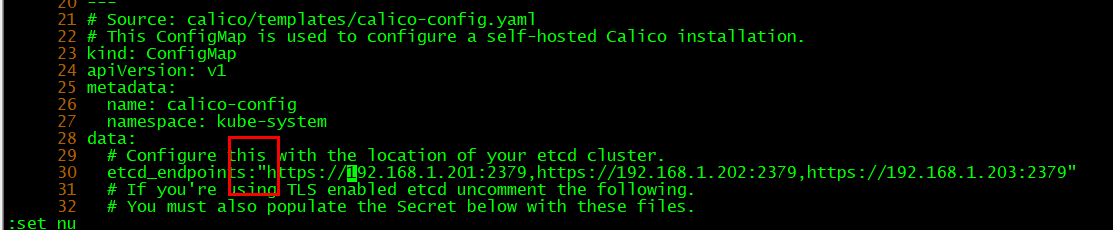

Solution:

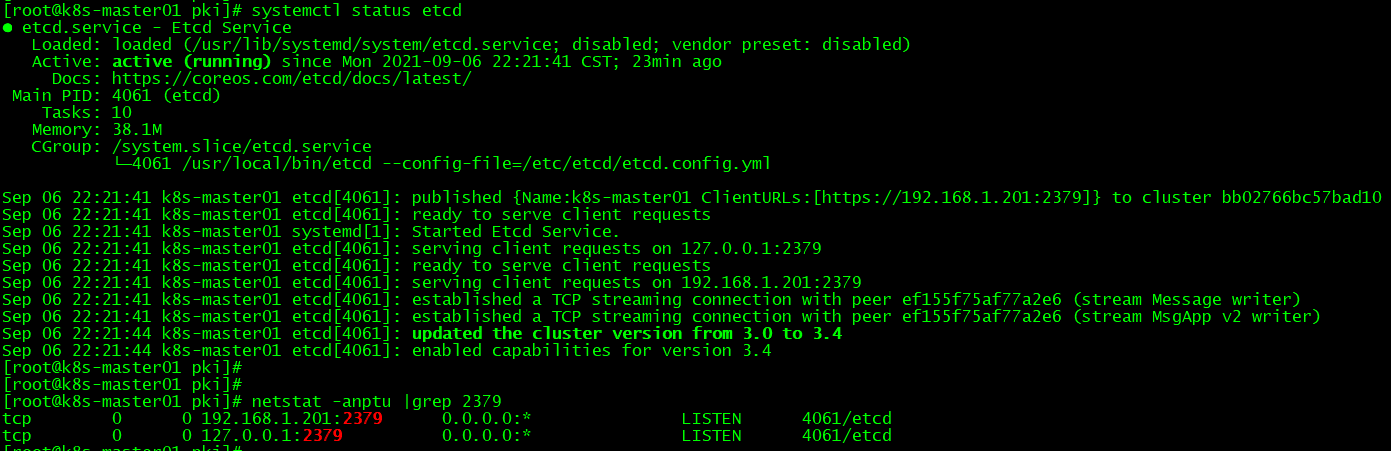

Regenerate the certificate as follows:

cfssl gencert \
  -ca=/etc/etcd/ssl/etcd-ca.pem \
  -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
  -config=ca-config.json \
  -hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,192.168.1.201,192.168.1.202,192.168.1.203,192.168.1.206,192.168.1.207,192.168.1.208,192.168.1.209 \
  -profile=kubernetes \
  etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd # every IP and its hostname must be listed

systemctl stop etcd && rm -rf /var/lib/etcd/* && ls -l /var/lib/etcd/ # stop etcd and delete its runtime data; the high-availability services must NOT be running

tail /var/log/messages

Sep 6 22:21:41 k8s-master01 systemd: Started Etcd Service.

Sep 6 22:21:41 k8s-master01 etcd: serving client requests on 127.0.0.1:2379

Sep 6 22:21:41 k8s-master01 etcd: ready to serve client requests

Sep 6 22:21:41 k8s-master01 etcd: serving client requests on 192.168.1.201:2379

Sep 6 22:21:41 k8s-master01 etcd: established a TCP streaming connection with peer ef155f75af77a2e6 (stream Message writer)

Sep 6 22:21:41 k8s-master01 etcd: established a TCP streaming connection with peer ef155f75af77a2e6 (stream MsgApp v2 writer)

Sep 6 22:21:44 k8s-master01 etcd: updated the cluster version from 3.0 to 3.4

Sep 6 22:21:44 k8s-master01 etcd: enabled capabilities for version 3.4

Sep 6 22:30:01 k8s-master01 systemd: Started Session 16 of user root.

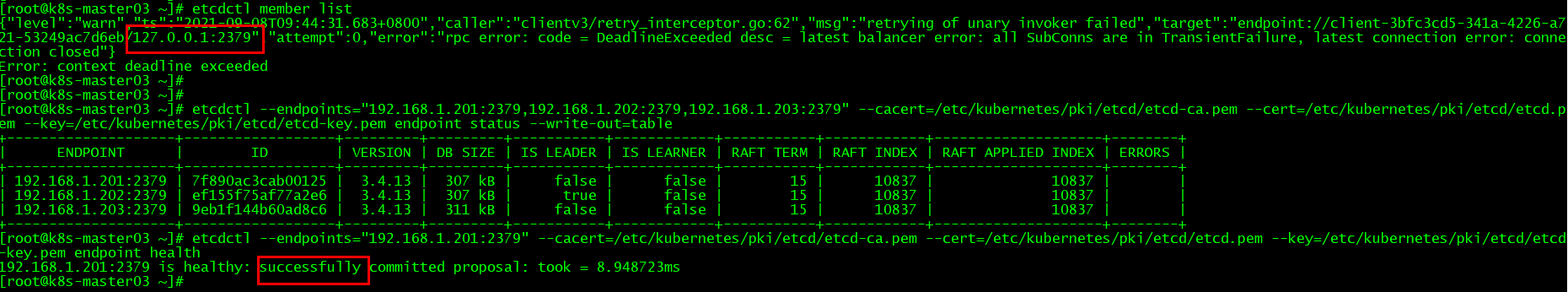

Problem 27: the etcd service on one node is running normally, but etcdctl reports an rpc error?

[root@k8s-master01 pki]# ETCDCTL_API=3 etcdctl member list

{"level":"warn","ts":"2021-09-06T22:58:58.455+0800","caller":"clientv3/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"endpoint://client-40bd4115-87ef-4e13-8d82-28ede2ed9ff3/127.0.0.1:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = latest balancer error: all SubConns are in TransientFailure, latest connection error: connection closed"}

Error: context deadline exceeded

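Cause analysis: the remote procedure call (RPC) error against 127.0.0.1:2379 does not stop the etcd cluster from being used, but it does reduce performance.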

Solution: it can be ignored.
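If you still want etcdctl to return results, note that the kube-apiserver log in Problem 28 connects to etcd at https://192.168.1.201:2379, https://192.168.1.202:2379 and https://192.168.1.203:2379, which suggests the client port requires TLS. A bare etcdctl call uses its default endpoint 127.0.0.1:2379 without certificates, which may be why it fails this way. The sketch below passes the certificates explicitly; the wrapper name etcdctl_tls is hypothetical, and it assumes the cert/key written by the cfssljson command above are also accepted as a client certificate (the "kubernetes" profile in ca-config.json would need to allow client auth).

```shell
#!/usr/bin/env bash
# Assumed paths: the CA used above, plus the files cfssljson writes for
# "-bare /etc/etcd/ssl/etcd" (etcd.pem and etcd-key.pem).
ETCD_CA=/etc/etcd/ssl/etcd-ca.pem
ETCD_CERT=/etc/etcd/ssl/etcd.pem
ETCD_KEY=/etc/etcd/ssl/etcd-key.pem
# The three members seen in the kube-apiserver log.
ETCD_ENDPOINTS=https://192.168.1.201:2379,https://192.168.1.202:2379,https://192.168.1.203:2379

# Hypothetical wrapper: run etcdctl with the v3 API over TLS against all members,
# forwarding any subcommand and options given as arguments.
etcdctl_tls() {
  ETCDCTL_API=3 etcdctl \
    --endpoints="$ETCD_ENDPOINTS" \
    --cacert="$ETCD_CA" --cert="$ETCD_CERT" --key="$ETCD_KEY" \
    "$@"
}
```

Typical calls would be etcdctl_tls member list --write-out=table and etcdctl_tls endpoint health.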

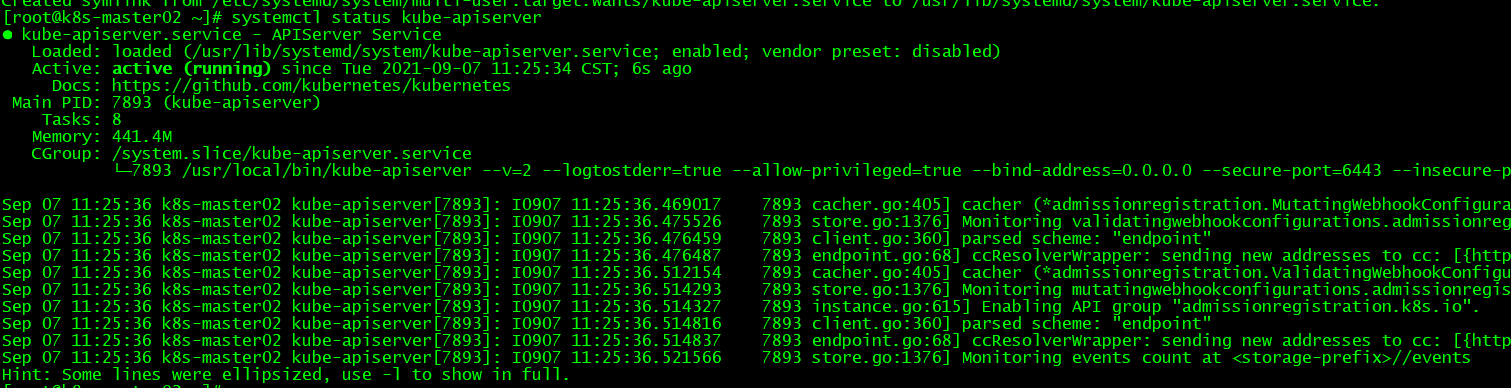

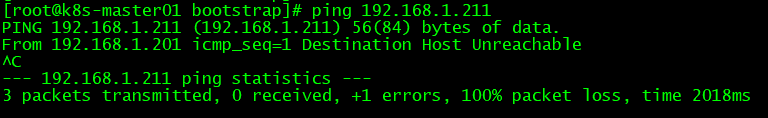

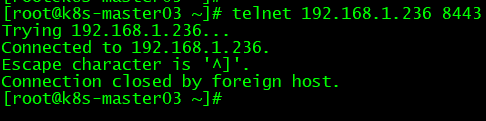

Problem 28: kube-apiserver fails to start?

[root@k8s-master01 ~]# systemctl enable --now kube-apiserver

Failed to execute operation: No such file or directory

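Cause analysis: the service file was put in the wrong location. systemctl only finds unit files in systemd's unit directories (for example /usr/lib/systemd/system or /etc/systemd/system), and systemctl daemon-reload must be run after copying a unit file there.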

I0907 10:13:14.991428 6008 store.go:1376] Monitoring limitranges count at <storage-prefix>//limitranges

I0907 10:13:14.997097 6008 cacher.go:405] cacher (*core.LimitRange): initialized

I0907 10:13:14.999869 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:14.999947 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.008518 6008 store.go:1376] Monitoring resourcequotas count at <storage-prefix>//resourcequotas

I0907 10:13:15.009517 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.009542 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.027691 6008 store.go:1376] Monitoring secrets count at <storage-prefix>//secrets

I0907 10:13:15.028338 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.028366 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.031897 6008 cacher.go:405] cacher (*core.ResourceQuota): initialized

I0907 10:13:15.032423 6008 cacher.go:405] cacher (*core.Secret): initialized

I0907 10:13:15.040312 6008 store.go:1376] Monitoring persistentvolumes count at <storage-prefix>//persistentvolumes

I0907 10:13:15.041293 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.041340 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.049139 6008 cacher.go:405] cacher (*core.PersistentVolume): initialized

I0907 10:13:15.049286 6008 store.go:1376] Monitoring persistentvolumeclaims count at <storage-prefix>//persistentvolumeclaims

I0907 10:13:15.050411 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.050509 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.052529 6008 cacher.go:405] cacher (*core.PersistentVolumeClaim): initialized

I0907 10:13:15.057764 6008 store.go:1376] Monitoring configmaps count at <storage-prefix>//configmaps

I0907 10:13:15.058496 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.058550 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.065377 6008 cacher.go:405] cacher (*core.ConfigMap): initialized

I0907 10:13:15.067289 6008 store.go:1376] Monitoring namespaces count at <storage-prefix>//namespaces

I0907 10:13:15.068480 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.068510 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.077229 6008 cacher.go:405] cacher (*core.Namespace): initialized

I0907 10:13:15.077465 6008 store.go:1376] Monitoring endpoints count at <storage-prefix>//services/endpoints

I0907 10:13:15.078124 6008 client.go:360] parsed scheme: "endpoint"

I0907 10:13:15.078166 6008 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 10:13:15.084988 6008 cacher.go:405] cacher (*core.Endpoints): initialized

I0907 10:13:15.087716 6008 store.go:1376] Monitoring nodes count at <storage-prefix>//minions

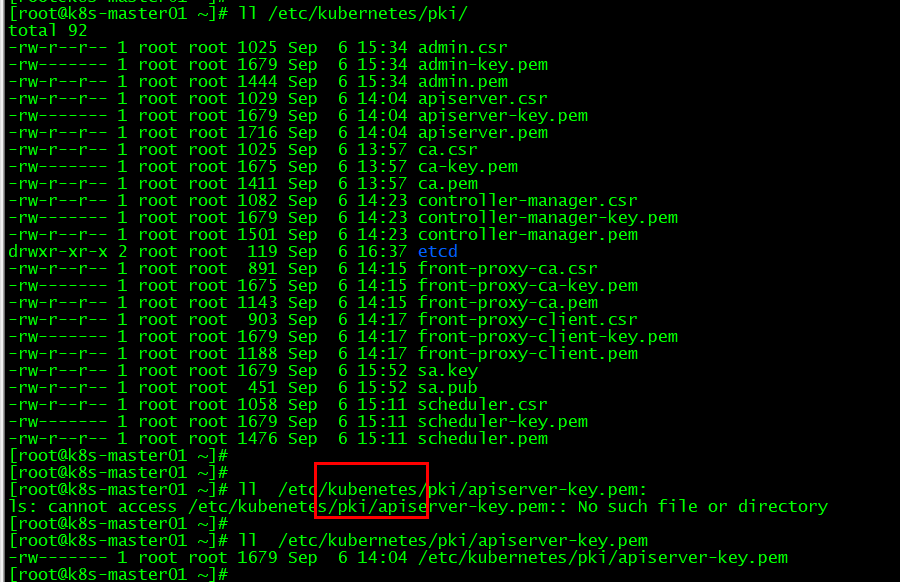

Error: error building core storage: open /etc/kubenetes/pki/apiserver-key.pem: no such file or directory

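Solution: correct the file locations in the configuration. Note that the path in the error above is /etc/kubenetes/pki/ (missing an "r"); the certificate paths in the kube-apiserver configuration should point to /etc/kubernetes/pki/.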
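Because the failing path /etc/kubenetes/pki/apiserver-key.pem comes from a one-letter typo, one way to catch this class of mistake is to check that every certificate path a unit or config file references actually exists before starting the service. A minimal sketch; the helper name missing_pem_paths is hypothetical, and it assumes the flags live in a file such as the kube-apiserver unit file (pass whichever file you actually use).

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every absolute *.pem path referenced in a
# unit/config file that does not exist on disk.
# Usage: missing_pem_paths FILE
missing_pem_paths() {
  local conf=$1 path
  # -o prints only the matched path; a path ends at whitespace, '=', '"' or ','.
  # sort -u removes paths referenced more than once.
  grep -oE '/[^[:space:]=",]+\.pem' "$conf" | sort -u | while read -r path; do
    [ -e "$path" ] || echo "$path"
  done
}
```

Running it against the kube-apiserver unit file before systemctl enable --now kube-apiserver would have printed /etc/kubenetes/pki/apiserver-key.pem along with any other misspelled certificate paths.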

Problem 29: kube-apiserver fails to start?

I0907 11:10:10.104401 7577 dynamic_cafile_content.go:129] Loaded a new CA Bundle and Verifier for "client-ca-bundle::/etc/kubernetes/pki/ca.pem"

I0907 11:10:10.104462 7577 dynamic_cafile_content.go:129] Loaded a new CA Bundle and Verifier for "request-header::/etc/kubernetes/pki/front-proxy-ca.pem"

I0907 11:10:10.105150 7577 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0907 11:10:10.105167 7577 plugins.go:161] Loaded 10 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0907 11:10:10.108787 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.108840 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.118716 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.118776 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.127421 7577 store.go:1376] Monitoring customresourcedefinitions.apiextensions.k8s.io count at <storage-prefix>//apiextensions.k8s.io/customresourcedefinitions

I0907 11:10:10.128585 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.128648 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.128859 7577 client.go:360] parsed scheme: "passthrough"

I0907 11:10:10.128920 7577 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{https://192.168.1.201:2379 <nil> 0 <nil>}] <nil> <nil>}

I0907 11:10:10.129014 7577 clientconn.go:948] ClientConn switching balancer to "pick_first"

I0907 11:10:10.129173 7577 client.go:360] parsed scheme: "passthrough"

I0907 11:10:10.129187 7577 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{https://192.168.1.202:2379 <nil> 0 <nil>}] <nil> <nil>}

I0907 11:10:10.129191 7577 clientconn.go:948] ClientConn switching balancer to "pick_first"

I0907 11:10:10.129357 7577 client.go:360] parsed scheme: "passthrough"

I0907 11:10:10.129378 7577 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{https://192.168.1.203:2379 <nil> 0 <nil>}] <nil> <nil>}

I0907 11:10:10.129384 7577 clientconn.go:948] ClientConn switching balancer to "pick_first"

I0907 11:10:10.130065 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000609350, {CONNECTING <nil>}

I0907 11:10:10.130106 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc0006095c0, {CONNECTING <nil>}

I0907 11:10:10.130158 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000609810, {CONNECTING <nil>}

I0907 11:10:10.141244 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc0006095c0, {READY <nil>}

I0907 11:10:10.141914 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000609810, {READY <nil>}

I0907 11:10:10.142132 7577 store.go:1376] Monitoring customresourcedefinitions.apiextensions.k8s.io count at <storage-prefix>//apiextensions.k8s.io/customresourcedefinitions

I0907 11:10:10.143041 7577 cacher.go:405] cacher (*apiextensions.CustomResourceDefinition): initialized

I0907 11:10:10.144261 7577 controlbuf.go:508] transport: loopyWriter.run returning. connection error: desc = "transport is closing"

I0907 11:10:10.145268 7577 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000609350, {READY <nil>}

I0907 11:10:10.145679 7577 controlbuf.go:508] transport: loopyWriter.run returning. connection error: desc = "transport is closing"

I0907 11:10:10.159351 7577 controlbuf.go:508] transport: loopyWriter.run returning. connection error: desc = "transport is closing"

I0907 11:10:10.159498 7577 cacher.go:405] cacher (*apiextensions.CustomResourceDefinition): initialized

I0907 11:10:10.173307 7577 instance.go:289] Using reconciler: lease

I0907 11:10:10.173948 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.173992 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.184871 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.184916 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.193173 7577 store.go:1376] Monitoring podtemplates count at <storage-prefix>//podtemplates

I0907 11:10:10.193829 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.193864 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.200520 7577 cacher.go:405] cacher (*core.PodTemplate): initialized

I0907 11:10:10.204003 7577 store.go:1376] Monitoring events count at <storage-prefix>//events

I0907 11:10:10.204885 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.204931 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.211519 7577 store.go:1376] Monitoring limitranges count at <storage-prefix>//limitranges

I0907 11:10:10.214437 7577 client.go:360] parsed scheme: "endpoint"

I0907 11:10:10.214499 7577 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://192.168.1.201:2379 <nil> 0 <nil>} {https://192.168.1.202:2379 <nil> 0 <nil>} {https://192.168.1.203:2379 <nil> 0 <nil>}]

I0907 11:10:10.217852 7577 cacher.go:405] cacher (*core.LimitRange): initialized

I0907 11:10:10.225868 7577 store.go:1376] Monitoring resourcequotas count at <storage-prefix>//resourcequotas

I0907 11:10:10.228182 7577 client.go:360] parsed scheme: "endpoint"