```c
int
MAIN(int argc, char **argv)
{
    int ret;
    unsigned lcore_id;

    ret = rte_eal_init(argc, argv);
    if (ret < 0)
        rte_panic("Cannot init EAL\n");

    /* call lcore_hello() on every slave lcore */
    RTE_LCORE_FOREACH_SLAVE(lcore_id) {
        rte_eal_remote_launch(lcore_hello, NULL, lcore_id);
    }

    /* call it on master lcore too */
    lcore_hello(NULL);

    rte_eal_mp_wait_lcore();
    return 0;
}
```
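The launch/wait pattern in `main()` can be imitated with plain POSIX threads. The sketch below makes some simplifying assumptions. `launch_on_all()` and `hello_job()` are hypothetical names, not DPDK APIs. Each call creates fresh threads, whereas the EAL creates its lcore threads once inside `rte_eal_init()` and reuses them. No thread is pinned to a core.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

#define MAX_WORKERS 64

typedef int (*job_fn)(void *arg);

struct job {
    job_fn fn;
    void *arg;
    int ret;
};

/* Thread entry: run the job and keep its return value. */
static void *job_entry(void *p)
{
    struct job *j = p;
    j->ret = j->fn(j->arg);
    return NULL;
}

/* Run fn on n_workers extra threads and on the calling thread, then wait
 * for all of them. Returns how many of the n_workers + 1 calls returned 0,
 * or -1 on error. */
int launch_on_all(job_fn fn, void *arg, int n_workers)
{
    pthread_t tids[MAX_WORKERS];
    struct job jobs[MAX_WORKERS];
    int created = 0, ok = 0;

    if (n_workers < 0 || n_workers > MAX_WORKERS)
        return -1;
    /* roughly RTE_LCORE_FOREACH_SLAVE + rte_eal_remote_launch() */
    for (; created < n_workers; created++) {
        jobs[created] = (struct job){ fn, arg, -1 };
        if (pthread_create(&tids[created], NULL, job_entry, &jobs[created]) != 0)
            break;
    }
    /* roughly lcore_hello(NULL) on the master lcore */
    if (created == n_workers && fn(arg) == 0)
        ok++;
    /* roughly rte_eal_mp_wait_lcore(): join every thread that was started */
    for (int i = 0; i < created; i++) {
        pthread_join(tids[i], NULL);
        if (jobs[i].ret == 0)
            ok++;
    }
    return created == n_workers ? ok : -1;
}

/* Hypothetical job standing in for lcore_hello(): counts how often it ran. */
atomic_int hello_calls;

int hello_job(void *arg)
{
    (void)arg;
    atomic_fetch_add(&hello_calls, 1);
    return 0;
}
```

With three workers, `launch_on_all(hello_job, NULL, 3)` runs the job four times: three times on the workers and once on the caller.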

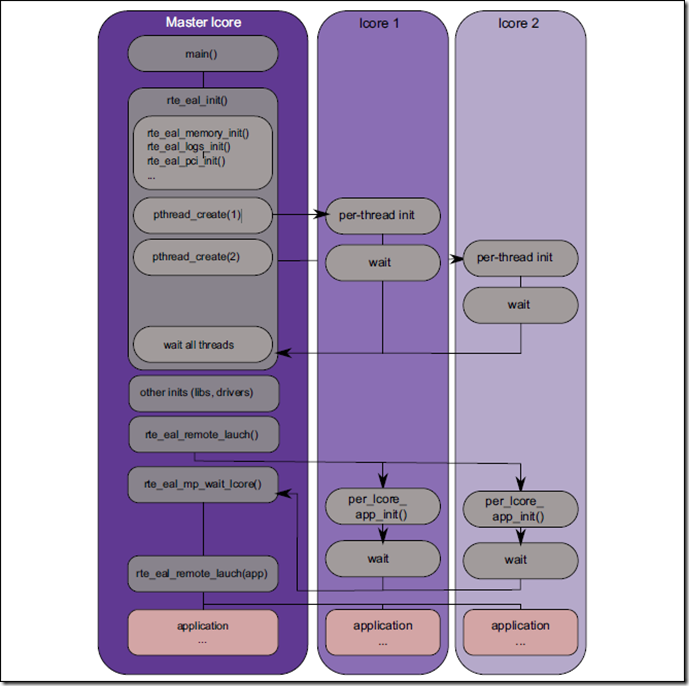

程序的流程如下图所示:

The code first initializes the Environment Abstraction Layer (EAL). The EAL mainly provides the following services:

- Intel® DPDK loading and launching
- Support for multi-process and multi-thread execution types
- Core affinity/assignment procedures
- System memory allocation/de-allocation
- Atomic/lock operations
- Time reference
- PCI bus access
- Trace and debug functions
- CPU feature identification
- Interrupt handling
- Alarm operations


```c
/* Launch threads, called at application init(). */
int
rte_eal_init(int argc, char **argv)
{
    int i, fctret, ret;
    pthread_t thread_id;
    static rte_atomic32_t run_once = RTE_ATOMIC32_INIT(0);
    struct shared_driver *solib = NULL;
    const char *logid;

    /* may only run once */
    if (!rte_atomic32_test_and_set(&run_once))
        return -1;

    logid = strrchr(argv[0], '/');
    logid = strdup(logid ? logid + 1: argv[0]);

    thread_id = pthread_self();

    if (rte_eal_log_early_init() < 0)
        rte_panic("Cannot init early logs\n");

    /* detect the CPUs (lcores) present in the system */
    if (rte_eal_cpu_init() < 0)
        rte_panic("Cannot detect lcores\n");

    /* fill internal_config from the command-line arguments */
    fctret = eal_parse_args(argc, argv);
    if (fctret < 0)
        exit(1);

    /* record the hugepage sizes and page counts available in the system in
     * internal_config.hugepage_info, for the memory initialization below */
    if (internal_config.no_hugetlbfs == 0 &&
            internal_config.process_type != RTE_PROC_SECONDARY &&
            internal_config.xen_dom0_support == 0 &&
            eal_hugepage_info_init() < 0)
        rte_panic("Cannot get hugepage information\n");

    /* total hugepage memory in the system, computed as hugepage_sz * num_pages */
    if (internal_config.memory == 0 && internal_config.force_sockets == 0) {
        if (internal_config.no_hugetlbfs)
            internal_config.memory = MEMSIZE_IF_NO_HUGE_PAGE;
        else
            internal_config.memory = eal_get_hugepage_mem_size();
    }

    if (internal_config.vmware_tsc_map == 1) {
#ifdef RTE_LIBRTE_EAL_VMWARE_TSC_MAP_SUPPORT
        rte_cycles_vmware_tsc_map = 1;
        RTE_LOG (DEBUG, EAL, "Using VMWARE TSC MAP, "
                "you must have monitor_control.pseudo_perfctr = TRUE\n");
#else
        RTE_LOG (WARNING, EAL, "Ignoring --vmware-tsc-map because "
                "RTE_LIBRTE_EAL_VMWARE_TSC_MAP_SUPPORT is not set\n");
#endif
    }

    rte_srand(rte_rdtsc());

    /* create the .rte_config file (in /var/run or in the user's home
     * directory) that holds the memory configuration (struct rte_mem_config);
     * a RTE_PROC_SECONDARY process instead waits for the PRIMARY to finish
     * initializing memory */
    rte_config_init();

    /* request I/O privilege */
    if (rte_eal_iopl_init() == 0)
        rte_config.flags |= EAL_FLG_HIGH_IOPL;

    /* scan every PCI device in the system and link a matching device
     * structure for each into device_list */
    if (rte_eal_pci_init() < 0)
        rte_panic("Cannot init PCI\n");

#ifdef RTE_LIBRTE_IVSHMEM
    if (rte_eal_ivshmem_init() < 0)
        rte_panic("Cannot init IVSHMEM\n");
#endif

    /* initialize rte_config->mem_config and map the hugepages to the
     * rte_map* files under the hugetlbfs mount point */
    if (rte_eal_memory_init() < 0)
        rte_panic("Cannot init memory\n");

    /* the directories are locked during eal_hugepage_info_init */
    eal_hugedirs_unlock();

    /* initialize the memory available to memzones */
    if (rte_eal_memzone_init() < 0)
        rte_panic("Cannot init memzone\n");

    /* initialize the tail queues (linked lists) kept in mem_config */
    if (rte_eal_tailqs_init() < 0)
        rte_panic("Cannot init tail queues for objects\n");

#ifdef RTE_LIBRTE_IVSHMEM
    if (rte_eal_ivshmem_obj_init() < 0)
        rte_panic("Cannot init IVSHMEM objects\n");
#endif

    if (rte_eal_log_init(logid, internal_config.syslog_facility) < 0)
        rte_panic("Cannot init logs\n");

    /* alarms? details still to be analyzed */
    if (rte_eal_alarm_init() < 0)
        rte_panic("Cannot init interrupt-handling thread\n");

    /* create the pipe used to communicate with the driver and start the
     * interrupt-handling thread */
    if (rte_eal_intr_init() < 0)
        rte_panic("Cannot init interrupt-handling thread\n");

    /* timers */
    if (rte_eal_timer_init() < 0)
        rte_panic("Cannot init HPET or TSC timers\n");

    /* check whether the master core's socket has any memory */
    eal_check_mem_on_local_socket();

    /* mark initialization as complete */
    rte_eal_mcfg_complete();

    /* initialize the devices on the whitelist */
    if (rte_eal_non_pci_ethdev_init() < 0)
        rte_panic("Cannot init non-PCI eth_devs\n");

    /* load shared libraries */
    TAILQ_FOREACH(solib, &solib_list, next) {
        solib->lib_handle = dlopen(solib->name, RTLD_NOW);
        if ((solib->lib_handle == NULL) && (solib->name[0] != '/')) {
            /* relative path: try again with "./" prefix */
            char sopath[PATH_MAX];
            snprintf(sopath, sizeof(sopath), "./%s", solib->name);
            solib->lib_handle = dlopen(sopath, RTLD_NOW);
        }
        if (solib->lib_handle == NULL)
            RTE_LOG(WARNING, EAL, "%s\n", dlerror());
    }

    RTE_LOG(DEBUG, EAL, "Master core %u is ready (tid=%x)\n",
        rte_config.master_lcore, (int)thread_id);

    /* create a thread for each slave lcore */
    RTE_LCORE_FOREACH_SLAVE(i) {

        /*
         * create communication pipes between master thread
         * and children
         */
        if (pipe(lcore_config[i].pipe_master2slave) < 0)
            rte_panic("Cannot create pipe\n");
        if (pipe(lcore_config[i].pipe_slave2master) < 0)
            rte_panic("Cannot create pipe\n");

        lcore_config[i].state = WAIT;

        /* create a thread for each lcore */
        ret = pthread_create(&lcore_config[i].thread_id, NULL,
                     eal_thread_loop, NULL);
        if (ret != 0)
            rte_panic("Cannot create thread\n");
    }

    /* pin the master thread to its CPU */
    eal_thread_init_master(rte_config.master_lcore);

    /*
     * Launch a dummy function on all slave lcores, so that master lcore
     * knows they are all ready when this function returns.
     */
    /* i.e. tell every slave lcore to start running its loop */
    rte_eal_mp_remote_launch(sync_func, NULL, SKIP_MASTER);
    rte_eal_mp_wait_lcore();

    return fctret;
}
```
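The per-lcore pipes and the `WAIT` state set up at the end of `rte_eal_init()` drive the launch protocol behind `rte_eal_remote_launch()` and `rte_eal_wait_lcore()`. The master sends a command down `pipe_master2slave` and waits for an acknowledgement on `pipe_slave2master`. The worker then runs the function and marks itself finished. The sketch below imitates this with plain pthreads. `struct worker`, `worker_start()`, `worker_launch()`, `worker_wait()`, `worker_stop()` and `square_job()` are hypothetical names. The EAL writes a single byte and keeps the function and argument in `lcore_config`. This version instead sends the function pointer and argument through the pipe, and keeps the state and return value in C11 atomics so the hand-off has no data race.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <unistd.h>

enum worker_state { W_WAIT, W_RUNNING, W_FINISHED };  /* mirrors WAIT/RUNNING/FINISHED */

typedef int (*job_fn)(void *arg);

struct cmd { job_fn fn; void *arg; };   /* what the master sends per launch */

struct worker {
    int m2s[2];          /* master -> worker: one struct cmd per launch */
    int s2m[2];          /* worker -> master: one ack byte per launch */
    atomic_int ret;      /* return value of the last job */
    atomic_int state;
    pthread_t tid;
};

/* Worker body, loosely modelled on eal_thread_loop(). */
static void *worker_loop(void *p)
{
    struct worker *w = p;
    struct cmd c;
    char ack = 0;

    for (;;) {
        /* block until the master sends a command; EOF means shut down */
        if (read(w->m2s[0], &c, sizeof c) != (ssize_t)sizeof c)
            return NULL;
        atomic_store(&w->state, W_RUNNING);
        /* acknowledge so that worker_launch() can return */
        if (write(w->s2m[1], &ack, 1) != 1)
            return NULL;
        atomic_store(&w->ret, c.fn(c.arg));
        atomic_store(&w->state, W_FINISHED);
    }
}

static void close_pair(int fd[2]) { close(fd[0]); close(fd[1]); }

int worker_start(struct worker *w)
{
    if (pipe(w->m2s) < 0)
        return -1;
    if (pipe(w->s2m) < 0) {
        close_pair(w->m2s);
        return -1;
    }
    atomic_init(&w->ret, 0);
    atomic_init(&w->state, W_WAIT);
    if (pthread_create(&w->tid, NULL, worker_loop, w) != 0) {
        close_pair(w->m2s);
        close_pair(w->s2m);
        return -1;
    }
    return 0;
}

/* Like rte_eal_remote_launch(): only an idle (W_WAIT) worker accepts a job. */
int worker_launch(struct worker *w, job_fn fn, void *arg)
{
    struct cmd c = { fn, arg };
    char ack;

    if (atomic_load(&w->state) != W_WAIT)
        return -1;
    if (write(w->m2s[1], &c, sizeof c) != (ssize_t)sizeof c)
        return -1;
    /* wait until the worker has picked the job up */
    return read(w->s2m[0], &ack, 1) == 1 ? 0 : -1;
}

/* Like rte_eal_wait_lcore(); call only after a successful worker_launch(). */
int worker_wait(struct worker *w)
{
    while (atomic_load(&w->state) != W_FINISHED)
        sched_yield();
    atomic_store(&w->state, W_WAIT);
    return atomic_load(&w->ret);
}

/* Close the command pipe so worker_loop() sees EOF, then reap the thread. */
void worker_stop(struct worker *w)
{
    close(w->m2s[1]);
    pthread_join(w->tid, NULL);
    close(w->m2s[0]);
    close_pair(w->s2m);
}

/* Example job: squares the int that arg points to. */
int square_job(void *arg)
{
    int v = *(int *)arg;
    return v * v;
}
```

As in the EAL, the thread is created once and then reused for successive launches. Each launch goes through the `WAIT -> RUNNING -> FINISHED -> WAIT` cycle.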

The rest of this post focuses on the memory initialization process.
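Memory initialization starts from the pool size that `rte_eal_init()` computes when no `-m` value is given. The comment there describes `eal_get_hugepage_mem_size()` as summing `hugepage_sz * num_pages`. The arithmetic is sketched below with a simplified, hypothetical `struct hp_info_sketch` in place of DPDK's `struct hugepage_info`; `SKETCH_MAX_NUMA_NODES` is a stand-in for `RTE_MAX_NUMA_NODES`. At this stage every page is still counted on socket 0, so the example puts all pages there.

```c
#include <stdint.h>

#define SKETCH_MAX_NUMA_NODES 8   /* hypothetical stand-in for RTE_MAX_NUMA_NODES */

/* Simplified stand-in for one entry of internal_config.hugepage_info[] */
struct hp_info_sketch {
    uint64_t hugepage_sz;                        /* bytes per page */
    unsigned num_pages[SKETCH_MAX_NUMA_NODES];   /* pages per socket */
};

/* Sum hugepage_sz * num_pages over every page size and every socket. */
uint64_t total_hugepage_mem(const struct hp_info_sketch *hpi, unsigned n_sizes)
{
    uint64_t total = 0;

    for (unsigned i = 0; i < n_sizes; i++)
        for (unsigned j = 0; j < SKETCH_MAX_NUMA_NODES; j++)
            total += hpi[i].hugepage_sz * hpi[i].num_pages[j];
    return total;
}
```

For example, 1024 pages of 2 MB plus 2 pages of 1 GB give 2 GB + 2 GB = 4 GB.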

A PRIMARY process calls `rte_eal_hugepage_init()`, while a SECONDARY process calls `rte_eal_hugepage_attach()`:

```c
/* init memory subsystem */
int
rte_eal_memory_init(void)
{
    RTE_LOG(INFO, EAL, "Setting up memory...\n");
    const int retval = rte_eal_process_type() == RTE_PROC_PRIMARY ?
            rte_eal_hugepage_init() :
            rte_eal_hugepage_attach();
    if (retval < 0)
        return -1;

    if (internal_config.no_shconf == 0 && rte_eal_memdevice_init() < 0)
        return -1;

    return 0;
}
```
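A primary and a secondary process can share these tables because they are backed by files. In `rte_eal_hugepage_init()`, the primary calls `create_shared_memory(eal_hugepage_info_path(), ...)`. Roughly speaking, the attach side later opens the same path again. The idea is sketched below with `open()`, `ftruncate()` and `mmap(MAP_SHARED)`. `create_shared()` and `attach_shared()` are hypothetical helpers, not DPDK functions. The test maps the file twice in one process to stand in for two processes.

```c
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* "Primary" side: create (or resize) the file and map it shared. */
void *create_shared(const char *path, size_t len)
{
    int fd = open(path, O_CREAT | O_RDWR, 0600);
    if (fd < 0)
        return NULL;
    if (ftruncate(fd, (off_t)len) < 0) {   /* new bytes read as zero */
        close(fd);
        return NULL;
    }
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);                              /* the mapping outlives the fd */
    return p == MAP_FAILED ? NULL : p;
}

/* "Secondary" side: find the same file by path and map it at its full size. */
void *attach_shared(const char *path, size_t *len_out)
{
    struct stat st;
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return NULL;
    if (fstat(fd, &st) < 0 || st.st_size <= 0) {
        close(fd);
        return NULL;
    }
    void *p = mmap(NULL, (size_t)st.st_size, PROT_READ | PROT_WRITE,
                   MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return NULL;
    *len_out = (size_t)st.st_size;
    return p;
}
```

Because both mappings use `MAP_SHARED` on the same file, a write through one is visible through the other. This is what lets a later process see the table the primary built.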

```c
/*
 * Prepare physical memory mapping: fill configuration structure with
 * these infos, return 0 on success.
 *  1. map N huge pages in separate files in hugetlbfs
 *  2. find associated physical addr
 *  3. find associated NUMA socket ID
 *  4. sort all huge pages by physical address
 *  5. remap these N huge pages in the correct order
 *  6. unmap the first mapping
 *  7. fill memsegs in configuration with contiguous zones
 */
static int
rte_eal_hugepage_init(void)
{
    struct rte_mem_config *mcfg;
    struct hugepage_file *hugepage, *tmp_hp = NULL;
    struct hugepage_info used_hp[MAX_HUGEPAGE_SIZES];

    uint64_t memory[RTE_MAX_NUMA_NODES];

    unsigned hp_offset;
    int i, j, new_memseg;
    int nr_hugefiles, nr_hugepages = 0;
    void *addr;
#ifdef RTE_EAL_SINGLE_FILE_SEGMENTS
    int new_pages_count[MAX_HUGEPAGE_SIZES];
#endif

    memset(used_hp, 0, sizeof(used_hp));

    /* get pointer to global configuration */
    mcfg = rte_eal_get_configuration()->mem_config;

    /* hugetlbfs can be disabled */
    if (internal_config.no_hugetlbfs) {
        /* without hugetlbfs, simply use heap memory */
        addr = malloc(internal_config.memory);
        mcfg->memseg[0].phys_addr = (phys_addr_t)(uintptr_t)addr;
        mcfg->memseg[0].addr = addr;
        mcfg->memseg[0].len = internal_config.memory;
        mcfg->memseg[0].socket_id = SOCKET_ID_ANY;
        return 0;
    }

    /* check if app runs on Xen Dom0 */
    if (internal_config.xen_dom0_support) {
#ifdef RTE_LIBRTE_XEN_DOM0
        /* use dom0_mm kernel driver to init memory */
        if (rte_xen_dom0_memory_init() < 0)
            return -1;
        else
            return 0;
#endif
    }

    /* calculate total number of hugepages available. at this point we haven't
     * yet started sorting them so they all are on socket 0 */
    for (i = 0; i < (int) internal_config.num_hugepage_sizes; i++) {
        /* meanwhile, also initialize used_hp hugepage sizes in used_hp */
        used_hp[i].hugepage_sz = internal_config.hugepage_info[i].hugepage_sz;

        nr_hugepages += internal_config.hugepage_info[i].num_pages[0];
    }

    /* tmp_hp is the table of hugepage control blocks */
    /*
     * allocate a memory area for hugepage table.
     * this isn't shared memory yet. due to the fact that we need some
     * processing done on these pages, shared memory will be created
     * at a later stage.
     */
    tmp_hp = malloc(nr_hugepages * sizeof(struct hugepage_file));
    if (tmp_hp == NULL)
        goto fail;

    memset(tmp_hp, 0, nr_hugepages * sizeof(struct hugepage_file));

    hp_offset = 0; /* where we start the current page size entries */

    /* map all hugepages and sort them */
    for (i = 0; i < (int)internal_config.num_hugepage_sizes; i ++){
        struct hugepage_info *hpi;

        /*
         * we don't yet mark hugepages as used at this stage, so
         * we just map all hugepages available to the system
         * all hugepages are still located on socket 0
         */
        hpi = &internal_config.hugepage_info[i];

        if (hpi->num_pages[0] == 0)
            continue;

        /* map all hugepages available (first mapping, not yet contiguous) */
        if (map_all_hugepages(&tmp_hp[hp_offset], hpi, 1) < 0){
            RTE_LOG(DEBUG, EAL, "Failed to mmap %u MB hugepages\n",
                    (unsigned)(hpi->hugepage_sz / 0x100000));
            goto fail;
        }

        /* find physical addresses and sockets for each hugepage */
        if (find_physaddrs(&tmp_hp[hp_offset], hpi) < 0){
            RTE_LOG(DEBUG, EAL, "Failed to find phys addr for %u MB pages\n",
                    (unsigned)(hpi->hugepage_sz / 0x100000));
            goto fail;
        }

        /* record the NUMA socket ID of each hugepage */
        if (find_numasocket(&tmp_hp[hp_offset], hpi) < 0){
            RTE_LOG(DEBUG, EAL, "Failed to find NUMA socket for %u MB pages\n",
                    (unsigned)(hpi->hugepage_sz / 0x100000));
            goto fail;
        }

        /* sort the control blocks by physical address, ascending */
        if (sort_by_physaddr(&tmp_hp[hp_offset], hpi) < 0)
            goto fail;

#ifdef RTE_EAL_SINGLE_FILE_SEGMENTS
        /* remap all hugepages into single file segments */
        new_pages_count[i] = remap_all_hugepages(&tmp_hp[hp_offset], hpi);
        if (new_pages_count[i] < 0){
            RTE_LOG(DEBUG, EAL, "Failed to remap %u MB pages\n",
                    (unsigned)(hpi->hugepage_sz / 0x100000));
            goto fail;
        }

        /* we have processed a num of hugepages of this size, so inc offset */
        hp_offset += new_pages_count[i];
#else
        /* remap all hugepages: physically contiguous pages get
         * correspondingly contiguous virtual addresses */
        if (map_all_hugepages(&tmp_hp[hp_offset], hpi, 0) < 0){
            RTE_LOG(DEBUG, EAL, "Failed to remap %u MB pages\n",
                    (unsigned)(hpi->hugepage_sz / 0x100000));
            goto fail;
        }

        /* unmap original (first, non-contiguous) mappings */
        if (unmap_all_hugepages_orig(&tmp_hp[hp_offset], hpi) < 0)
            goto fail;

        /* we have processed a num of hugepages of this size, so inc offset */
        hp_offset += hpi->num_pages[0];
#endif
    }

#ifdef RTE_EAL_SINGLE_FILE_SEGMENTS
    nr_hugefiles = 0;
    for (i = 0; i < (int) internal_config.num_hugepage_sizes; i++) {
        nr_hugefiles += new_pages_count[i];
    }
#else
    nr_hugefiles = nr_hugepages;
#endif

    /* clean out the numbers of pages (reset every per-socket count to zero) */
    for (i = 0; i < (int) internal_config.num_hugepage_sizes; i++)
        for (j = 0; j < RTE_MAX_NUMA_NODES; j++)
            internal_config.hugepage_info[i].num_pages[j] = 0;

    /* get hugepages for each socket (recount pages of each size per socket) */
    for (i = 0; i < nr_hugefiles; i++) {
        int socket = tmp_hp[i].socket_id;

        /* find a hugepage info with right size and increment num_pages */
        for (j = 0; j < (int) internal_config.num_hugepage_sizes; j++) {
            if (tmp_hp[i].size ==
                    internal_config.hugepage_info[j].hugepage_sz) {
#ifdef RTE_EAL_SINGLE_FILE_SEGMENTS
                internal_config.hugepage_info[j].num_pages[socket] +=
                    tmp_hp[i].repeated;
#else
                internal_config.hugepage_info[j].num_pages[socket]++;
#endif
            }
        }
    }

    /* make a copy of socket_mem, needed for number of pages calculation */
    for (i = 0; i < RTE_MAX_NUMA_NODES; i++)
        memory[i] = internal_config.socket_mem[i];

    /* calculate final number of pages: writes the pages needed on each
     * socket into used_hp and returns the total number of hugepages */
    nr_hugepages = calc_num_pages_per_socket(memory,
            internal_config.hugepage_info, used_hp,
            internal_config.num_hugepage_sizes);

    /* error if not enough memory available */
    if (nr_hugepages < 0)
        goto fail;

    /* reporting in! */
    for (i = 0; i < (int) internal_config.num_hugepage_sizes; i++) {
        for (j = 0; j < RTE_MAX_NUMA_NODES; j++) {
            if (used_hp[i].num_pages[j] > 0) {
                RTE_LOG(INFO, EAL,
                        "Requesting %u pages of size %uMB"
                        " from socket %i\n",
                        used_hp[i].num_pages[j],
                        (unsigned)
                            (used_hp[i].hugepage_sz / 0x100000),
                        j);
            }
        }
    }

    /* create shared memory: a hugepage control-block table backed by a
     * file, so that other processes can locate it */
    hugepage = create_shared_memory(eal_hugepage_info_path(),
            nr_hugefiles * sizeof(struct hugepage_file));

    if (hugepage == NULL) {
        RTE_LOG(ERR, EAL, "Failed to create shared memory!\n");
        goto fail;
    }
    memset(hugepage, 0, nr_hugefiles * sizeof(struct hugepage_file));

    /*
     * unmap pages that we won't need (looks at used_hp).
     * also, sets final_va to NULL on pages that were unmapped.
     *
     * Per socket, only used_hp[i].num_pages[socket] pages stay mapped and
     * the rest are unmapped. This happens, for example, when -m or
     * --socket-mem requests less memory than the available hugepages.
     */
    if (unmap_unneeded_hugepages(tmp_hp, used_hp,
            internal_config.num_hugepage_sizes) < 0) {
        RTE_LOG(ERR, EAL, "Unmapping and locking hugepages failed!\n");
        goto fail;
    }

    /*
     * copy stuff from malloc'd hugepage* to the actual shared memory.
     * this procedure only copies those hugepages that have final_va
     * not NULL. has overflow protection.
     */
    if (copy_hugepages_to_shared_mem(hugepage, nr_hugefiles,
            tmp_hp, nr_hugefiles) < 0) {
        RTE_LOG(ERR, EAL, "Copying tables to shared memory failed!\n");
        goto fail;
    }

    /* free the temporary hugepage table */
    free(tmp_hp);
    tmp_hp = NULL;

    /* find earliest free memseg - this is needed because in case of IVSHMEM,
     * segments might have already been initialized */
    for (j = 0; j < RTE_MAX_MEMSEG; j++)
        if (mcfg->memseg[j].addr == NULL) {
            /* move to previous segment and exit loop */
            j--;
            break;
        }

    for (i = 0; i < nr_hugefiles; i++) {
        new_memseg = 0;

        /* if this is a new section, create a new memseg */
        if (i == 0)
            new_memseg = 1;
        else if (hugepage[i].socket_id != hugepage[i-1].socket_id)
            new_memseg = 1;
        else if (hugepage[i].size != hugepage[i-1].size)
            new_memseg = 1;
        else if ((hugepage[i].physaddr - hugepage[i-1].physaddr) !=
            hugepage[i].size)
            new_memseg = 1;
        else if (((unsigned long)hugepage[i].final_va -
            (unsigned long)hugepage[i-1].final_va) != hugepage[i].size)
            new_memseg = 1;

        /* a run of pages contiguous in both physical and virtual address
         * space forms one segment */
        if (new_memseg) {
            j += 1;
            if (j == RTE_MAX_MEMSEG)
                break;

            mcfg->memseg[j].phys_addr = hugepage[i].physaddr;
            mcfg->memseg[j].addr = hugepage[i].final_va;
#ifdef RTE_EAL_SINGLE_FILE_SEGMENTS
            mcfg->memseg[j].len = hugepage[i].size * hugepage[i].repeated;
#else
            mcfg->memseg[j].len = hugepage[i].size;
#endif
            mcfg->memseg[j].socket_id = hugepage[i].socket_id;
            mcfg->memseg[j].hugepage_sz = hugepage[i].size;
        }
        /* continuation of previous memseg */
        else {
            mcfg->memseg[j].len += mcfg->memseg[j].hugepage_sz;
        }
        hugepage[i].memseg_id = j;
    }

    if (i < nr_hugefiles) {
        RTE_LOG(ERR, EAL, "Can only reserve %d pages "
            "from %d requested\n"
            "Current %s=%d is not enough\n"
            "Please either increase it or request less amount "
            "of memory.\n",
            i, nr_hugefiles, RTE_STR(CONFIG_RTE_MAX_MEMSEG),
            RTE_MAX_MEMSEG);
        return (-ENOMEM);
    }

    return 0;

fail:
    if (tmp_hp)
        free(tmp_hp);
    return -1;
}
```
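The last loop of `rte_eal_hugepage_init()` turns the sorted hugepage table into memsegs. A new segment starts whenever the socket or the page size changes, or physical or virtual contiguity breaks. The sketch below reproduces that rule on simplified, hypothetical structs (`struct page`, `struct seg`, `build_segments()`). It also performs the `sort_by_physaddr()` step with `qsort()`. Unlike the EAL, it sorts all page sizes together and takes the virtual addresses as given instead of remapping them.

```c
#include <stdint.h>
#include <stdlib.h>

/* Simplified, hypothetical stand-ins for struct hugepage_file / rte_memseg */
struct page {
    uint64_t physaddr;
    uintptr_t va;        /* plays the role of final_va */
    uint64_t size;
    int socket_id;
};

struct seg {
    uint64_t phys_addr;
    uintptr_t addr;
    uint64_t len;
    int socket_id;
};

static int cmp_phys(const void *a, const void *b)
{
    const struct page *pa = a, *pb = b;
    return (pa->physaddr > pb->physaddr) - (pa->physaddr < pb->physaddr);
}

/* Sort pages by physical address, then merge runs into segments.
 * Returns the number of segments, or -1 if max_segs is too small. */
int build_segments(struct page *pages, int n, struct seg *segs, int max_segs)
{
    int j = -1;

    qsort(pages, (size_t)n, sizeof(pages[0]), cmp_phys);
    for (int i = 0; i < n; i++) {
        int new_seg = (i == 0) ||
            pages[i].socket_id != pages[i - 1].socket_id ||
            pages[i].size != pages[i - 1].size ||
            pages[i].physaddr - pages[i - 1].physaddr != pages[i].size ||
            pages[i].va - pages[i - 1].va != pages[i].size;

        if (new_seg) {
            if (++j == max_segs)     /* like hitting RTE_MAX_MEMSEG */
                return -1;
            segs[j] = (struct seg){ pages[i].physaddr, pages[i].va,
                                    pages[i].size, pages[i].socket_id };
        } else {
            segs[j].len += pages[i].size;   /* continuation of previous seg */
        }
    }
    return j + 1;
}
```

For example, three 2 MB pages at physical 0x200000, 0x400000 and 0x600000 with matching contiguous virtual addresses collapse into one 6 MB segment. A fourth page on another socket opens a second segment.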