尝试使用Keras构建我们的第一个RNN实例,在《爱丽丝梦游仙境》的文本上训练一个基于字符的语言模型,这个模型将通过给定的前10个字符预测下一个字符。我们选择一个基于字符的模型,是因为它的字典较小,并可以训练的更快,这和基于词的语言模型的想法是一样的。

1. 文本预处理

首先我们先获取《爱丽丝梦游仙境的》输入文本

下载地址

导入必要的库,读入文件并作基本的处理

1 from keras.layers.recurrent import SimpleRNN 2 from keras.models import Sequential 3 from keras.layers import Dense, Activation 4 import numpy as np 5 6 INPUT_FILE = "./alice_in_wonderland.txt" 7 8 # extract the input as a stream of characters 9 print("Extracting text from input...") 10 fin = open(INPUT_FILE, 'rb') 11 lines = [] 12 for line in fin: 13 line = line.strip().lower() 14 line = line.decode("ascii", "ignore") 15 if len(line) == 0: 16 continue 17 lines.append(line) 18 fin.close() 19 text = " ".join(lines)

因为我们在构建一个字符级水平的RNN,我们将字典设置为文本中出现的所有字符。因为我们将要处理的是这些字符的索引而非字符本身,于是我们要创建必要的查询表:

chars = set([c for c in text])

nb_chars = len(chars)

char2index = dict((c, i) for i, c in enumerate(chars))

index2char = dict((i, c) for i, c in enumerate(chars))

2. 创建输入和标签文本

我们通过STEP变量给出字符数目(本例为1)来步进便利文本,并提取出一段大小为SEQLEN变量定义值(本例为10)的文本段。文本段的下一字符是我们的标签字符。

1 # 例如:输入"The sky was falling",输出如下(前5个): 2 # The sky wa -> s 3 # he sky was -> 4 # e sky was -> f 5 # sky was f -> a 6 # sky was fa -> l 7 print("Creating input and label text...") 8 SEQLEN = 10 9 STEP = 1 10 11 input_chars = [] 12 label_chars = [] 13 for i in range(0, len(text) - SEQLEN, STEP): 14 input_chars.append(text[i:i + SEQLEN]) 15 label_chars.append(text[i + SEQLEN])

3. 输入和标签文本向量化

RNN输入中的每行都对应了前面展示的一个输入文本。输入中共有SEQLEN个字符,因为我们的字典大小是nb_chars给定的,我们把每个输入字符表示成one-hot编码的大小为(nb_chars)的向量。这样每行输入就是一个大小为(SEQLEN, nb_chars)的张量。我们的输出标签是一个单个的字符,所以和输入中的每个字符的表示类似。我们将输出标签表示成大小为(nb_chars)的one-hot编码的向量。因此,每个标签的形状就是nb_chars。

print("Vectorizing input and label text...") X = np.zeros((len(input_chars), SEQLEN, nb_chars), dtype=np.bool) y = np.zeros((len(input_chars), nb_chars), dtype=np.bool) for i, input_char in enumerate(input_chars): for j, ch in enumerate(input_char): X[i, j, char2index[ch]] = 1 y[i, char2index[label_chars[i]]] = 1

4. 构建模型

设定超参数BATCH_SIZE = 128

我们想返回一个字符作为输出,而非字符序列,因而设置return_sequences=False

输入形状为(SEQLEN, nb_chars)

为了改善TensorFlow后端性能,设置unroll=True

HIDDEN_SIZE = 128 BATCH_SIZE = 128 NUM_ITERATIONS = 25 NUM_EPOCHS_PER_ITERATION = 1 NUM_PREDS_PER_EPOCH = 100 model = Sequential() model.add(SimpleRNN(HIDDEN_SIZE, return_sequences=False, input_shape=(SEQLEN, nb_chars), unroll=True)) model.add(Dense(nb_chars)) model.add(Activation("softmax")) model.compile(loss="categorical_crossentropy", optimizer="rmsprop")

5. 模型的训练和测试

我们分批训练模型,每一步都测试输出

测试模型:我们先从输入里随机选一个,然后用它去预测接下来的100个字符

for iteration in range(NUM_ITERATIONS): print("=" * 50) print("Iteration #: %d" % (iteration)) model.fit(X, y, batch_size=BATCH_SIZE, epochs=NUM_EPOCHS_PER_ITERATION, verbose=0) test_idx = np.random.randint(len(input_chars)) test_chars = input_chars[test_idx] print("Generating from seed: %s" % (test_chars)) print(test_chars, end="") for i in range(NUM_PREDS_PER_EPOCH): Xtest = np.zeros((1, SEQLEN, nb_chars)) for i, ch in enumerate(test_chars): Xtest[0, i, char2index[ch]] = 1 pred = model.predict(Xtest, verbose=0)[0] ypred = index2char[np.argmax(pred)] print(ypred, end="") # move forward with test_chars + ypred test_chars = test_chars[1:] + ypred print()

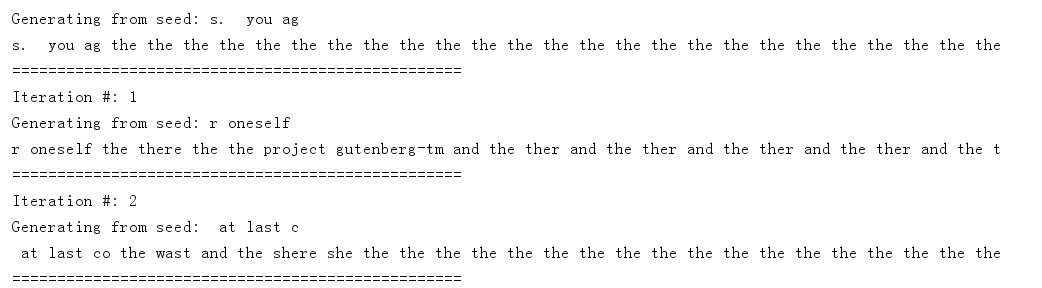

6. 输出测试结果

模型刚开始的预测毫无意义,当随着训练轮数的增加,它已经可以进行正确的拼写,尽管在表达语义上还有困难。毕竟我们这个模型是基于字符的,它对词没有任何认识,然而它的表现也足够令人惊艳了。

………………………………………………………………

完整代码如下:

from keras.layers.recurrent import SimpleRNN from keras.models import Sequential from keras.layers import Dense, Activation import numpy as np INPUT_FILE = "./alice_in_wonderland.txt" # extract the input as a stream of characters print("Extracting text from input...") fin = open(INPUT_FILE, 'rb') lines = [] for line in fin: line = line.strip().lower() line = line.decode("ascii", "ignore") if len(line) == 0: continue lines.append(line) fin.close() text = " ".join(lines) chars = set([c for c in text]) nb_chars = len(chars) char2index = dict((c, i) for i, c in enumerate(chars)) index2char = dict((i, c) for i, c in enumerate(chars)) # 例如:输入"The sky was falling",输出如下(前5个): # The sky wa -> s # he sky was -> # e sky was -> f # sky was f -> a # sky was fa -> l print("Creating input and label text...") SEQLEN = 10 STEP = 1 input_chars = [] label_chars = [] for i in range(0, len(text) - SEQLEN, STEP): input_chars.append(text[i:i + SEQLEN]) label_chars.append(text[i + SEQLEN]) print("Vectorizing input and label text...") X = np.zeros((len(input_chars), SEQLEN, nb_chars), dtype=np.bool) y = np.zeros((len(input_chars), nb_chars), dtype=np.bool) for i, input_char in enumerate(input_chars): for j, ch in enumerate(input_char): X[i, j, char2index[ch]] = 1 y[i, char2index[label_chars[i]]] = 1 HIDDEN_SIZE = 128 BATCH_SIZE = 128 NUM_ITERATIONS = 25 NUM_EPOCHS_PER_ITERATION = 1 NUM_PREDS_PER_EPOCH = 100 model = Sequential() model.add(SimpleRNN(HIDDEN_SIZE, return_sequences=False, input_shape=(SEQLEN, nb_chars), unroll=True)) model.add(Dense(nb_chars)) model.add(Activation("softmax")) model.compile(loss="categorical_crossentropy", optimizer="rmsprop") for iteration in range(NUM_ITERATIONS): print("=" * 50) print("Iteration #: %d" % (iteration)) model.fit(X, y, batch_size=BATCH_SIZE, epochs=NUM_EPOCHS_PER_ITERATION, verbose=0) test_idx = np.random.randint(len(input_chars)) test_chars = input_chars[test_idx] print("Generating from seed: %s" % (test_chars)) print(test_chars, end="") for i in range(NUM_PREDS_PER_EPOCH): Xtest = np.zeros((1, SEQLEN, nb_chars)) for i, ch in enumerate(test_chars): Xtest[0, i, char2index[ch]] = 1 pred = model.predict(Xtest, verbose=0)[0] ypred = index2char[np.argmax(pred)] print(ypred, end="") # move forward with test_chars + ypred test_chars = test_chars[1:] + ypred print()