Service concepts

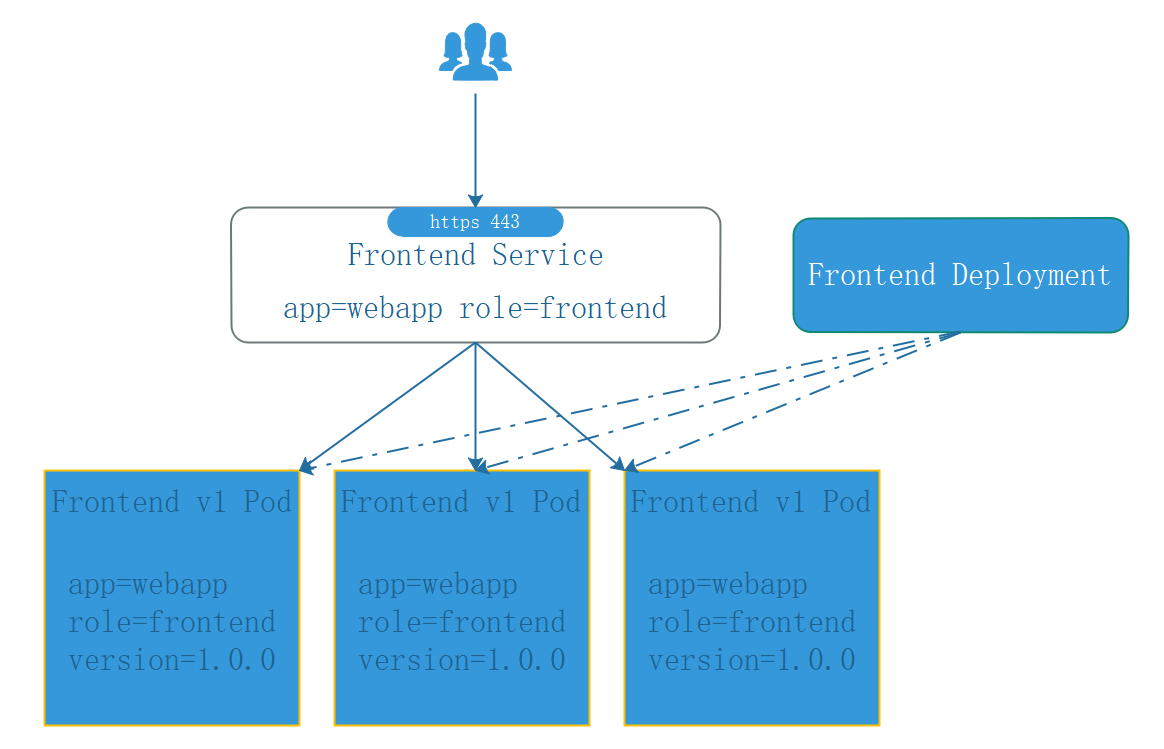

A Kubernetes Service defines a logical group of Pods and a policy for accessing them. The set of Pods a Service targets is usually determined by a label selector.
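Label selection is a plain subset test. A Pod belongs to the Service when every key/value pair in spec.selector also appears among the Pod's labels. The following is a minimal sketch that models only equality-based selectors, and the helper names are hypothetical. A Service without a selector gets no automatically managed endpoints, so `select_pods` returns nothing for an empty selector.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of the Pods whose labels satisfy the selector."""
    if not selector:  # a Service without a selector selects no Pods automatically
        return []
    return [name for name, labels in pods.items() if selector_matches(selector, labels)]


pods = {
    "myapp-deploy-677ccc888c-m42jt": {"app": "myapp", "release": "stabel", "env": "test"},
    "other-pod": {"app": "other"},
}
print(select_pods({"app": "myapp", "release": "stabel"}, pods))
# ['myapp-deploy-677ccc888c-m42jt']
```

Extra labels on the Pod (such as env: test) do not prevent a match. A single differing value in the selector does.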

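A Service provides load balancing, but only at Layer 4. It has no Layer 7 capabilities. Sometimes you need richer matching rules to route requests (for example by HTTP host or path), and a Layer 4 load balancer cannot provide that.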

Service types

Kubernetes has four Service types:

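- ClusterIP: the default type. It automatically assigns a virtual IP that is reachable only from inside the cluster.
- NodePort: builds on ClusterIP and also binds a port for the Service on every node, so the Service can be reached through that NodePort.
- LoadBalancer: builds on NodePort and uses the cloud provider to create an external load balancer, which forwards requests to the NodePort.
- ExternalName: brings a service that lives outside the cluster into the cluster, so it can be used directly from inside. No proxy of any kind is created. This is supported only by kube-dns in Kubernetes 1.7 or later.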

VIPs and Service proxies

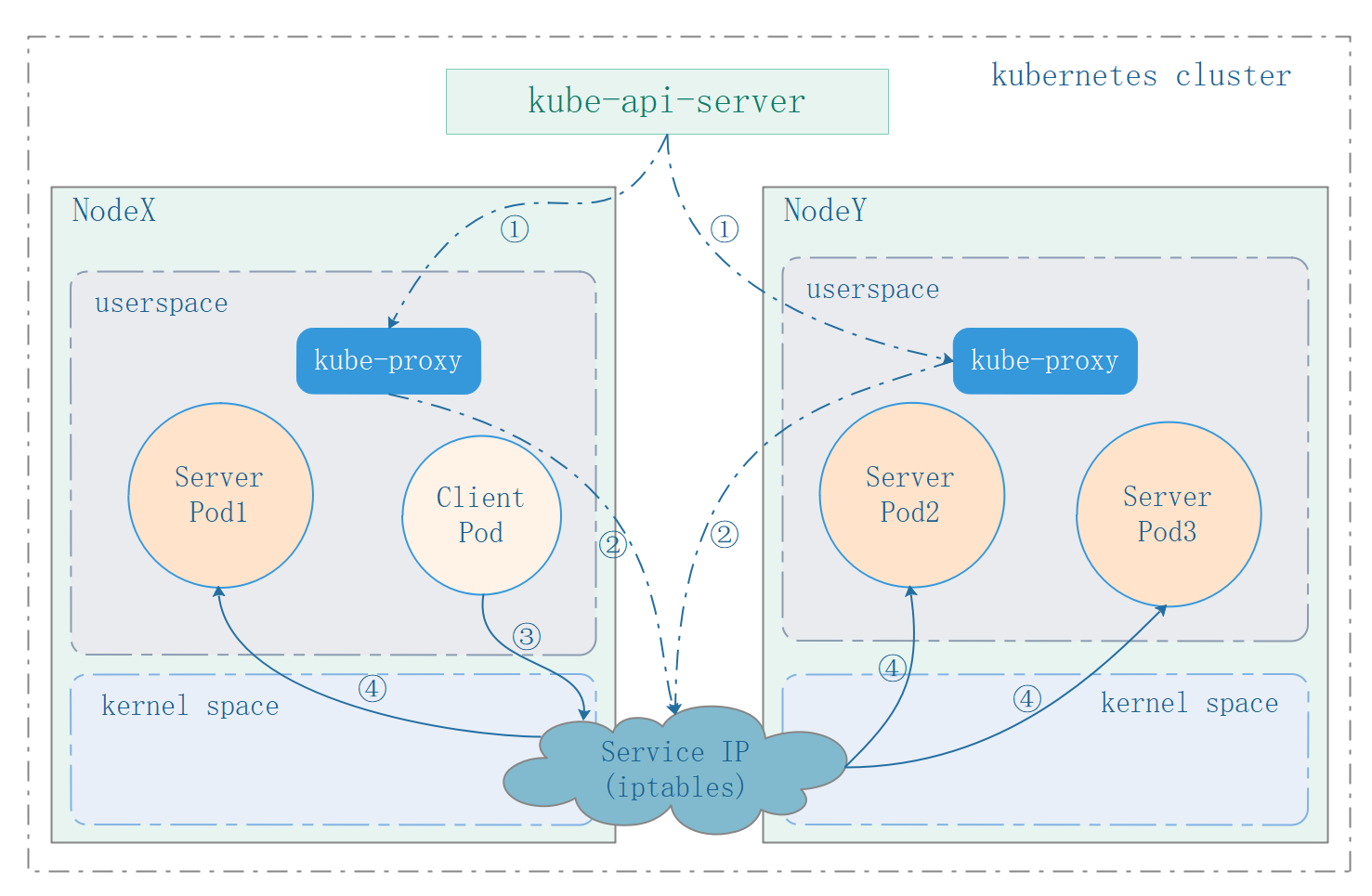

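In a Kubernetes cluster, every Node runs a kube-proxy process. kube-proxy implements a form of virtual IP (VIP) for Services of every type except ExternalName.

The proxy modes evolved across releases:

- In Kubernetes v1.0 the proxy ran entirely in userspace.
- Kubernetes v1.1 added the iptables proxy, but it was not yet the default mode.
- Since v1.2, iptables has been the default.
- The ipvs proxy was added in Kubernetes v1.8.0-beta.0 and became generally available in v1.11. It must be enabled explicitly (for example with kube-proxy's --proxy-mode=ipvs). iptables remains the default mode on Linux.

In Kubernetes v1.0, a Service was a "Layer 4" (TCP/UDP over IP) construct. Kubernetes v1.1 added the Ingress API (beta) to represent "Layer 7" (HTTP) services.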

Proxy modes

userspace proxy mode

iptables proxy mode


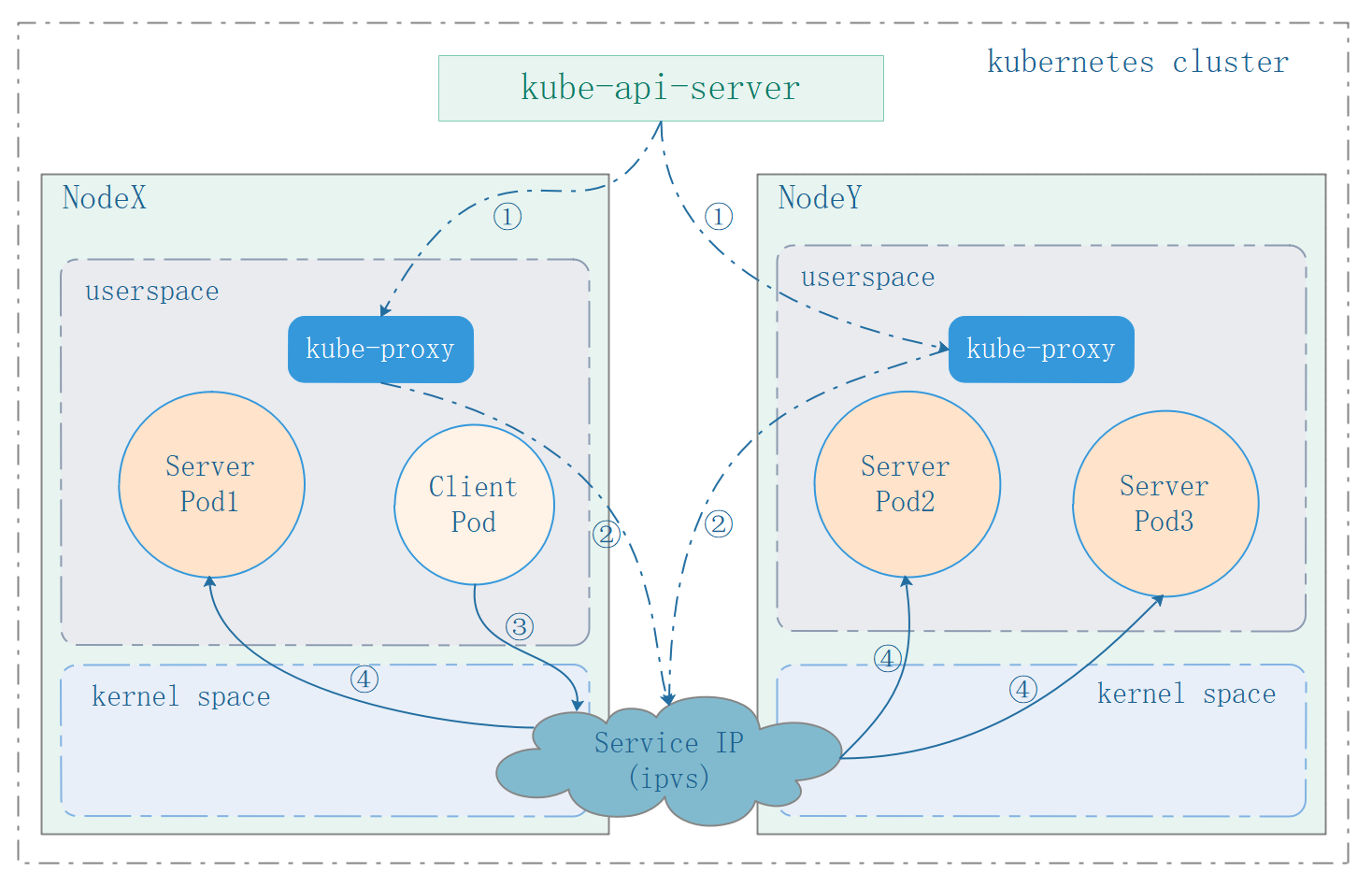

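ipvs proxy mode

In ipvs mode, kube-proxy watches Kubernetes Service and Endpoints objects. It calls the netlink interface to create the corresponding ipvs rules, and it periodically re-syncs those rules with the Service and Endpoints objects so that the ipvs state matches the desired state. When a Service is accessed, traffic is redirected to one of the backend Pods.

Like iptables, ipvs is built on netfilter hook functions. Unlike iptables, it uses a hash table as its underlying data structure and works in kernel space. As a result, ipvs can redirect traffic faster and performs better when syncing proxy rules. ipvs also offers more load-balancing algorithms, for example:

- rr: round robin
- lc: least connection
- dh: destination hashing
- sh: source hashing
- sed: shortest expected delay
- nq: never queue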
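To make the differences between these schedulers concrete, here is a rough Python model of how each one would choose among the three Pod endpoints in this example. It is an illustrative sketch, not ipvs code. `lc` is simplified to count active connections only. `sh` uses `zlib.crc32` as a stand-in for the kernel's own hash. `dh` is left out. The `RealServer` class and function names are hypothetical.

```python
import itertools
import zlib
from dataclasses import dataclass


@dataclass
class RealServer:
    addr: str        # backend "ip:port", i.e. a Pod endpoint
    weight: int = 1  # ipvs weight (every backend has weight 1 in this example's ipvsadm output)
    active: int = 0  # active connections currently assigned to this backend


def round_robin(servers):
    """rr: return an iterator that hands out backends in a fixed cycle."""
    return itertools.cycle(servers)


def least_connection(servers):
    """lc (simplified): the backend with the fewest active connections wins."""
    return min(servers, key=lambda s: s.active)


def source_hash(servers, client_ip):
    """sh: a given client IP keeps landing on the same backend while the set is unchanged."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]


def shortest_expected_delay(servers):
    """sed: minimise (active + 1) / weight, so heavier backends absorb more load."""
    return min(servers, key=lambda s: (s.active + 1) / s.weight)


def never_queue(servers):
    """nq: send to an idle backend if one exists, otherwise behave like sed."""
    idle = [s for s in servers if s.active == 0]
    return idle[0] if idle else shortest_expected_delay(servers)


pods = [RealServer("10.244.1.125:80"), RealServer("10.244.1.126:80"), RealServer("10.244.2.111:80")]
rr = round_robin(pods)
print([next(rr).addr for _ in range(4)])
# ['10.244.1.125:80', '10.244.1.126:80', '10.244.2.111:80', '10.244.1.125:80']
```

The weighted schedulers only diverge once weights differ. A weight-4 backend with 2 connections scores 0.75 under sed and beats an idle weight-1 backend (1.0), whereas nq would still pick the idle one.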

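Note: ipvs mode assumes that the IPVS kernel modules are installed on the node before kube-proxy starts. When kube-proxy starts in ipvs proxy mode, it checks whether the IPVS modules are available on the node. If they are not, kube-proxy falls back to iptables proxy mode.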
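The fallback described in the note can be pictured as a small decision function. This is a sketch under assumptions:

- The module names in `REQUIRED_MODULES` are illustrative; the exact set kube-proxy checks depends on its version and the kernel.
- The helper names are hypothetical.
- The function only parses the text of /proc/modules, where each line starts with a module name.

```python
# Assumption: an illustrative module list, not kube-proxy's exact check.
REQUIRED_MODULES = ("ip_vs", "ip_vs_rr", "ip_vs_wrr", "ip_vs_sh")


def loaded_modules(proc_modules_text: str) -> set[str]:
    """Each non-empty line of /proc/modules begins with the module name."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}


def choose_proxy_mode(requested: str, proc_modules_text: str) -> str:
    """Honour the requested mode, but fall back to iptables if ipvs modules are missing."""
    if requested != "ipvs":
        return requested
    loaded = loaded_modules(proc_modules_text)
    missing = [m for m in REQUIRED_MODULES if m not in loaded]
    return "iptables" if missing else "ipvs"
```

On a node you could pass it the real file contents, e.g. `choose_proxy_mode("ipvs", open("/proc/modules").read())`. `lsmod` shows the same information in tabular form.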

Service spec.type

ClusterIP

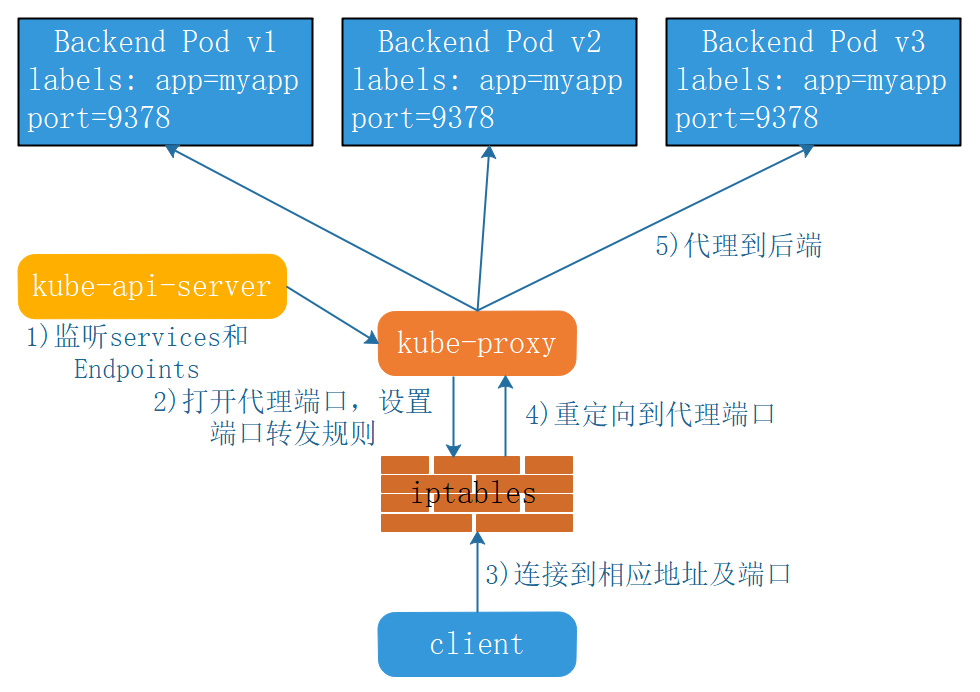

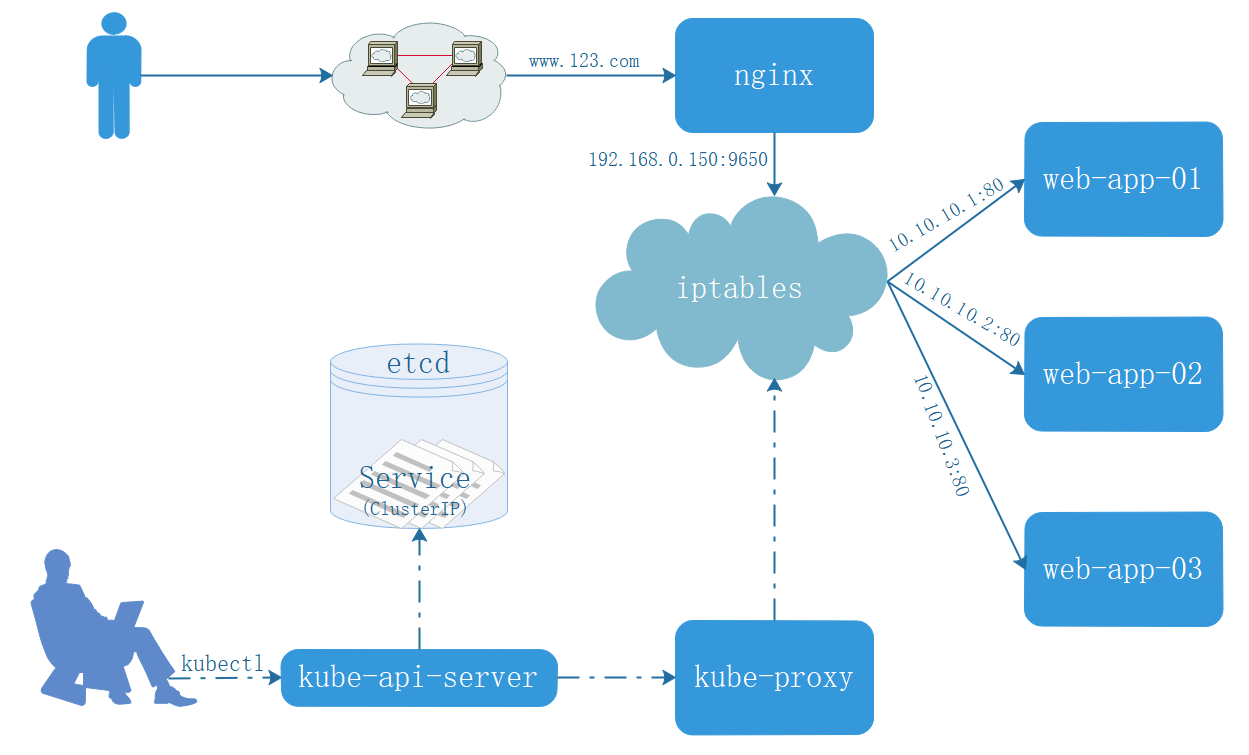

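ClusterIP relies mainly on iptables on each node. Traffic sent to the clusterIP and its port is forwarded to kube-proxy. kube-proxy then load-balances internally: it looks up the addresses and ports of the Pods behind the Service and forwards the traffic to one of them. (This describes the original userspace mode. In iptables and ipvs modes, kube-proxy only programs the kernel rules, and the kernel forwards the traffic to the Pods directly.)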

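- The user sends a create-Service command to the apiserver with kubectl. The apiserver stores the object in etcd.
- Every Kubernetes node runs a kube-proxy process. It watches for changes to Services and Pods and writes those changes into the node's local iptables rules.
- iptables uses NAT and related techniques to redirect traffic sent to the virtual IP to the endpoints.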
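The watch-and-write step works as a reconciliation loop. kube-proxy computes what the rules should be from the API objects, compares them with what is on the node, and applies only the difference. The sketch below models that loop with plain dictionaries. The data shapes, function names and operation labels are hypothetical. Real kube-proxy code works against iptables or ipvs (via netlink) and is considerably more involved.

```python
# Hypothetical data shapes: a virtual server is keyed by (cluster_ip, port, protocol)
# and maps to the set of "pod_ip:target_port" backends behind it.


def desired_table(services, endpoints):
    """Build the table the node's rules should match, from Service and Endpoints data."""
    table = {}
    for svc in services:
        key = (svc["clusterIP"], svc["port"], svc.get("protocol", "TCP"))
        table[key] = {f"{ip}:{svc['targetPort']}" for ip in endpoints.get(svc["name"], [])}
    return table


def diff_tables(current, desired):
    """List the operations that turn the current table into the desired one."""
    ops = []
    for key in sorted(current.keys() - desired.keys()):
        ops.append(("delete-service", key, None))
    for key, wanted in desired.items():
        have = current.get(key)
        if have is None:
            ops.append(("add-service", key, None))
            have = set()
        for backend in sorted(wanted - have):
            ops.append(("add-backend", key, backend))
        for backend in sorted(have - wanted):
            ops.append(("delete-backend", key, backend))
    return ops


services = [{"name": "myapp-service", "clusterIP": "10.104.50.207", "port": 80, "targetPort": 80}]
endpoints = {"myapp-service": ["10.244.1.125", "10.244.1.126", "10.244.2.111"]}
current = {("10.104.50.207", 80, "TCP"): set()}  # virtual server exists, no backends yet
for op in diff_tables(current, desired_table(services, endpoints)):
    print(op)
```

Diffing rather than rewriting everything is what keeps each periodic sync cheap. A second pass with no API changes produces no operations.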

Create myapp-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
  labels:
    app: myapp-deploy
spec:
  replicas: 3
  template:
    metadata:
      name: myapp-deploy
      labels:
        app: myapp-deploy
        release: stabel
        env: test
    spec:
      containers:
        - name: myapp-deploy
          image: chinda.com/library/myapp:v1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: http
      restartPolicy: Always
  selector:
    matchLabels:
      app: myapp-deploy
      release: stabel
```

Create myapp-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  selector:
    app: myapp-service
    release: stabel
  ports:
    - port: 80
      targetPort: 80
      name: http
  type: ClusterIP
```

Test

```
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
myapp-service ClusterIP 10.107.157.192 <none> 80/TCP 9s
[root@k8s-master01 ~]# curl 10.107.157.192
curl: (7) Failed connect to 10.107.157.192:80; Connection refused
[root@k8s-master01 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.96.0.1:443 rr
  -> 192.168.0.150:6443 Masq 1 3 0
TCP 10.96.0.10:53 rr
  -> 10.244.0.43:53 Masq 1 0 0
  -> 10.244.0.44:53 Masq 1 0 0
TCP 10.96.0.10:9153 rr
  -> 10.244.0.43:9153 Masq 1 0 0
  -> 10.244.0.44:9153 Masq 1 0 0
TCP 10.107.157.192:80 rr
UDP 10.96.0.10:53 rr
  -> 10.244.0.43:53 Masq 1 0 0
  -> 10.244.0.44:53 Masq 1 0 0
[root@k8s-master01 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
myapp-deploy-6f87986465-2pcgp 1/1 Running 0 14m 10.244.1.123 k8s-node01 <none> <none>
myapp-deploy-6f87986465-7rhrq 1/1 Running 0 14m 10.244.1.124 k8s-node01 <none> <none>
myapp-deploy-6f87986465-scz2v 1/1 Running 0 14m 10.244.2.110 k8s-node02 <none> <none>
```

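The connection is refused, and ipvsadm shows that the virtual server 10.107.157.192:80 has no Pods behind it. The cause is that the Service's spec.selector (app: myapp-service) does not match the labels the Deployment puts on its Pods (app: myapp-deploy).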

After the fix

Fixed myapp-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
  labels:
    app: myapp-deploy
spec:
  replicas: 3
  template:
    metadata:
      name: myapp
      labels:
        app: myapp
        release: stabel
        env: test
    spec:
      containers:
        - name: myapp-deploy
          image: chinda.com/library/myapp:v1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: http
      restartPolicy: Always
  selector:
    matchLabels:
      app: myapp
      release: stabel
```

Fixed myapp-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  selector:
    app: myapp
    release: stabel
  ports:
    - port: 80
      targetPort: 80
      name: http
  type: ClusterIP
```

Verify

```
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
myapp-service ClusterIP 10.104.50.207 <none> 80/TCP 10s
[root@k8s-master01 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.96.0.1:443 rr
  -> 192.168.0.150:6443 Masq 1 3 0
TCP 10.96.0.10:53 rr
  -> 10.244.0.43:53 Masq 1 0 0
  -> 10.244.0.44:53 Masq 1 0 0
TCP 10.96.0.10:9153 rr
  -> 10.244.0.43:9153 Masq 1 0 0
  -> 10.244.0.44:9153 Masq 1 0 0
TCP 10.104.50.207:80 rr
  -> 10.244.1.125:80 Masq 1 0 0
  -> 10.244.1.126:80 Masq 1 0 0
  -> 10.244.2.111:80 Masq 1 0 0
UDP 10.96.0.10:53 rr
  -> 10.244.0.43:53 Masq 1 0 0
  -> 10.244.0.44:53 Masq 1 0 0
[root@k8s-master01 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
myapp-deploy-677ccc888c-m42jt 1/1 Running 0 119s 10.244.1.125 k8s-node01 <none> <none>
myapp-deploy-677ccc888c-rgsn4 1/1 Running 0 119s 10.244.2.111 k8s-node02 <none> <none>
myapp-deploy-677ccc888c-w6hzg 1/1 Running 0 119s 10.244.1.126 k8s-node01 <none> <none>
[root@k8s-master01 ~]# curl 10.104.50.207
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
```

Headless Service

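Sometimes you don't need or want load balancing or a single Service IP. In that case you can create a headless Service by setting the cluster IP (spec.clusterIP) to "None". Such Services are not assigned a cluster IP, and kube-proxy does not handle them. The platform does no load balancing or routing for them.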

Create myapp-headless-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-headless
spec:
  selector:
    app: myapp
  ports:
    - port: 80
      targetPort: 80
  clusterIP: None
```

Resolving through CoreDNS

```
# once the Service has been created, it can be resolved through CoreDNS
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
myapp-headless ClusterIP None <none> 80/TCP 6s
myapp-service ClusterIP 10.104.50.207 <none> 80/TCP 16m
[root@k8s-master01 ~]# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-5c98db65d4-dhrw6 1/1 Running 21 13d 10.244.0.44 k8s-master01 <none> <none>
coredns-5c98db65d4-jhvv2 1/1 Running 21 13d 10.244.0.43 k8s-master01 <none> <none>
etcd-k8s-master01 1/1 Running 22 13d 192.168.0.150 k8s-master01 <none> <none>
kube-apiserver-k8s-master01 1/1 Running 22 13d 192.168.0.150 k8s-master01 <none> <none>
kube-controller-manager-k8s-master01 1/1 Running 22 13d 192.168.0.150 k8s-master01 <none> <none>
kube-flannel-ds-amd64-bm5px 1/1 Running 28 13d 192.168.0.150 k8s-master01 <none> <none>
kube-flannel-ds-amd64-cfhhg 1/1 Running 23 13d 192.168.0.152 k8s-node02 <none> <none>
kube-flannel-ds-amd64-mlqgl 1/1 Running 20 13d 192.168.0.151 k8s-node01 <none> <none>
kube-proxy-4f8dk 1/1 Running 19 13d 192.168.0.152 k8s-node02 <none> <none>
kube-proxy-lg875 1/1 Running 19 13d 192.168.0.151 k8s-node01 <none> <none>
kube-proxy-zwpmh 1/1 Running 22 13d 192.168.0.150 k8s-master01 <none> <none>
kube-scheduler-k8s-master01 1/1 Running 22 13d 192.168.0.150 k8s-master01 <none> <none>
# install the dig command
[root@k8s-master01 ~]# yum -y install bind-utils
# query the Service as {service-name}.{namespace}.svc.cluster.local
[root@k8s-master01 ~]# dig -t A myapp-headless.default.svc.cluster.local. @10.244.0.44

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-16.P2.el7_8.2 <<>> -t A myapp-headless.default.svc.cluster.local. @10.244.0.44
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 6438
;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;myapp-headless.default.svc.cluster.local. IN A

;; ANSWER SECTION:
myapp-headless.default.svc.cluster.local. 30 IN A 10.244.1.125
myapp-headless.default.svc.cluster.local. 30 IN A 10.244.1.126
myapp-headless.default.svc.cluster.local. 30 IN A 10.244.2.111

;; Query time: 0 msec
;; SERVER: 10.244.0.44#53(10.244.0.44)
;; WHEN: Fri May 01 09:57:24 CST 2020
;; MSG SIZE rcvd: 237

[root@k8s-master01 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
myapp-deploy-677ccc888c-m42jt 1/1 Running 0 48m 10.244.1.125 k8s-node01 <none> <none>
myapp-deploy-677ccc888c-rgsn4 1/1 Running 0 48m 10.244.2.111 k8s-node02 <none> <none>
myapp-deploy-677ccc888c-w6hzg 1/1 Running 0 48m 10.244.1.126 k8s-node01 <none> <none>
```
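From a client's point of view, a headless name resolves to every Pod IP behind the Service rather than to one cluster IP, so the client has to choose a backend itself. The following minimal sketch uses only the standard library. `service_fqdn` and `resolve_ipv4` are hypothetical helpers, and cluster.local is assumed to be the cluster domain, as in the queries above.

```python
import socket


def service_fqdn(service: str, namespace: str = "default", cluster_domain: str = "cluster.local") -> str:
    """Build {service-name}.{namespace}.svc.{cluster-domain}."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve_ipv4(name: str) -> list[str]:
    """Return every distinct IPv4 address the resolver gives back for `name`, sorted."""
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


print(service_fqdn("myapp-headless"))
# myapp-headless.default.svc.cluster.local
```

Run inside a Pod of this cluster, `resolve_ipv4(service_fqdn("myapp-headless"))` would be expected to return the three Pod IPs from the dig answer. For myapp-service it would return only the single cluster IP.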

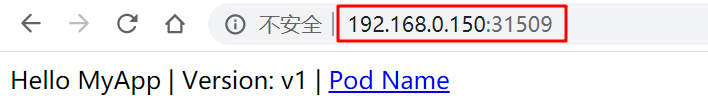

NodePort

NodePort works by opening a port on every Node. Traffic sent to that port is handed to kube-proxy, which passes it on to the corresponding Pods.
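The node port in this example (31509) is picked automatically. The sketch below approximates that choice. It assumes the kube-apiserver default --service-node-port-range of 30000-32767. `allocate_node_port` is a hypothetical helper; the real allocator keeps its state in the cluster, not in a Python set. A port can also be fixed explicitly with spec.ports[].nodePort, which the `requested` argument mimics.

```python
import random

# Assumption: kube-apiserver's default --service-node-port-range (30000-32767).
NODE_PORT_RANGE = range(30000, 32768)


def allocate_node_port(in_use, requested=None, rng=None):
    """Hypothetical allocator: honour an explicit nodePort, otherwise pick a random free one."""
    if requested is not None:
        if requested not in NODE_PORT_RANGE:
            raise ValueError(f"{requested} is outside the NodePort range")
        if requested in in_use:
            raise ValueError(f"{requested} is already allocated")
        return requested
    if rng is None:
        rng = random.Random()
    free = [p for p in NODE_PORT_RANGE if p not in in_use]
    if not free:
        raise RuntimeError("NodePort range exhausted")
    return rng.choice(free)


used = {31509}                   # the port myapp-nodeport received in this example
print(allocate_node_port(used))  # some free port between 30000 and 32767
```

The allocated port is cluster-wide: kube-proxy opens the same port on every node, which is why it must be unique across all Services.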

Create nodeport.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-nodeport
spec:
  selector:
    app: myapp
    release: stabel
  ports:
    - port: 80
      targetPort: 80
  type: NodePort
```

Test

```
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
myapp-headless ClusterIP None <none> 80/TCP 36m
myapp-nodeport NodePort 10.105.209.63 <none> 80:31509/TCP 5s
myapp-service ClusterIP 10.104.50.207 <none> 80/TCP 52m
# every node opens this port
[root@k8s-master01 ~]# netstat -anp | grep 31509
tcp6 0 0 :::31509 :::* LISTEN 3338/kube-proxy
```
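netstat only shows the listener on the node where it runs. To confirm from outside that each node accepts connections on the NodePort, a plain TCP connect is enough. The following minimal sketch uses a hypothetical helper, `port_open`. The node IPs are the host IPs from the kube-system Pod listing earlier. A successful connect means the node accepted the connection and handed it to a backend.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Try a TCP connect; True if something accepted the connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


NODES = ["192.168.0.150", "192.168.0.151", "192.168.0.152"]  # host IPs seen in this cluster

# Run from a machine that can reach the nodes:
# for node in NODES:
#     print(node, port_open(node, 31509))
```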

LoadBalancer

LoadBalancer is a service provided by the cloud provider. Under the hood it works the same way as NodePort.

ExternalName

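This type of Service maps the Service to the contents of the externalName field (for example, www.123.com) by returning a CNAME record with that value. An ExternalName Service is a special case of Service: it has no selector and defines no ports or Endpoints. Instead, for a service running outside the cluster, it provides access by returning an alias for that external service.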

Create ex.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-ex
spec:
  type: ExternalName
  externalName: www.123.com
```

Verify

```
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
myapp-ex ExternalName <none> www.123.com <none> 6s
myapp-headless ClusterIP None <none> 80/TCP 99m
myapp-nodeport NodePort 10.105.209.63 <none> 80:31509/TCP 62m
myapp-service ClusterIP 10.104.50.207 <none> 80/TCP 115m
[root@k8s-master01 ~]# dig -t A myapp-ex.default.svc.cluster.local. @10.244.0.44

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-16.P2.el7_8.2 <<>> -t A myapp-ex.default.svc.cluster.local. @10.244.0.44
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 41068
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;myapp-ex.default.svc.cluster.local. IN A

;; ANSWER SECTION:
myapp-ex.default.svc.cluster.local. 30 IN CNAME www.123.com.
www.123.com. 30 IN A 61.132.13.130

;; Query time: 68 msec
;; SERVER: 10.244.0.44#53(10.244.0.44)
;; WHEN: Fri May 01 11:08:54 CST 2020
;; MSG SIZE rcvd: 149
```

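When the host myapp-ex.default.svc.cluster.local is queried, the cluster DNS service returns a CNAME record with the value www.123.com. Accessing this Service works the same way as accessing any other Service. The only difference is that the redirection happens at the DNS layer, and no proxying or forwarding takes place.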
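The two answer lines in the dig output correspond to two hops. The first, the CNAME, is all the ExternalName Service contributes. The second, the A record, comes from www.123.com's own DNS. A toy resolver over an in-memory zone shows the CNAME-chasing step. The zone contents are copied from the dig output above for illustration, and `resolve_a` is a hypothetical helper, not a real DNS client.

```python
# Illustrative zone data copied from the dig output above (name -> (type, value)).
ZONE = {
    "myapp-ex.default.svc.cluster.local.": ("CNAME", "www.123.com."),
    "www.123.com.": ("A", "61.132.13.130"),
}


def resolve_a(name, zone, max_hops=8):
    """Follow CNAME records until an A record is reached; return (chain, address)."""
    chain = [name]
    for _ in range(max_hops):
        record = zone.get(name)
        if record is None:
            raise LookupError(f"no record for {name}")
        rtype, value = record
        if rtype == "A":
            return chain, value
        name = value  # CNAME: restart the lookup at the alias target
        chain.append(name)
    raise LookupError("too many CNAME hops")


print(resolve_a("myapp-ex.default.svc.cluster.local.", ZONE))
# (['myapp-ex.default.svc.cluster.local.', 'www.123.com.'], '61.132.13.130')
```

Because the alias is resolved by the client's DNS lookup, the connection goes straight to 61.132.13.130. No ClusterIP, iptables or ipvs rule is involved.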