Ceph : performance, reliability and scalability storage solution

| Software version | 0.72.2 |
|---|---|
| Operating System | Debian 7 |
| Website | Ceph Website |
| Last Update | 02/06/2014 |
| Others | |

1 Introduction

Ceph is an open-source, massively scalable, software-defined storage system which provides object, block and file system storage in a single platform. It runs on commodity hardware, saving you costs and giving you flexibility, and because it’s in the Linux kernel, it’s easy to consume.[1]

Ceph can manage three kinds of storage:

- Object Storage : Ceph provides seamless access to objects using native language bindings or radosgw, a REST interface that’s compatible with applications written for S3 and Swift.

- Block Storage : Ceph’s RADOS Block Device (RBD) provides access to block device images that are striped and replicated across the entire storage cluster.

- File System : Ceph provides a POSIX-compliant network file system that aims for high performance, large data storage, and maximum compatibility with legacy applications (not yet stable).

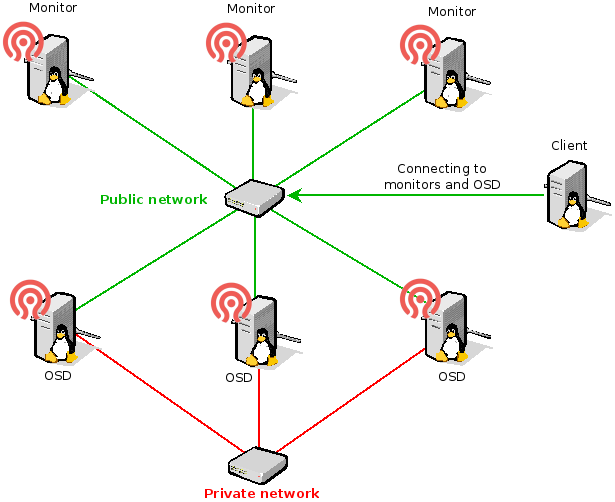

Whether you want to provide Ceph Object Storage and/or Ceph Block Device services to Cloud Platforms, deploy a Ceph Filesystem or use Ceph for another purpose, all Ceph Storage Cluster deployments begin with setting up each Ceph Node, your network and the Ceph Storage Cluster. A Ceph Storage Cluster requires at least one Ceph Monitor and at least two Ceph OSD Daemons. The Ceph Metadata Server is essential when running Ceph Filesystem clients.

- OSDs: A Ceph OSD Daemon (OSD) stores data, handles data replication, recovery, backfilling, rebalancing, and provides some monitoring information to Ceph Monitors by checking other Ceph OSD Daemons for a heartbeat. A Ceph Storage Cluster requires at least two Ceph OSD Daemons to achieve an active + clean state when the cluster makes two copies of your data (Ceph makes 2 copies by default, but you can adjust it).

- Monitors: A Ceph Monitor maintains maps of the cluster state, including the monitor map, the OSD map, the Placement Group (PG) map, and the CRUSH map. Ceph maintains a history (called an “epoch”) of each state change in the Ceph Monitors, Ceph OSD Daemons, and PGs.

- MDSs: A Ceph Metadata Server (MDS) stores metadata on behalf of the Ceph Filesystem (i.e., Ceph Block Devices and Ceph Object Storage do not use MDS). Ceph Metadata Servers make it feasible for POSIX file system users to execute basic commands like ls, find, etc. without placing an enormous burden on the Ceph Storage Cluster.

Ceph stores a client’s data as objects within storage pools. Using the CRUSH algorithm, Ceph calculates which placement group should contain the object, and further calculates which Ceph OSD Daemon should store the placement group. The CRUSH algorithm enables the Ceph Storage Cluster to scale, rebalance, and recover dynamically.

1.1 Testing case

If you want to test with Vagrant and VirtualBox, I've made a Vagrantfile for it, running on Debian Wheezy:

This spawns VMs with the right hardware and installs Ceph on them. After booting those instances, you will have all the Ceph servers, like this:

2 Installation

2.1 First node

To get the latest version, we're going to use the official repositories:

Then install Ceph and ceph-deploy, which helps deploy all the components faster:

```
aptitude update
aptitude install ceph ceph-deploy openntpd
```

OpenNTPD is not mandatory, but the clocks of all your machines must be synchronized!

You absolutely need properly named servers (hostnames) with DNS names available. A DNS server is mandatory.

2.2 Other nodes

To install Ceph on any kind of node from the first (admin) node, here is a simple solution that avoids adding the apt key and repository by hand on each one:

```
ceph-deploy install <node_name>
```

For production use, you should pin a specific stable release such as emperor:

```
ceph-deploy install --release emperor <node_name>
```

You need to exchange SSH keys so that the admin node can connect remotely to the target machines.
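Here is a minimal sketch of that key exchange. It assumes you deploy as root (as in the rest of this article) and that OpenSSH's `ssh-keygen` and `ssh-copy-id` are available on the admin node; the function name and node names are only examples. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create an SSH key pair on the admin node if none exists yet, then copy the
# public key to root on every node given as argument.
exchange_ssh_keys() {
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run mkdir -p -m 700 "$HOME/.ssh" || return 1
        run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa" || return 1
    fi
    for node in "$@"; do
        run ssh-copy-id "root@$node" || return 1
    done
}
```

For example, `exchange_ssh_keys mon1 mon2 mon3 osd1` (hypothetical node names) prepares every node for ceph-deploy in one go.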

3 Deploy

ceph-deploy generates your Ceph configuration in the current directory, so it's best to create a dedicated directory for it:

```
mkdir ceph-config
cd ceph-config
```

Get the network configuration of the monitor nodes right from the start, as it's a nightmare to change later!

3.1 Cluster

On the first machine you start with, you need to create the Ceph cluster. I'll do it here on the monitor server named mon1:

3.2 Monitor

3.2.1 Add the first monitor

To create the first monitor node (mon1 here; only the first one for now, because ceph-deploy has problems with additional monitors):

3.2.2 Add a monitor

To add two other monitor nodes (mon2 and mon3) to the cluster, you need to edit the configuration on a monitor and admin node. You have to set mon_host, mon_initial_members and public_network in:

Then update the current cluster configuration:

```
ceph-deploy --overwrite-conf config push mon2
ceph-deploy --overwrite-conf config push mon3
```

or, in a single command:

```
ceph-deploy --overwrite-conf config push mon2 mon3
```

You now need to push the new configuration to all your monitor nodes:

```
ceph-deploy --overwrite-conf config push mon1 mon2 mon3
```

For production use, you need at least 3 monitor nodes.
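To avoid typos in the three monitor settings, you can generate them. This is a sketch with hypothetical names and addresses: it prints the lines to put in the [global] section of the ceph.conf generated by ceph-deploy (replacing its existing mon_initial_members and mon_host lines), with monitors and IP addresses given in the same order.

```shell
#!/bin/sh
# Print the monitor settings for the [global] section of ceph.conf.
#   $1: space-separated monitor hostnames, e.g. "mon1 mon2 mon3"
#   $2: space-separated monitor IP addresses, in the same order
#   $3: public network in CIDR notation
mon_conf() {
    # Word splitting of $1 and $2 is intentional here.
    members=$(printf '%s\n' $1 | paste -sd, - | sed 's/,/, /g')
    hosts=$(printf '%s\n' $2 | paste -sd, -)
    printf 'mon_initial_members = %s\n' "$members"
    printf 'mon_host = %s\n' "$hosts"
    printf 'public_network = %s\n' "$3"
}
```

For example, `mon_conf "mon1 mon2 mon3" "192.168.0.1 192.168.0.2 192.168.0.3" 192.168.0.0/24` prints `mon_initial_members = mon1, mon2, mon3`, `mon_host = 192.168.0.1,192.168.0.2,192.168.0.3` and `public_network = 192.168.0.0/24`.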

3.2.3 Remove a monitor

If you need to remove a monitor for maintenance:

```
ceph-deploy mon destroy mon1
```

3.3 Admin node

To set up the first admin node, you need to gather the keys from a monitor node. To keep things simple, run all ceph-deploy commands from that machine:

Then exchange SSH keys so that this node can connect remotely to the target machines.

3.4 OSD

3.4.1 Add an OSD

To deploy a Ceph OSD, first erase the remote disk and create a GPT partition table on the dedicated disk 'sdb':

Then create the partitions on the 'osd1' server (a journal partition and a data partition) and prepare and activate this OSD:

You may see an error, but it works:

3.4.2 Get OSD status

To get the OSD status:

3.4.3 Remove an OSD

Removing an OSD is unfortunately not yet integrated in ceph-deploy, so it has to be done by hand. First, look at the current status:

Here I want to remove osd.0:

```
osd='osd.0'
```

If the OSD is not already out, mark it out with this command (here it already was):

```
> ceph osd out $osd
osd.0 is already out.
```

Then remove it from the CRUSH map:

```
> ceph osd crush remove $osd
removed item id 0 name 'osd.0' from crush map
```

Delete its authentication key:

```
ceph auth del $osd
```

Then remove the OSD itself (its daemon must already be stopped, as ceph osd rm refuses to remove an OSD that is still up):

```
ceph osd rm $osd
```

And now it's definitely gone:
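The removal steps above can be grouped into one function. This is only a sketch (it is not part of ceph-deploy): it takes the numeric OSD ID, assumes the OSD daemon has already been stopped on its host, and prints the commands instead of running them when `DRY_RUN` is set.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Remove OSD number $1 from the cluster with the manual steps above.
# Assumes the OSD daemon has already been stopped on its host.
# Stops at the first command that fails.
remove_osd() {
    osd="osd.$1"
    run ceph osd out "$osd" || return 1           # stop mapping data to it
    run ceph osd crush remove "$osd" || return 1  # remove it from the CRUSH map
    run ceph auth del "$osd" || return 1          # delete its authentication key
    run ceph osd rm "$osd"                        # remove it from the OSD map
}
```

Running `DRY_RUN=1 remove_osd 0` first lets you check the four commands for osd.0 before executing them for real.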

3.5 RBD

To create block devices, you only need a working OSD configuration with a pool created. Nothing else is required :-)

4 Configuration

4.1 OSD

4.1.1 OSD Configuration

4.1.1.1 Global OSD configuration

The Ceph Client retrieves the latest cluster map, and the CRUSH algorithm calculates how to map the object to a placement group, then how to assign the placement group to a Ceph OSD Daemon dynamically. By default Ceph keeps 2 replicas; to keep 3 instead, add these lines to the Ceph configuration[2]:

```
[global]
osd pool default size = 3
osd pool default min size = 1
```

- osd pool default size : the number of replicas

- osd pool default min size : the minimum number of replicas that must be available for a placement group to keep accepting I/O

Configure the number of placement groups (total PGs = (number of OSDs * 100) / number of replicas):

```
[global]
osd pool default pg num = 100
osd pool default pgp num = 100
```

Then you can push your new configuration:

```
ceph-deploy --overwrite-conf config push mon1 mon2 mon3
```
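The placement group formula is easy to script. A small sketch; the rounding up to a power of two is not part of the formula above and comes from the upstream Ceph placement-group guidance, so treat it as an optional extra step.

```shell
#!/bin/sh
# Rule of thumb: total PGs = (number of OSDs * 100) / number of replicas
#   $1: number of OSDs   $2: number of replicas (osd pool default size)
pg_total() {
    echo $(( $1 * 100 / $2 ))
}

# Round $1 up to the next power of two (optional, upstream PG guidance).
next_pow2() {
    p=1
    while [ "$p" -lt "$1" ]; do
        p=$(( p * 2 ))
    done
    echo "$p"
}
```

For example, with 3 OSDs and 3 replicas, `pg_total 3 3` prints 100 and `next_pow2 100` prints 128.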

4.1.1.2 Network configuration

Each OSD has 2 network interfaces (private and public). To configure them properly, update the configuration file on your admin machine as follows:

```
[osd]
cluster network = 192.168.33.0/24
public network = 192.168.0.0/24
```

If you want to add a more specific configuration:

Then you can push your new configuration:

```
ceph-deploy --overwrite-conf config push mon1 mon2 mon3
```

4.1.2 Create an OSD pool

To create an OSD pool:

```
ceph osd pool create <pool_name> <pg_num> <pgp_num>
```

4.1.3 List OSD pools

You can list the OSD pools:

```
> ceph osd lspools
0 data,1 metadata,2 rbd,3 mypool,
```

4.2 Crush map

The CRUSH map[3] contains a list of OSDs and a list of 'buckets' that aggregate the devices into physical locations. You can edit[4] it to manage data placement manually.

To show the OSD hierarchy of your Ceph cluster:

```
ceph osd tree
```

To get the CRUSH map and decompile it into an editable text file:

```
ceph osd getcrushmap -o mymap
crushtool -d mymap -o mymap.txt
```

To compile and set the new CRUSH map after editing:

```
crushtool -c mymap.txt -o mynewmap
ceph osd setcrushmap -i mynewmap
```
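The whole CRUSH map round trip can be wrapped in one function. This is a sketch using only the commands shown above, run in the current directory; the editor defaults to vi when `EDITOR` is not set (an assumption), and `DRY_RUN` prints the commands instead of running them.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Fetch, decompile, edit, recompile and inject the CRUSH map.
edit_crushmap() {
    run ceph osd getcrushmap -o mymap || return 1
    run crushtool -d mymap -o mymap.txt || return 1
    run "${EDITOR:-vi}" mymap.txt || return 1
    # If the edited map does not compile, stop before touching the cluster.
    run crushtool -c mymap.txt -o mynewmap || return 1
    run ceph osd setcrushmap -i mynewmap
}
```

Because every step stops on failure, a syntax error in mymap.txt makes crushtool fail and the live CRUSH map is left unchanged.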

To get the quorum status of the monitors:

```
ceph quorum_status --format json-pretty
```

5 Usage

5.1 Check health

You can check the cluster health:
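`ceph health` prints a one-line summary of the cluster state (HEALTH_OK, HEALTH_WARN or HEALTH_ERR, as in the FAQ). After adding OSDs or changing the CRUSH map, a small polling loop can wait for the cluster to settle. This is a sketch; the function name, default attempt count and delay are arbitrary choices.

```shell
#!/bin/sh
# Poll `ceph health` until it reports HEALTH_OK.
#   $1: maximum number of attempts (default 60)
#   $2: seconds between attempts (default 5)
# Returns 0 once healthy, 1 if the cluster is still unhealthy at the end.
wait_for_health_ok() {
    attempts=${1:-60}
    delay=${2:-5}
    i=0
    status=""
    while [ "$i" -lt "$attempts" ]; do
        status=$(ceph health 2>/dev/null)
        case "$status" in
            HEALTH_OK*) return 0 ;;
        esac
        i=$(( i + 1 ))
        [ "$i" -lt "$attempts" ] && sleep "$delay"
    done
    echo "cluster not healthy: $status" >&2
    return 1
}
```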

5.2 Change configuration on the fly

To avoid restarting services for a simple change, you can interact directly with Ceph to change some values at runtime. First, you can get all the current values of your Ceph cluster:

Now, to change one of those values:

```
> ceph tell osd.* injectargs '--mon_clock_drift_allowed 1'
osd.0: mon_clock_drift_allowed = '1'
osd.1: mon_clock_drift_allowed = '1'
osd.2: mon_clock_drift_allowed = '1'
```

You can replace '*' with an OSD number (for example osd.1) to apply the change to a single daemon.

Then don't forget to add it to your Ceph configuration and push it, since injected values are lost when the daemon restarts!

5.3 Use object storage

5.3.1 Add object

When you have a Ceph OSD pool ready, you can add a file:

```
rados put <object_name> <file_to_upload> --pool=<pool_name>
```

5.3.2 List objects in a pool

You can list your pool content:

```
> rados -p <pool_name> ls
filename
```

You can see where it has been stored:

```
> ceph osd map <pool_name> <filename>
osdmap e62 pool '<pool_name>' (3) object 'filename' -> pg 3.af0f2847 (3.47) -> up [1,0] acting [1,0]
```

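To locate the file on the hard drive, go to the data directory of the primary OSD, which is the first one in the acting set (here osd.1, with a replica on osd.0): /var/lib/ceph/osd/ceph-1/current. The placement group from the previous result (3.47) gives the subdirectory, and the object hash (af0f2847) appears in upper case in the file name. So the file is stored here:

```
> ls /var/lib/ceph/osd/ceph-1/current/3.47_head
cephulog__head_AF0F2847__3
```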
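The lookup above can be scripted. This sketch parses one line of `ceph osd map` output in the format shown and prints the placement-group directory on the primary OSD, plus the upper-case hash to look for in the file name. It assumes the default /var/lib/ceph/osd/ceph-<id> data path and the `current/<pgid>_head` layout shown above; the function names are hypothetical.

```shell
#!/bin/sh
# Placement-group directory of an object on its primary OSD, from one line of
# `ceph osd map <pool> <object>` output.
osd_map_to_dir() {
    # PG id in parentheses after the hash, e.g. "(3.47)" -> 3.47
    pgid=$(printf '%s\n' "$1" | sed -n 's/.*-> pg [^ ]* (\([^)]*\)).*/\1/p')
    # The first OSD of the acting set is the primary, e.g. "acting [1,0]" -> 1
    primary=$(printf '%s\n' "$1" | sed -n 's/.*acting \[\([0-9]*\).*/\1/p')
    printf '/var/lib/ceph/osd/ceph-%s/current/%s_head\n' "$primary" "$pgid"
}

# Object hash as it appears (upper case) in the on-disk file name.
osd_map_hash() {
    printf '%s\n' "$1" | sed -n 's/.*-> pg [0-9]*\.\([0-9a-f]*\) .*/\1/p' | tr 'a-f' 'A-F'
}
```

On the primary OSD host, `line=$(ceph osd map mypool filename)` followed by `ls "$(osd_map_to_dir "$line")" | grep "$(osd_map_hash "$line")"` should list the object's file (mypool being a hypothetical pool name).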

5.3.3 Remove objects

To finish, remove it:

```
rados rm <filename> --pool=<pool_name>
```

5.4 Use block device storage

This part becomes really interesting once you start using block device storage. From an admin node, install Ceph on the client and push the admin configuration and key to it:

```
ceph-deploy install <client>
ceph-deploy admin <client>
```

On the client, load the rbd kernel module and make it load at boot:

```
modprobe rbd
echo "rbd" >> /etc/modules
```
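Note that the echo line appends "rbd" again every time it is run. A slightly safer sketch only appends the line when it is missing; the function name is hypothetical, and it takes the file as a parameter so you can try it on a scratch file first.

```shell
#!/bin/sh
# Append line $2 to file $1 unless the file already contains exactly that line.
# If the file does not exist yet, it is created.
ensure_line() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

On the client, as root: `modprobe rbd` then `ensure_line /etc/modules rbd`.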

5.4.1 Create a block device

Now create a block device (you can do it on the client node if you want, as it has the admin key last pushed by ceph-deploy):

```
rbd create <name> --size <size_in_megabytes> [--pool <pool_name>]
```

If you don't specify the --pool option, the image is created in the 'rbd' pool.

5.4.2 List available block devices

It's very simple:

```
> rbd ls
bd_name
```

And you can show the mapped devices:

```
> rbd showmapped
id pool image   snap device
0  rbd  bd_name -    /dev/rbd0
```

5.4.3 Get block device information

You may need information about a block device, for instance to know how it is stored or simply its size:

```
> rbd info <name>
rbd image 'bd_name':
    size 4096 MB in 1024 objects
    order 22 (4096 kB objects)
    block_name_prefix: rb.0.139d.74b0dc51
    format: 1
```
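The order field is the base-2 logarithm of the object size: order 22 means 2^22 bytes, i.e. 4096 kB objects, which is why the 4096 MB image above is made of 1024 objects. A small sketch of that arithmetic, with sizes in MB as rbd create takes them (the function names are hypothetical):

```shell
#!/bin/sh
# Object size in kB for an rbd "order" (object size = 2^order bytes).
rbd_object_kb() {
    echo $(( (1 << $1) / 1024 ))
}

# Number of objects an image of $1 MB is split into with order $2,
# rounded up for sizes that are not a multiple of the object size.
rbd_object_count() {
    obj_bytes=$(( 1 << $2 ))
    size_bytes=$(( $1 * 1024 * 1024 ))
    echo $(( (size_bytes + obj_bytes - 1) / obj_bytes ))
}
```

With the values above, `rbd_object_kb 22` prints 4096 and `rbd_object_count 4096 22` prints 1024.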

5.4.4 Map and mount a block device

First you need to map it so that it appears in your device list:

```
rbd map <name> [--pool <pool_name>]
```

You can now format it:

```
mkfs.ext4 /dev/rbd/pool_name/name
```

And now mount it:

```
mount /dev/rbd/pool_name/name /mnt
```

That's it :-)

5.4.5 Remove a block device

Removing it is just as simple (unmount and unmap it first if it is mapped):

```
rbd rm <name> [--pool <pool_name>]
```

5.4.6 Unmount and unmap a block device

Unmounting and unmapping are as easy as you would expect:

```
umount /mnt
rbd unmap /dev/rbd/pool_name/name
```
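Putting the previous subsections together, here is a sketch of the full life cycle of an image on a client. The image, pool, size and mount point are parameters you choose; the functions rely on the /dev/rbd/<pool>/<image> paths used above and add `udevadm settle` to wait for that path to appear (this assumes udev manages /dev, as on Debian 7). The teardown unmounts and unmaps before removing the image, since removing an image that is still mapped fails. `DRY_RUN` prints the commands instead of running them.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# $1: image name  $2: size in MB  $3: pool  $4: mount point
rbd_setup() {
    dev="/dev/rbd/$3/$1"
    run rbd create "$1" --size "$2" --pool "$3" || return 1
    run rbd map "$1" --pool "$3" || return 1
    run udevadm settle || return 1       # wait for the /dev/rbd/... path
    run mkfs.ext4 "$dev" || return 1     # formats the new image
    run mount "$dev" "$4"
}

# $1: image name  $2: pool  $3: mount point
rbd_teardown() {
    run umount "$3" || return 1
    run rbd unmap "/dev/rbd/$2/$1" || return 1
    run rbd rm "$1" --pool "$2"
}
```

For example, `rbd_setup bd_name 4096 rbd /mnt` and later `rbd_teardown bd_name rbd /mnt`.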

5.4.7 Advanced usage

I won't list all the features, but you can read the rbd man page to learn about:

- clone

- export/import

- snapshot

- bench write

...

6 FAQ

6.1 Reset a node

You sometimes need to reset a node, generally when you're doing tests. From the admin node, run:

```
ceph-deploy purge <node_name>
ceph-deploy purgedata <node_name>
```

Then reinstall it with:

```
ceph-deploy install <node_name>
```

6.2 Can't add a new monitor node

If you can't add a new monitor (here mon2)[5]:

You have to add the public network and the new monitor to the monitor list in the configuration file. See the "Add a monitor" section above to add a new monitor correctly.

6.3 health HEALTH_WARN clock skew detected

If you get this kind of problem:

You need to install an NTP server: by default the monitors tolerate only 0.05 seconds of clock drift, so even milliseconds matter. Here is a workaround:

```
[global]
mon_clock_drift_allowed = 1
```

This sets the allowed clock drift to 1 second, which avoids the warning message.