1. Configure the remote server

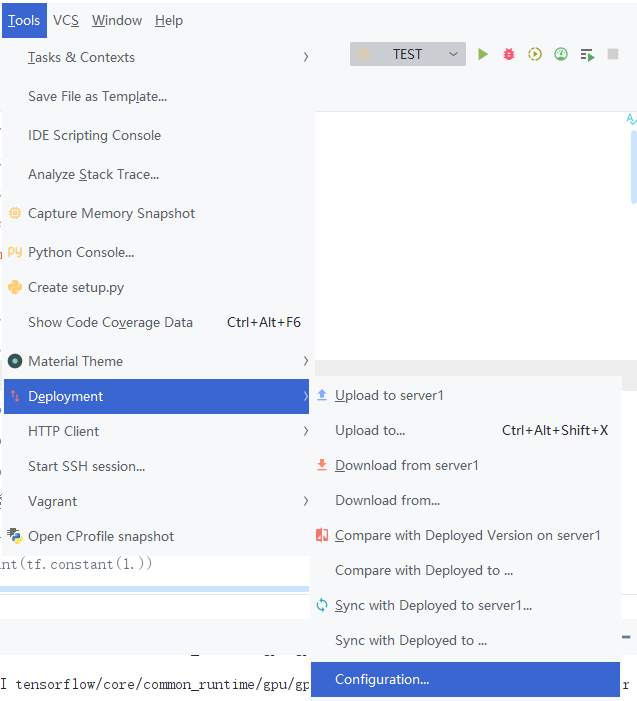

Tools > Deployment > Configuration

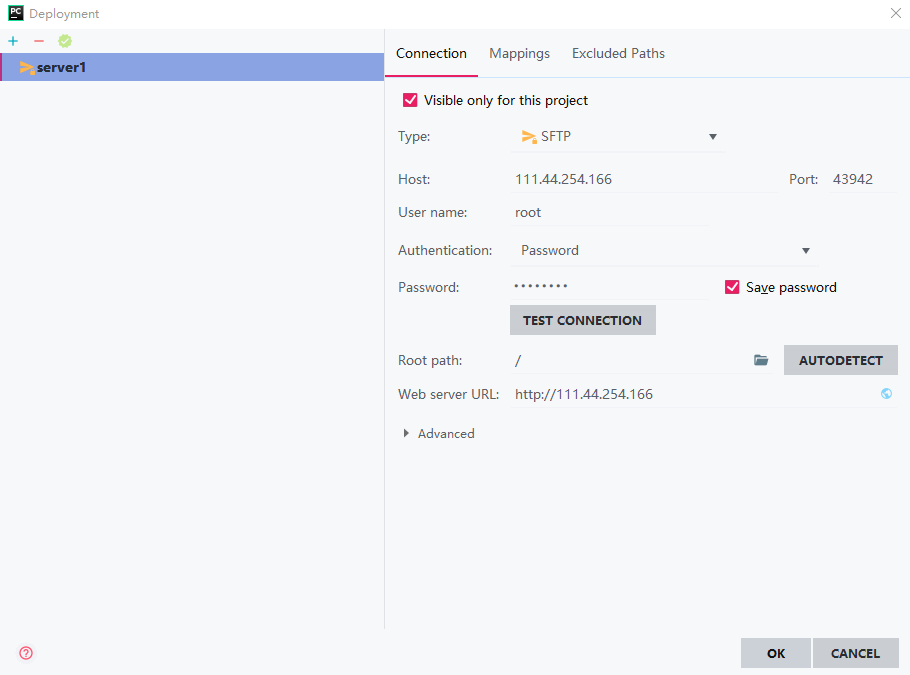

Then click the plus sign to add a remote server entry.

I named mine server1. Set Type to SFTP. Host is the server's IP address, Port is the port number, and User name is the login user. Then enter the password and save it.

Click Test Connection, then have the Root path detected automatically and click OK.
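If Test Connection fails, it can help to first check whether the SFTP port is reachable from your machine at all. The following is a minimal sketch using only the Python standard library. The helper name `is_port_open` is made up for this illustration, and the host and port in the comment are placeholders, not values from this setup. It only checks that a TCP connection can be opened. It does not check SSH authentication.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Hypothetical helper for illustration: it only tests reachability,
    not the SSH/SFTP login itself.
    """
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return False


# Example call (placeholder address, replace with your Host and Port):
# is_port_open("203.0.113.10", 22)
```

If this returns False, the problem is most likely the network, a firewall, or a wrong port, rather than the user name or password.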

2. Add the remote debugging environment

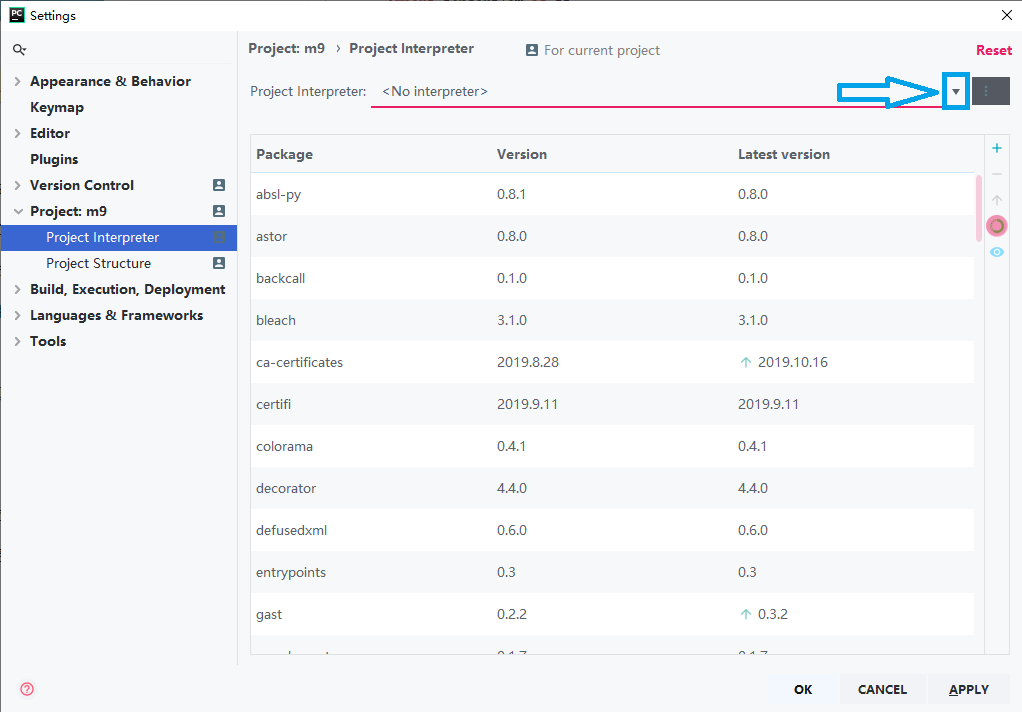

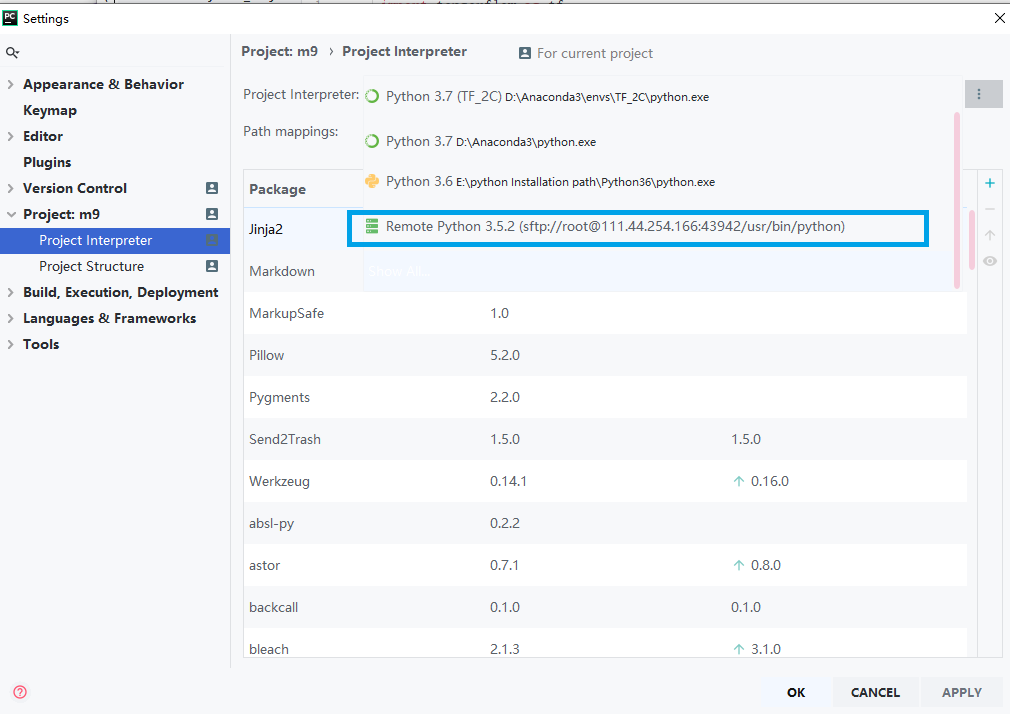

File > Settings > Project > Project Interpreter

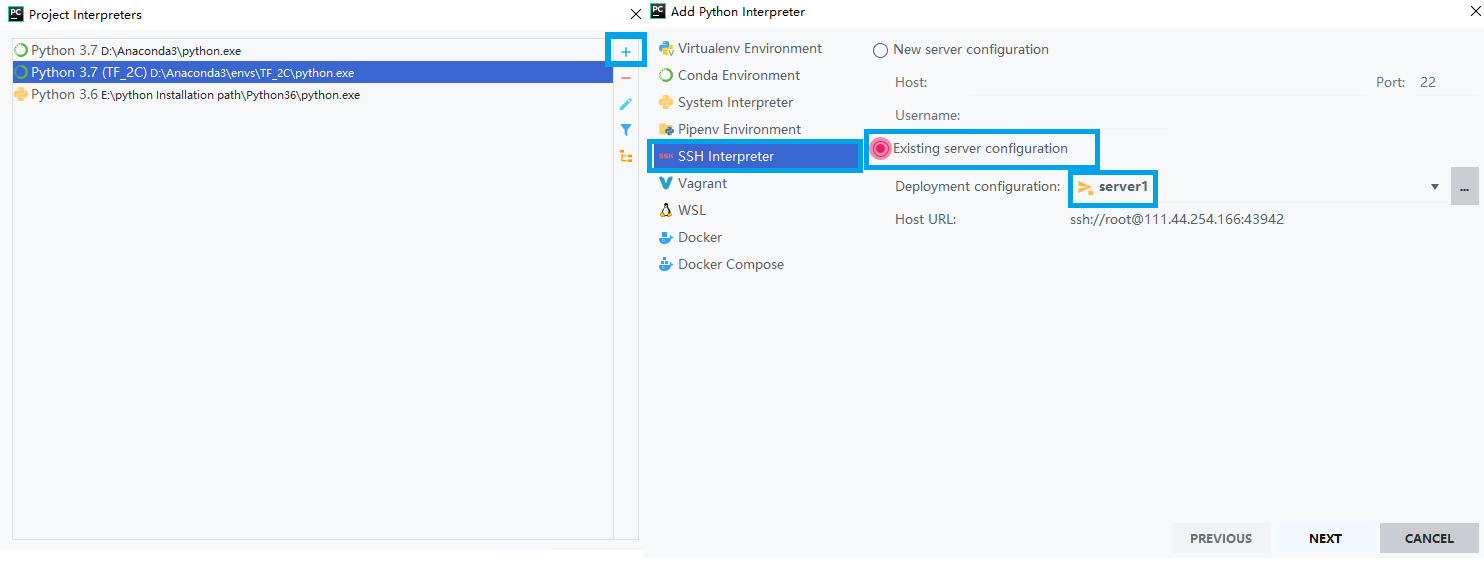

Show All > plus sign (Add) > SSH Interpreter > Existing server configuration > server1 > Next > Finish
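One quick way to confirm that the project now runs on the remote interpreter is to print where Python is running. This is a small sketch with an illustrative function name (`describe_interpreter`). When run through the SSH interpreter, the executable path and hostname should be those of the server, not of your local machine.

```python
import platform
import socket
import sys


def describe_interpreter() -> dict:
    """Collect basic facts about the interpreter executing this script."""
    return {
        "executable": sys.executable,            # path of the running Python binary
        "python_version": platform.python_version(),
        "hostname": socket.gethostname(),        # machine the code actually runs on
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```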

3. Test

Create a new file in the project and run some code.

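    import tensorflow as tf

    # True if this TensorFlow build was compiled with CUDA support
    a = tf.test.is_built_with_cuda()
    # True if TensorFlow can actually see a usable GPU
    b = tf.test.is_gpu_available(
        cuda_only=False,
        min_cuda_compute_capability=None
    )
    print(a)
    print(b)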

Result:

    ssh://root@111.44.254.166:43942/usr/bin/python -u /root/tmp/pycharm_project_928/test.py
    2019-10-22 22:54:51.954587: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
    2019-10-22 22:54:52.058581: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:897] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
    2019-10-22 22:54:52.059029: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1392] Found device 0 with properties:
    name: GeForce RTX 2080 major: 7 minor: 5 memoryClockRate(GHz): 1.71
    pciBusID: 0000:01:00.0
    totalMemory: 7.77GiB freeMemory: 7.62GiB
    2019-10-22 22:54:52.059044: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1471] Adding visible gpu devices: 0
    2019-10-22 22:54:52.275783: I tensorflow/core/common_runtime/gpu/gpu_device.cc:952] Device interconnect StreamExecutor with strength 1 edge matrix:
    2019-10-22 22:54:52.275834: I tensorflow/core/common_runtime/gpu/gpu_device.cc:958]      0
    2019-10-22 22:54:52.275842: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0:   N
    2019-10-22 22:54:52.275968: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1084] Created TensorFlow device (/device:GPU:0 with 7342 MB memory) -> physical GPU (device: 0, name: GeForce RTX 2080, pci bus id: 0000:01:00.0, compute capability: 7.5)
    True
    True
    Process finished with exit code 0
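The run above uses `tf.test.is_gpu_available`, which is deprecated in TensorFlow 2.x. As a sketch for newer installs, and assuming TensorFlow 2.1 or later on the server (this differs from the version that produced the log above), the same check can be done with `tf.config.list_physical_devices`. The wrapper name `gpu_report` is made up for this example. It also returns a result when TensorFlow is not installed, so it can be used to tell "no TensorFlow" apart from "no GPU".

```python
import importlib.util


def gpu_report() -> dict:
    """Summarise TensorFlow's GPU visibility (assumes TensorFlow >= 2.1 if installed)."""
    if importlib.util.find_spec("tensorflow") is None:
        # TensorFlow is missing from this interpreter's environment.
        return {"tensorflow_installed": False, "built_with_cuda": None, "gpus": []}

    import tensorflow as tf

    # Each entry is a PhysicalDevice; an empty list means no GPU is visible.
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "tensorflow_installed": True,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],
    }


if __name__ == "__main__":
    print(gpu_report())
```

An empty `gpus` list with `built_with_cuda` set to True usually points to a driver or CUDA library problem on the server rather than a PyCharm configuration issue.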