▶ 使用Jacobi 迭代求泊松方程的数值解

● 使用 data 构件,强行要求 u0 仅拷入和拷出 GPU 各一次,u1 仅拷入GPU 一次

1 #include <stdio.h> 2 #include <stdlib.h> 3 #include <math.h> 4 #include <time.h> 5 #include <openacc.h> 6 7 #if defined(_WIN32) || defined(_WIN64) 8 #include <C:Program FilesPGIwin6419.4includewrapsys imeb.h> 9 #define timestruct clock_t 10 #define gettime(a) (*(a) = clock()) 11 #define usec(t1,t2) (t2 - t1) 12 #else 13 #include <sys/time.h> 14 #define gettime(a) gettimeofday(a, NULL) 15 #define usec(t1,t2) (((t2).tv_sec - (t1).tv_sec) * 1000000 + (t2).tv_usec - (t1).tv_usec) 16 typedef struct timeval timestruct; 17 #endif 18 19 inline float uval(float x, float y) 20 { 21 return x * x + y * y; 22 } 23 24 int main() 25 { 26 const int row = 8191, col = 1023; 27 const float height = 1.0, width = 2.0; 28 const float hx = height / row, wy = width / col; 29 const float fij = -4.0f; 30 const float hx2 = hx * hx, wy2 = wy * wy, c1 = hx2 * wy2, c2 = 1.0f / (2.0 * (hx2 + wy2)); 31 const int maxIter = 100; 32 const int colPlus = col + 1; 33 34 float *restrict u0 = (float *)malloc(sizeof(float)*(row + 1)*colPlus); 35 float *restrict u1 = (float *)malloc(sizeof(float)*(row + 1)*colPlus); 36 float *utemp = NULL; 37 38 // 初始化 39 for (int ix = 0; ix <= row; ix++) 40 { 41 u0[ix*colPlus + 0] = u1[ix*colPlus + 0] = uval(ix * hx, 0.0f); 42 u0[ix*colPlus + col] = u1[ix*colPlus + col] = uval(ix*hx, col * wy); 43 } 44 for (int jy = 0; jy <= col; jy++) 45 { 46 u0[jy] = u1[jy] = uval(0.0f, jy * wy); 47 u0[row*colPlus + jy] = u1[row*colPlus + jy] = uval(row*hx, jy * wy); 48 } 49 for (int ix = 1; ix < row; ix++) 50 { 51 for (int jy = 1; jy < col; jy++) 52 u0[ix*colPlus + jy] = 0.0f; 53 } 54 55 // 计算 56 timestruct t1, t2; 57 acc_init(acc_device_nvidia); 58 gettime(&t1); 59 #pragma acc data copy(u0[0:(row + 1) * colPlus]) copyin(u1[0:(row + 1) * colPlus]) // 循环外侧添加 data 构件,跨迭代(内核)构造数据空间 60 { 61 for (int iter = 0; iter < maxIter; iter++) 62 { 63 #pragma acc kernels present(u0[0:((row + 1) * colPlus)], u1[0:((row + 1) * colPlus)]) // 每次调用内核时声明 u0 和 u1 已经存在,不要再拷贝 64 { 65 #pragma acc loop independent 66 for (int ix = 1; ix < row; ix++) 67 { 68 #pragma acc loop independent 69 for (int jy = 1; jy < col; jy++) 70 { 71 u1[ix*colPlus + jy] = (c1*fij + wy2 * (u0[(ix - 1)*colPlus + jy] + u0[(ix + 1)*colPlus + jy]) + 72 hx2 * (u0[ix*colPlus + jy - 1] + u0[ix*colPlus + jy + 1])) * c2; 73 } 74 } 75 } 76 utemp = u0, u0 = u1, u1 = utemp; 77 } 78 } 79 gettime(&t2); 80 81 long long timeElapse = usec(t1, t2); 82 #if defined(_WIN32) || defined(_WIN64) 83 printf(" Elapsed time: %13ld ms. ", timeElapse); 84 #else 85 printf(" Elapsed time: %13ld us. ", timeElapse); 86 #endif 87 free(u0); 88 free(u1); 89 acc_shutdown(acc_device_nvidia); 90 //getchar(); 91 return 0; 92 }

● 输出结果,win10 中运行结果,关闭 PGI_ACC_NOTIFY 后可以达到 67 ms

D:CodeOpenACC>pgcc main.c -o main.exe -c99 -Minfo -acc main: 51, Memory zero idiom, loop replaced by call to __c_mzero4 59, Generating copy(u0[:colPlus*(row+1)]) Generating copyin(u1[:colPlus*(row+1)]) 61, Generating present(u1[:colPlus*(row+1)],u0[:colPlus*(row+1)]) 66, Loop is parallelizable 69, Loop is parallelizable Generating Tesla code 66, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */ 69, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */ 69, FMA (fused multiply-add) instruction(s) generated uval: 21, FMA (fused multiply-add) instruction(s) generated D:CodeOpenACC>main.exe launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 ... launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 Elapsed time: 346 ms.

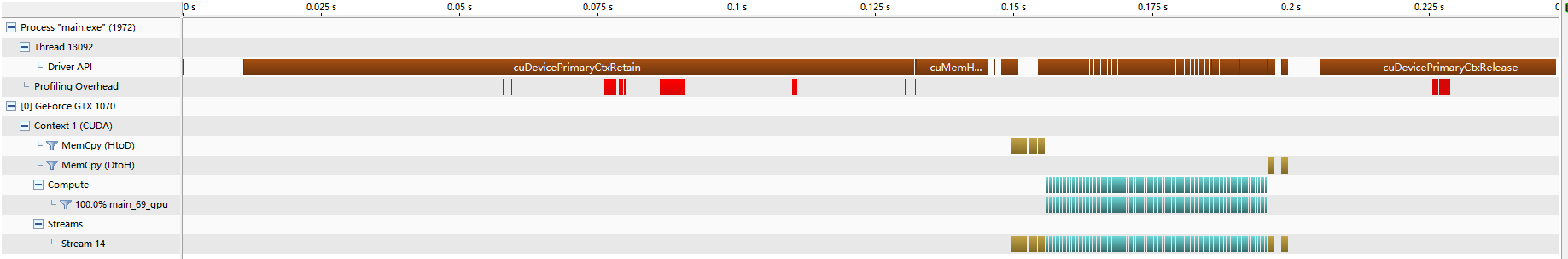

● nvvp 结果,可见大部分时间都花在了初始化设备上,计算用时已经比较少了,拷贝用时更少,只有开头和结尾有一点

● 输出结果,Ubuntu 中运行结果,含开启 PGI_ACC_TIME 的数据

cuan@CUAN:~$ pgcc data.c -o data.exe -c99 -Minfo -acc main: 51, Memory zero idiom, loop replaced by call to __c_mzero4 59, Generating copy(u0[:colPlus*(row+1)]) Generating copyin(u1[:colPlus*(row+1)]) 61, Generating present(utemp[:],u1[:colPlus*(row+1)],u0[:colPlus*(row+1)]) FMA (fused multiply-add) instruction(s) generated 66, Loop is parallelizable 69, Loop is parallelizable Generating Tesla code 66, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */ 69, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */ uval: 22, FMA (fused multiply-add) instruction(s) generated cuan@CUAN:~$ ./data.exe launch CUDA kernel file=/home/cuan/data.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 ... launch CUDA kernel file=/home/cuan/data.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 Elapsed time: 64828 us. Accelerator Kernel Timing data /home/cuan/data.c main NVIDIA devicenum=0 time(us): 41,745 59: data region reached 2 times 59: data copyin transfers: 4 device time(us): total=5,188 max=1,308 min=1,287 avg=1,297 79: data copyout transfers: 3 device time(us): total=2,598 max=1,308 min=11 avg=866 61: data region reached 200 times 63: compute region reached 100 times 69: kernel launched 100 times grid: [32x1024] block: [32x4] device time(us): total=33,959 max=358 min=336 avg=339 elapsed time(us): total=37,984 max=1,003 min=349 avg=379

● 将 tempp 放到了更里一层循环,报运行时错误 715 或 719,参考【https://stackoverflow.com/questions/41366915/openacc-create-data-while-running-inside-a-kernels】,大意是关于内存泄露

D:CodeOpenACC>main.exe

launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main

line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4

launch CUDA kernel file=D:CodeOpenACCOpenACCProjectOpenACCProjectmain.c function=main

line=74 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=1 grid=1 block=1

call to cuStreamSynchronize returned error 715: Illegal instruction

call to cuMemFreeHost returned error 715: Illegal instruction

D:CodeOpenACC>main.exe launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=74 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=1 grid=1 block=1 Failing in Thread:1 call to cuStreamSynchronize returned error 719: Launch failed (often invalid pointer dereference) Failing in Thread:1 call to cuMemFreeHost returned error 719: Launch failed (often invalid pointer dereference)

● 尝试 在 data 构件中添加 create(utemp) 或在交换指针的位置临时定义 float *utemp 都会报运行时错误 700

D:CodeOpenACC>main.exe launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 launch CUDA kernel file=D:CodeOpenACCmain.c function=main line=74 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=1 grid=1 block=1 Failing in Thread:1 call to cuStreamSynchronize returned error 700: Illegal address during kernel execution Failing in Thread:1 call to cuMemFreeHost returned error 700: Illegal address during kernel execution

▶ 恢复错误控制,添加 reduction 导语用来计量改进量

1 #include <stdio.h> 2 #include <stdlib.h> 3 #include <math.h> 4 #include <time.h> 5 #include <openacc.h> 6 7 #if defined(_WIN32) || defined(_WIN64) 8 #include <C:Program FilesPGIwin6419.4includewrapsys imeb.h> 9 #define timestruct clock_t 10 #define gettime(a) (*(a) = clock()) 11 #define usec(t1,t2) (t2 - t1) 12 #else 13 #include <sys/time.h> 14 #define gettime(a) gettimeofday(a, NULL) 15 #define usec(t1,t2) (((t2).tv_sec - (t1).tv_sec) * 1000000 + (t2).tv_usec - (t1).tv_usec) 16 typedef struct timeval timestruct; 17 18 #define max(x,y) ((x) > (y) ? (x) : (y)) 19 #endif 20 21 inline float uval(float x, float y) 22 { 23 return x * x + y * y; 24 } 25 26 int main() 27 { 28 const int row = 8191, col = 1023; 29 const float height = 1.0, width = 2.0; 30 const float hx = height / row, wy = width / col; 31 const float fij = -4.0f; 32 const float hx2 = hx * hx, wy2 = wy * wy, c1 = hx2 * wy2, c2 = 1.0f / (2.0 * (hx2 + wy2)), errControl = 0.0f; 33 const int maxIter = 100; 34 const int colPlus = col + 1; 35 36 float *restrict u0 = (float *)malloc(sizeof(float)*(row + 1)*colPlus); 37 float *restrict u1 = (float *)malloc(sizeof(float)*(row + 1)*colPlus); 38 float *utemp = NULL; 39 40 // 初始化 41 for (int ix = 0; ix <= row; ix++) 42 { 43 u0[ix*colPlus + 0] = u1[ix*colPlus + 0] = uval(ix * hx, 0.0f); 44 u0[ix*colPlus + col] = u1[ix*colPlus + col] = uval(ix*hx, col * wy); 45 } 46 for (int jy = 0; jy <= col; jy++) 47 { 48 u0[jy] = u1[jy] = uval(0.0f, jy * wy); 49 u0[row*colPlus + jy] = u1[row*colPlus + jy] = uval(row*hx, jy * wy); 50 } 51 for (int ix = 1; ix < row; ix++) 52 { 53 for (int jy = 1; jy < col; jy++) 54 u0[ix*colPlus + jy] = 0.0f; 55 } 56 57 // 计算 58 timestruct t1, t2; 59 acc_init(acc_device_nvidia); 60 gettime(&t1); 61 #pragma acc data copy(u0[0:(row + 1) * colPlus]) copyin(u1[0:(row + 1) * colPlus]) 62 { 63 for (int iter = 1; iter < maxIter; iter++) 64 { 65 float uerr = 0.0f; // uerr 要放到前面,否则离开代码块数据未定义,书上这里是错的 66 #pragma acc kernels present(u0[0:(row + 1) * colPlus]) present(u1[0:(row + 1) * colPlus]) 67 { 68 #pragma acc loop independent reduction(max:uerr) // 添加 reduction 语句统计改进量 69 for (int ix = 1; ix < row; ix++) 70 { 71 for (int jy = 1; jy < col; jy++) 72 { 73 u1[ix*colPlus + jy] = (c1*fij + wy2 * (u0[(ix - 1)*colPlus + jy] + u0[(ix + 1)*colPlus + jy]) + 74 hx2 * (u0[ix*colPlus + jy - 1] + u0[ix*colPlus + jy + 1])) * c2; 75 uerr = max(uerr, fabs(u0[ix * colPlus + jy] - u1[ix * colPlus + jy])); 76 } 77 } 78 } 79 printf(" iter = %d, uerr = %e ", iter, uerr); 80 if (uerr < errControl) 81 break; 82 utemp = u0, u0 = u1, u1 = utemp; 83 } 84 } 85 gettime(&t2); 86 87 long long timeElapse = usec(t1, t2); 88 #if defined(_WIN32) || defined(_WIN64) 89 printf(" Elapsed time: %13ld ms. ", timeElapse); 90 #else 91 printf(" Elapsed time: %13ld us. ", timeElapse); 92 #endif 93 free(u0); 94 free(u1); 95 acc_shutdown(acc_device_nvidia); 96 //getchar(); 97 return 0; 98 }

● 输出结果,win10 相比没有错误控制的情形整整慢了一倍,nvvp 没有明显变化,不放上来了

D:CodeOpenACC>pgcc main.c -o main.exe -c99 -Minfo -acc main: 53, Memory zero idiom, loop replaced by call to __c_mzero4 61, Generating copy(u0[:colPlus*(row+1)]) Generating copyin(u1[:colPlus*(row+1)]) 66, Generating present(u0[:colPlus*(row+1)]) Generating implicit copy(uerr) Generating present(u1[:colPlus*(row+1)]) 69, Loop is parallelizable 71, Loop is parallelizable Generating Tesla code 69, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */ 71, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */ Generating reduction(max:uerr) // 多了 reduction 的信息 71, FMA (fused multiply-add) instruction(s) generated uval: 23, FMA (fused multiply-add) instruction(s) generated D:CodeOpenACC>main.exe iter = 1, uerr = 2.496107e+00 ... iter = 99, uerr = 2.202189e-02 Elapsed time: 125 ms.

● 输出结果,Unubtu

cuan@CUAN:~$ pgcc data+reduction.c -o data+reduction.exe -c99 -Minfo -acc main: 53, Memory zero idiom, loop replaced by call to __c_mzero4 61, Generating copyin(u1[:colPlus*(row+1)]) Generating copy(u0[:colPlus*(row+1)]) 63, FMA (fused multiply-add) instruction(s) generated 66, Generating present(u0[:colPlus*(row+1)]) Generating implicit copy(uerr) Generating present(u1[:colPlus*(row+1)]) 69, Loop is parallelizable 71, Loop is parallelizable Generating Tesla code 69, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */ 71, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */ Generating reduction(max:uerr) uval: 24, FMA (fused multiply-add) instruction(s) generated cuan@CUAN:~$ ./data+reduction.exe launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 shared memory=1024 launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=256 grid=1 block=256 shared memory=1024 iter = 1, uerr = 2.496107e+00 launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 shared memory=1024 launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=256 grid=1 block=256 shared memory=1024 ... iter = 98, uerr = 2.214956e-02 launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4 shared memory=1024 launch CUDA kernel file=/home/cuan/data+reduction.c function=main line=71 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=256 grid=1 block=256 shared memory=1024 iter = 99, uerr = 2.202189e-02 Elapsed time: 101391 us. Accelerator Kernel Timing data /home/cuan/data+reduction.c main NVIDIA devicenum=0 time(us): 73,976 61: data region reached 2 times 61: data copyin transfers: 4 device time(us): total=5,223 max=1,328 min=1,287 avg=1,305 85: data copyout transfers: 3 device time(us): total=2,562 max=1,280 min=4 avg=854 66: compute region reached 99 times 71: kernel launched 99 times grid: [32x1024] block: [32x4] device time(us): total=61,336 max=622 min=618 avg=619 elapsed time(us): total=63,876 max=1,645 min=630 avg=645 71: reduction kernel launched 99 times grid: [1] block: [256] device time(us): total=4,161 max=48 min=41 avg=42 elapsed time(us): total=7,376 max=1,082 min=51 avg=74 66: data region reached 396 times 66: data copyin transfers: 99 device time(us): total=297 max=18 min=2 avg=3 79: data copyout transfers: 99 device time(us): total=397 max=14 min=3 avg=4

▶ 尝试在计算的循环导语上加上 collapse(2) 子句,意思是合并两个较小的循环为一个较大的循环。发现效果不显著,不放上来了