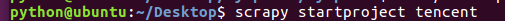

1. Create a new Scrapy project named tencent (typically with `scrapy startproject tencent`)

2. In items.py, define the fields to scrape

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class TencentItem(scrapy.Item):
        # define the fields for your item here like:
        # Position name
        position_name = scrapy.Field()
        # Link to the detail page
        position_link = scrapy.Field()
        # Position category
        position_type = scrapy.Field()
        # Number of openings
        people_number = scrapy.Field()
        # Work location
        work_location = scrapy.Field()
        # Publication date
        publish_time = scrapy.Field()
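A `scrapy.Item` only accepts keys that were declared as fields, and assigning to any other key raises a `KeyError`. The sketch below imitates just that one behaviour in plain Python so it can be tried without Scrapy installed. `StrictItem` and `TencentItemSketch` are hypothetical stand-ins, not Scrapy classes, and the sample value is made up.

```python
class StrictItem(dict):
    """Plain-Python stand-in (NOT scrapy.Item) that only accepts declared keys."""

    fields = ()

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError("%s does not support field: %s"
                           % (type(self).__name__, key))
        dict.__setitem__(self, key, value)


class TencentItemSketch(StrictItem):
    # Same field names as the TencentItem class in items.py
    fields = ("position_name", "position_link", "position_type",
              "people_number", "work_location", "publish_time")


item = TencentItemSketch()
item["position_name"] = "Backend Engineer"  # declared field: accepted

try:
    item["positionname"] = "Backend Engineer"  # underscore missing: rejected
    rejected = False
except KeyError as exc:
    rejected = True
    print("rejected:", exc)
```

The practical consequence is that the keys used in the spider have to be spelled exactly like the field names declared in items.py.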

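3. In the same terminal, create a spider named tencent_spider and specify the domain it is allowed to crawl (typically with `scrapy genspider tencent_spider "tencent.com"`)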

4. Open tencent_spider.py. The generated template is already in place, so it only needs a few modifications

    # -*- coding: utf-8 -*-
    import scrapy
    from tencent.items import TencentItem


    class TencentSpiderSpider(scrapy.Spider):
        name = "tencent_spider"
        allowed_domains = ["tencent.com"]

        url = "http://hr.tencent.com/position.php?&start="
        offset = 0

        start_urls = [
            url + str(offset),
        ]

        def parse(self, response):
            # Each job posting is a table row with class "even" or "odd"
            for each in response.xpath("//tr[@class='even'] | //tr[@class='odd']"):
                # Create the item; the keys must match the fields declared in items.py
                item = TencentItem()
                # extract_first(default="") avoids an IndexError when a cell is empty
                # Position name
                item['position_name'] = each.xpath("./td[1]/a/text()").extract_first(default="")
                # Link to the detail page
                item['position_link'] = each.xpath("./td[1]/a/@href").extract_first(default="")
                # Position category
                item['position_type'] = each.xpath("./td[2]/text()").extract_first(default="")
                # Number of openings
                item['people_number'] = each.xpath("./td[3]/text()").extract_first(default="")
                # Work location
                item['work_location'] = each.xpath("./td[4]/text()").extract_first(default="")
                # Publication date
                item['publish_time'] = each.xpath("./td[5]/text()").extract_first(default="")

                yield item

            if self.offset < 1000:
                self.offset += 10
                # After a page has been processed, request the next one:
                # increase self.offset by 10, build the new URL, and let
                # self.parse handle the response
                yield scrapy.Request(self.url + str(self.offset), callback=self.parse)
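The paging logic in `parse` can be checked on its own, without Scrapy. The sketch below is a plain-Python illustration that lists the URLs the spider would visit, assuming the next-page request is only issued while the offset is still below 1000. The helper name `page_urls` and its parameters are hypothetical and not part of the project.

```python
BASE_URL = "http://hr.tencent.com/position.php?&start="


def page_urls(base_url=BASE_URL, step=10, limit=1000):
    """Yield the start URL, then one URL per page while offset < limit."""
    offset = 0
    yield base_url + str(offset)
    while offset < limit:
        offset += step
        yield base_url + str(offset)


urls = list(page_urls())
print(len(urls), "pages")
print("first:", urls[0])
print("last: ", urls[-1])
```

Listing the URLs this way makes it easy to confirm where the crawl starts and stops before any request is sent.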

5. In pipelines.py, write the items to a file

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import json

    class TencentPipeline(object):
        def open_spider(self, spider):
            self.filename = open("tencent.json", "w")

        def process_item(self, item, spider):
            text = json.dumps(dict(item), ensure_ascii = False) + " "
            self.filename.write(text.encode("utf-8")  # <- closing parenthesis missing (cause of the error in step 7)
            return item

        def close_spider(self, spider):
            self.filename.close()

6. In settings.py, configure the request headers and the item pipelines

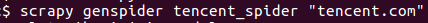
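For readers who cannot see the screenshot, the fragment below is a rough sketch of what such a settings.py section might look like. The setting names are standard Scrapy settings and the pipeline path follows from the project and class names used above, but the header values and the priority 300 are assumptions rather than the values from the screenshot. `DOWNLOAD_DELAY = 2` is taken from the "Overridden settings" line of the log in step 7.

```python
# settings.py (fragment)

# Wait 2 seconds between requests (value shown in the run log)
DOWNLOAD_DELAY = 2

# Default headers sent with every request (hypothetical example values)
DEFAULT_REQUEST_HEADERS = {
    "User-Agent": "Mozilla/5.0 (compatible; example-crawler)",
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
}

# Enable the pipeline from pipelines.py; lower numbers run earlier (0-1000)
ITEM_PIPELINES = {
    "tencent.pipelines.TencentPipeline": 300,
}
```

Scrapy imports every class listed in `ITEM_PIPELINES` at startup, which is why a syntax error in pipelines.py stops the crawl before any page is requested.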

7. Run the spider from the command line with `scrapy crawl tencent_spider`

The following error appeared... not solved yet...

    2017-10-03 20:03:20 [scrapy] INFO: Scrapy 1.1.1 started (bot: tencent)
    2017-10-03 20:03:20 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'tencent.spiders', 'SPIDER_MODULES': ['tencent.spiders'], 'DOWNLOAD_DELAY': 2, 'BOT_NAME': 'tencent'}
    2017-10-03 20:03:20 [scrapy] INFO: Enabled extensions:
    ['scrapy.extensions.logstats.LogStats',
     'scrapy.extensions.telnet.TelnetConsole',
     'scrapy.extensions.corestats.CoreStats']
    2017-10-03 20:03:20 [scrapy] INFO: Enabled downloader middlewares:
    ['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
     'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
     'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
     'scrapy.downloadermiddlewares.retry.RetryMiddleware',
     'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
     'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
     'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
     'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
     'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
     'scrapy.downloadermiddlewares.chunked.ChunkedTransferMiddleware',
     'scrapy.downloadermiddlewares.stats.DownloaderStats']
    2017-10-03 20:03:20 [scrapy] INFO: Enabled spider middlewares:
    ['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
     'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
     'scrapy.spidermiddlewares.referer.RefererMiddleware',
     'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
     'scrapy.spidermiddlewares.depth.DepthMiddleware']
    Unhandled error in Deferred:
    2017-10-03 20:03:20 [twisted] CRITICAL: Unhandled error in Deferred:

    Traceback (most recent call last):
      File "/usr/local/lib/python2.7/dist-packages/scrapy/commands/crawl.py", line 57, in run
        self.crawler_process.crawl(spname, **opts.spargs)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 163, in crawl
        return self._crawl(crawler, *args, **kwargs)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 167, in _crawl
        d = crawler.crawl(*args, **kwargs)
      File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 1274, in unwindGenerator
        return _inlineCallbacks(None, gen, Deferred())
    --- <exception caught here> ---
      File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 1128, in _inlineCallbacks
        result = g.send(result)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 90, in crawl
        six.reraise(*exc_info)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 72, in crawl
        self.engine = self._create_engine()
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 97, in _create_engine
        return ExecutionEngine(self, lambda _: self.stop())
      File "/usr/local/lib/python2.7/dist-packages/scrapy/core/engine.py", line 69, in __init__
        self.scraper = Scraper(crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/core/scraper.py", line 71, in __init__
        self.itemproc = itemproc_cls.from_crawler(crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/middleware.py", line 58, in from_crawler
        return cls.from_settings(crawler.settings, crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/middleware.py", line 34, in from_settings
        mwcls = load_object(clspath)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/utils/misc.py", line 44, in load_object
        mod = import_module(module)
      File "/usr/lib/python2.7/importlib/__init__.py", line 37, in import_module
        __import__(name)
    exceptions.SyntaxError: invalid syntax (pipelines.py, line 17)
    2017-10-03 20:03:20 [twisted] CRITICAL:
    Traceback (most recent call last):
      File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 1128, in _inlineCallbacks
        result = g.send(result)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 90, in crawl
        six.reraise(*exc_info)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 72, in crawl
        self.engine = self._create_engine()
      File "/usr/local/lib/python2.7/dist-packages/scrapy/crawler.py", line 97, in _create_engine
        return ExecutionEngine(self, lambda _: self.stop())
      File "/usr/local/lib/python2.7/dist-packages/scrapy/core/engine.py", line 69, in __init__
        self.scraper = Scraper(crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/core/scraper.py", line 71, in __init__
        self.itemproc = itemproc_cls.from_crawler(crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/middleware.py", line 58, in from_crawler
        return cls.from_settings(crawler.settings, crawler)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/middleware.py", line 34, in from_settings
        mwcls = load_object(clspath)
      File "/usr/local/lib/python2.7/dist-packages/scrapy/utils/misc.py", line 44, in load_object
        mod = import_module(module)
      File "/usr/lib/python2.7/importlib/__init__.py", line 37, in import_module
        __import__(name)
      File "/home/python/Desktop/tencent/tencent/pipelines.py", line 17
        return item
             ^
    SyntaxError: invalid syntax
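A `SyntaxError` like this one is raised when the module is imported, so it can be caught before starting a crawl by compiling the source first. The sketch below uses the built-in `compile()` on two made-up snippets. The helper `syntax_error_of` and both sample strings are hypothetical and only illustrate the check.

```python
def syntax_error_of(source, filename="<sketch>"):
    """Return the SyntaxError raised while compiling source, or None if it compiles."""
    try:
        compile(source, filename, "exec")
    except SyntaxError as exc:
        return exc
    return None


# A bracket is left open on the first line of this sample
BROKEN = "total = sum([1, 2, 3]\nprint(total)\n"
CLEAN = "total = sum([1, 2, 3])\nprint(total)\n"

broken_error = syntax_error_of(BROKEN)
clean_error = syntax_error_of(CLEAN)

print("broken:", broken_error)
print("clean: ", clean_error)
```

When a bracket is left open, older interpreters such as Python 2.7 tend to report the line after the real mistake, so it is worth reading the line above the one named in the message as well. For a file on disk, `python -m py_compile path/to/file.py` performs the same kind of check.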

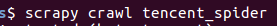

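I was simply too careless: in the pipelines.py from step 5, the `self.filename.write(...)` call was missing its closing parenthesis, which is the line just above the `return item` that the traceback points to... Thanks to sun shine for the help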
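For reference, here is a minimal sketch of the pipeline with the write call closed properly. It is a variant rather than the exact file from step 5: it opens the file with `io.open(..., encoding="utf-8")` so that it runs on both Python 2.7 and Python 3, writes one JSON object per line (the `"\n"` separator is an assumption), and takes an optional `path` argument that is added here only to make the class easy to try outside Scrapy.

```python
import io
import json


class TencentPipeline(object):
    def __init__(self, path="tencent.json"):
        # "path" is a hypothetical convenience; Scrapy uses the default
        self.path = path

    def open_spider(self, spider):
        # io.open takes care of the UTF-8 encoding on Python 2 and 3
        self.file = io.open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        text = json.dumps(dict(item), ensure_ascii=False) + "\n"
        if isinstance(text, bytes):
            # Python 2 may return a byte string when all values are ASCII
            text = text.decode("utf-8")
        self.file.write(text)
        return item

    def close_spider(self, spider):
        self.file.close()
```

Because the class has no Scrapy imports, it can be exercised directly by calling `open_spider`, `process_item` with a plain dict, and `close_spider` in turn.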

thank you !!!
