Deploying Elasticsearch with Helm on hostPath storage

Environment summary:

Nodes: 3 (91/92/93)

k8s: v1.20.5

helm: v3.2.0

elasticsearch: 6.8.18

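1. Create the namespace and prepare prerequisites

Reference: Quickly Building a Highly Available Kubernetes Cluster, Part 7: ELK Stack Deployment https://www.longger.net/article/33179.html

# Create the elk namespace
kubectl create ns elk

# Pull the ES image; it is needed below to generate the certificates
docker pull elasticsearch:6.8.18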

## Generate the certificates

# Run a container to generate the certificates
docker run --name elastic-charts-certs -i -w /app elasticsearch:6.8.18 /bin/sh -c \
  "elasticsearch-certutil ca --out /app/elastic-stack-ca.p12 --pass '' && \
   elasticsearch-certutil cert --name security-master --dns security-master \
     --ca /app/elastic-stack-ca.p12 --pass '' --ca-pass '' --out /app/elastic-certificates.p12"

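# Copy the generated certificate out of the container into the current directory
docker cp elastic-charts-certs:/app/elastic-certificates.p12 ./

# Remove the container
docker rm -f elastic-charts-certs

# Extract the contents of the PKCS#12 file and write them to a PEM file
openssl pkcs12 -nodes -passin pass:'' -in elastic-certificates.p12 -out elastic-certificate.pem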

## Add the certificates

kubectl create secret -n elk generic elastic-certificates --from-file=elastic-certificates.p12
kubectl -n elk create secret generic elastic-certificate-pem --from-file=elastic-certificate.pem

# Set the cluster username and password; changing the username is not recommended
kubectl create secret -n elk generic elastic-credentials \
  --from-literal=username=elastic --from-literal=password=elastic123456

# List the certificate and credential secrets that were created
kubectl get secret -n elk
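Secret values are stored base64-encoded, so listing the secrets does not show whether the password was stored as intended. The following is a minimal sketch of a helper for decoding one value; the function name `decode_secret_value` is made up for illustration, and the `kubectl` call in the comment assumes the `elastic-credentials` secret created above.

```shell
#!/usr/bin/env bash
# Decode a base64-encoded Secret value read from stdin.
# (decode_secret_value is a hypothetical helper name, not part of kubectl.)
decode_secret_value() {
  base64 --decode
}

# Usage against the cluster (requires kubectl access to the elk namespace):
#   kubectl get secret -n elk elastic-credentials \
#     -o jsonpath='{.data.password}' | decode_secret_value
```

The cluster call is left as a comment so the helper can be sourced and tried on any machine.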

2. Add and update the Helm repo

helm repo add elastic https://helm.elastic.co

helm repo update

3. Pre-pull the images

# elasticsearch

docker pull elasticsearch:6.8.18

docker tag elasticsearch:6.8.18 docker.elastic.co/elasticsearch/elasticsearch:6.8.18

# kibana

docker pull kibana:6.8.18

docker tag kibana:6.8.18 docker.elastic.co/kibana/kibana:6.8.18

4. Use a host path as the local-storage volume

Create the PVs and the StorageClass first; the master and data nodes each need their own PV.

Reference: PV, PVC, and StorageClass explained https://www.cnblogs.com/rexcheny/p/10925464.html

PV for the master node

# local-pv-master1.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-master-pv1 # with several master nodes, create one PV per master
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce # read-write by a single node
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local: # local volume type
    path: /mnt/data/master/vol01 # actual path on the node; adjust as needed
  nodeAffinity: # pins the PV to a node
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node1 # node1 is used here; the path above must exist on it

PV for the data node

# local-pv-data1.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-data-pv1 # with several data nodes, create one PV per data node
spec:
  capacity:
    storage: 100Gi # size according to your needs; 100G in this test environment
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce # read-write by a single node
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage # must match the StorageClass name
  local: # local volume type
    path: /mnt/data # actual path on the node
  nodeAffinity: # pins the PV to a node
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname # see: kubectl get no --show-labels
              operator: In
              values:
                - node1 # node1 is used here; the path above must exist on it

StorageClass

# local-storageclass.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage # must match storageClassName in the PVs
provisioner: kubernetes.io/no-provisioner # no dynamic provisioning; the PVs are created by hand
volumeBindingMode: WaitForFirstConsumer

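Apply the PVs and the StorageClass

# PVs and StorageClasses are cluster-scoped, so no namespace flag is needed
kubectl apply -f local-pv-master1.yaml # repeat for local-pv-master2.yaml / local-pv-master3.yaml if they exist
kubectl apply -f local-pv-data1.yaml # repeat for local-pv-data2.yaml / local-pv-data3.yaml if they exist
kubectl apply -f local-storageclass.yaml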
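Each master node needs its own PV manifest, which is tedious to write by hand. The following sketch generates the per-node files from one template. The function name `gen_master_pv` and the node names `node1` to `node3` in the usage comment are assumptions for illustration; the manifest fields mirror local-pv-master1.yaml above.

```shell
#!/usr/bin/env bash
# Write one local PV manifest for a master node.
# Arguments: <index> <node hostname> <output directory>
# (gen_master_pv is a hypothetical helper; adjust path and size to your setup.)
gen_master_pv() {
  local idx="$1" node="$2" outdir="$3"
  cat > "${outdir}/local-pv-master${idx}.yaml" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-master-pv${idx}
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /mnt/data/master/vol01
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
}

# Usage (node names are placeholders; take the real ones from `kubectl get no`):
#   for i in 1 2 3; do gen_master_pv "$i" "node$i" .; done
#   for f in local-pv-master*.yaml; do kubectl apply -f "$f"; done
```

The same approach should work for the data PVs by changing the name prefix, size, and path.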

5. Prepare the Helm values YAML for each node group

Reference: Installing ElasticSearch & Kibana 7.x with Helm http://www.mydlq.club/article/13

es-master

---
# Cluster name
clusterName: "helm"
# Node group
nodeGroup: "master"
# Master service address; not needed here because this is the master group
masterService: ""
# Node roles
roles:
  master: "true"
  ingest: "false"
  data: "false"
# Replica count; outside of testing, master nodes should be odd in number and >= 3 (n >= 3 and n mod 2 == 1)
replicas: 1 # set as needed; 1 for this test, the official default is 3
minimumMasterNodes: 1
esMajorVersion: ""
esConfig:
  # elasticsearch.yml: switches for transport security and monitoring, plus the certificate settings
  elasticsearch.yml: |
    xpack:
      security:
        enabled: true
        transport:
          ssl:
            enabled: true
            verification_mode: certificate
            keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
            truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
      monitoring:
        collection:
          enabled: true
# Expose the elastic account credentials of the ES cluster as environment variables
extraEnvs:
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
envFrom: []
# Certificate mount location
secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
# Image source; point this at a private registry (e.g. Harbor) if you use a customized image
image: "docker.elastic.co/elasticsearch/elasticsearch"
imageTag: "6.8.18"
imagePullPolicy: "IfNotPresent"
imagePullSecrets:
  - name: registry-secret
podAnnotations: {}
labels: {}
# JVM heap for ES
esJavaOpts: "-Xmx512m -Xms512m" # do not set this too high for your machine; if the pod stays unready, try 512m
# Resources for ES
resources:
  requests:
    cpu: "500m"
    memory: "1Gi"
  limits:
    cpu: "500m"
    memory: "1Gi"
initResources: {}
sidecarResources: {}
# Bind address for ES; the service may fail to start without it
networkHost: "0.0.0.0"
# ES storage
volumeClaimTemplate:
  storageClassName: "local-storage" # must match local-storageclass.yaml
  accessModes: [ "ReadWriteOnce" ]
  resources:
    requests:
      storage: 5Gi # must not exceed the capacity of the PV
# PVC switch
persistence:
  enabled: true
  labels:
    enabled: false
  annotations: {}
# rbac: not yet studied in detail
rbac:
  create: false
  serviceAccountAnnotations: {}
  serviceAccountName: ""
# Node selection for the pods
nodeSelector:
  kubernetes.io/hostname: node1
# Tolerate taints; needed when the K8S cluster has few nodes and pods must also run on the K8S master nodes
tolerations:
  - operator: "Exists"
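The comment about an odd master count of at least 3 relates to quorum. In Elasticsearch 6.x with Zen discovery the recommended quorum is (master-eligible nodes / 2) + 1, and, as far as I understand the chart, `minimumMasterNodes` feeds `discovery.zen.minimum_master_nodes` for 6.x clusters. A small sketch of that arithmetic, with a made-up function name:

```shell
#!/usr/bin/env bash
# Quorum for Zen discovery in Elasticsearch 6.x:
# (number of master-eligible nodes / 2) + 1, using integer division.
# (min_master_nodes is a hypothetical helper name.)
min_master_nodes() {
  echo $(( $1 / 2 + 1 ))
}

min_master_nodes 1   # prints 1, the value used in this test setup
min_master_nodes 3   # prints 2, the value for three master replicas
```

With an even count such as 4 the quorum is 3, so the cluster tolerates only one lost master, the same as with 3 nodes. That is why odd counts are preferred.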

es-data

---
# Cluster name; must match the cluster name of the master group
clusterName: "helm"
# Node group
nodeGroup: "data"
# Master service name
masterService: "helm-master"
# Node roles; a role set to "true" is enabled. Data nodes do not need the master role
roles:
  master: "false"
  ingest: "true"
  data: "true"
# Replica count
replicas: 1 # set as needed; 1 for this test, the official default is 3
esMajorVersion: "6"
esConfig:
  # elasticsearch.yml: same as the master group
  elasticsearch.yml: |
    xpack:
      security:
        enabled: true
        transport:
          ssl:
            enabled: true
            verification_mode: certificate
            keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
            truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
      monitoring:
        collection:
          enabled: true
extraEnvs:
  # Same as the master group
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
envFrom: []
secretMounts:
  # Certificate mount; same as the master group
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
image: "docker.elastic.co/elasticsearch/elasticsearch"
imageTag: "6.8.18"
imagePullPolicy: "IfNotPresent"
imagePullSecrets:
  - name: registry-secret
podAnnotations: {}
labels: {}
# JVM heap for the ES node; increase it according to your workload
esJavaOpts: "-Xmx512m -Xms512m"
# Resources for ES
resources:
  requests:
    cpu: "1000m"
    memory: "1Gi"
  limits:
    cpu: "1000m"
    memory: "1Gi"
initResources: {}
sidecarResources: {}
# Bind address for ES; the service may fail to start without it
networkHost: "0.0.0.0"
# ES data storage
volumeClaimTemplate:
  storageClassName: "local-storage"
  accessModes: [ "ReadWriteOnce" ]
  resources:
    requests:
      storage: 10Gi
# PVC switch
persistence:
  enabled: true
  labels:
    enabled: false
  annotations: {}
# rbac: not yet studied in detail
rbac:
  create: false
  serviceAccountAnnotations: {}
  serviceAccountName: ""
# Node selection for the pods
# nodeSelector:
#   elk-rolse: data
# Tolerate taints; needed when the K8S cluster has few nodes and pods must also run on the K8S master nodes
tolerations:
  - operator: "Exists"
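The value `masterService: "helm-master"` follows from how the names in this post are composed: cluster name, a hyphen, then the node group (the pods later appear as helm-master-0, helm-data-0, helm-client-0). A tiny sketch of that naming rule, using a hypothetical helper name and assuming the chart's default naming is not overridden:

```shell
#!/usr/bin/env bash
# Compose the service name from clusterName and nodeGroup,
# matching the names that appear in this post (e.g. helm-master).
# (es_service_name is a hypothetical helper; name overrides are not handled.)
es_service_name() {
  printf '%s-%s\n' "$1" "$2"
}

es_service_name helm master   # prints helm-master
es_service_name helm client   # prints helm-client
```

If `clusterName` or `nodeGroup` changes, `masterService` in the data and client values has to change with it.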

es-client

# ============ Cluster settings ============
## Cluster name
clusterName: "helm"
## Node group name
nodeGroup: "client"
## Roles
roles:
  master: "false"
  ingest: "false"
  data: "false"
# Master service name
masterService: "helm-master"
# ============ Image settings ============
## Image and image version
image: "docker.elastic.co/elasticsearch/elasticsearch"
imageTag: "6.8.18"
## Replica count
replicas: 1
# ============ Resource settings ============
## JVM options
esJavaOpts: "-Xmx512m -Xms512m"
## Pod resources (set these higher in production)
resources:
  requests:
    cpu: "1000m"
    memory: "2Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"
## Persistent volume settings
persistence:
  enabled: false
# ============ Security settings ============
## Protocol: http or https
protocol: http
## Certificate mount; mounts the certificate secret created earlier
secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
## Lets you add custom configuration files under /usr/share/elasticsearch/config/, e.g. elasticsearch.yml
## Elasticsearch 6.3 and later bundle X-Pack by default and part of it is free; it is configured here
## The commented-out lines configure HTTPS; enabling them also requires setting the helm value protocol to https
esConfig:
  elasticsearch.yml: |
    xpack.security.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    # xpack.security.http.ssl.enabled: true
    # xpack.security.http.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    # xpack.security.http.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
## Environment variables; pulls in the username/password secret created earlier
extraEnvs:
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials # the secret must exist in the release namespace
        key: username
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
# ============ Service settings ============
service:
  type: NodePort
  nodePort: "30200"
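The `nodePort` value has to fall inside the cluster's NodePort range, which is 30000-32767 unless the API server was started with a different `--service-node-port-range`. A quick sanity check before installing, sketched with a hypothetical function name and assuming the default range:

```shell
#!/usr/bin/env bash
# Succeeds when the argument is an integer inside the default
# Kubernetes NodePort range (30000-32767).
# (valid_node_port is a hypothetical helper; a custom range is not handled.)
valid_node_port() {
  local port="$1"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

valid_node_port 30200 && echo "30200 is usable as a NodePort"
valid_node_port 9200  || echo "9200 is outside the default NodePort range"
```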

6. Install the releases with Helm

Install order: master > data > client

# master nodes
helm install es-m -nelk -f es-master.yaml elastic/elasticsearch --version 6.8.18 --debug

# data nodes
helm install es-d -nelk -f es-data.yaml elastic/elasticsearch --version 6.8.18 --debug

# client nodes
helm install es-c -nelk -f es-client.yaml elastic/elasticsearch --version 6.8.18 --debug

7. Check status and test

# Check the pods
watch kubectl get po -n elk -o wide

---
NAME            READY   STATUS    RESTARTS   AGE    IP             NODE    NOMINATED NODE   READINESS GATES
helm-client-0   1/1     Running   0          6h2m   10.233.96.81   node2   <none>           <none>
helm-data-0     1/1     Running   0          45m    10.233.96.84   node2   <none>           <none>
helm-master-0   1/1     Running   0          45m    10.233.90.89   node1   <none>           <none>
---

# If a pod stays Pending or in another abnormal state, find out why
kubectl describe po helm-data-0 -nelk # -n elk works too

# View the pod logs
kubectl logs helm-master-0 -nelk
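Running pods do not guarantee that the cluster has formed, so it helps to query the `_cluster/health` endpoint through the client service. The sketch below only extracts the `status` field from the JSON response. The function name is made up, and the `curl` call in the comment assumes the NodePort 30200 from the client values, a node IP you fill in, and an `ES_PASSWORD` variable you set yourself.

```shell
#!/usr/bin/env bash
# Extract the "status" field (green / yellow / red) from a
# _cluster/health JSON response read from stdin.
# (parse_health_status is a hypothetical helper; it avoids a jq dependency.)
parse_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage (<node-ip> and ES_PASSWORD are placeholders):
#   curl -s -u "elastic:${ES_PASSWORD}" \
#     "http://<node-ip>:30200/_cluster/health" | parse_health_status
```

With a single data node, a `yellow` status is expected as soon as an index has replicas, because replica shards cannot be allocated to the same node as their primary.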

8. Issues encountered

8.1 PV, PVC, and StorageClass setup

Types of storage volumes:

- nfs

- ceph

- local volume

8.2 Node affinity

Node affinity and anti-affinity

8.3 ES starts but never becomes ready: JVM memory

OpenJDK reports that UseAVX=2 is not supported on this CPU
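For the memory side of this issue, Elastic's general guidance is to give the JVM heap no more than about half of the memory available to the node, which matches the 512m heap with a 1Gi limit used in the values files above. A sketch that derives the `esJavaOpts` string from a container limit in MiB (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Build an esJavaOpts string that gives the JVM heap half of the
# container memory limit. Argument: memory limit in MiB.
# (heap_opts_for_limit_mi is a hypothetical helper name.)
heap_opts_for_limit_mi() {
  local half=$(( $1 / 2 ))
  echo "-Xmx${half}m -Xms${half}m"
}

heap_opts_for_limit_mi 1024   # prints -Xmx512m -Xms512m
heap_opts_for_limit_mi 2048   # prints -Xmx1024m -Xms1024m
```

If the heap is set close to or above the container limit, the pod is likely to be OOM-killed or to stay unready.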

9. Deploy Kibana

Create kibana-values.yaml

# ============ Image settings ============
## Image and image version
image: "docker.elastic.co/kibana/kibana"
imageTag: "6.8.18"
## ElasticSearch address
elasticsearchHosts: "http://helm-client:9200" # must match the cluster and node group names
# ============ Environment variables ============
## Pulls in the username/password secret created earlier
extraEnvs:
  - name: 'ELASTICSEARCH_USERNAME'
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username
  - name: 'ELASTICSEARCH_PASSWORD'
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
# ============ Resource settings ============
resources:
  requests:
    cpu: "500m"
    memory: "1Gi"
  limits:
    cpu: "500m"
    memory: "1Gi"
# ============ Kibana settings ============
## Add a locale setting to the Kibana config to switch the UI to Chinese
kibanaConfig:
  kibana.yml: |
    i18n.locale: "zh-CN"
# ============ Service settings ============
service:
  type: NodePort
  nodePort: "30601"

Create the Kibana release with Helm:

# Install the release
helm install -nelk kibana elastic/kibana -f kibana-values.yaml --version 6.8.18 --debug

# View the logs (substitute your own pod name)
kubectl logs kibana-kibana-875887d58-846nw -nelk

# View the pod description and startup events
kubectl describe po kibana-kibana-875887d58-846nw -nelk

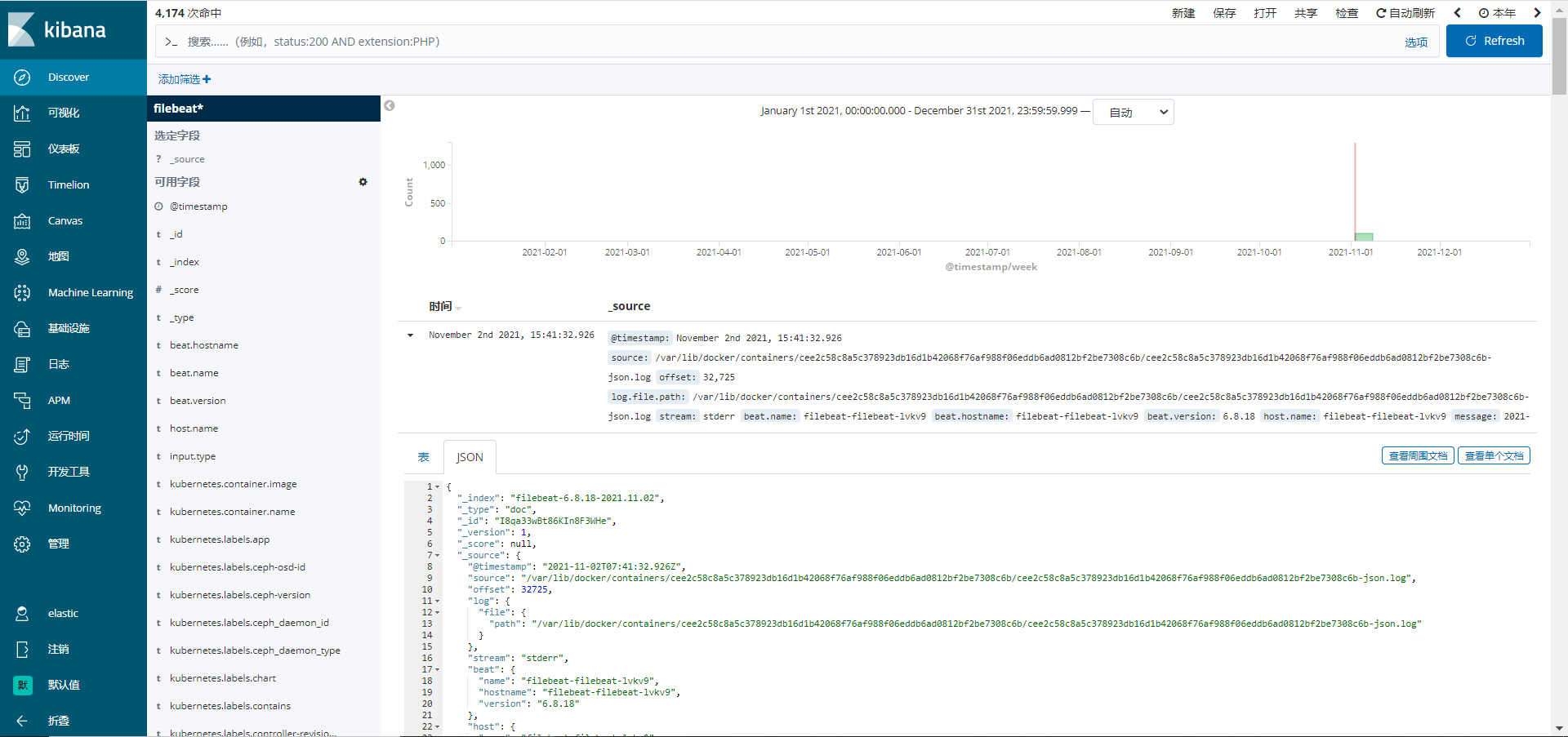

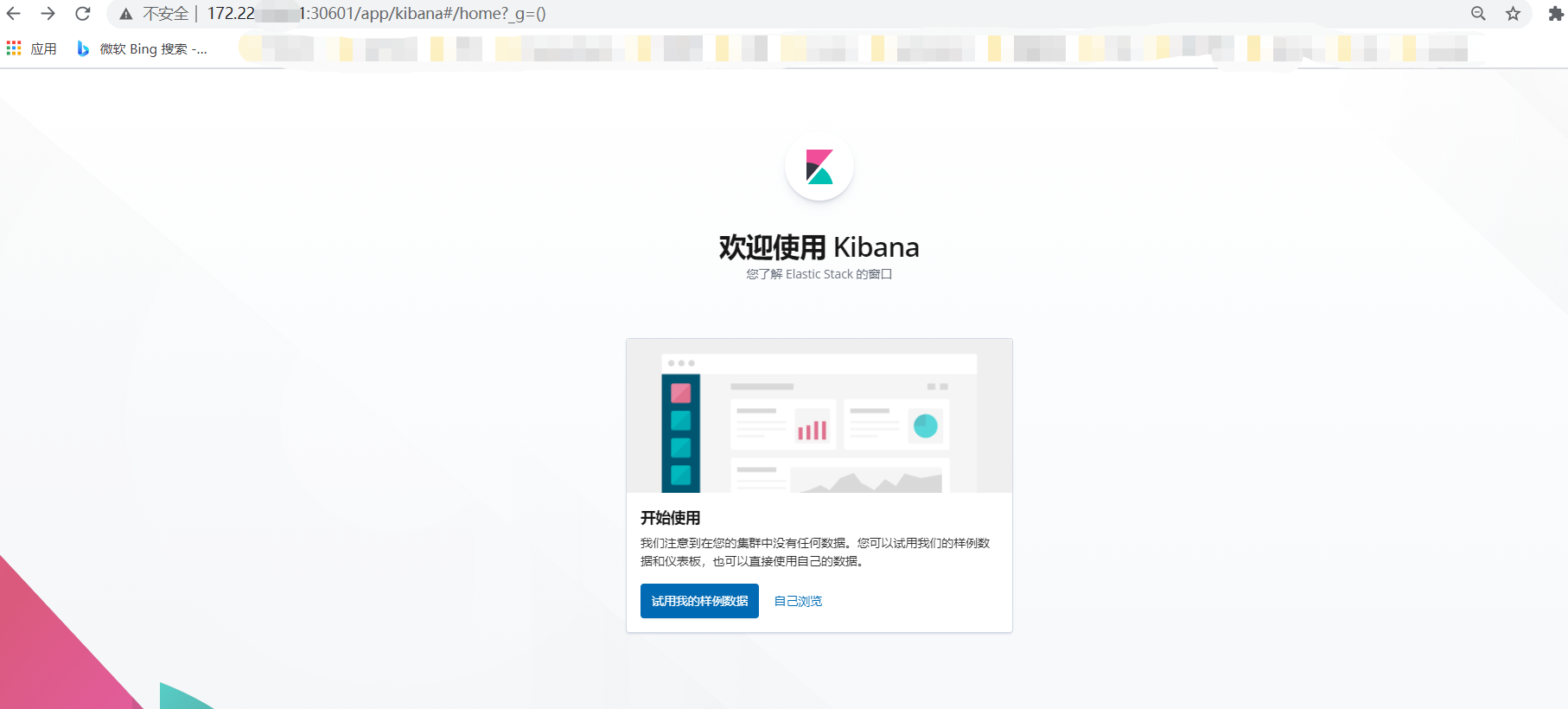

Verify that Kibana connects to ES; log in with the username/password you configured.

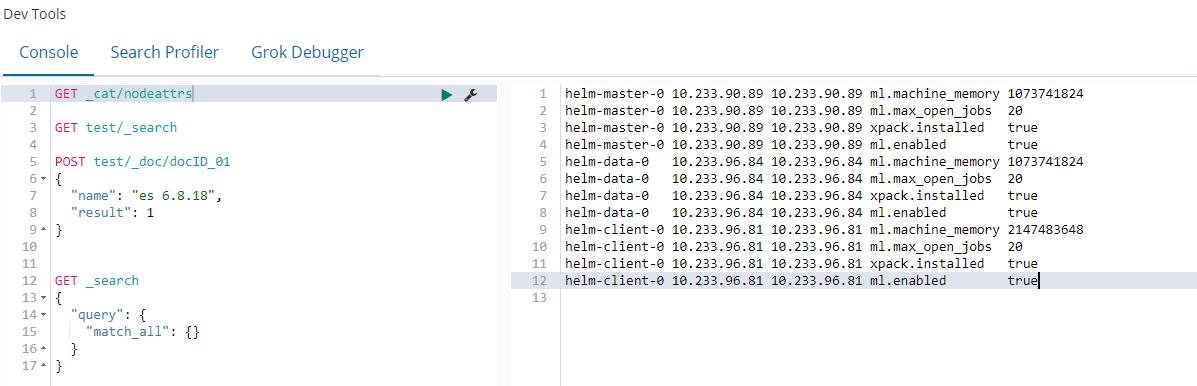

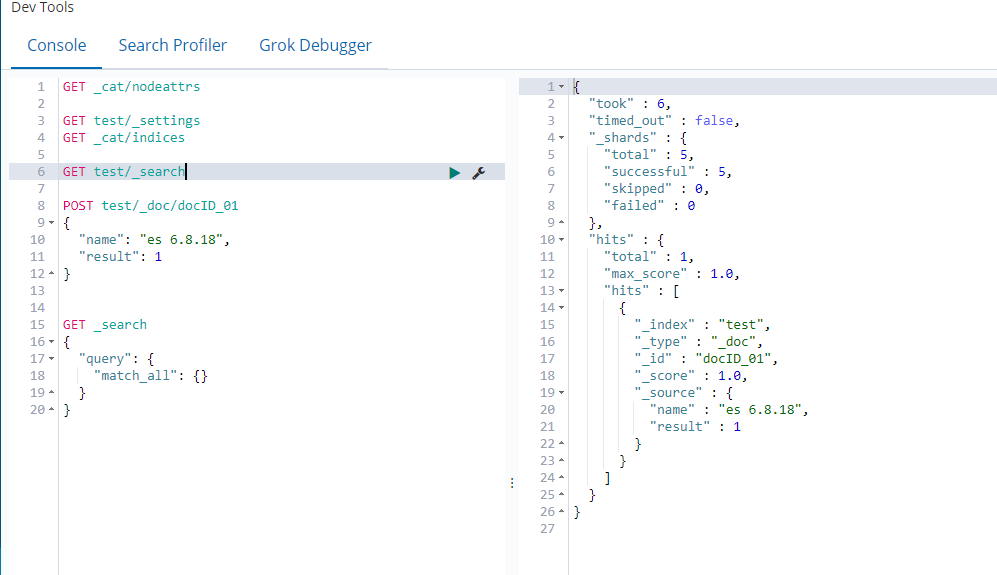

Verify the connected nodes:

Insert data and verify:

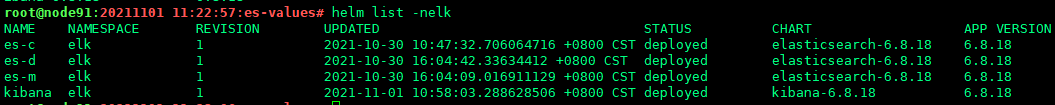

10. List the installed Helm releases

Use the command helm list [-n mynamespace]

This shows the 4 deployed releases: the ES master/data/client releases and Kibana, all deployed successfully.
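The same check can be scripted. The sketch below reads release names from stdin, as printed by `helm list -q`, and reports any of the four releases installed in this post (es-m, es-d, es-c, kibana) that are missing. The function name is made up for illustration.

```shell
#!/usr/bin/env bash
# Print every expected release that is absent from the list on stdin.
# Expected names are the release names used in this post.
# (missing_releases is a hypothetical helper name.)
missing_releases() {
  local installed expected
  installed="$(cat)"
  for expected in es-m es-d es-c kibana; do
    printf '%s\n' "$installed" | grep -qx "$expected" || echo "$expected"
  done
}

# Usage:
#   helm list -n elk -q | missing_releases
```

Empty output means all four releases are present. A release in a failed state may still be listed, so the STATUS column of the full `helm list` output should be checked as well.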

11. Deploy Filebeat

Values file for the deployment (filebeat-values.yaml):

# Image to use
image: "docker.elastic.co/beats/filebeat"
imageTag: "6.8.18"
# Filebeat configuration
filebeatConfig:
  filebeat.yml: |
    filebeat.inputs:
    - type: docker
      containers.ids:
      - '*'
      processors:
      - add_kubernetes_metadata:
          in_cluster: true
    output.elasticsearch:
      # elasticsearch user, taken from the environment variable defined below
      username: '${ELASTICSEARCH_USERNAME}'
      # elasticsearch password, taken from the environment variable defined below
      password: '${ELASTICSEARCH_PASSWORD}'
      # elasticsearch host
      hosts: ["helm-client:9200"]
# Environment variables
extraEnvs:
  - name: 'ELASTICSEARCH_USERNAME'
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username
  - name: 'ELASTICSEARCH_PASSWORD'
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password

Deploy Filebeat:

helm install filebeat -f filebeat-values.yaml -nelk elastic/filebeat --version 6.8.18 --debug

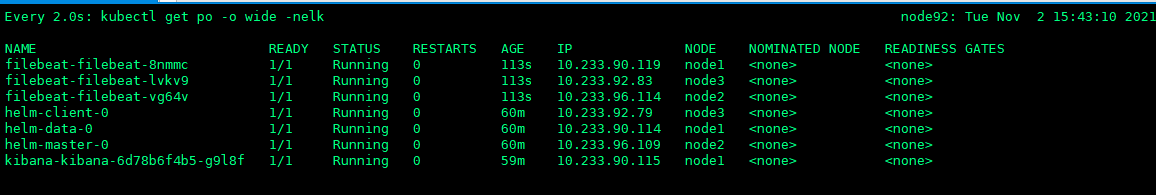

Filebeat is deployed as a DaemonSet, so with the default settings every node should get a Filebeat pod.
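To confirm that every node really runs a ready Filebeat pod, the DaemonSet status fields `desiredNumberScheduled` and `numberReady` can be compared. The helper below is a sketch with a made-up name. The DaemonSet name `filebeat-filebeat` in the usage comment is an assumption (release name plus chart name) and should be checked with `kubectl get ds -n elk`.

```shell
#!/usr/bin/env bash
# Succeeds when the two integers on stdin ("desired ready")
# are equal and greater than zero.
# (daemonset_ready is a hypothetical helper name.)
daemonset_ready() {
  local desired ready
  read -r desired ready || true   # tolerate input without a trailing newline
  [ -n "$desired" ] && [ "$desired" -gt 0 ] && [ "$desired" = "$ready" ]
}

# Usage (the DaemonSet name is an assumption):
#   kubectl get ds -n elk filebeat-filebeat \
#     -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}' \
#     | daemonset_ready && echo "filebeat is ready on every node"
```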

Check the Filebeat pods; they are already in the Ready state:

Then view the Filebeat logs in Kibana: