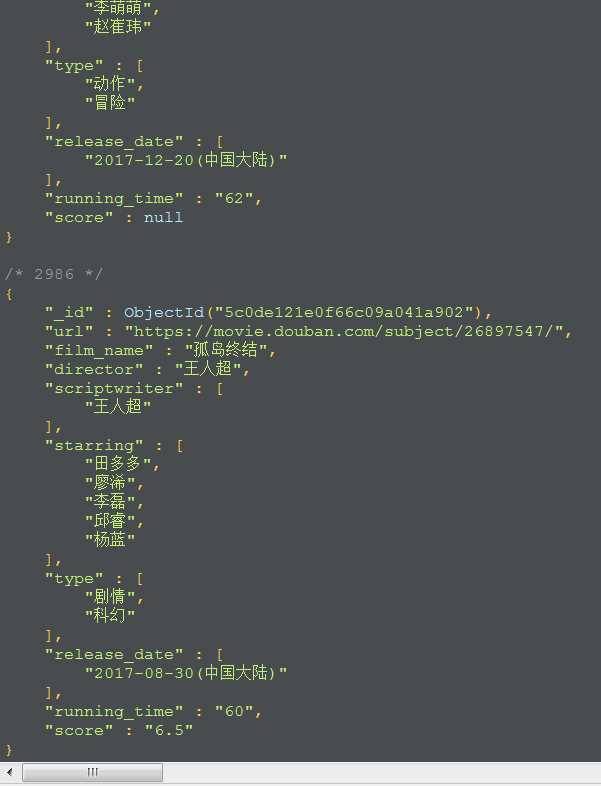

昨天写了一个小爬虫,爬取了豆瓣上2017年中国大陆的电影信息,网址为豆瓣选影视,爬取了电影的名称、导演、编剧、主演、类型、上映时间、片长、评分和链接,并保存到MongoDB中。

一开始用的本机的IP地址,没用代理IP,请求了十几个网页之后就收不到数据了,报HTTP错误302,然后用浏览器打开网页试了一下,发现浏览器也是302。。。

但是我不怕,我有代理IP,哈哈哈!详见我前一篇随笔:爬取代理IP。

使用代理IP之后果然可以持续收到数据了,但中间还是有302错误,没事,用另一个代理IP请求重新请求一次就好了,一次不行再来一次,再来一次不行那就再再来一次,再再不行,那。。。

下面附上部分代码吧。

1.爬虫文件

import scrapy

import json

from douban.items import DoubanItem

parse_url = "https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=%E7%94%B5%E5%BD%B1&start={}&countries=%E4%B8%AD%E5%9B%BD%E5%A4%A7%E9%99%86&year_range=2017,2017"

class Cn2017Spider(scrapy.Spider):

name = 'cn2017'

allowed_domains = ['douban.com']

start_urls = ['https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=%E7%94%B5%E5%BD%B1&start=0&countries=%E4%B8%AD%E5%9B%BD%E5%A4%A7%E9%99%86&year_range=2017,2017']

def parse(self, response):

data = json.loads(response.body.decode())

if data is not None:

for film in data["data"]:

print(film["url"])

item = DoubanItem()

item["url"] = film["url"]

yield scrapy.Request(

film["url"],

callback=self.get_detail_content,

meta={"item": item}

)

for page in range(20,3200,20):

yield scrapy.Request(

parse_url.format(page),

callback=self.parse

)

def get_detail_content(self,response):

item = response.meta["item"]

item["film_name"] = response.xpath("//div[@id='content']//span[@property='v:itemreviewed']/text()").extract_first()

item["director"] = response.xpath("//div[@id='info']/span[1]/span[2]/a/text()").extract_first()

item["scriptwriter"] = response.xpath("///div[@id='info']/span[2]/span[2]/a/text()").extract()

item["starring"] = response.xpath("//div[@id='info']/span[3]/span[2]/a[position()<6]/text()").extract()

item["type"] = response.xpath("//div[@id='info']/span[@property='v:genre']/text()").extract()

item["release_date"] = response.xpath("//div[@id='info']/span[@property='v:initialReleaseDate']/text()").extract()

item["running_time"] = response.xpath("//div[@id='info']/span[@property='v:runtime']/@content").extract_first()

item["score"] = response.xpath("//div[@class='rating_self clearfix']/strong/text()").extract_first()

# print(item)

if item["film_name"] is None:

# print("*" * 100)

yield scrapy.Request(

item["url"],

callback=self.get_detail_content,

meta={"item": item},

dont_filter=True

)

else:

yield item

2.items.py文件

import scrapy

class DoubanItem(scrapy.Item):

#电影名称

film_name = scrapy.Field()

#导演

director = scrapy.Field()

#编剧

scriptwriter = scrapy.Field()

#主演

starring = scrapy.Field()

#类型

type = scrapy.Field()

#上映时间

release_date = scrapy.Field()

#片长

running_time = scrapy.Field()

#评分

score = scrapy.Field()

#链接

url = scrapy.Field()

3.middlewares.py文件

from douban.settings import USER_AGENT_LIST

import random

import pandas as pd

class UserAgentMiddleware(object):

def process_request(self, request, spider):

user_agent = random.choice(USER_AGENT_LIST)

request.headers["User-Agent"] = user_agent

return None

class ProxyMiddleware(object):

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

ip_df = pd.read_csv(r"C:\Users\Administrator\Desktop\douban\douban\ip.csv")

ip = random.choice(ip_df.loc[:, "ip"])

request.meta["proxy"] = "http://" + ip

return None

4.pipelines.py文件

from pymongo import MongoClient

client = MongoClient()

collection = client["test"]["douban"]

class DoubanPipeline(object):

def process_item(self, item, spider):

collection.insert(dict(item))

5.settings.py文件

DOWNLOADER_MIDDLEWARES = {

'douban.middlewares.UserAgentMiddleware': 543,

'douban.middlewares.ProxyMiddleware': 544,

}

ITEM_PIPELINES = {

'douban.pipelines.DoubanPipeline': 300,

}

ROBOTSTXT_OBEY = False

DOWNLOAD_TIMEOUT = 10

RETRY_ENABLED = True

RETRY_TIMES = 10

程序共运行1小时20分21.473772秒,抓取到2986条数据。

最后,

还是要每天开心鸭!