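1. Complete the match simulation program and test it.

Only a small change at the end of the code is needed: if the code is correct it runs normally, otherwise it prints Error.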

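from random import random

# Print the program introduction
def printIntro():
    print("19信计2班23号邓若言")  # author's class, student number, and name
    print("This program simulates table tennis matches between two players, A and B")
    print("It needs the ability values of A and B (decimals between 0 and 1)")

# Read the run parameters
def printInputs():
    # float()/int() replace eval(), which would execute whatever the user types
    a = float(input("Enter the ability value of player A (0-1): "))
    b = float(input("Enter the ability value of player B (0-1): "))
    n = int(input("Number of matches to simulate: "))
    return a, b, n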

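# Play n matches; each match is best of seven games (first to 4 games wins)
def simNGames(n, probA, probB):
    winsA, winsB = 0, 0
    for i in range(n):
        gamesA, gamesB = 0, 0
        while gamesA < 4 and gamesB < 4:  # stop as soon as one side has 4 games
            scoreA, scoreB = simOneGame(probA, probB)
            if scoreA > scoreB:
                gamesA += 1
            else:
                gamesB += 1
        if gamesA > gamesB:
            winsA += 1
        else:
            winsB += 1
    return winsA, winsB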

# Play one game
def simOneGame(probA, probB):
    scoreA, scoreB = 0, 0  # initialize the scores of A and B
    serving = "A"
    while not gameOver(scoreA, scoreB):  # loop until the game is over
        if scoreA == 10 and scoreB == 10:
            # at 10:10 the game continues until one side leads by 2
            return simOneGame2(probA, probB)
        if serving == "A":
            if random() < probA:  # a random draw decides the rally
                scoreA += 1
            else:
                serving = "B"
        else:
            if random() < probB:
                scoreB += 1
            else:
                serving = "A"
    return scoreA, scoreB

# Continue a game from 10:10 until one side leads by 2
def simOneGame2(probA, probB):
    scoreA, scoreB = 10, 10
    serving = "A"
    while not gameOver2(scoreA, scoreB):
        if serving == "A":
            if random() < probA:
                scoreA += 1
            else:
                serving = "B"
        else:
            if random() < probB:
                scoreB += 1
            else:
                serving = "A"
    return scoreA, scoreB

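# End-of-game checks
def gameOver(a, b):  # normal play: the game ends when either side reaches 11
    return a == 11 or b == 11

def gameOver2(a, b):  # after 10:10: the game ends once one side leads by 2
    return abs(a - b) >= 2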

# Print the results
def printSummary(winsA, winsB):
    n = winsA + winsB
    print("Analysis started, {} matches simulated in total".format(n))
    print("Player A won {} matches, share {:0.1%}".format(winsA, winsA/n))
    print("Player B won {} matches, share {:0.1%}".format(winsB, winsB/n))

# Main function
def main():
    printIntro()
    probA, probB, n = printInputs()
    winsA, winsB = simNGames(n, probA, probB)
    printSummary(winsA, winsB)

# Run the program; print Error! if anything goes wrong
try:
    main()
except Exception:
    print("Error!")

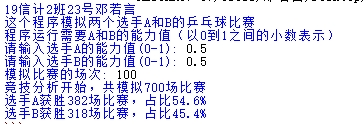

结果如下:

则测试得代码无误。
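A single interactive run only shows that nothing crashes. As a rough additional check, the scoring rules can be exercised with a seeded random generator so that runs are repeatable. The sketch below is a minimal, self-contained restatement of the rules described above (game to 11, two-point lead required from 10:10, best of seven games), not the program itself; the names `sim_game` and `sim_match`, the seed, and the ability values 0.6 and 0.4 are assumptions chosen for illustration.

```python
from random import Random

def sim_game(rng, prob_a, prob_b):
    """Play one game: first to 11, and from 10:10 on a 2-point lead is required."""
    score_a, score_b = 0, 0
    serving = "A"  # as in the program above, A always serves first
    while not (max(score_a, score_b) >= 11 and abs(score_a - score_b) >= 2):
        if serving == "A":
            if rng.random() < prob_a:
                score_a += 1      # only the serving side can score
            else:
                serving = "B"     # otherwise the serve changes hands
        else:
            if rng.random() < prob_b:
                score_b += 1
            else:
                serving = "A"
    return score_a, score_b

def sim_match(rng, prob_a, prob_b):
    """Play a best-of-seven match; return True if A takes 4 games first."""
    games_a = games_b = 0
    while games_a < 4 and games_b < 4:
        a, b = sim_game(rng, prob_a, prob_b)
        if a > b:
            games_a += 1
        else:
            games_b += 1
    return games_a == 4

rng = Random(2020)  # fixed seed (arbitrary) so repeated runs give the same numbers
n = 200
wins_a = sum(sim_match(rng, 0.6, 0.4) for _ in range(n))
print("A won {} of {} matches".format(wins_a, n))
```

With a clearly stronger player A, A should win the large majority of matches; if it does not, the scoring logic is suspect.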

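2. Use the get() function of the requests library to access the Bing or Sogou home page 20 times, print the returned status code and the text content, and compute the lengths of the page content returned by the text attribute and the content attribute.

For more on the requests library, see the following link:

https://www.cnblogs.com/deng11/p/12863994.html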

import requests

for i in range(20):
    r = requests.get("https://www.sogou.com", timeout=30)  # the URL can be changed
    r.raise_for_status()  # raise an exception on a 4xx/5xx response
    r.encoding = 'utf-8'
    print('status={}'.format(r.status_code))
    print(r.text)
    print('text length {}, content length {}'.format(len(r.text), len(r.content)))

The result is as follows (one of the 20 runs; the text attribute is too long to show here):
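The two lengths printed by the loop usually differ because `r.text` is a decoded `str` (counted in characters) while `r.content` is the raw `bytes` (counted in bytes). The sketch below reproduces the effect offline with plain Python strings; the page fragment is a made-up stand-in, not real Sogou output.

```python
# Hypothetical page fragment standing in for a downloaded page
page = "<title>搜狗搜索</title>"

raw = page.encode("utf-8")         # plays the role of r.content (bytes)
decoded = raw.decode("utf-8")      # plays the role of r.text with r.encoding = 'utf-8'
wrong = raw.decode("iso-8859-1")   # what the text looks like if the encoding is guessed wrong

print(len(decoded), len(raw))      # 19 27: each Chinese character takes 3 bytes in UTF-8
print(len(wrong))                  # 27: one (garbled) character per byte
```

This is also why setting `r.encoding` before reading `r.text` matters: with the wrong encoding the text length equals the byte length and the Chinese characters are garbled.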

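3. Save the given html page as a string and complete the following tasks:

(1) Print the content of the head tag together with the last two digits of your student number

(2) Get the content of the body tag

(3) Get the tag object whose id is first

(4) Get and print the Chinese characters in the html page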

<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>菜鸟教程(runoob.com)</title>
</head>
<body>
<h1>我的第一个标题</h1>
<p id="first">我的第一个段落。</p>
</body>
<table border="1">
<tr>
<td>row 1, cell 1</td>
<td>row 1, cell 2</td>
</tr>
</table>
</html>

The code is as follows:

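import re
from bs4 import BeautifulSoup

r = '''
<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>菜鸟教程(runoob.com) 23号的作业</title>
</head>
<body>
<h1>我的第一个标题</h1>
<p id="first">我的第一个段落。</p>
</body>
<table border="1">
<tr>
<td>row 1, cell 1</td>
<td>row 1, cell 2</td>
</tr>
</table>
</html>
'''

demo = BeautifulSoup(r, "html.parser")
print(demo.head)              # (1) head tag; its title carries the student number suffix 23
print(demo.body)              # (2) body tag
print(demo.find(id="first"))  # (3) tag whose id is "first"
# (4) demo.string is None for a document with several children,
#     so collect the runs of Chinese characters from the page text instead
print(re.findall(r"[\u4e00-\u9fff]+", demo.get_text()))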

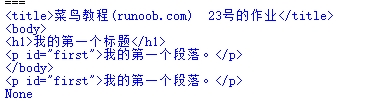

效果如下:
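For task (4), one common way to pick out Chinese characters is a regular expression over the CJK Unified Ideographs block. The sketch below uses only the standard library and the strings from the page above; the range `\u4e00-\u9fff` is an assumption about what counts as a "Chinese character" here (it excludes punctuation such as "。" and rarer extension blocks).

```python
import re

# Basic CJK Unified Ideographs block; punctuation such as "。" lies outside it
HAN = re.compile(r"[\u4e00-\u9fff]+")

samples = [
    "菜鸟教程(runoob.com) 23号的作业",
    "我的第一个段落。",
    "row 1, cell 1",
]
for s in samples:
    # findall returns every maximal run of Chinese characters in the string
    print(HAN.findall(s))
```

The ASCII parts, the digits, and the full stop "。" are dropped, and a string with no Chinese characters gives an empty list.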

4. Scrape the Chinese university ranking (year 2016) and save the data as a csv file.

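import requests
from bs4 import BeautifulSoup

ALL = []  # one list of cell strings per university

# Download the page; return an empty string if the request fails
def getHTMLtext(url):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""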

# Collect the cells of every table row into ALL
def fillUni(soup):
    data = soup.find_all('tr')
    for tr in data:
        td1 = tr.find_all('td')
        if len(td1) == 0:  # header rows contain th cells only, skip them
            continue
        Single = []
        for td in td1:
            Single.append(td.string)
        ALL.append(Single)

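# Print the first num rows; chr(12288) is the full-width space used as fill
# so that columns of Chinese text line up.
# Headers: rank, university, province/city, total score, student quality,
# training outcome, research scale, research quality, top achievements,
# top talent, technology service, industry-university-research cooperation,
# achievement transfer
def printUni(num):
    print("{1:^2}{2:{0}^10}{3:{0}^6}{4:{0}^6}{5:{0}^6}{6:{0}^6}{7:{0}^6}{8:{0}^6}{9:{0}^5}{10:{0}^6}{11:{0}^6}{12:{0}^6}{13:{0}^6}".format(
        chr(12288), "排名", "学校名称", "省市", "总分",
        "生源质量", "培养结果", "科研规模", "科研质量",
        "顶尖成果", "顶尖人才", "科技服务",
        "产学研究合作", "成果转化"))
    for u in ALL[:num]:  # slicing avoids an IndexError when fewer rows were scraped
        print("{1:^4}{2:{0}^10}{3:{0}^6}{4:{0}^8}{5:{0}^9}{6:{0}^9}{7:{0}^7}{8:{0}^9}{9:{0}^7}{10:{0}^9}{11:{0}^8}{12:{0}^9}{13:{0}^9}".format(
            chr(12288), u[0],
            u[1], u[2], float(u[3]),  # float() instead of eval() on scraped text
            u[4], u[5], u[6], u[7], u[8],
            u[9], u[10], u[11], u[12]))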

def main(num):
    url = "http://www.zuihaodaxue.com/zuihaodaxuepaiming2016.html"
    html = getHTMLtext(url)
    soup = BeautifulSoup(html, "html.parser")
    fillUni(soup)
    printUni(num)

main(10)

Result:
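A note on the column alignment in printUni(): the format strings pass `chr(12288)` (the full-width space, U+3000) as the fill character, because padding Chinese text with ordinary ASCII spaces leaves the columns ragged in most terminals. The sketch below isolates that trick for three columns; `fmt_row` and the sample rows are hypothetical and carry no real ranking data.

```python
FULL_WIDTH_SPACE = chr(12288)  # U+3000, as wide as one Chinese character

def fmt_row(rank, name, region):
    # {0} is the fill character, "^" centers, the number is the field width
    return "{1:^4}{2:{0}^10}{3:{0}^6}".format(FULL_WIDTH_SPACE, rank, name, region)

# Made-up sample rows, for illustration only
rows = [
    ("1", "清华大学", "北京"),
    ("2", "中国科学技术大学", "安徽"),
]
print(fmt_row("排名", "学校名称", "省市"))
for row in rows:
    print(fmt_row(*row))
```

Every line has the same number of characters, and because the padding inside the Chinese columns is full-width, the columns also line up visually.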

To save the scraped data as a csv file, only the printUni() function needs to be replaced.

The modified code is as follows:

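import requests
from bs4 import BeautifulSoup
import csv
import os

ALL = []  # one list of cell strings per university

# Download the page; return an empty string if the request fails
def getHTMLtext(url):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""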

# Collect the cells of every table row into ALL
def fillUni(soup):
    data = soup.find_all('tr')
    for tr in data:
        td1 = tr.find_all('td')
        if len(td1) == 0:  # header rows contain th cells only, skip them
            continue
        Single = []
        for td in td1:
            Single.append(td.string)
        ALL.append(Single)

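# Write the first num rows to save_road; the header is written only for a new file
def writercsv(save_road, num, title):
    if os.path.isfile(save_road):
        # the file already exists: append the rows without repeating the header
        with open(save_road, 'a', newline='', encoding='utf-8-sig') as f:
            csv_write = csv.writer(f, dialect='excel')
            for u in ALL[:num]:
                csv_write.writerow(u)
    else:
        # new file: write the header first; utf-8-sig lets Excel detect the encoding
        with open(save_road, 'w', newline='', encoding='utf-8-sig') as f:
            csv_write = csv.writer(f, dialect='excel')
            csv_write.writerow(title)
            for u in ALL[:num]:
                csv_write.writerow(u)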

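# Column headers, in the same order as in printUni()
title = ["排名", "学校名称", "省市", "总分", "生源质量", "培养结果", "科研规模", "科研质量", "顶尖成果", "顶尖人才", "科技服务", "产学研究合作", "成果转化"]

# Raw string: in a normal string "\U" starts a unicode escape and is a SyntaxError
save_road = r"C:\Users\邓若言\Desktop\html.csv"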

def main(num):
    url = "http://www.zuihaodaxue.com/zuihaodaxuepaiming2016.html"
    html = getHTMLtext(url)
    soup = BeautifulSoup(html, "html.parser")
    fillUni(soup)
    writercsv(save_road, num, title)

main(10)

Result:
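The idea behind writercsv(), writing the header once and appending on later runs, can be tried out without touching the desktop path or the network. The sketch below is a compact variant under stated assumptions: `append_rows`, the temporary file, and the placeholder rows are hypothetical and not part of the program above.

```python
import csv
import os
import tempfile

def append_rows(path, header, rows):
    """Append rows to a CSV file, writing the header only when the file is new."""
    is_new = not os.path.isfile(path)
    # newline='' lets the csv module control line endings (avoids blank rows on Windows)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(header)
        writer.writerows(rows)

header = ["rank", "name"]  # placeholder columns
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.csv")
    append_rows(path, header, [["1", "University A"]])   # first call creates the file
    append_rows(path, header, [["2", "University B"]])   # second call only appends
    with open(path, newline="", encoding="utf-8") as f:
        result = list(csv.reader(f))

print(result)
```

Reading the file back shows a single header row followed by the rows from both calls, which is the behavior the two branches of writercsv() aim for.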