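This example uses Ceph Nautilus (the N release) with BlueStore rather than FileStore.

In production, SSDs are detected automatically and the ssd device class is created for them. Cluster topology before the change: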

# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-40 58.21582 root hdd

-41 14.55396 rack shzjJ08-HDD

-4 14.55396 host shzj-computer-167

32 hdd 7.27698 osd.32 down 0 1.00000

33 hdd 7.27698 osd.33 down 0 1.00000

-58 14.55396 rack shzjJ09-HDD

-10 14.55396 host shzj-computer-168

34 hdd 7.27698 osd.34 up 1.00000 1.00000

35 hdd 7.27698 osd.35 up 1.00000 1.00000

-61 14.55396 rack shzjJ10-HDD

-16 14.55396 host shzj-computer-169

36 hdd 7.27698 osd.36 up 1.00000 1.00000

37 hdd 7.27698 osd.37 up 1.00000 1.00000

-64 14.55396 rack shzjJ11-HDD

-24 14.55396 host shzj-computer-170

38 hdd 7.27698 osd.38 up 1.00000 1.00000

39 hdd 7.27698 osd.39 up 1.00000 1.00000

-1 29.07599 root ssd

-19 7.26900 rack shzjJ08

-7 3.63399 host shzj-computer-159

2 ssd 0.90900 osd.2 up 1.00000 1.00000

5 ssd 0.90900 osd.5 up 1.00000 1.00000

8 ssd 0.90900 osd.8 up 1.00000 1.00000

30 ssd 0.90900 osd.30 up 1.00000 1.00000

-11 3.63399 host shzj-computer-163

10 ssd 0.90900 osd.10 up 1.00000 1.00000

12 ssd 0.90900 osd.12 up 1.00000 1.00000

22 ssd 0.90900 osd.22 up 1.00000 1.00000

23 ssd 0.90900 osd.23 up 1.00000 1.00000

-20 7.26900 rack shzjJ09

-9 3.63399 host shzj-computer-160

9 ssd 0.90900 osd.9 up 1.00000 1.00000

20 ssd 0.90900 osd.20 up 1.00000 1.00000

21 ssd 0.90900 osd.21 up 1.00000 1.00000

31 ssd 0.90900 osd.31 up 1.00000 1.00000

-13 3.63399 host shzj-computer-164

3 ssd 0.90900 osd.3 up 1.00000 1.00000

24 ssd 0.90900 osd.24 up 1.00000 1.00000

25 ssd 0.90900 osd.25 up 1.00000 1.00000

29 ssd 0.90900 osd.29 up 1.00000 1.00000

-21 7.26900 rack shzjJ10

-3 3.63399 host shzj-computer-161

0 ssd 0.90900 osd.0 up 1.00000 1.00000

4 ssd 0.90900 osd.4 up 1.00000 1.00000

16 ssd 0.90900 osd.16 up 1.00000 1.00000

19 ssd 0.90900 osd.19 up 1.00000 1.00000

-15 3.63399 host shzj-computer-165

6 ssd 0.90900 osd.6 up 1.00000 1.00000

14 ssd 0.90900 osd.14 up 1.00000 1.00000

26 ssd 0.90900 osd.26 up 1.00000 1.00000

27 ssd 0.90900 osd.27 up 1.00000 1.00000

-22 7.26900 rack shzjJ12

-5 3.63399 host shzj-computer-162

1 ssd 0.90900 osd.1 up 1.00000 1.00000

7 ssd 0.90900 osd.7 up 1.00000 1.00000

17 ssd 0.90900 osd.17 up 1.00000 1.00000

18 ssd 0.90900 osd.18 up 1.00000 1.00000

-17 3.63399 host shzj-computer-166

11 ssd 0.90900 osd.11 up 1.00000 1.00000

13 ssd 0.90900 osd.13 up 1.00000 1.00000

15 ssd 0.90900 osd.15 up 1.00000 1.00000

28 ssd 0.90900 osd.28 up 1.00000 1.00000

List the device classes; two classes already exist:

# ceph osd crush class ls

[

"ssd",

"hdd"

]

List the CRUSH rules; two rules have already been created:

# ceph osd crush rule ls

ssd_rule

hdd_rule

# ceph osd crush rule dump ssd_rule

{

"rule_id": 0,

"rule_name": "ssd_rule",

"ruleset": 0,

"type": 1,

"min_size": 1,

"max_size": 10,

"steps": [

{

"op": "take",

"item": -1,

"item_name": "ssd"

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "rack"

},

{

"op": "emit"

}

]

}

# ceph osd crush rule dump hdd_rule

{

"rule_id": 1,

"rule_name": "hdd_rule",

"ruleset": 1,

"type": 1,

"min_size": 1,

"max_size": 10,

"steps": [

{

"op": "take",

"item": -40,

"item_name": "hdd"

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "rack"

},

{

"op": "emit"

}

]

}

------------------------------------------------------------------------------

If no SSD rule exists yet, create one with the following command:

# ceph osd crush rule create-replicated ssd_rule ssd rack

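The command syntax is:

ceph osd crush rule create-replicated <name> <root> <type> {<class>}

It creates a CRUSH rule <name> for replicated pools that starts from <root>, replicates across buckets of type <type>, and optionally uses only devices of class <class> (ssd or hdd). In the command above, ssd is the name of the root bucket shown in the tree output, not a device class.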
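The optional class argument matters mostly on clusters that keep SSDs and HDDs under a single root. As a minimal sketch, the wrapper below creates a rule only when it is missing. The function name ensure_replicated_rule is hypothetical, and the root called "default" in the second usage line is an assumption that does not apply to the cluster in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a replicated CRUSH rule only if it is missing.
# Arguments: <name> <root> <failure-domain-type> [<class>]
ensure_replicated_rule() {
    local name="$1"
    # "ceph osd crush rule ls" prints one rule name per line.
    if ceph osd crush rule ls | grep -qx -- "$name"; then
        echo "rule $name already exists"
        return 0
    fi
    ceph osd crush rule create-replicated "$@"
}

# Usage on this cluster (separate "ssd" root, so no class argument):
#   ensure_replicated_rule ssd_rule ssd rack
# Usage on a cluster with one root named "default" (an assumption):
#   ensure_replicated_rule ssd_rule default rack ssd
```

The check before creation keeps the helper safe to re-run, for example from a provisioning script.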

----------------------------------------------------------------------------------

Create a pool that uses the ssd_rule rule:

# ceph osd pool create ssd_volumes 64 64 ssd_rule

Check the pool:

# ceph osd pool ls detail | grep ssd_volumes

pool 4 'ssd_volumes' replicated size 3 min_size 1 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 autoscale_mode warn last_change 273444 flags hashpspool stripe_width 0
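The pool listing shows crush_rule 0, which is the rule_id of ssd_rule in the dump earlier. A scripted version of the same check might look like the sketch below. The function name pool_uses_rule is hypothetical, and the parsing assumes that ceph osd pool get prints a line of the form "crush_rule: <name>".

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if <pool> is bound to CRUSH rule <rule>.
pool_uses_rule() {
    local pool="$1" rule="$2" actual
    # Assumed output format: "crush_rule: ssd_rule"; take the second field.
    actual=$(ceph osd pool get "$pool" crush_rule | awk '{print $2}')
    [ "$actual" = "$rule" ]
}

# Usage (not executed here):
#   pool_uses_rule ssd_volumes ssd_rule && echo "ssd_volumes is on SSD"
```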

Check the current capabilities of client.cinder:

# ceph auth list

.

.

.

client.cinder

key: AQBnqfpetDPmMhAAiQ/vcxeVUExcAK51kGYhpA==

caps: [mon] allow r

caps: [osd] allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images

client.glance

key: AQBSqfpezszOHRaAyAQq0UIY+9x2b88q3UCLtQ==

caps: [mon] allow r

caps: [osd] allow class-read object_prefix rbd_children, allow rwx pool=images

.

.

.

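Update the client.cinder capabilities. Because ceph auth caps overwrites the existing capabilities, the original pools are listed again together with ssd_volumes: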

# ceph auth caps client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=ssd_volumes,allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'
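Since the full capability string has to be restated on every change, it can help to build it from a list of pools. The following is only a sketch: build_osd_caps and its "--ro" separator are hypothetical conventions introduced here, not part of the ceph CLI.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the OSD capability string for client.cinder.
# Pools listed before "--ro" get "rwx"; pools listed after it get "rx".
build_osd_caps() {
    local caps="allow class-read object_prefix rbd_children"
    local mode="rwx" pool
    for pool in "$@"; do
        if [ "$pool" = "--ro" ]; then
            mode="rx"
            continue
        fi
        caps="$caps, allow $mode pool=$pool"
    done
    printf '%s\n' "$caps"
}

# Usage (not executed here):
#   ceph auth caps client.cinder mon 'allow r' \
#       osd "$(build_osd_caps ssd_volumes volumes vms --ro images)"
#   ceph auth get client.cinder
```

ceph auth get client.cinder prints only that one entity, which is easier to read than the full ceph auth list output.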

Check the account again to confirm the new capabilities:

# ceph auth list

.

.

.

client.cinder

key: AQBnqfpetDPmMhAAiQ/vcxeVUExcAK51kGYhpA==

caps: [mon] allow r

caps: [osd] allow class-read object_prefix rbd_children,allow rwx pool=ssd_volumes,allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images

client.glance

key: AQBSqfpezszOHRaAyAQq0UIY+9x2b88q3UCLtQ==

caps: [mon] allow r

caps: [osd] allow class-read object_prefix rbd_children, allow rwx pool=images

.

.

.

Add the new backend to the OpenStack cinder-volume configuration and create a volume

Add the following to /etc/cinder/cinder.conf so that Cinder uses two Ceph pools, one on HDD and one on SSD:

[DEFAULT]

enabled_backends = ceph,ssd

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = 5

rbd_user = cinder

rbd_secret_uuid = 2b706e33-609e-4542-9cc5-2b01703a292f

volume_backend_name = ceph

report_discard_supported = True

image_upload_use_cinder_backend = True

[ssd]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = ssd_volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = 5

rbd_user = cinder

rbd_secret_uuid = 2b706e33-609e-4542-9cc5-2b01703a292f

volume_backend_name = ssd

report_discard_supported = True

image_upload_use_cinder_backend = True

[oslo_middleware]
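A backend named in enabled_backends without a matching section is an easy mistake to make when editing this file by hand. As a rough sketch, the check below prints every backend name that has no section of its own. The function name missing_backend_sections is hypothetical, and it assumes enabled_backends sits on a single line.

```shell
#!/usr/bin/env bash
# Hypothetical check: every name in enabled_backends must have its own
# [section] in the given cinder.conf. Prints missing names, one per line.
missing_backend_sections() {
    local conf="$1" backends name
    # Extract the value of enabled_backends and split it on commas.
    backends=$(sed -n 's/^[[:space:]]*enabled_backends[[:space:]]*=[[:space:]]*//p' "$conf" | tr ',' ' ')
    for name in $backends; do
        grep -q "^\[$name\]" "$conf" || echo "$name"
    done
}

# Usage (not executed here):
#   missing_backend_sections /etc/cinder/cinder.conf
```

Empty output means every backend has a section.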

Restart the cinder-volume service:

systemctl restart openstack-cinder-volume.service

List the Cinder pools (show pool information for backends):

# cinder get-pools

+----------+-------------------------------+

| Property | Value |

+----------+-------------------------------+

| name | shzj-controller-153@ceph#ceph |

+----------+-------------------------------+

+----------+-------------------------------+

| Property | Value |

+----------+-------------------------------+

| name | shzj-controller-151@ceph#ceph |

+----------+-------------------------------+

+----------+-----------------------------+

| Property | Value |

+----------+-----------------------------+

| name | shzj-controller-153@ssd#ssd |

+----------+-----------------------------+

+----------+-------------------------------+

| Property | Value |

+----------+-------------------------------+

| name | shzj-controller-152@ceph#ceph |

+----------+-------------------------------+

Create a new Cinder volume type and bind it to the ssd backend:

cinder type-create ssd

cinder type-key ssd set volume_backend_name=ssd
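The same two steps can also be done with the unified openstack client, which the rest of this walkthrough already uses for volumes. This is a minimal sketch; the wrapper name create_backend_type is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: create a volume type and pin it to one backend
# through the volume_backend_name extra spec.
create_backend_type() {
    local type_name="$1" backend="$2"
    openstack volume type create "$type_name"
    openstack volume type set --property "volume_backend_name=$backend" "$type_name"
}

# Usage (not executed here):
#   create_backend_type ssd ssd
```

The property value has to match volume_backend_name in the [ssd] section of cinder.conf, otherwise the scheduler finds no suitable backend.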

Check that the cinder-volume services started successfully:

# openstack volume service list

+------------------+--------------------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+--------------------------+------+---------+-------+----------------------------+

| cinder-scheduler | shzj-controller-151 | nova | enabled | up | 2021-03-18T05:48:30.000000 |

| cinder-volume | shzj-controller-151@ceph | nova | enabled | up | 2021-03-18T05:48:37.000000 |

| cinder-volume | shzj-controller-152@ceph | nova | enabled | up | 2021-03-18T05:48:31.000000 |

| cinder-scheduler | shzj-controller-152 | nova | enabled | up | 2021-03-18T05:48:28.000000 |

| cinder-scheduler | shzj-controller-153 | nova | enabled | up | 2021-03-18T05:48:29.000000 |

| cinder-volume | shzj-controller-153@ceph | nova | enabled | up | 2021-03-18T05:48:33.000000 |

| cinder-volume | shzj-controller-153@ssd | nova | enabled | up | 2021-03-18T05:48:28.000000 |

+------------------+--------------------------+------+---------+-------+----------------------------+

Create a volume:

# openstack volume create --type ssd --size 1 disk20210318

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2021-03-18T05:49:22.000000 |

| description | None |

| encrypted | False |

| id | 7bc67df9-d9de-4f55-8284-c7f01cfb4dda |

| migration_status | None |

| multiattach | False |

| name | disk20210318 |

| properties | |

| replication_status | None |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| type | ssd |

| updated_at | None |

| user_id | 380d6b7f829e4c8ab46704cc15222a2d |

+---------------------+--------------------------------------+

# openstack volume list | grep disk20210318

| 7bc67df9-d9de-4f55-8284-c7f01cfb4dda | disk20210318 | available | 1 |
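The volume starts in the creating state and becomes available shortly afterwards. In a script, that transition could be polled as in the sketch below. The function name wait_for_volume, the default of 30 attempts, and the POLL_INTERVAL variable are all assumptions made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a volume until it is "available".
# Arguments: <volume name or id> [<max attempts>, default 30]
wait_for_volume() {
    local name="$1" tries="${2:-30}" status
    while [ "$tries" -gt 0 ]; do
        # -f value -c status prints only the status field.
        status=$(openstack volume show -f value -c status "$name")
        case "$status" in
            available) return 0 ;;
            error) echo "volume $name entered error state" >&2; return 1 ;;
        esac
        tries=$(( tries - 1 ))
        sleep "${POLL_INTERVAL:-2}"
    done
    echo "timed out waiting for $name" >&2
    return 1
}

# Usage (not executed here):
#   wait_for_volume disk20210318 && echo "ready"
```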

On the Ceph side, check that the volume was created in the ssd_volumes pool:

# rbd -p ssd_volumes ls

volume-7bc67df9-d9de-4f55-8284-c7f01cfb4dda

# rbd info ssd_volumes/volume-7bc67df9-d9de-4f55-8284-c7f01cfb4dda

rbd image 'volume-7bc67df9-d9de-4f55-8284-c7f01cfb4dda':

size 1 GiB in 256 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 7485e2ba3dac9b

block_name_prefix: rbd_data.7485e2ba3dac9b

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Thu Mar 18 13:49:24 2021

access_timestamp: Thu Mar 18 13:49:24 2021

modify_timestamp: Thu Mar 18 13:49:24 2021
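Two details of this output can be reproduced by hand. The image name is the volume ID prefixed with "volume-", and the object count follows from the image size and the object order (order 22 means 2^22 bytes, the 4 MiB set by rbd_store_chunk_size = 4). The helper names below are hypothetical and serve only as a worked example.

```shell
#!/usr/bin/env bash
# The image name seen in "rbd ls" is "volume-<volume id>".
rbd_image_spec() { printf '%s/volume-%s\n' "$1" "$2"; }

# Object count: image size in bytes divided by 2^order, rounded up.
rbd_object_count() {
    local size_gib="$1" order="$2"
    local size_bytes=$(( size_gib * 1024 * 1024 * 1024 ))
    local object_bytes=$(( 1 << order ))
    echo $(( (size_bytes + object_bytes - 1) / object_bytes ))
}

rbd_image_spec ssd_volumes 7bc67df9-d9de-4f55-8284-c7f01cfb4dda
# prints ssd_volumes/volume-7bc67df9-d9de-4f55-8284-c7f01cfb4dda
rbd_object_count 1 22
# prints 256
```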

The UUID in the image name above corresponds to the volume ID:

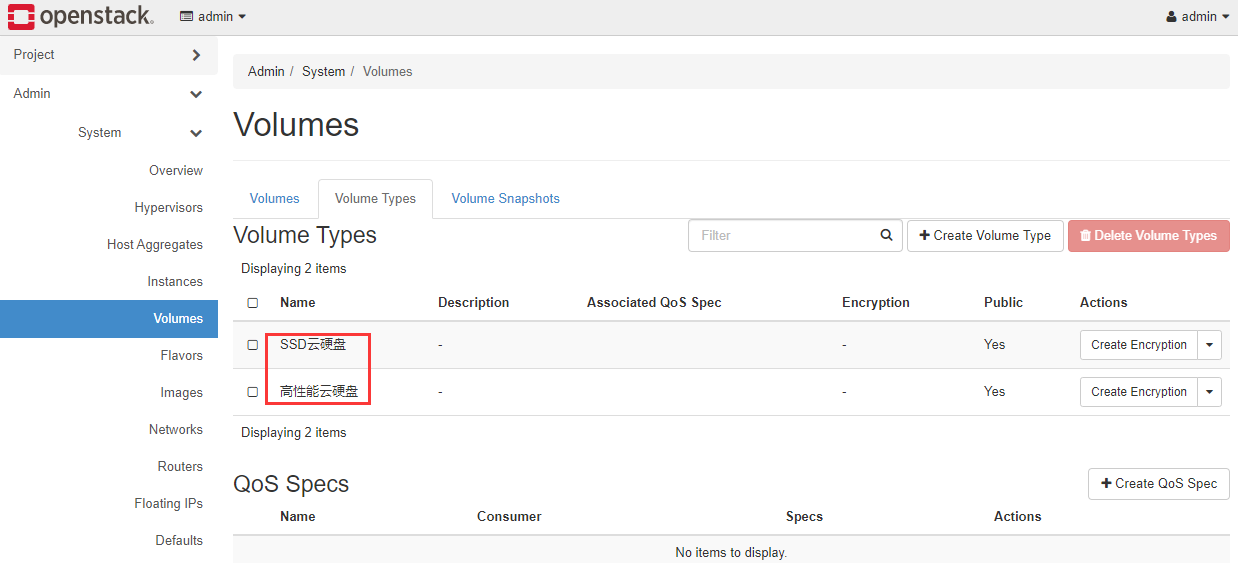

Finally, we can give the volume type a more polished name:

# cinder type-update --name "SSD云硬盘" 724b73de-83a9-4186-b98c-034167a88a18

# cinder type-list

+--------------------------------------+--------------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+--------------+-------------+-----------+

| 724b73de-83a9-4186-b98c-034167a88a18 | SSD云硬盘 | - | True |

| ba44284f-5e23-4a6c-8cc2-f972941e46b5 | 高性能云硬盘 | - | True |

+--------------------------------------+--------------+-------------+-----------+

Author: Dexter_Wang. Position: senior cloud computing and storage engineer at an internet company. Contact email: 993852246@qq.com