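Using an object-oriented design, this post builds a crawler that fetches the list pages of the '李毅' forum (李毅吧) on Baidu Tieba; the code below requests the first 1000 pages. Passing a different forum name when creating the object lets the same code crawl the pages of other forums.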

Library used: requests

Topics covered: Python object-oriented programming, string operations, file operations, basic crawler principles
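To make the string-handling part concrete, here is a minimal sketch of how the page URLs can be built. It assumes that each Tieba list page holds 50 posts, so the `pn` query parameter advances in steps of 50 (0, 50, 100, ...). The helper name `build_page_urls` and the constant `POSTS_PER_PAGE` are made up for this illustration, and `urlencode` is used here only to show the forum name being percent-encoded explicitly.

```python
from urllib.parse import urlencode

POSTS_PER_PAGE = 50  # assumption: one list page shows 50 posts


def build_page_urls(tieba_name, page_count):
    """Return the list-page URLs for the first `page_count` pages of a forum."""
    base = 'https://tieba.baidu.com/f?'
    urls = []
    for page in range(page_count):
        # pn is the offset of the first post on the page, not the page number
        query = urlencode({'kw': tieba_name, 'ie': 'utf-8', 'pn': page * POSTS_PER_PAGE})
        urls.append(base + query)
    return urls


if __name__ == '__main__':
    for url in build_page_urls('李毅', 3):
        print(url)
```

Changing the first argument is all it takes to target a different forum, which is the same idea the class uses with its constructor parameter.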

The program code is as follows:

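import os

import requests


class TiebaSpider:

    def __init__(self, tieba_name):
        self.tieba_name = tieba_name
        # pn is the offset of the first post on a page; it is filled in per page
        self.url_tmp = 'https://tieba.baidu.com/f?kw=' + self.tieba_name + '&ie=utf-8&pn={}'
        self.headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/17.17134"}

    def get_url_list(self):  # build the URL of every page of this forum and collect them in a list
        url_list = []
        for i in range(1000):
            # each list page holds 50 posts, so pn goes 0, 50, 100, ...
            url_list.append(self.url_tmp.format(i * 50))
        return url_list

    def parse_url(self, url):  # request the URL and return the page content of the response
        response = requests.get(url, headers=self.headers)
        return response.content.decode()  # decodes as utf-8 by default

    def save_html(self, html_str, page_num):
        os.makedirs('html', exist_ok=True)  # open() does not create missing directories
        # the file name reads "<forum name>-第N页.html" (第N页 = page N)
        file_path = 'html/{}-第{}页.html'.format(self.tieba_name, page_num)
        # encoding='utf-8' is required here: without it open() uses the platform's
        # default encoding (for example GBK on Chinese-language Windows), which can
        # raise an error for text that was decoded as utf-8
        with open(file_path, 'w', encoding='utf-8') as f:
            f.write(html_str)

    def run(self):
        url_list = self.get_url_list()
        # enumerate gives the page number directly, without searching the list each time
        for page_num, url in enumerate(url_list, start=1):
            html_str = self.parse_url(url)
            self.save_html(html_str, page_num)


if __name__ == '__main__':
    tiebaspider = TiebaSpider('李毅')
    tiebaspider.run()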
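As a variation on the file-saving step in `save_html`, the sketch below uses `pathlib` to create the output directory when it is missing and to write the page as UTF-8. The function name `save_page` and the `out_dir` parameter are assumptions made for this example and are not part of the class above; the file-name pattern is the same one the class uses.

```python
import tempfile
from pathlib import Path


def save_page(html_str, tieba_name, page_num, out_dir='html'):
    """Write one page to <out_dir>/<forum name>-第N页.html and return its path."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)  # create the directory if needed
    file_path = out_path / '{}-第{}页.html'.format(tieba_name, page_num)
    # write explicitly as utf-8 so the result does not depend on the platform default
    file_path.write_text(html_str, encoding='utf-8')
    return file_path


if __name__ == '__main__':
    # demo in a temporary directory so nothing is left behind
    with tempfile.TemporaryDirectory() as tmp:
        saved = save_page('<html></html>', '李毅', 1, out_dir=tmp)
        print(saved.exists())
```

Returning the path makes it easy for the caller to log or check what was written.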

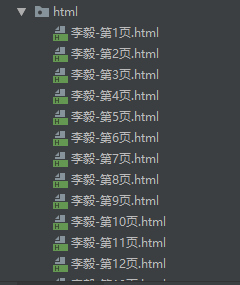

The crawl results are as follows: