Installing Oracle 10g RAC on Linux with ASM

1 Software preparation

10201_database_linux_x86_64.cpio: Oracle 10.2.0.1 database software

10201_clusterware_linux_x86_64.cpio.gz: Oracle 10.2.0.1 Clusterware software

p8202632_10205_Linux-x86-64.zip: Oracle 10.2.0.5 patch set

2 System environment preparation

| Hostname | IP addresses | Operating system |
| --- | --- | --- |
| rac1 | eth0 public 172.44.2.18, eth1 private 172.44.4.18, VIP 172.44.2.180 | CentOS 5.8 x86_64 |
| rac2 | eth0 public 172.44.2.22, eth1 private 172.44.4.22, VIP 172.44.2.220 | CentOS 5.8 x86_64 |

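3 Installation

3.1 Installation prerequisites

This installation deploys Oracle 10g RAC with ASM on CentOS 5.8 virtual machines running on ESXi 5.5.

3.1.1 Configuring shared disks in ESXi

1. In ESXi, add five new disks to the virtual machine: one for the OCR, one for the voting disk, and the remaining three as data disks. Set the SCSI bus sharing policy of the new SCSI controller to Virtual. The disks must be thick provisioned.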

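3.2 Installing the database software dependencies

Use the installation image as a local yum repository and install the packages Oracle requires: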

yum -y install binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel elfutils-libelf-devel-static gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers kernel-headers ksh libaio libaio-devel libgcc libgomp libstdc++ libstdc++-devel make sysstat unixODBC unixODBC-devel libXp

3.3 Configuring kernel parameters

    # vim /etc/sysctl.conf
    kernel.shmall = 17179869184
    kernel.shmmni = 4096
    kernel.sem = 250 32000 100 128
    fs.file-max = 65536
    net.ipv4.ip_local_port_range = 1024 65000
    net.core.rmem_default = 1048576
    net.core.rmem_max = 1048576
    net.core.wmem_default = 262144
    net.core.wmem_max = 262144
    # sysctl -p
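Before running sysctl -p, it can help to confirm that every expected key really is set in the file. The following is a minimal sketch and not part of the original procedure; the function names sysctl_conf_value and check_sysctl_conf are hypothetical helpers introduced here for illustration.

```shell
#!/bin/sh
# Hypothetical helpers (not from the original guide) for inspecting a
# sysctl.conf-style file before applying it with `sysctl -p`.

# Print the value assigned to KEY in FILE. Prints nothing if KEY is absent
# or only appears in a comment. The last assignment wins.
sysctl_conf_value() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }               # skip comment lines
        index($0, "=") == 0 { next }      # skip lines without an assignment
        {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            gsub(/[ \t]/, "", k)          # key: drop all blanks
            sub(/^[ \t]+/, "", v)         # value: trim leading blanks
            sub(/[ \t]+$/, "", v)         # value: trim trailing blanks
            if (k == key) val = v
        }
        END { if (val != "") print val }
    ' "$1"
}

# Print "missing: KEY" for every KEY not set in FILE.
# Returns 1 if at least one key is missing, 0 otherwise.
check_sysctl_conf() {
    file=$1
    shift
    status=0
    for key in "$@"; do
        if [ -z "$(sysctl_conf_value "$file" "$key")" ]; then
            echo "missing: $key"
            status=1
        fi
    done
    return $status
}

# Example (run on each node):
#   check_sysctl_conf /etc/sysctl.conf kernel.shmall kernel.shmmni kernel.sem fs.file-max
```

The check only reads the file, so it is safe to run repeatedly on both nodes.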

3.4 Configuring user limits

    # vim /etc/security/limits.conf
    oracle soft nproc 2047
    oracle hard nproc 16384
    oracle soft nofile 1024
    oracle hard nofile 65536

3.5 Configuring /etc/pam.d/login

    # vim /etc/pam.d/login
    session required pam_limits.so

3.6 Configuring time synchronization between the two nodes

    # /usr/sbin/ntpdate 172.44.3.101 && /sbin/hwclock -w
    # crontab -e
    * * * * * (/usr/sbin/ntpdate 172.44.3.101 && /sbin/hwclock -w) &> /dev/null
    # vim /etc/modprobe.conf
    options hangcheck-timer hangcheck_tick=30 hangcheck_margin=180
    # modprobe -v hangcheck-timer

3.7 Creating the user and groups

    # groupadd -g 1000 oinstall
    # groupadd -g 1001 dba
    # useradd -g oinstall -G dba oracle

3.8 Configuring /etc/hosts

    # vim /etc/hosts
    ## Add the following entries
    172.44.2.18 rac1
    172.44.2.22 rac2
    172.44.4.18 rac1priv
    172.44.4.22 rac2priv
    172.44.2.180 rac1vip
    172.44.2.220 rac2vip
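Because both nodes need identical entries, a quick consistency check can catch a typo before the installer does. The sketch below is an illustration only; hosts_has_entry and check_rac_hosts are hypothetical names, and the address list is simply the six entries shown above.

```shell
#!/bin/sh
# Hypothetical helpers (not from the original guide).

# Succeed if FILE contains a non-comment line mapping IP to NAME.
hosts_has_entry() {
    awk -v ip="$2" -v name="$3" '
        /^[ \t]*#/ { next }
        $1 == ip {
            for (i = 2; i <= NF; i++) {
                if (substr($i, 1, 1) == "#") break   # stop at a trailing comment
                if ($i == name) found = 1
            }
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}

# Check the six entries used in this guide against FILE (default /etc/hosts).
# Prints "missing: IP NAME" for each absent entry and returns 1 if any is absent.
check_rac_hosts() {
    file=${1:-/etc/hosts}
    rc=0
    while read -r ip name; do
        if ! hosts_has_entry "$file" "$ip" "$name"; then
            echo "missing: $ip $name"
            rc=1
        fi
    done <<EOF
172.44.2.18 rac1
172.44.2.22 rac2
172.44.4.18 rac1priv
172.44.4.22 rac2priv
172.44.2.180 rac1vip
172.44.2.220 rac2vip
EOF
    return $rc
}

# Example (run on each node):
#   check_rac_hosts /etc/hosts
```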

3.9 Configuring SSH mutual trust between the two nodes

Run on node 1:

    [root@rac1 ~]# su - oracle
    [oracle@rac1 ~]$ /usr/bin/ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/oracle/.ssh/id_rsa):
    Created directory '/home/oracle/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/oracle/.ssh/id_rsa.
    Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
    The key fingerprint is:
    f1:e5:45:34:68:d5:93:d6:af:bf:ee:d4:d8:dd:e5:97 oracle@rac1

Run on node 2:

    [root@rac2 ~]# su - oracle
    [oracle@rac2 ~]$ /usr/bin/ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/oracle/.ssh/id_rsa):
    Created directory '/home/oracle/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/oracle/.ssh/id_rsa.
    Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
    The key fingerprint is:
    e2:ce:11:97:04:d7:05:33:56:c6:aa:0c:89:c8:1c:c2 oracle@rac2
    [oracle@rac2 ~]$

Run on both node 1 and node 2:

    [oracle@rac2 ~]$ cd .ssh/
    [oracle@rac2 .ssh]$ cat id_rsa.pub > authorized_keys
    [oracle@rac2 .ssh]$ vim authorized_keys

Copy the contents of node 1's authorized_keys file into node 2's authorized_keys file, and the contents of node 2's authorized_keys file into node 1's. Each key must stay on a single line. Afterwards the file on both nodes holds both public keys:

    [oracle@rac2 .ssh]$ vim authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA4+8rN3x69Exzx5Y+8POGESZUGS2vew8iHgXRhEk1yI4vquENFmxZinTRsx+tlp2i7fpsIjgkPTqG4Z5UL4E6RYKSeOFJa9qy1VdfQnH6oO0A8fr0qaSrwqy3VzwsV6pFU5Rd0l+y/P7aMCrrWbIqboWdiuAj9ge14jRygY+ip/MwcVPgoXwx5HBzb7Ig7R73mEHAmAXPjoa8PabZmrvBKYt3o0hDAqUlURRV/hA1UPMJOnLP7gfZHEF3g4PiYux8rLIiMZGVSpXFpk8H9yvXqqhJPW780K4Qp15MLRwJW8kMMqDl/pRwwvfNZkgd8ZCtSiAnshtxGWk/VvnauEqI9w== oracle@rac2
    ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA0JKof7JLP1/P2Yk0RbflGGihNArnRYt1dOSDgdllNULAEyA0X8I7IKV4rwkqXZkfEwirYKCtkdeOg56S/qddbpOakJ1ibOHmrcavhfYeIyK6/fPon1TuJESuTdWcvk3H8XYergVTtWKmWoHs6xzL+Ms1dIUeywfg/7liWxprTpuyTW6kUB4Y7ilcxumVTKkUGs1oLWCN7xJbGKUV+yzopGeDApK2IhdSKZeDzSJCeeagGNzh+K9r6tuoLdY/w5ahvQc8Rw9EKO2+Kv1P+lvEIhE6qsxTotJRQsO+hLVYT/U1dCMLjXkL0hm0C6ARsAh5s91xovxkmQ5Bc62svHnFGQ== oracle@rac1

Test SSH mutual trust by running the following on node 1 and on node 2:

    [oracle@rac2 ~]$ ssh rac1
    Last login: Wed Jan 18 17:53:09 2017 from rac1priv
    [oracle@rac1 ~]$ exit
    logout
    Connection to rac1 closed.
    [oracle@rac2 ~]$ ssh rac2
    The authenticity of host 'rac2 (172.44.2.22)' can't be established.
    RSA key fingerprint is 01:bc:86:0e:77:04:41:9d:1d:ee:7d:ff:12:bb:8b:88.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'rac2,172.44.2.22' (RSA) to the list of known hosts.
    [oracle@rac2 ~]$ exit
    logout
    Connection to rac2 closed.
    [oracle@rac2 ~]$ ssh rac1priv
    The authenticity of host 'rac1priv (172.44.4.18)' can't be established.
    RSA key fingerprint is 01:bc:86:0e:77:04:41:9d:1d:ee:7d:ff:12:bb:8b:88.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'rac1priv,172.44.4.18' (RSA) to the list of known hosts.
    Last login: Wed Jan 18 17:54:06 2017 from rac2
    [oracle@rac1 ~]$ exit
    logout
    Connection to rac1priv closed.
    [oracle@rac2 ~]$ ssh rac2priv
    The authenticity of host 'rac2priv (172.44.4.22)' can't be established.
    RSA key fingerprint is 01:bc:86:0e:77:04:41:9d:1d:ee:7d:ff:12:bb:8b:88.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'rac2priv,172.44.4.22' (RSA) to the list of known hosts.
    Last login: Wed Jan 18 17:54:13 2017 from rac2
    [oracle@rac2 ~]$
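The key exchange and the login test in this section could also be scripted. The following is a rough sketch under stated assumptions: add_key_once and check_ssh_trust are hypothetical helper names, and the path /tmp/id_rsa.pub.from_other_node in the usage comment is a made-up location for a public key copied over from the peer node.

```shell
#!/bin/sh
# Hypothetical helpers (not from the original guide).

# Append KEY_LINE to AUTH_FILE unless an identical line is already present,
# so the function can be re-run without creating duplicates.
add_key_once() {
    auth_file=$1
    key_line=$2
    touch "$auth_file"
    chmod 600 "$auth_file"
    if ! grep -qxF -- "$key_line" "$auth_file"; then
        printf '%s\n' "$key_line" >> "$auth_file"
    fi
}

# Try a non-interactive login to each host. BatchMode=yes makes ssh fail
# instead of prompting, so a host whose key has not been accepted yet
# (or that still asks for a password) is reported as FAILED.
check_ssh_trust() {
    rc=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            rc=1
        fi
    done
    return $rc
}

# Example (as the oracle user, on each node):
#   add_key_once ~/.ssh/authorized_keys "$(cat /tmp/id_rsa.pub.from_other_node)"
#   check_ssh_trust rac1 rac2 rac1priv rac2priv
```

Note that check_ssh_trust only passes once each host name has been accepted into known_hosts, which is what the interactive yes answers in the transcript above accomplish.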

3.10 Configuring environment variables for the oracle user

On node 1:

    [oracle@rac1 ~]$ vim .bash_profile
    # .bash_profile
    # Get the aliases and functions
    if [ -f ~/.bashrc ]; then
        . ~/.bashrc
    fi
    # User specific environment and startup programs
    PATH=$PATH:$HOME/bin
    export PATH
    ORACLE_BASE=/u01/app/oracle
    export ORA_CRS_HOME=$ORACLE_BASE/product/10.2.0/crs
    export ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1
    export ORACLE_SID=test1
    export PATH=$PATH:$ORA_CRS_HOME/bin:$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:.
    alias sqlplus='rlwrap sqlplus'   # oracle sqlplus
    alias rman='rlwrap rman'         # oracle rman
    alias ggsci='rlwrap ggsci'       # oracle goldengate
    stty erase ^h
    export NLS_LANG=AMERICAN_AMERICA.UTF8
    export NLS_DATE_FORMAT='yyyymmdd hh24:mi:ss'

On node 2 (identical except for ORACLE_SID=test2):

    [oracle@rac2 ~]$ vim .bash_profile
    # .bash_profile
    # Get the aliases and functions
    if [ -f ~/.bashrc ]; then
        . ~/.bashrc
    fi
    # User specific environment and startup programs
    PATH=$PATH:$HOME/bin
    ORACLE_BASE=/u01/app/oracle
    export ORA_CRS_HOME=$ORACLE_BASE/product/10.2.0/crs
    export ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1
    export ORACLE_SID=test2
    export PATH=$PATH:$ORA_CRS_HOME/bin:$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:.
    alias sqlplus='rlwrap sqlplus'   # oracle sqlplus
    alias rman='rlwrap rman'         # oracle rman
    alias ggsci='rlwrap ggsci'       # oracle goldengate
    stty erase ^h
    export NLS_LANG=AMERICAN_AMERICA.UTF8
    export NLS_DATE_FORMAT='yyyymmdd hh24:mi:ss'
    export PATH
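The two profiles differ only in the instance name, so the Oracle-specific part could be generated from the node number. This is a sketch for illustration, not the author's method: oracle_env_for_node is a hypothetical function, the database name defaults to test as in this guide, and the generated PATH deliberately leaves out the trailing "." that the profiles above include.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide).

# Print the Oracle environment settings for node number NODE.
# The instance name is DB_NAME followed by NODE (for example test1, test2).
# Variable references such as $ORACLE_BASE are emitted literally so that
# they are expanded when the profile is sourced, not when it is generated.
oracle_env_for_node() {
    node=$1
    db_name=${2:-test}
    cat <<EOF
export ORACLE_BASE=/u01/app/oracle
export ORA_CRS_HOME=\$ORACLE_BASE/product/10.2.0/crs
export ORACLE_HOME=\$ORACLE_BASE/product/10.2.0/db_1
export ORACLE_SID=${db_name}${node}
export PATH=\$PATH:\$ORA_CRS_HOME/bin:\$ORACLE_HOME/bin:\$ORACLE_HOME/OPatch
EOF
}

# Example (as the oracle user on node 2):
#   oracle_env_for_node 2 >> ~/.bash_profile
```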

3.11 Configuring the shared disks

Partition the disks on node 1:

    [root@rac1 network-scripts]# fdisk -l
    Disk /dev/sda: 32.2 GB, 32212254720 bytes
    255 heads, 63 sectors/track, 3916 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1          13      104391   83  Linux
    /dev/sda2              14        1057     8385930   82  Linux swap / Solaris
    /dev/sda3            1058        3916    22964917+  8e  Linux LVM
    Disk /dev/sdb: 524 MB, 524288000 bytes
    64 heads, 32 sectors/track, 500 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Disk /dev/sdb doesn't contain a valid partition table
    Disk /dev/sdc: 524 MB, 524288000 bytes
    64 heads, 32 sectors/track, 500 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Disk /dev/sdc doesn't contain a valid partition table
    Disk /dev/sdd: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Disk /dev/sdd doesn't contain a valid partition table
    Disk /dev/sde: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Disk /dev/sde doesn't contain a valid partition table
    Disk /dev/sdf: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Disk /dev/sdf doesn't contain a valid partition table
    [root@rac1 network-scripts]# df -h
    Filesystem            Size  Used Avail Use% Mounted on
    /dev/mapper/VolGroup00-LogVol00
                           22G  2.8G   18G  14% /
    /dev/sda1              99M   13M   81M  14% /boot
    tmpfs                 2.0G     0  2.0G   0% /dev/shm
    /dev/hdc              4.3G  4.3G     0 100% /mnt
    [root@rac1 network-scripts]# fdisk /dev/sdb
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): Value out of range.
    Partition number (1-4): Value out of range.
    Partition number (1-4): 1
    First cylinder (1-500, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-500, default 500):
    Using default value 500
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table.
    The new table will be used at the next reboot.
    Syncing disks.
    [root@rac1 network-scripts]# fdisk /dev/sdc
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-500, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-500, default 500):
    Using default value 500
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table.
    The new table will be used at the next reboot.
    Syncing disks.
    [root@rac1 network-scripts]# fdisk /dev/sdd
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.
    The number of cylinders for this disk is set to 1305.
    There is nothing wrong with that, but this is larger than 1024,
    and could in certain setups cause problems with:
    1) software that runs at boot time (e.g., old versions of LILO)
    2) booting and partitioning software from other OSs
       (e.g., DOS FDISK, OS/2 FDISK)
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-1305, default 1305):
    Using default value 1305
    Command (m for help):
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table.
    The new table will be used at the next reboot.
    Syncing disks.
    [root@rac1 network-scripts]# fdisk /dev/sde
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.
    The number of cylinders for this disk is set to 1305.
    There is nothing wrong with that, but this is larger than 1024,
    and could in certain setups cause problems with:
    1) software that runs at boot time (e.g., old versions of LILO)
    2) booting and partitioning software from other OSs
       (e.g., DOS FDISK, OS/2 FDISK)
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-1305, default 1305):
    Using default value 1305
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table.
    The new table will be used at the next reboot.
    Syncing disks.
    [root@rac1 network-scripts]# fdisk /dev/sdf
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel. Changes will remain in memory only,
    until you decide to write them. After that, of course, the previous
    content won't be recoverable.
    The number of cylinders for this disk is set to 1305.
    There is nothing wrong with that, but this is larger than 1024,
    and could in certain setups cause problems with:
    1) software that runs at boot time (e.g., old versions of LILO)
    2) booting and partitioning software from other OSs
       (e.g., DOS FDISK, OS/2 FDISK)
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-1305, default 1305):
    Using default value 1305
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table.
    The new table will be used at the next reboot.
    Syncing disks.
    # partprobe
    [root@rac1 network-scripts]# fdisk -l
    Disk /dev/sda: 32.2 GB, 32212254720 bytes
    255 heads, 63 sectors/track, 3916 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1          13      104391   83  Linux
    /dev/sda2              14        1057     8385930   82  Linux swap / Solaris
    /dev/sda3            1058        3916    22964917+  8e  Linux LVM
    Disk /dev/sdb: 524 MB, 524288000 bytes
    64 heads, 32 sectors/track, 500 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1         500      511984   83  Linux
    Disk /dev/sdc: 524 MB, 524288000 bytes
    64 heads, 32 sectors/track, 500 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1               1         500      511984   83  Linux
    Disk /dev/sdd: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1               1        1305    10482381   83  Linux
    Disk /dev/sde: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sde1               1        1305    10482381   83  Linux
    Disk /dev/sdf: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdf1               1        1305    10482381   83  Linux
    [root@rac1 network-scripts]#

Run on both node 1 and node 2:

    [root@rac1 network-scripts]# vim /etc/udev/rules.d/60-raw.rules
    ACTION=="add", KERNEL=="sdc3",RUN+="/bin/raw /dev/raw/raw6 %N"
    # Enter raw device bindings here.
    #
    # An example would be:
    #   ACTION=="add", KERNEL=="sda", RUN+="/bin/raw /dev/raw/raw1 %N"
    # to bind /dev/raw/raw1 to /dev/sda, or
    #   ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="1", RUN+="/bin/raw /dev/raw/raw2 %M %m"
    # to bind /dev/raw/raw2 to the device with major 8, minor 1.
    ACTION=="add", KERNEL=="sdb1",RUN+="/bin/raw /dev/raw/raw1 %N"
    ACTION=="add", KERNEL=="sdc1",RUN+="/bin/raw /dev/raw/raw2 %N"
    ACTION=="add", KERNEL=="sdd1",RUN+="/bin/raw /dev/raw/raw3 %N"
    ACTION=="add", KERNEL=="sde1",RUN+="/bin/raw /dev/raw/raw4 %N"
    ACTION=="add", KERNEL=="sdf1",RUN+="/bin/raw /dev/raw/raw5 %N"
    ACTION=="add",KERNEL=="raw[1-5]", OWNER="oracle", GROUP="oinstall", MODE="660"
    [root@rac1 network-scripts]# start_udev
    Starting udev: [ OK ]
    [root@rac1 network-scripts]# raw -qa
    /dev/raw/raw1: bound to major 8, minor 17
    /dev/raw/raw2: bound to major 8, minor 33
    /dev/raw/raw3: bound to major 8, minor 49
    /dev/raw/raw4: bound to major 8, minor 65
    /dev/raw/raw5: bound to major 8, minor 81
    [root@rac1 network-scripts]#
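Since the same rules must exist on both nodes, generating them from the partition list avoids copy-and-paste mistakes. The sketch below is illustrative only: gen_raw_rules is a hypothetical helper, it numbers the raw devices in argument order starting at 1, and it assumes at most nine partitions because the ownership rule uses a single-digit range.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide).

# Print one raw-binding udev rule per partition name given as an argument,
# followed by one rule that sets owner, group and mode on the raw devices.
# Accepts between 1 and 9 partitions; returns 1 otherwise.
gen_raw_rules() {
    if [ "$#" -lt 1 ] || [ "$#" -gt 9 ]; then
        echo "usage: gen_raw_rules PART [PART ...] (1 to 9 partitions)" >&2
        return 1
    fi
    n=0
    for part in "$@"; do
        n=$((n + 1))
        printf 'ACTION=="add", KERNEL=="%s", RUN+="/bin/raw /dev/raw/raw%d %%N"\n' "$part" "$n"
    done
    printf 'ACTION=="add", KERNEL=="raw[1-%d]", OWNER="oracle", GROUP="oinstall", MODE="660"\n' "$n"
}

# Example: print the rules for the five partitions created above,
# review them, then paste them into /etc/udev/rules.d/60-raw.rules.
#   gen_raw_rules sdb1 sdc1 sdd1 sde1 sdf1
```

The function only prints text; writing the rules file and running start_udev remain manual steps, as in the procedure above.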

3.12 Creating the installation directories

Run on both node 1 and node 2:

    # mkdir -p /u01/app/oracle/product/10.2.0/crs
    # mkdir -p /u01/app/oracle/product/10.2.0/db_1
    # mkdir -p /u01/oradata
    # chown -R oracle.oinstall /u01

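3.13 Extracting the Oracle Clusterware installation package

Oracle Clusterware must be installed before the Oracle database software. The download is gzip-compressed, so decompress it before extracting the cpio archive:

    [root@rac1 src]# gunzip 10201_clusterware_linux_x86_64.cpio.gz
    [root@rac1 src]# cpio -idcmv < 10201_clusterware_linux_x86_64.cpio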

3.14 Installing the Clusterware software

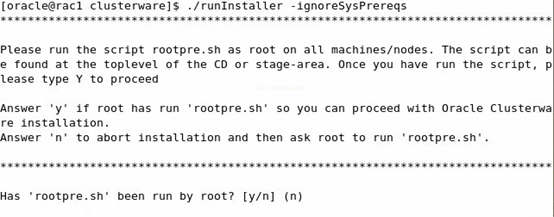

1. Run on both node 1 and node 2:

# /usr/local/src/clusterware/rootpre/rootpre.sh

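2. Run the following command:

    $ ./runInstaller -ignoreSysPrereqs

3. Select the CRS home path. Be careful not to select the database home.

4. Check the installation prerequisites.

5. Enter the information for the other node.

6. Confirm that the information for both nodes is correct, then click Next.

7. Change the usage of the network interfaces.

8. Select the OCR location.

9. Select the voting disk.

10. Start the installation.

11. Wait for the installation progress bar to finish.

12. Run the scripts as root on both nodes.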

Run on node 1:

    [root@rac1 ~]# /home/oracle/oraInventory/orainstRoot.sh

Run on node 2:

    [root@rac2 ~]# /home/oracle/oraInventory/orainstRoot.sh
    Changing permissions of /home/oracle/oraInventory to 770.
    Changing groupname of /home/oracle/oraInventory to oinstall.
    The execution of the script is complete
    [root@rac2 ~]#

Run on node 1:

    [root@rac1 ~]# /u01/app/oracle/product/10.2.0/crs/root.sh
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    Checking to see if Oracle CRS stack is already configured
    /etc/oracle does not exist. Creating it now.
    Setting the permissions on OCR backup directory
    Setting up NS directories
    Oracle Cluster Registry configuration upgraded successfully
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    Successfully accumulated necessary OCR keys.
    Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
    node <nodenumber>: <nodename> <private interconnect name> <hostname>
    node 1: rac1 rac1priv rac1
    node 2: rac2 rac2priv rac2
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    Now formatting voting device: /dev/raw/raw2
    Format of 1 voting devices complete.
    Startup will be queued to init within 90 seconds.
    Adding daemons to inittab
    Expecting the CRS daemons to be up within 600 seconds.
    CSS is active on these nodes.
        rac1
    CSS is inactive on these nodes.
        rac2
    Local node checking complete.
    Run root.sh on remaining nodes to start CRS daemons.
    [root@rac1 ~]#

Run on node 2. The script ends with an error when it launches vipca:

    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/crs/root.sh
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    Checking to see if Oracle CRS stack is already configured
    /etc/oracle does not exist. Creating it now.
    Setting the permissions on OCR backup directory
    Setting up NS directories
    Oracle Cluster Registry configuration upgraded successfully
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    clscfg: EXISTING configuration version 3 detected.
    clscfg: version 3 is 10G Release 2.
    Successfully accumulated necessary OCR keys.
    Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
    node <nodenumber>: <nodename> <private interconnect name> <hostname>
    node 1: rac1 rac1priv rac1
    node 2: rac2 rac2priv rac2
    clscfg: Arguments check out successfully.
    NO KEYS WERE WRITTEN. Supply -force parameter to override.
    -force is destructive and will destroy any previous cluster
    configuration.
    Oracle Cluster Registry for cluster has already been initialized
    Startup will be queued to init within 90 seconds.
    Adding daemons to inittab
    Expecting the CRS daemons to be up within 600 seconds.
    CSS is active on these nodes.
        rac1
        rac2
    CSS is active on all nodes.
    Waiting for the Oracle CRSD and EVMD to start
    Oracle CRS stack installed and running under init(1M)
    Running vipca(silent) for configuring nodeapps
    /u01/app/oracle/product/10.2.0/crs/jdk/jre//bin/java: error while loading shared libraries: libpthread.so.0: cannot open shared object file: No such file or directory
    [root@rac2 ~]#

Note: to avoid this error, complete the vipca edit and the oifcfg steps below before running this script.

Run on node 1:

$ cd /u01/app/oracle/product/10.2.0/crs/bin

$ vim vipca

Add the line unset LD_ASSUME_KERNEL to the file.
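The edit can also be made without opening an editor. The following is a minimal sketch under a stated assumption: the vipca script contains a line that exports LD_ASSUME_KERNEL, and placing the unset directly after that line is enough to cancel it. The function name patch_ld_assume_kernel is hypothetical, and the output goes to stdout so the original file is never modified in place.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide).

# Print FILE with "unset LD_ASSUME_KERNEL" added after every line that
# exports LD_ASSUME_KERNEL. If the file already contains the unset line,
# it is printed unchanged, so the function is safe to apply twice.
patch_ld_assume_kernel() {
    file=$1
    if grep -q 'unset LD_ASSUME_KERNEL' "$file"; then
        cat "$file"
    else
        awk '
            { print }
            /export[ \t]+LD_ASSUME_KERNEL/ { print "unset LD_ASSUME_KERNEL" }
        ' "$file"
    fi
}

# Example (in the CRS bin directory; keep a backup of the original):
#   cp vipca vipca.bak
#   patch_ld_assume_kernel vipca.bak > vipca
```

Review the result with diff vipca.bak vipca before running vipca, since the exact layout of the script is an assumption here.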

Run on node 1:

    [root@rac1 bin]# ./oifcfg setif -global eth0/172.44.2.0:public
    [root@rac1 bin]# ./oifcfg setif -global eth1/172.44.4.0:cluster_interconnect
    [root@rac1 bin]# ./oifcfg getif
    eth0 172.44.2.0 global public
    eth1 172.44.4.0 global cluster_interconnect
    [root@rac1 bin]#

Run on node 1:

    # cd /u01/app/oracle/product/10.2.0/crs/bin
    # ./vipca

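1. Click Next.

2. Click Next.

3. Enter the VIP information for both nodes.

4. Confirm that the VIP information for both nodes is correct.

5. Wait for the configuration to complete.

6. Return to the Clusterware installer window and click OK.

7. If the configuration has a problem, an error appears after you click OK.

8. The installation is complete.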

3.15 Installing the database software

Run on node 1:

/usr/local/src/database/runInstaller

- Click Next.

- Select Enterprise Edition.

- Note that the home selected here is the DB home.

- Select both nodes.

- After the checks pass, click Next.

- Choose to install the software only.

- Click Install.

- Run the script on both nodes:

    [root@rac1 bin]# /u01/app/oracle/product/10.2.0/db_1/root.sh
    Running Oracle10 root.sh script...
    The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME=  /u01/app/oracle/product/10.2.0/db_1
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
       Copying dbhome to /usr/local/bin ...
       Copying oraenv to /usr/local/bin ...
       Copying coraenv to /usr/local/bin ...
    Creating /etc/oratab file...
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root.sh script.
    Now product-specific root actions will be performed.
    [root@rac1 bin]#
    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/db_1/root.sh
    Running Oracle10 root.sh script...
    The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME=  /u01/app/oracle/product/10.2.0/db_1
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
       Copying dbhome to /usr/local/bin ...
       Copying oraenv to /usr/local/bin ...
       Copying coraenv to /usr/local/bin ...
    Creating /etc/oratab file...
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root.sh script.
    Now product-specific root actions will be performed.
    [root@rac2 ~]#

The installation is complete.

3.16 Configuring the listener

1. Run on node 1:

$ netca

2. Select Cluster configuration.

3. Select both nodes.

4. Click Next.

5. Click Next.

6. Click Next.

7. Click Next.

8. Click Next.

9. Click Next.

10. Finish the configuration.

3.17 Creating the database

1. Run on node 1:

$ dbca

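2. Click Next.

3. Select Create a Database.

4. Select both nodes.

5. Click Next.

6. Enter the database name.

7. Click Next.

8. Set the passwords.

9. Select ASM as the storage option.

10. Configure the ASM parameters.

11. Configure the ASM disks.

12. Configure the ASM disk groups.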

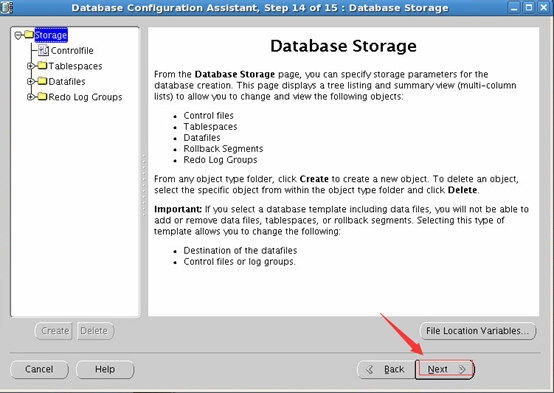

13. Select the location for the data files.

14. Click Next.

15. Click Next.

16. Click Next.

17. Configure the database character set.

18. Click Next.

19. Click Next.

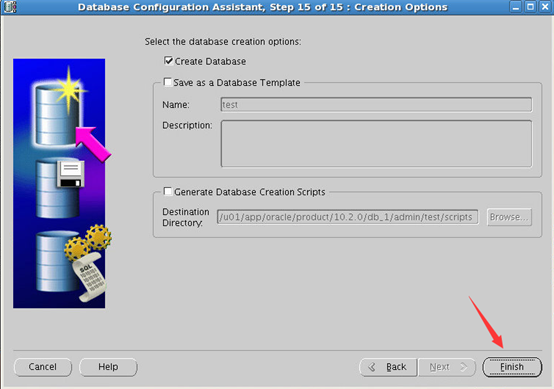

20. Click Finish.

21. Click OK to start creating the database.

22. The creation is complete. Check the cluster resources:

    [oracle@rac1 ~]$ crs_stat -t
    Name           Type           Target    State     Host
    ------------------------------------------------------------
    ora....SM1.asm application    ONLINE    ONLINE    rac1
    ora....C1.lsnr application    ONLINE    ONLINE    rac1
    ora.rac1.gsd   application    ONLINE    ONLINE    rac1
    ora.rac1.ons   application    ONLINE    ONLINE    rac1
    ora.rac1.vip   application    ONLINE    ONLINE    rac1
    ora....SM2.asm application    ONLINE    ONLINE    rac2
    ora....C2.lsnr application    ONLINE    ONLINE    rac2
    ora.rac2.gsd   application    ONLINE    ONLINE    rac2
    ora.rac2.ons   application    ONLINE    ONLINE    rac2
    ora.rac2.vip   application    ONLINE    ONLINE    rac2
    ora.test.db    application    ONLINE    ONLINE    rac1
    ora....t1.inst application    ONLINE    ONLINE    rac1
    ora....t2.inst application    ONLINE    ONLINE    rac2
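With thirteen resources it is easy to miss one that is not running. A small filter over the crs_stat -t output, sketched below, lists only the resources whose State column is not ONLINE. The function name crs_not_online is hypothetical, and the sketch assumes the five-column layout shown above.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide).

# Read `crs_stat -t` output on stdin and print "NAME STATE" for every
# resource whose State (4th column) is not ONLINE. The header row and
# the dashed separator line are skipped.
crs_not_online() {
    awk '
        $1 == "Name" { next }
        /^-+$/ { next }
        NF >= 4 && $4 != "ONLINE" { print $1, $4 }
    '
}

# Example (as the oracle user):
#   crs_stat -t | crs_not_online
```

An empty result means every listed resource reports ONLINE.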

3.18 Upgrading CRS

Run on node 1:

    # unzip p8202632_10205_Linux-x86-64.zip
    # cd Disk1/

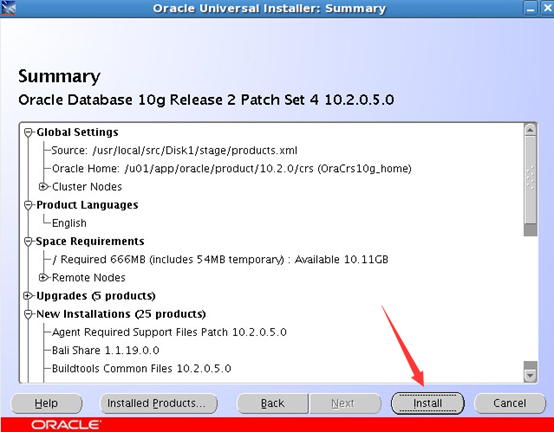

1. Click Next.

2. Click Next.

3. Click Next.

4. After the checks pass, click Next.

5. Click Install. When the installation finishes, run the following commands on both node 1 and node 2:

    [root@rac1 Disk1]# /u01/app/oracle/product/10.2.0/crs/bin/crsctl stop crs
    Stopping resources.
    Successfully stopped CRS resources
    Stopping CSSD.
    Shutting down CSS daemon.
    Shutdown request successfully issued.
    [root@rac1 Disk1]# /u01/app/oracle/product/10.2.0/crs/install/root102.sh
    Creating pre-patch directory for saving pre-patch clusterware files
    Completed patching clusterware files to /u01/app/oracle/product/10.2.0/crs
    Relinking some shared libraries.
    Relinking of patched files is complete.
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    Preparing to recopy patched init and RC scripts.
    Recopying init and RC scripts.
    Startup will be queued to init within 30 seconds.
    Starting up the CRS daemons.
    Waiting for the patched CRS daemons to start.
      This may take a while on some systems.
    .
    10205 patch successfully applied.
    clscfg: EXISTING configuration version 3 detected.
    clscfg: version 3 is 10G Release 2.
    Successfully deleted 1 values from OCR.
    Successfully deleted 1 keys from OCR.
    Successfully accumulated necessary OCR keys.
    Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
    node <nodenumber>: <nodename> <private interconnect name> <hostname>
    node 1: rac1 rac1priv rac1
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    clscfg -upgrade completed successfully
    Creating '/u01/app/oracle/product/10.2.0/crs/install/paramfile.crs' with data used for CRS configuration
    Setting CRS configuration values in /u01/app/oracle/product/10.2.0/crs/install/paramfile.crs
    [root@rac1 Disk1]#
    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/crs/bin/crsctl stop crs
    Stopping resources.
    Successfully stopped CRS resources
    Stopping CSSD.
    Shutting down CSS daemon.
    Shutdown request successfully issued.
    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/crs/install/root102.sh
    Creating pre-patch directory for saving pre-patch clusterware files
    Completed patching clusterware files to /u01/app/oracle/product/10.2.0/crs
    Relinking some shared libraries.
    Relinking of patched files is complete.
    WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
    WARNING: directory '/u01/app/oracle/product' is not owned by root
    WARNING: directory '/u01/app/oracle' is not owned by root
    WARNING: directory '/u01/app' is not owned by root
    WARNING: directory '/u01' is not owned by root
    Preparing to recopy patched init and RC scripts.
    Recopying init and RC scripts.
    Startup will be queued to init within 30 seconds.
    Starting up the CRS daemons.
    Waiting for the patched CRS daemons to start.
      This may take a while on some systems.
    .
    10205 patch successfully applied.
    clscfg: EXISTING configuration version 3 detected.
    clscfg: version 3 is 10G Release 2.
    Successfully deleted 1 values from OCR.
    Successfully deleted 1 keys from OCR.
    Successfully accumulated necessary OCR keys.
    Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
    node <nodenumber>: <nodename> <private interconnect name> <hostname>
    node 2: rac2 rac2priv rac2
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    clscfg -upgrade completed successfully
    Creating '/u01/app/oracle/product/10.2.0/crs/install/paramfile.crs' with data used for CRS configuration
    Setting CRS configuration values in /u01/app/oracle/product/10.2.0/crs/install/paramfile.crs
    [root@rac2 ~]#

Query the CRS version:

    [oracle@rac2 ~]$ crsctl query crs softwareversion
    CRS software version on node [rac2] is [10.2.0.5.0]

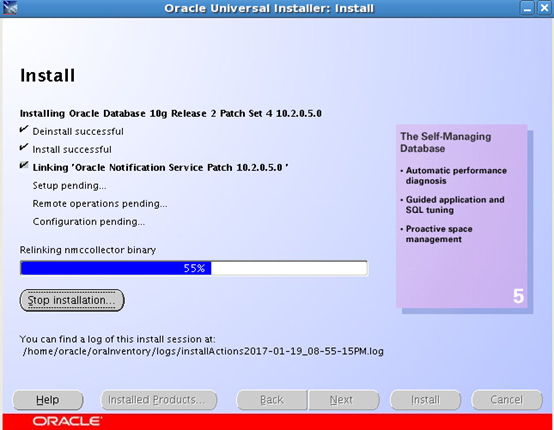

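3.19 Upgrading the database software

The database must be stopped before the database software is upgraded.

Run on both node 1 and node 2: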

# /u01/app/oracle/product/10.2.0/crs/bin/crsctl stop crs

1. Click Next.

2. Select the DB home.

3. Click Next.

4. Click Next.

5. Click Next.

6. Click Next.

7. Click Install.

8. Run on both node 1 and node 2:

    [root@rac1 Disk1]# /u01/app/oracle/product/10.2.0/db_1/root.sh
    Running Oracle 10g root.sh script...
    The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME=  /u01/app/oracle/product/10.2.0/db_1
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
    The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root.sh script.
    Now product-specific root actions will be performed.
    [root@rac1 Disk1]#
    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/db_1/root.sh
    Running Oracle 10g root.sh script...
    The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME=  /u01/app/oracle/product/10.2.0/db_1
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
    The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root.sh script.
    Now product-specific root actions will be performed.
    [root@rac2 ~]#

9. The installation is complete.

Run on both node 1 and node 2:

    [root@rac1 Disk1]# /u01/app/oracle/product/10.2.0/crs/bin/crsctl start crs
    Attempting to start CRS stack
    The CRS stack will be started shortly
    [root@rac1 Disk1]#
    [root@rac2 ~]# /u01/app/oracle/product/10.2.0/crs/bin/crsctl start crs
    Attempting to start CRS stack
    The CRS stack will be started shortly
    [root@rac2 ~]#
    [oracle@rac1 ~]$ crs_stat -t
    Name           Type           Target    State     Host
    ------------------------------------------------------------
    ora....SM1.asm application    ONLINE    ONLINE    rac1
    ora....C1.lsnr application    ONLINE    ONLINE    rac1
    ora.rac1.gsd   application    ONLINE    ONLINE    rac1
    ora.rac1.ons   application    ONLINE    ONLINE    rac1
    ora.rac1.vip   application    ONLINE    ONLINE    rac1
    ora....SM2.asm application    ONLINE    ONLINE    rac2
    ora....C2.lsnr application    ONLINE    ONLINE    rac2
    ora.rac2.gsd   application    ONLINE    ONLINE    rac2
    ora.rac2.ons   application    ONLINE    ONLINE    rac2
    ora.rac2.vip   application    ONLINE    ONLINE    rac2
    ora.test.db    application    ONLINE    OFFLINE
    ora....t1.inst application    ONLINE    OFFLINE
    ora....t2.inst application    ONLINE    OFFLINE
    [oracle@rac1 ~]$

As the output shows, the database and its instances stay OFFLINE: the database cannot start until it has been upgraded as well.

3.20 Upgrading the database

Run on node 1:

    [oracle@rac1 ~]$ cd /u01/app/oracle/product/10.2.0/db_1/dbs/
    [oracle@rac1 dbs]$ ls
    ab_+ASM1.dat  hc_+ASM1.dat  hc_test1.dat  init+ASM1.ora  initdw.ora  init.ora  inittest1.ora  orapw+ASM1  orapwtest1
    [oracle@rac1 dbs]$ ll
    total 56
    -rw-rw---- 1 oracle oinstall  1559 Jan 19 21:08 ab_+ASM1.dat
    -rw-rw---- 1 oracle oinstall  1552 Jan 19 21:09 hc_+ASM1.dat
    -rw-rw---- 1 oracle oinstall  1552 Jan 19 21:10 hc_test1.dat
    lrwxrwxrwx 1 oracle oinstall    61 Jan 18 21:20 init+ASM1.ora -> /u01/app/oracle/product/10.2.0/db_1/admin/+ASM/pfile/init.ora
    -rw-r--r-- 1 oracle oinstall 12920 May  3  2001 initdw.ora
    -rw-r----- 1 oracle oinstall  8385 Sep 11  1998 init.ora
    -rw-r----- 1 oracle oinstall    37 Jan 18 21:38 inittest1.ora
    -rw-r----- 1 oracle oinstall  1536 Jan 18 21:20 orapw+ASM1
    -rw-r----- 1 oracle oinstall  1536 Jan 18 21:26 orapwtest1
    [oracle@rac1 dbs]$ more inittest1.ora
    SPFILE='+DATADG/test/spfiletest.ora'
    [oracle@rac1 dbs]$

On both node 1 and node 2, log in to the instance with sqlplus and create a pfile from the spfile:

    [oracle@rac1 dbs]$ !sql
    sqlplus "/as sysdba"
    SQL*Plus: Release 10.2.0.5.0 - Production on Thu Jan 19 21:19:46 2017
    Copyright (c) 1982, 2010, Oracle.  All Rights Reserved.
    Connected to an idle instance.
    SQL> create pfile='/tmp/pfile.txt' from spfile='+DATADG/test/spfiletest.ora';
    File created.
    SQL>

On both node 1 and node 2, edit /tmp/pfile.txt and change *.cluster_database=true to *.cluster_database=false.
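The same change can be made with sed instead of an editor. This is a minimal sketch, assuming the generated pfile contains the line exactly as quoted above (lowercase true, no surrounding blanks); set_cluster_database_false is a hypothetical function name, and it writes to stdout rather than editing the file in place.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide).

# Print PFILE with the line "*.cluster_database=true" rewritten to
# "*.cluster_database=false". Every other line, including
# "*.cluster_database_instances=...", is passed through unchanged
# because the pattern is anchored at both ends of the line.
set_cluster_database_false() {
    sed 's/^\*\.cluster_database=true$/*.cluster_database=false/' "$1"
}

# Example:
#   set_cluster_database_false /tmp/pfile.txt > /tmp/pfile.new
#   mv /tmp/pfile.new /tmp/pfile.txt
```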

Open the database in upgrade mode and run the upgrade script. With cluster_database=false only one instance can mount the database at a time, so do this from a single node (node 1 here):

    [oracle@rac1 dbs]$ !sql
    sqlplus "/as sysdba"
    SQL*Plus: Release 10.2.0.5.0 - Production on Thu Jan 19 21:22:40 2017
    Copyright (c) 1982, 2010, Oracle.  All Rights Reserved.
    Connected to an idle instance.
    SQL> startup upgrade pfile='/tmp/pfile.txt';
    ORACLE instance started.
    Total System Global Area 1224736768 bytes
    Fixed Size                  2095896 bytes
    Variable Size             335545576 bytes
    Database Buffers          872415232 bytes
    Redo Buffers               14680064 bytes
    Database mounted.
    Database opened.
    SQL> @?/rdbms/admin/catupgrd.sql

After the upgrade, shut down the database and then start it again:

    SQL> shutdown immediate;
    Database closed.
    Database dismounted.
    ORACLE instance shut down.
    SQL> startup;
    ORACLE instance started.
    Total System Global Area 1224736768 bytes
    Fixed Size                  2095896 bytes
    Variable Size             335545576 bytes
    Database Buffers          872415232 bytes
    Redo Buffers               14680064 bytes
    Database mounted.
    Database

This completes the Oracle 10g RAC installation.