一、Kubernetes概述

1.1 Kubernetes是什么

-

Kubernetes是Google在2014年开源的一个容器集群管理系统,Kubernetes简称K8S。

-

K8S用于容器化应用程序的部署,扩展和管理。

-

K8S提供了容器编排,资源调度,弹性伸缩,部署管理,服务发现等一系列功能。

-

Kubernetes目标是让部署容器化应用简单高效。

1.2 Kubernetes特性

-

自我修复

-

在节点故障时重新启动失败的容器,替换和重新部署,保证预期的副本数量;杀死健康检查失败的容器,并且在未准备好之前不会处理客户端请求,确保线上服务不中断。

-

弹性伸缩

-

使用命令、UI或者基于CPU使用情况自动快速扩容和缩容应用程序实例,保证应用业务高峰并发时的高可用性;业务低峰时回收资源,以最小成本运行服务。

-

自动部署和回滚

-

K8S采用滚动更新策略更新应用,一次更新一个Pod,而不是同时删除所有Pod,如果更新过程中出现问题,将回滚更改,确保升级不受影响业务。

-

服务发现和负载均衡

-

K8S为多个容器提供一个统一访问入口(内部IP地址和一个DNS名称),并且负载均衡关联的所有容器,使得用户无需考虑容器IP问题。

-

机密和配置管理

-

管理机密数据和应用程序配置,而不需要把敏感数据暴露在镜像里,提高敏感数据安全性。并可以将一些常用的配置存储在K8S中,方便应用程序使用。

-

存储编排

-

挂载外部存储系统,无论是来自本地存储,公有云(如AWS),还是网络存储(如NFS、GlusterFS、Ceph)都作为集群资源的一部分使用,极大提高存储使用灵活性。

-

批处理

-

提供一次性任务,定时任务;满足批量数据处理和分析的场景。

1.3 Kubernetes集群架构与组件

1.4 Kubernetes集群组件介绍

1.4.1 Master组件

-

kube-apiserver

-

Kubernetes API,

集群的统一入口,各组件协调者,以RESTful API提供接口服务,所有对象资源的增删改查和监听操作都交给APIServer处理后再提交给Etcd存储。

-

kube-controller-manager

-

处理集群中常规后台任务,一个资源对应一个控制器,而ControllerManager就是负责管理这些控制器的。

-

kube-scheduler

-

根据调度算法为新创建的Pod选择一个Node节点,可以任意部署,可以部署在同一个节点上,也可以部署在不同的节点上。

-

etcd

-

分布式键值存储系统。用于保存集群状态数据,比如Pod、Service等对象信息。

1.4.2 Node组件

-

kubelet

-

kubelet是Master在Node节点上的Agent,管理本机运行容器的生命周期,比如创建容器、Pod挂载数据卷、下载secret、获取容器和节点状态等工作。kubelet将每个Pod转换成一组容器。

-

kube-proxy

-

在Node节点上实现Pod网络代理,维护网络规则和四层负载均衡工作。

-

docker或rocket

-

容器引擎,运行容器。

1.5 Kubernetes 核心概念

-

Pod

-

最小部署单元

-

一组容器的集合

-

一个Pod中的容器共享网络命名空间

-

Pod是短暂的

-

Controllers

-

ReplicaSet :确保预期的Pod副本数量

-

Deployment :无状态应用部署

-

StatefulSet :有状态应用部署

-

DaemonSet :确保所有Node运行同一个Pod

-

Job :一次性任务

-

Cronjob :定时任务

更高级层次对象,部署和管理Pod

-

Service

- 防止Pod失联

- 定义一组Pod的访问策略

-

Label :标签,附加到某个资源上,用于关联对象、查询和筛选

-

Namespaces:命名空间,将对象逻辑上隔离

-

Annotations :注释

二、kubeadm 快速部署K8S集群

2.1 kubernetes 官方提供的三种部署方式

-

minikube

Minikube是一个工具,可以在本地快速运行一个单点的Kubernetes,仅用于尝试Kubernetes或日常开发的用户使用。部署地址:https://kubernetes.io/docs/setup/minikube/

-

kubeadm

Kubeadm也是一个工具,提供kubeadm init和kubeadm join,用于快速部署Kubernetes集群。部署地址:https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

-

二进制包

推荐,从官方下载发行版的二进制包,手动部署每个组件,组成Kubernetes集群。下载地址:https://github.com/kubernetes/kubernetes/releases

2.2 安装kubeadm环境准备

以下操作,在三台节点都执行

2.2.1 环境需求

环境:centos 7.4 +

硬件需求:CPU>=2c ,内存>=2G

2.2.2 环境角色

| IP | 角色 | 安装软件 |

|---|---|---|

| 192.168.73.138 | k8s-Master | kube-apiserver kube-schduler kube-controller-manager docker flannel kubelet |

| 192.168.73.139 | k8s-node01 | kubelet kube-proxy docker flannel |

| 192.168.73.140 | k8s-node01 | kubelet kube-proxy docker flannel |

2.2.3 环境初始化

PS : 以下所有操作,在三台节点全部执行

1、关闭防火墙及selinux

$ sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0

2、关闭 swap 分区

3、分别在192.168.73.138、192.168.73.139、192.168.73.140上设置主机名及配置hosts

$ hostnamectl set-hostname k8s-master(192.168.73.138主机打命令)

$ hostnamectl set-hostname k8s-node01(192.168.73.139主机打命令)$ hostnamectl set-hostname k8s-node02 (192.168.73.140主机打命令)4、在所有主机上上添加如下命令

$ cat >> /etc/hosts << EOF192.168.4.34 k8s-master192.168.4.35 k8s-node01192.168.4.36 k8s-node02EOF

5、内核调整,将桥接的IPv4流量传递到iptables的链

$ cat > /etc/sysctl.d/k8s.conf << EOFnet.bridge.bridge-nf-call-ip6tables = 1net.bridge.bridge-nf-call-iptables = 1EOF$ sysctl --system

6、设置系统时区并同步时间服务器

2.2.4 docker 安装

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo $ yum -y install docker-ce-18.06.1.ce-3.el7 $ systemctl enable docker && systemctl start docker $ docker --version Docker version 18.06.1-ce, build e68fc7a

2.2.5 添加kubernetes YUM软件源

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64 enabled=1 gpgcheck=0 repo_gpgcheck=0 gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg EOF

2.2.6 安装kubeadm,kubelet和kubectl

2.2.6上所有主机都需要操作,由于版本更新频繁,这里指定版本号部署

$ yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0 $ systemctl enable kubelet

2.3 部署Kubernetes Master

只需要在Master 节点执行,这里的apiserve需要修改成自己的master地址

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.73.138 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.15.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

由于默认拉取镜像地址k8s.gcr.io国内无法访问,这里指定阿里云镜像仓库地址。

输出结果:

[preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Activating the kubelet service [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.4.34] [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.4.34 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.4.34 127.0.0.1 ::1] [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" ......(省略) [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 192.168.73.138:6443 --token 2nm5l9.jtp4zwnvce4yt4oj --discovery-token-ca-cert-hash sha256:12f628a21e8d4a7262f57d4f21bc85f8802bb717d

根据输出提示操作:

[root@k8s-master ~]# mkdir -p $HOME/.kube [root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config [root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

默认token的有效期为24小时,当过期之后,该token就不可用了,

如果后续有nodes节点加入,解决方法如下:

重新生成新的token

kubeadm token create [root@k8s-master ~]# kubeadm token create 0w3a92.ijgba9ia0e3scicg [root@k8s-master ~]# kubeadm token list TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS 0w3a92.ijgba9ia0e3scicg 23h 2019-09-08T22:02:40+08:00 authentication,signing <none> system:bootstrappers:kubeadm:default-node-token t0ehj8.k4ef3gq0icr3etl0 22h 2019-09-08T20:58:34+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token [root@k8s-master ~]#

获取ca证书sha256编码hash值

[root@k8s-master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //' ce07a7f5b259961884c55e3ff8784b1eda6f8b5931e6fa2ab0b30b6a4234c09a

节点加入集群

[root@k8s-node01 ~]# kubeadm join --token aa78f6.8b4cafc8ed26c34f --discovery-token-ca-cert-hash sha256:0fd95a9bc67a7bf0ef42da968a0d55d92e52898ec37c971bd77ee501d845b538 192.168.73.138:6443 --skip-preflight-chec

2.4 加入Kubernetes Node

在两个 Node 节点执行

使用kubeadm join 注册Node节点到Matser

kubeadm join 的内容,在上面kubeadm init 已经生成好了

[root@k8s-node01 ~]# kubeadm join 192.168.4.34:6443 --token 2nm5l9.jtp4zwnvce4yt4oj --discovery-token-ca-cert-hash sha256:12f628a21e8d4a7262f57d4f21bc85f8802bb717dd6f513bf9d33f254fea3e89

输出内容:

[preflight] Running pre-flight checks [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/ [preflight] Reading configuration from the cluster... [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml' [kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Activating the kubelet service [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap... This node has joined the cluster: * Certificate signing request was sent to apiserver and a response was received. * The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

2.5 安装网络插件

只需要在Master 节点执行

[

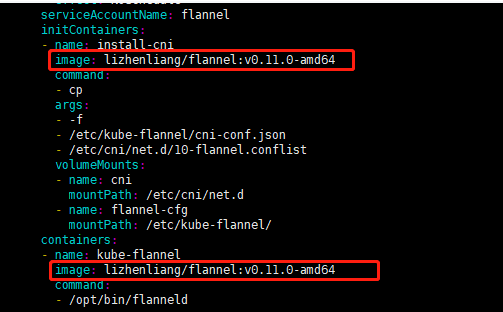

修改镜像地址:(有可能默认不能拉取,确保能够访问到quay.io这个registery,否则修改如下内容)[进入编辑,把106行,120行的内容,替换如下image,替换之后查看如下为正确

[root@k8s-master ~]# cat -n kube-flannel.yml|grep lizhenliang/flannel:v0.11.0-amd64 106 image: lizhenliang/flannel:v0.11.0-amd64 120 image: lizhenliang/flannel:v0.11.0-amd64 [root@k8s-master ~]# kubectl apply -f kube-flannel.yml [root@k8s-master ~]# ps -ef|grep flannel root 2032 2013 0 21:00 ? 00:00:00 /opt/bin/flanneld --ip-masq --kube-subnet-mgr

查看集群的node状态,安装完网络工具之后,只有显示如下状态,所有节点全部都Ready好了之后才能继续后面的操作

[root@k8s-master ~]# kubectl get nodes NAME STATUS ROLES AGE VERSION k8s-master Ready master 37m v1.15.0 k8s-node01 Ready <none> 5m22s v1.15.0 k8s-node02 Ready <none> 5m18s v1.15.0 [root@k8s-master ~]# kubectl get pod -n kube-system NAME READY STATUS RESTARTS AGE coredns-bccdc95cf-h2ngj 1/1 Running 0 14m coredns-bccdc95cf-m78lt 1/1 Running 0 14m etcd-k8s-master 1/1 Running 0 13m kube-apiserver-k8s-master 1/1 Running 0 13m kube-controller-manager-k8s-master 1/1 Running 0 13m kube-flannel-ds-amd64-j774f 1/1 Running 0 9m48s kube-flannel-ds-amd64-t8785 1/1 Running 0 9m48s kube-flannel-ds-amd64-wgbtz 1/1 Running 0 9m48s kube-proxy-ddzdx 1/1 Running 0 14m kube-proxy-nwhzt 1/1 Running 0 14m kube-proxy-p64rw 1/1 Running 0 13m kube-scheduler-k8s-master 1/1 Running 0 13m

只有全部都为1/1则可以成功执行后续步骤,如果flannel需检查网络情况,重新进行如下操作kubectl delete -f kube-flannel.yml然后重新wget,然后修改镜像地址,然后kubectl apply -f kube-flannel.yml2.7 测试Kubernetes集群

在Kubernetes集群中创建一个pod,然后暴露端口,验证是否正常访问:

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx deployment.apps/nginx created [root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort service/nginx exposed [root@k8s-master ~]# kubectl get pods,svc NAME READY STATUS RESTARTS AGE pod/nginx-554b9c67f9-wf5lm 1/1 Running 0 24s NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 39m service/nginx NodePort 10.1.224.251 <none> 80:31745/TCP 9

访问地址:http://NodeIP:Port ,此例就是:http://192.168.73.138:32039

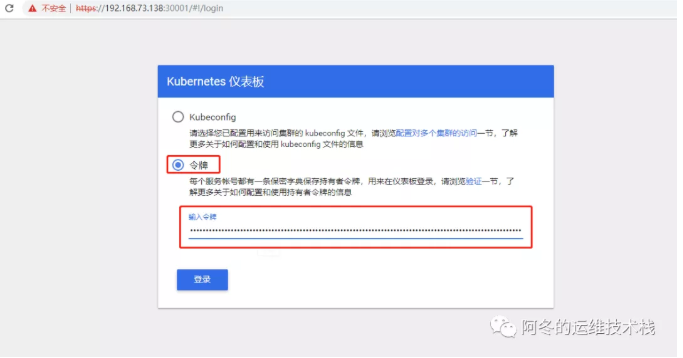

2.8 部署 Dashboard

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml [root@k8s-master ~]# vim kubernetes-dashboard.yaml 修改内容: 109 spec: 110 containers: 111 - name: kubernetes-dashboard 112 image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1 # 修改此行 ...... 157 spec: 158 type: NodePort # 增加此行 159 ports: 160 - port: 443 161 targetPort: 8443 162 nodePort: 30001 # 增加此行 163 selector: 164 k8s-app: kubernetes-dashboard [root@k8s-master ~]# kubectl apply -f kubernetes-dashboard.yaml

在火狐浏览器访问(google受信任问题不能访问)地址: https://NodeIP:30001

创建service account并绑定默认cluster-admin管理员集群角色:

[root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system serviceaccount/dashboard-admin created [root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin [root@k8s-master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') Name: dashboard-admin-token-d9jh2 Namespace: kube-system Labels: <none> Annotations: kubernetes.io/service-account.name: dashboard-admin kubernetes.io/service-account.uid: 4aa1906e-17aa-4880-b848-8b3959483323 Type: kubernetes.io/service-account-token Data ==== ca.crt: 1025 bytes namespace: 11 bytes token: eyJhbGciOiJ...(省略如下)...AJdQ token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZDlqaDIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNGFhMTkwNmUtMTdhYS00ODgwLWI4NDgtOGIzOTU5NDgzMzIzIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.OkF6h7tVQqmNJniCHJhY02G6u6dRg0V8PTiF8xvMuJJUphLyWlWctgmplM4kjKVZo0fZkAthL7WAV5p_AwAuj4LMfo1X5IpxUomp4YZyhqgsBM0A2ksWoKoLDjbizFwOty8TylWlsX1xcJXZjmP9OvNgjjSq5J90N5PnxYIIgwAMP3fawTP7kUXxz5WhJo-ogCijJCFyYBHoqHrgAbk9pusI8DpGTNIZxBMxkwPPwFwzNCOfKhD0c8HjhNeliKsOYLryZObRdmTQXmxsDfxynTKsRxv_EPQb99yW9GXJPQL0OwpYb4b164CFv857ENitvvKEOU6y55P9hFkuQuAJdQ

解决其他浏览器不能访问的问题

[root@k8s-master ~]# cd /etc/kubernetes/pki/ [root@k8s-master pki]# mkdir ui [root@k8s-master pki]# cp apiserver.crt ui/ [root@k8s-master pki]# cp apiserver.key ui/ [root@k8s-master pki]# cd ui/ [root@k8s-master ui]# mv apiserver.crt dashboard.pem [root@k8s-master ui]# mv apiserver.key dashboard-key.pem [root@k8s-master ui]# kubectl delete secret kubernetes-dashboard-certs -n kube-system [root@k8s-master ui]# kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

[root@k8s-master]# vim kubernetes-dashboard.yaml #回到这个yaml的路径下修改 修改 dashboard-controller.yaml 文件,在args下面增加证书两行 - --tls-key-file=dashboard-key.pem - --tls-cert-file=dashboard.pem [root@k8s-master ~]kubectl apply -f kubernetes-dashboard.yaml [root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system serviceaccount/dashboard-admin created [root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin [root@k8s-master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') Name: dashboard-admin-token-zbn9f Namespace: kube-system Labels: <none> Annotations: kubernetes.io/service-account.name: dashboard-admin kubernetes.io/service-account.uid: 40259d83-3b4f-4acc-a4fb-43018de7fc19 Type: kubernetes.io/service-account-token Data ==== namespace: 11 bytes token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4temJuOWYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDAyNTlkODMtM2I0Zi00YWNjLWE0ZmItNDMwMThkZTdmYzE5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.E0hGAkeQxd6K-YpPgJmNTv7Sn_P_nzhgCnYXGc9AeXd9k9qAcO97vBeOV-pH518YbjrOAx_D6CKIyP07aCi_3NoPlbbyHtcpRKFl-lWDPdg8wpcIefcpbtS6uCOrpaJdCJjWFcAEHdvcfmiFpdVVT7tUZ2-eHpRTUQ5MDPF-c2IOa9_FC9V3bf6XW6MSCZ_7-fOF4MnfYRa8ucltEIhIhCAeDyxlopSaA5oEbopjaNiVeJUGrKBll8Edatc7-wauUIJXAN-dZRD0xTULPNJ1BsBthGQLyFe8OpL5n_oiHM40tISJYU_uQRlMP83SfkOpbiOpzuDT59BBJB57OQtl3w ca.crt: 1025 bytes

文中所用到的知识点和镜像都是李振良老师的指导,在此声明感谢

如有不对的地方和问题,欢迎指出和交流