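1. When a UIO device is registered, the UIO core calls uio_dev_add_attributes() to create the sysfs entries under /sys/class/uio/uio0/, and it requests the device's interrupt.

2. The user program opens /dev/uio0 and reads the region size from /sys/class/uio/uio0/maps/mapX/size.

3. Calling mmap() on the /dev/uio0 descriptor makes the kernel remap the region into the process.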
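Steps 1 and 2 can be sketched in user space as follows, assuming a device named uio0 exists. The helper names (uio_map_attr_path, uio_parse_hex, uio_read_map_attr) are hypothetical, not part of any library. The UIO core prints each map attribute as a hex string such as "0x1000" followed by a newline, which strtoul() with base 0 parses directly.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: build the sysfs path of one map attribute,
 * e.g. (0, 1, "size") -> "/sys/class/uio/uio0/maps/map1/size".
 * Returns a pointer to a static buffer, so it is not thread-safe. */
const char *uio_map_attr_path(int uio, int map, const char *attr)
{
    static char path[128];

    snprintf(path, sizeof(path), "/sys/class/uio/uio%d/maps/map%d/%s",
             uio, map, attr);
    return path;
}

/* Parse attribute text such as "0x1000\n"; base 0 accepts the 0x prefix.
 * Returns 0 if no number could be parsed. */
unsigned long uio_parse_hex(const char *text)
{
    char *end;
    unsigned long value = strtoul(text, &end, 0);

    return end == text ? 0 : value;
}

/* Read and parse one attribute; returns 0 if the file cannot be read. */
unsigned long uio_read_map_attr(int uio, int map, const char *attr)
{
    char buf[32];
    FILE *fp = fopen(uio_map_attr_path(uio, map, attr), "r");

    if (fp == NULL)
        return 0;
    if (fgets(buf, sizeof(buf), fp) == NULL) {
        fclose(fp);
        return 0;
    }
    fclose(fp);
    return uio_parse_hex(buf);
}
```

For example, uio_read_map_attr(0, 0, "size") returns the size of map0, the value the next program passes to mmap().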

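```c
#include <fcntl.h>
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

#define UIO_SIZE "/sys/class/uio/uio0/maps/map0/size"

int main(int argc, char **argv)
{
	int uio_fd;
	unsigned int uio_size;
	FILE *size_fp;
	void *base_address;

	/*
	 * 1. Open the UIO device so that it is ready to use
	 */
	uio_fd = open("/dev/uio0", O_RDWR);
	if (uio_fd < 0) {
		perror("open /dev/uio0");
		return 1;
	}

	/*
	 * 2. Get the size of the memory region from the size sysfs file
	 *    attribute (printed by the kernel as "0x...")
	 */
	size_fp = fopen(UIO_SIZE, "r");
	if (size_fp == NULL) {
		perror(UIO_SIZE);
		return 1;
	}
	if (fscanf(size_fp, "%x", &uio_size) != 1) {	/* %x accepts the 0x prefix */
		fprintf(stderr, "cannot parse %s\n", UIO_SIZE);
		return 1;
	}
	fclose(size_fp);

	/*
	 * 3. Map the device registers into the process address space so they
	 *    are directly accessible
	 */
	base_address = mmap(NULL, uio_size, PROT_READ | PROT_WRITE,
			    MAP_SHARED, uio_fd, 0);
	if (base_address == MAP_FAILED) {
		perror("mmap");
		return 1;
	}

	// Access to the hardware can now occur ...

	/*
	 * 4. Unmap the device registers to finish
	 */
	munmap(base_address, uio_size);

	/* ... */
	close(uio_fd);
	return 0;
}
```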
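The program above maps only map0, at offset 0. A small generalization, sketched below with a hypothetical helper name, maps region N by passing N times the page size as the mmap() offset; this is the convention that uio_find_mem_index() in the UIO core checks (vma->vm_pgoff == N).

```c
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: map region N of an open /dev/uioX descriptor.
 * The UIO core picks mem[N] from the page offset of the mapping, so
 * region N is requested with byte offset N * page size.
 * Returns MAP_FAILED on error, like mmap() itself. */
void *uio_map_region(int uio_fd, int n, size_t size)
{
    long pagesz = sysconf(_SC_PAGESIZE);

    if (uio_fd < 0 || n < 0 || size == 0 || pagesz <= 0)
        return MAP_FAILED;
    return mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED,
                uio_fd, (off_t)n * pagesz);
}
```

uio_map_region(fd, 1, size1) is equivalent to the second mmap() call, with getpagesize() as the offset, in the LED example further down.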

Kernel-space code

```c
#include <linux/module.h>
#include <linux/platform_device.h>
#include <linux/uio_driver.h>
#include <asm/io.h>
#include <linux/slab.h>		/* kmalloc, kfree */
#include <linux/irq.h>		/* IRQ_TYPE_EDGE_BOTH */

/* uio_info.handler takes a struct uio_info *, not a void * */
static irqreturn_t my_interrupt(int irq, struct uio_info *info)
{
	unsigned long *ret_val_add = (unsigned long *)(info->mem[1].addr);

	*ret_val_add = 10;
	printk("----------------------------------------------------%d\n",
	       (int)(*ret_val_add));
	/* returning IRQ_HANDLED makes uio_interrupt() call uio_event_notify() */
	return IRQ_RETVAL(IRQ_HANDLED);
}

struct uio_info kpart_info = {
	.name = "kpart",
	.version = "0.1",
	.irq = IRQ_EINT19,
	.handler = my_interrupt,
	.irq_flags = IRQ_TYPE_EDGE_RISING,	/* rising-edge triggered */
};

struct button_irq_desc {
	int irq;
	int num;
	char *name;
};

static struct button_irq_desc button_irqs[] = {
	{IRQ_EINT8,  1, "KEY0"},
	{IRQ_EINT11, 2, "KEY1"},
	{IRQ_EINT13, 3, "KEY2"},
	{IRQ_EINT14, 4, "KEY3"},
	{IRQ_EINT15, 5, "KEY4"},
};

static int drv_kpart_probe(struct device *dev);
static int drv_kpart_remove(struct device *dev);

static struct device_driver uio_dummy_driver = {
	.name = "kpart",
	.bus = &platform_bus_type,
	.probe = drv_kpart_probe,
	.remove = drv_kpart_remove,
};

static irqreturn_t buttons_interrupt(int irq, void *dev_id)
{
	struct button_irq_desc *button_irqs = (struct button_irq_desc *)dev_id;
	unsigned long *ret_val_add = (unsigned long *)(kpart_info.mem[1].addr);

	*ret_val_add = button_irqs->num;
	printk("%s is being pressed .....\n", button_irqs->name);
	uio_event_notify(&kpart_info);	/* wake up readers/pollers of /dev/uio0 */
	return IRQ_RETVAL(IRQ_HANDLED);
}

static int drv_kpart_probe(struct device *dev)
{
	unsigned long phys = 0x56000010;	/* 0x56000010 = GPBCON */
	unsigned long *ret_val_add;
	int i, err = 0;

	printk("drv_kpart_probe(%p)\n", dev);

	kpart_info.mem[0].addr = phys;
	kpart_info.mem[0].memtype = UIO_MEM_PHYS;	/* physical address */
	kpart_info.mem[0].size = 12;

	kpart_info.mem[1].addr = (unsigned long)kmalloc(4, GFP_KERNEL);
	if (kpart_info.mem[1].addr == 0)
		return -ENOMEM;
	kpart_info.mem[1].memtype = UIO_MEM_LOGICAL;	/* kernel logical address */
	kpart_info.mem[1].size = 4;
	ret_val_add = (unsigned long *)(kpart_info.mem[1].addr);
	*ret_val_add = 999;

	/* uio_register_device() also calls request_irq() for kpart_info.irq */
	if (uio_register_device(dev, &kpart_info)) {
		kfree((void *)kpart_info.mem[1].addr);
		return -ENODEV;
	}

	/* the key IRQs are requested directly; their handler signals the
	 * UIO event itself through uio_event_notify() */
	for (i = 0; i < ARRAY_SIZE(button_irqs); i++) {
		err = request_irq(button_irqs[i].irq, buttons_interrupt,
				  IRQ_TYPE_EDGE_RISING, button_irqs[i].name,
				  (void *)&button_irqs[i]);
		if (err)
			break;
	}
	if (err) {
		/* release the IRQs obtained so far */
		while (--i >= 0)
			free_irq(button_irqs[i].irq, (void *)&button_irqs[i]);
		uio_unregister_device(&kpart_info);
		kfree((void *)kpart_info.mem[1].addr);
		return err;
	}
	return 0;
}

static int drv_kpart_remove(struct device *dev)
{
	int i;

	for (i = 0; i < ARRAY_SIZE(button_irqs); i++)
		free_irq(button_irqs[i].irq, (void *)&button_irqs[i]);
	/* unregister before freeing the memory that map1 points to */
	uio_unregister_device(&kpart_info);
	kfree((void *)kpart_info.mem[1].addr);
	return 0;
}

static struct platform_device *uio_dummy_device;

static int __init uio_kpart_init(void)
{
	printk("Hello, Mini2440 module is installed !\n");
	uio_dummy_device = platform_device_register_simple("kpart", -1, NULL, 0);
	return driver_register(&uio_dummy_driver);
}

static void __exit uio_kpart_exit(void)
{
	platform_device_unregister(uio_dummy_device);
	driver_unregister(&uio_dummy_driver);
}

module_init(uio_kpart_init);
module_exit(uio_kpart_exit);

MODULE_LICENSE("GPL");
MODULE_AUTHOR("Benedikt Spranger");
MODULE_DESCRIPTION("UIO dummy driver");
```

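The uio_event_notify() called by the button handler is implemented in the UIO core as:

```c
void uio_event_notify(struct uio_info *info)
{
	struct uio_device *idev = info->uio_dev;

	atomic_inc(&idev->event);			/* bump the event counter */
	wake_up_interruptible(&idev->wait);		/* wake blocked read()/poll() */
	kill_fasync(&idev->async_queue, SIGIO, POLL_IN);	/* SIGIO for fasync users */
}
```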
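uio_event_notify() only increments a counter, and uio_read() hands that cumulative count to user space (a read must be exactly 4 bytes, otherwise it fails with -EINVAL). A minimal sketch of the user side, with hypothetical helper names: read the count, then compute how many interrupts happened since the previous reading, using unsigned arithmetic so a wrapped 32-bit counter still gives the right difference.

```c
#include <fcntl.h>
#include <stdint.h>
#include <unistd.h>

/* Hypothetical helper: read the cumulative interrupt count from an open
 * /dev/uioX. Blocks until the count changes unless the fd is non-blocking.
 * Returns the count as an unsigned 32-bit value, or -1 on error. */
int64_t uio_read_count(int uio_fd)
{
    int32_t count;

    /* the UIO core only accepts reads of exactly sizeof(s32) bytes */
    if (read(uio_fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return -1;
    return (uint32_t)count;
}

/* Interrupts between two readings; the subtraction is done modulo 2^32,
 * so counter wrap-around is handled. */
uint32_t uio_events_since(uint32_t previous, uint32_t current)
{
    return current - previous;
}
```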

User-space code

```c
#include <stdio.h>
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <sys/mman.h>
#include <sys/select.h>
#include <errno.h>

#define UIO_DEV   "/dev/uio0"
#define UIO_ADDR  "/sys/class/uio/uio0/maps/map0/addr"
#define UIO_SIZE  "/sys/class/uio/uio0/maps/map0/size"
#define UIO_ADDR1 "/sys/class/uio/uio0/maps/map1/addr"
#define UIO_SIZE1 "/sys/class/uio/uio0/maps/map1/size"

#define LED_ON  1	/* LED on */
#define LED_OFF 0	/* LED off */

/* static, so zero-filled; reads leave the last byte as the terminator */
static char uio_addr_buf[16], uio_size_buf[16];

int main(int argc, char **argv)
{
	int uio_fd, addr_fd, size_fd, addr_fd_1, size_fd_1;
	int uio_size, uio_size_1;
	void *uio_addr, *uio_addr_1, *access_address, *access_address_1;
	volatile unsigned long virt, virt_1;
	volatile unsigned long *GPBCON, *GPBDAT, *GPBUP, *RETVAL;
	unsigned long pagesz;
	int retval;

	uio_fd = open(UIO_DEV, O_RDWR);
	addr_fd = open(UIO_ADDR, O_RDONLY);	/* map0: GPB registers */
	size_fd = open(UIO_SIZE, O_RDONLY);
	addr_fd_1 = open(UIO_ADDR1, O_RDONLY);	/* map1: kmalloc'ed return value */
	size_fd_1 = open(UIO_SIZE1, O_RDONLY);
	if (addr_fd < 0 || size_fd < 0 || uio_fd < 0 ||
	    addr_fd_1 < 0 || size_fd_1 < 0) {
		fprintf(stderr, "open: %s\n", strerror(errno));
		exit(-1);
	}

	read(addr_fd, uio_addr_buf, sizeof(uio_addr_buf) - 1);
	read(size_fd, uio_size_buf, sizeof(uio_size_buf) - 1);
	uio_addr = (void *)strtoul(uio_addr_buf, NULL, 0);
	uio_size = (int)strtol(uio_size_buf, NULL, 0);

	read(addr_fd_1, uio_addr_buf, sizeof(uio_addr_buf) - 1);
	read(size_fd_1, uio_size_buf, sizeof(uio_size_buf) - 1);
	uio_addr_1 = (void *)strtoul(uio_addr_buf, NULL, 0);
	uio_size_1 = (int)strtol(uio_size_buf, NULL, 0);

	/* both calls go to the UIO core's uio_mmap(); map N is selected
	 * with offset N * page size */
	access_address = mmap(NULL, uio_size, PROT_READ | PROT_WRITE,
			      MAP_SHARED, uio_fd, 0);
	access_address_1 = mmap(NULL, uio_size_1, PROT_READ | PROT_WRITE,
				MAP_SHARED, uio_fd, getpagesize());
	if (access_address == MAP_FAILED || access_address_1 == MAP_FAILED) {
		fprintf(stderr, "mmap: %s\n", strerror(errno));
		exit(-1);
	}
	printf("The device address %p (length %d) can be accessed over logical address %p\n",
	       uio_addr, uio_size, access_address);

	/* mmap() returns a page-aligned address: add back the in-page
	 * offset of the physical base (0x10 for 0x56000010) */
	pagesz = getpagesize();
	virt = (unsigned long)uio_addr;
	virt = ((unsigned long)access_address + (virt & (pagesz - 1)));
	virt_1 = (unsigned long)uio_addr_1;
	virt_1 = ((unsigned long)access_address_1 + (virt_1 & (pagesz - 1)));

	GPBCON = (unsigned long *)(virt + 0x00);
	GPBDAT = (unsigned long *)(virt + 0x04);
	GPBUP = (unsigned long *)(virt + 0x08);
	RETVAL = (unsigned long *)virt_1;

	retval = (int)(*RETVAL);
	printf("GPBCON %p\nGPBDAT %p\nGPBUP %p\n", GPBCON, GPBDAT, GPBUP);
	printf("retval ==============> %d\n", retval);

	/* GPBCON */
	if (*GPBCON != 0x154FD)
		*GPBCON = 0x154FD;
	/* GPBDAT: turn all LEDs off */
	*GPBDAT = 0x1E0;
	/* turn all LEDs on */
	/* *GPBDAT = 0x00; */
	/* GPBUP */
	if (*GPBUP != 0x7FF)
		*GPBUP = 0x7FF;

#if 0	/* interrupt-wait loop (disabled) */
	fd_set rd_fds, tmp_fds;

	while (1) {
		/* clear the descriptor set and add uio_fd */
		FD_ZERO(&rd_fds);
		FD_SET(uio_fd, &rd_fds);
		tmp_fds = rd_fds;
		/* block until a descriptor in tmp_fds becomes readable;
		 * select() clears the bits of descriptors with no event,
		 * and ret is the number of ready descriptors */
		int ret = select(uio_fd + 1, &tmp_fds, NULL, NULL, NULL);
		if (ret > 0) {
			/* is uio_fd still set, i.e. did an event occur on it? */
			if (FD_ISSET(uio_fd, &tmp_fds)) {
				int c;
				read(uio_fd, &c, sizeof(int));
				retval = (int)(*RETVAL);
				printf("current event count is: %d\n", c);
				printf("current return value is: %d\n", retval);
			}
		}
		if (retval == 10)
			break;
	}
#endif

	munmap(access_address, uio_size);
	munmap(access_address_1, uio_size_1);
	close(uio_fd);
	return 0;
}
```
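The address arithmetic in the program above can be isolated into one function. A sketch with a hypothetical name: mmap() always returns a page-aligned address, while the physical base of map0 (0x56000010) sits 0x10 bytes into its page, so that in-page offset has to be added back before the register offset. The UIO core also exports this in-page offset directly as maps/mapN/offset (see map_offset_show() in uio.c).

```c
#include <stdint.h>

/* Hypothetical helper: user-space address of a register inside a mapped
 * UIO_MEM_PHYS region.
 *   map_base   - value returned by mmap() (page aligned)
 *   phys_base  - contents of maps/mapN/addr
 *   page_size  - getpagesize(); must be a power of two
 *   reg_offset - register offset from phys_base (e.g. 0x04 for GPBDAT) */
uintptr_t uio_reg_address(uintptr_t map_base, unsigned long phys_base,
                          unsigned long page_size, unsigned long reg_offset)
{
    return map_base + (phys_base & (page_size - 1)) + reg_offset;
}
```

With the example's variables, GPBDAT would be (volatile unsigned long *)uio_reg_address((uintptr_t)access_address, (unsigned long)uio_addr, pagesz, 0x04).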

For reference, the UIO core itself (drivers/uio/uio.c from an older kernel release):

```c
/*
 * drivers/uio/uio.c
 *
 * Copyright(C) 2005, Benedikt Spranger <b.spranger@linutronix.de>
 * Copyright(C) 2005, Thomas Gleixner <tglx@linutronix.de>
 * Copyright(C) 2006, Hans J. Koch <hjk@linutronix.de>
 * Copyright(C) 2006, Greg Kroah-Hartman <greg@kroah.com>
 *
 * Userspace IO
 *
 * Base Functions
 *
 * Licensed under the GPLv2 only.
 */

#include <linux/module.h>
#include <linux/init.h>
#include <linux/poll.h>
#include <linux/device.h>
#include <linux/mm.h>
#include <linux/idr.h>
#include <linux/string.h>
#include <linux/kobject.h>
#include <linux/uio_driver.h>

#define UIO_MAX_DEVICES 255

struct uio_device {
	struct module		*owner;
	struct device		*dev;
	int			minor;
	atomic_t		event;
	struct fasync_struct	*async_queue;
	wait_queue_head_t	wait;
	int			vma_count;
	struct uio_info		*info;
	struct kobject		*map_dir;
};

static int uio_major;
static DEFINE_IDR(uio_idr);
static const struct file_operations uio_fops;

/* UIO class infrastructure */
static struct uio_class {
	struct kref kref;
	struct class *class;
} *uio_class;

/* Protect idr accesses */
static DEFINE_MUTEX(minor_lock);

/*
 * attributes
 */

struct uio_map {
	struct kobject kobj;
	struct uio_mem *mem;
};
#define to_map(map) container_of(map, struct uio_map, kobj)

static ssize_t map_addr_show(struct uio_mem *mem, char *buf)
{
	return sprintf(buf, "0x%lx\n", mem->addr);
}

static ssize_t map_size_show(struct uio_mem *mem, char *buf)
{
	return sprintf(buf, "0x%lx\n", mem->size);
}

static ssize_t map_offset_show(struct uio_mem *mem, char *buf)
{
	return sprintf(buf, "0x%lx\n", mem->addr & ~PAGE_MASK);
}

struct uio_sysfs_entry {
	struct attribute attr;
	ssize_t (*show)(struct uio_mem *, char *);
	ssize_t (*store)(struct uio_mem *, const char *, size_t);
};

static struct uio_sysfs_entry addr_attribute =
	__ATTR(addr, S_IRUGO, map_addr_show, NULL);
static struct uio_sysfs_entry size_attribute =
	__ATTR(size, S_IRUGO, map_size_show, NULL);
static struct uio_sysfs_entry offset_attribute =
	__ATTR(offset, S_IRUGO, map_offset_show, NULL);

static struct attribute *attrs[] = {
	&addr_attribute.attr,
	&size_attribute.attr,
	&offset_attribute.attr,
	NULL,	/* need to NULL terminate the list of attributes */
};

static void map_release(struct kobject *kobj)
{
	struct uio_map *map = to_map(kobj);
	kfree(map);
}

static ssize_t map_type_show(struct kobject *kobj, struct attribute *attr,
			     char *buf)
{
	struct uio_map *map = to_map(kobj);
	struct uio_mem *mem = map->mem;
	struct uio_sysfs_entry *entry;

	entry = container_of(attr, struct uio_sysfs_entry, attr);

	if (!entry->show)
		return -EIO;

	return entry->show(mem, buf);
}

static struct sysfs_ops uio_sysfs_ops = {
	.show = map_type_show,
};

static struct kobj_type map_attr_type = {
	.release	= map_release,
	.sysfs_ops	= &uio_sysfs_ops,
	.default_attrs	= attrs,
};

static ssize_t show_name(struct device *dev,
			 struct device_attribute *attr, char *buf)
{
	struct uio_device *idev = dev_get_drvdata(dev);
	if (idev)
		return sprintf(buf, "%s\n", idev->info->name);
	else
		return -ENODEV;
}
static DEVICE_ATTR(name, S_IRUGO, show_name, NULL);

static ssize_t show_version(struct device *dev,
			    struct device_attribute *attr, char *buf)
{
	struct uio_device *idev = dev_get_drvdata(dev);
	if (idev)
		return sprintf(buf, "%s\n", idev->info->version);
	else
		return -ENODEV;
}
static DEVICE_ATTR(version, S_IRUGO, show_version, NULL);

static ssize_t show_event(struct device *dev,
			  struct device_attribute *attr, char *buf)
{
	struct uio_device *idev = dev_get_drvdata(dev);
	if (idev)
		return sprintf(buf, "%u\n",
				(unsigned int)atomic_read(&idev->event));
	else
		return -ENODEV;
}
static DEVICE_ATTR(event, S_IRUGO, show_event, NULL);

static struct attribute *uio_attrs[] = {
	&dev_attr_name.attr,
	&dev_attr_version.attr,
	&dev_attr_event.attr,
	NULL,
};

static struct attribute_group uio_attr_grp = {
	.attrs = uio_attrs,
};

/*
 * device functions
 */
static int uio_dev_add_attributes(struct uio_device *idev)
{
	int ret;
	int mi;
	int map_found = 0;
	struct uio_mem *mem;
	struct uio_map *map;

	ret = sysfs_create_group(&idev->dev->kobj, &uio_attr_grp);
	if (ret)
		goto err_group;

	for (mi = 0; mi < MAX_UIO_MAPS; mi++) {
		mem = &idev->info->mem[mi];
		if (mem->size == 0)
			break;
		if (!map_found) {
			map_found = 1;
			/* creates /sys/class/uio/uioN/maps */
			idev->map_dir = kobject_create_and_add("maps",
							&idev->dev->kobj);
			if (!idev->map_dir)
				goto err;
		}
		map = kzalloc(sizeof(*map), GFP_KERNEL);
		if (!map)
			goto err;
		kobject_init(&map->kobj, &map_attr_type);
		map->mem = mem;
		mem->map = map;
		ret = kobject_add(&map->kobj, idev->map_dir, "map%d", mi);
		if (ret)
			goto err;
		ret = kobject_uevent(&map->kobj, KOBJ_ADD);
		if (ret)
			goto err;
	}

	return 0;

err:
	for (mi--; mi>=0; mi--) {
		mem = &idev->info->mem[mi];
		map = mem->map;
		kobject_put(&map->kobj);
	}
	kobject_put(idev->map_dir);
	sysfs_remove_group(&idev->dev->kobj, &uio_attr_grp);
err_group:
	dev_err(idev->dev, "error creating sysfs files (%d)\n", ret);
	return ret;
}

static void uio_dev_del_attributes(struct uio_device *idev)
{
	int mi;
	struct uio_mem *mem;
	for (mi = 0; mi < MAX_UIO_MAPS; mi++) {
		mem = &idev->info->mem[mi];
		if (mem->size == 0)
			break;
		kobject_put(&mem->map->kobj);
	}
	kobject_put(idev->map_dir);
	sysfs_remove_group(&idev->dev->kobj, &uio_attr_grp);
}

static int uio_get_minor(struct uio_device *idev)
{
	int retval = -ENOMEM;
	int id;

	mutex_lock(&minor_lock);
	if (idr_pre_get(&uio_idr, GFP_KERNEL) == 0)
		goto exit;
	retval = idr_get_new(&uio_idr, idev, &id);
	if (retval < 0) {
		if (retval == -EAGAIN)
			retval = -ENOMEM;
		goto exit;
	}
	idev->minor = id & MAX_ID_MASK;
exit:
	mutex_unlock(&minor_lock);
	return retval;
}

static void uio_free_minor(struct uio_device *idev)
{
	mutex_lock(&minor_lock);
	idr_remove(&uio_idr, idev->minor);
	mutex_unlock(&minor_lock);
}

/**
 * uio_event_notify - trigger an interrupt event
 * @info: UIO device capabilities
 */
void uio_event_notify(struct uio_info *info)
{
	struct uio_device *idev = info->uio_dev;

	atomic_inc(&idev->event);
	wake_up_interruptible(&idev->wait);
	kill_fasync(&idev->async_queue, SIGIO, POLL_IN);
}
EXPORT_SYMBOL_GPL(uio_event_notify);

/**
 * uio_interrupt - hardware interrupt handler
 * @irq: IRQ number, can be UIO_IRQ_CYCLIC for cyclic timer
 * @dev_id: Pointer to the devices uio_device structure
 */
static irqreturn_t uio_interrupt(int irq, void *dev_id)
{
	struct uio_device *idev = (struct uio_device *)dev_id;
	irqreturn_t ret = idev->info->handler(irq, idev->info);

	if (ret == IRQ_HANDLED)
		uio_event_notify(idev->info);

	return ret;
}

struct uio_listener {
	struct uio_device *dev;
	s32 event_count;
};

static int uio_open(struct inode *inode, struct file *filep)
{
	struct uio_device *idev;
	struct uio_listener *listener;
	int ret = 0;

	mutex_lock(&minor_lock);
	idev = idr_find(&uio_idr, iminor(inode));
	mutex_unlock(&minor_lock);
	if (!idev) {
		ret = -ENODEV;
		goto out;
	}

	if (!try_module_get(idev->owner)) {
		ret = -ENODEV;
		goto out;
	}

	listener = kmalloc(sizeof(*listener), GFP_KERNEL);
	if (!listener) {
		ret = -ENOMEM;
		goto err_alloc_listener;
	}

	listener->dev = idev;
	listener->event_count = atomic_read(&idev->event);
	filep->private_data = listener;

	if (idev->info->open) {
		ret = idev->info->open(idev->info, inode);
		if (ret)
			goto err_infoopen;
	}
	return 0;

err_infoopen:
	kfree(listener);
err_alloc_listener:
	module_put(idev->owner);
out:
	return ret;
}

static int uio_fasync(int fd, struct file *filep, int on)
{
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;

	return fasync_helper(fd, filep, on, &idev->async_queue);
}

static int uio_release(struct inode *inode, struct file *filep)
{
	int ret = 0;
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;

	if (idev->info->release)
		ret = idev->info->release(idev->info, inode);

	module_put(idev->owner);
	kfree(listener);
	return ret;
}

static unsigned int uio_poll(struct file *filep, poll_table *wait)
{
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;

	if (idev->info->irq == UIO_IRQ_NONE)
		return -EIO;

	poll_wait(filep, &idev->wait, wait);
	if (listener->event_count != atomic_read(&idev->event))
		return POLLIN | POLLRDNORM;
	return 0;
}

static ssize_t uio_read(struct file *filep, char __user *buf,
			size_t count, loff_t *ppos)
{
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;
	DECLARE_WAITQUEUE(wait, current);
	ssize_t retval;
	s32 event_count;

	if (idev->info->irq == UIO_IRQ_NONE)
		return -EIO;

	if (count != sizeof(s32))
		return -EINVAL;

	add_wait_queue(&idev->wait, &wait);

	do {
		set_current_state(TASK_INTERRUPTIBLE);

		event_count = atomic_read(&idev->event);
		if (event_count != listener->event_count) {
			if (copy_to_user(buf, &event_count, count))
				retval = -EFAULT;
			else {
				listener->event_count = event_count;
				retval = count;
			}
			break;
		}

		if (filep->f_flags & O_NONBLOCK) {
			retval = -EAGAIN;
			break;
		}

		if (signal_pending(current)) {
			retval = -ERESTARTSYS;
			break;
		}
		schedule();
	} while (1);

	__set_current_state(TASK_RUNNING);
	remove_wait_queue(&idev->wait, &wait);

	return retval;
}

static ssize_t uio_write(struct file *filep, const char __user *buf,
			 size_t count, loff_t *ppos)
{
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;
	ssize_t retval;
	s32 irq_on;

	if (idev->info->irq == UIO_IRQ_NONE)
		return -EIO;

	if (count != sizeof(s32))
		return -EINVAL;

	if (!idev->info->irqcontrol)
		return -ENOSYS;

	if (copy_from_user(&irq_on, buf, count))
		return -EFAULT;

	retval = idev->info->irqcontrol(idev->info, irq_on);

	return retval ? retval : sizeof(s32);
}

static int uio_find_mem_index(struct vm_area_struct *vma)
{
	int mi;
	struct uio_device *idev = vma->vm_private_data;

	for (mi = 0; mi < MAX_UIO_MAPS; mi++) {
		if (idev->info->mem[mi].size == 0)
			return -1;
		if (vma->vm_pgoff == mi)
			return mi;
	}
	return -1;
}

static void uio_vma_open(struct vm_area_struct *vma)
{
	struct uio_device *idev = vma->vm_private_data;
	idev->vma_count++;
}

static void uio_vma_close(struct vm_area_struct *vma)
{
	struct uio_device *idev = vma->vm_private_data;
	idev->vma_count--;
}

static int uio_vma_fault(struct vm_area_struct *vma, struct vm_fault *vmf)
{
	struct uio_device *idev = vma->vm_private_data;
	struct page *page;
	unsigned long offset;

	int mi = uio_find_mem_index(vma);
	if (mi < 0)
		return VM_FAULT_SIGBUS;

	/*
	 * We need to subtract mi because userspace uses offset = N*PAGE_SIZE
	 * to use mem[N].
	 */
	offset = (vmf->pgoff - mi) << PAGE_SHIFT;

	if (idev->info->mem[mi].memtype == UIO_MEM_LOGICAL)
		page = virt_to_page(idev->info->mem[mi].addr + offset);
	else
		page = vmalloc_to_page((void *)idev->info->mem[mi].addr
							+ offset);
	get_page(page);
	vmf->page = page;
	return 0;
}

static struct vm_operations_struct uio_vm_ops = {
	.open = uio_vma_open,
	.close = uio_vma_close,
	.fault = uio_vma_fault,
};

/* physical memory (UIO_MEM_PHYS) is mapped with remap_pfn_range() */
static int uio_mmap_physical(struct vm_area_struct *vma)
{
	struct uio_device *idev = vma->vm_private_data;
	int mi = uio_find_mem_index(vma);
	if (mi < 0)
		return -EINVAL;

	vma->vm_flags |= VM_IO | VM_RESERVED;

	vma->vm_page_prot = pgprot_noncached(vma->vm_page_prot);

	return remap_pfn_range(vma,
			       vma->vm_start,
			       idev->info->mem[mi].addr >> PAGE_SHIFT,
			       vma->vm_end - vma->vm_start,
			       vma->vm_page_prot);
}

/* logical/virtual memory: pages are supplied on demand by uio_vma_fault() */
static int uio_mmap_logical(struct vm_area_struct *vma)
{
	vma->vm_flags |= VM_RESERVED;
	vma->vm_ops = &uio_vm_ops;
	uio_vma_open(vma);
	return 0;
}

static int uio_mmap(struct file *filep, struct vm_area_struct *vma)
{
	struct uio_listener *listener = filep->private_data;
	struct uio_device *idev = listener->dev;
	int mi;
	unsigned long requested_pages, actual_pages;
	int ret = 0;

	if (vma->vm_end < vma->vm_start)
		return -EINVAL;

	vma->vm_private_data = idev;

	mi = uio_find_mem_index(vma);
	if (mi < 0)
		return -EINVAL;

	requested_pages = (vma->vm_end - vma->vm_start) >> PAGE_SHIFT;
	actual_pages = (idev->info->mem[mi].size + PAGE_SIZE -1) >> PAGE_SHIFT;
	if (requested_pages > actual_pages)
		return -EINVAL;

	if (idev->info->mmap) {
		ret = idev->info->mmap(idev->info, vma);
		return ret;
	}

	switch (idev->info->mem[mi].memtype) {
	case UIO_MEM_PHYS:
		return uio_mmap_physical(vma);
	case UIO_MEM_LOGICAL:
	case UIO_MEM_VIRTUAL:
		return uio_mmap_logical(vma);
	default:
		return -EINVAL;
	}
}

static const struct file_operations uio_fops = {
	.owner		= THIS_MODULE,
	.open		= uio_open,
	.release	= uio_release,
	.read		= uio_read,
	.write		= uio_write,
	.mmap		= uio_mmap,
	.poll		= uio_poll,
	.fasync		= uio_fasync,
};

static int uio_major_init(void)
{
	uio_major = register_chrdev(0, "uio", &uio_fops);
	if (uio_major < 0)
		return uio_major;
	return 0;
}

static void uio_major_cleanup(void)
{
	unregister_chrdev(uio_major, "uio");
}

static int init_uio_class(void)
{
	int ret = 0;

	if (uio_class != NULL) {
		kref_get(&uio_class->kref);
		goto exit;
	}

	/* This is the first time in here, set everything up properly */
	ret = uio_major_init();
	if (ret)
		goto exit;

	uio_class = kzalloc(sizeof(*uio_class), GFP_KERNEL);
	if (!uio_class) {
		ret = -ENOMEM;
		goto err_kzalloc;
	}

	kref_init(&uio_class->kref);
	uio_class->class = class_create(THIS_MODULE, "uio");
	if (IS_ERR(uio_class->class)) {
		ret = PTR_ERR(uio_class->class);
		printk(KERN_ERR "class_create failed for uio\n");
		goto err_class_create;
	}
	return 0;

err_class_create:
	kfree(uio_class);
	uio_class = NULL;
err_kzalloc:
	uio_major_cleanup();
exit:
	return ret;
}

static void release_uio_class(struct kref *kref)
{
	/* Ok, we cheat as we know we only have one uio_class */
	class_destroy(uio_class->class);
	kfree(uio_class);
	uio_major_cleanup();
	uio_class = NULL;
}

static void uio_class_destroy(void)
{
	if (uio_class)
		kref_put(&uio_class->kref, release_uio_class);
}

/**
 * uio_register_device - register a new userspace IO device
 * @owner:	module that creates the new device
 * @parent:	parent device
 * @info:	UIO device capabilities
 *
 * returns zero on success or a negative error code.
 */
int __uio_register_device(struct module *owner,
			  struct device *parent,
			  struct uio_info *info)
{
	struct uio_device *idev;
	int ret = 0;

	if (!parent || !info || !info->name || !info->version)
		return -EINVAL;

	info->uio_dev = NULL;

	ret = init_uio_class();
	if (ret)
		return ret;

	idev = kzalloc(sizeof(*idev), GFP_KERNEL);
	if (!idev) {
		ret = -ENOMEM;
		goto err_kzalloc;
	}

	idev->owner = owner;
	idev->info = info;
	init_waitqueue_head(&idev->wait);
	atomic_set(&idev->event, 0);

	ret = uio_get_minor(idev);
	if (ret)
		goto err_get_minor;

	idev->dev = device_create(uio_class->class, parent,
				  MKDEV(uio_major, idev->minor), idev,
				  "uio%d", idev->minor);
	if (IS_ERR(idev->dev)) {
		printk(KERN_ERR "UIO: device register failed\n");
		ret = PTR_ERR(idev->dev);
		goto err_device_create;
	}

	ret = uio_dev_add_attributes(idev);
	if (ret)
		goto err_uio_dev_add_attributes;

	info->uio_dev = idev;

	if (idev->info->irq >= 0) {
		ret = request_irq(idev->info->irq, uio_interrupt,
				  idev->info->irq_flags, idev->info->name, idev);
		if (ret)
			goto err_request_irq;
	}

	return 0;

err_request_irq:
	uio_dev_del_attributes(idev);
err_uio_dev_add_attributes:
	device_destroy(uio_class->class, MKDEV(uio_major, idev->minor));
err_device_create:
	uio_free_minor(idev);
err_get_minor:
	kfree(idev);
err_kzalloc:
	uio_class_destroy();
	return ret;
}
EXPORT_SYMBOL_GPL(__uio_register_device);

/**
 * uio_unregister_device - unregister a industrial IO device
 * @info:	UIO device capabilities
 *
 */
void uio_unregister_device(struct uio_info *info)
{
	struct uio_device *idev;

	if (!info || !info->uio_dev)
		return;

	idev = info->uio_dev;

	uio_free_minor(idev);

	if (info->irq >= 0)
		free_irq(info->irq, idev);

	uio_dev_del_attributes(idev);

	dev_set_drvdata(idev->dev, NULL);
	device_destroy(uio_class->class, MKDEV(uio_major, idev->minor));
	kfree(idev);
	uio_class_destroy();

	return;
}
EXPORT_SYMBOL_GPL(uio_unregister_device);

static int __init uio_init(void)
{
	return 0;
}

static void __exit uio_exit(void)
{
}

module_init(uio_init)
module_exit(uio_exit)
MODULE_LICENSE("GPL v2");
```
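Two checks in uio_mmap() decide whether a user-space mmap() call succeeds: uio_find_mem_index() turns the page offset into a map index, stopping at the first map whose size is 0, and the requested length in pages may not exceed the map size rounded up to whole pages. The sketch below restates both in user-space C with hypothetical helper names, which can be handy for validating arguments before calling mmap(). For the 12-byte GPB map of the example driver, one page is the most that can be mapped.

```c
/* Mirrors uio_find_mem_index(): sizes[] holds the size of each map in
 * order; a size of 0 ends the list. Returns the map index or -1. */
int uio_index_for_pgoff(const unsigned long *sizes, int nmaps,
                        unsigned long pgoff)
{
    for (int mi = 0; mi < nmaps; mi++) {
        if (sizes[mi] == 0)
            return -1;
        if (pgoff == (unsigned long)mi)
            return mi;
    }
    return -1;
}

/* Mirrors the size check in uio_mmap(): the mapping length rounded up to
 * pages may not exceed the region size rounded up to pages. */
int uio_length_ok(unsigned long length, unsigned long mem_size,
                  unsigned long page_size)
{
    unsigned long requested_pages = (length + page_size - 1) / page_size;
    unsigned long actual_pages = (mem_size + page_size - 1) / page_size;

    return requested_pages <= actual_pages;
}
```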

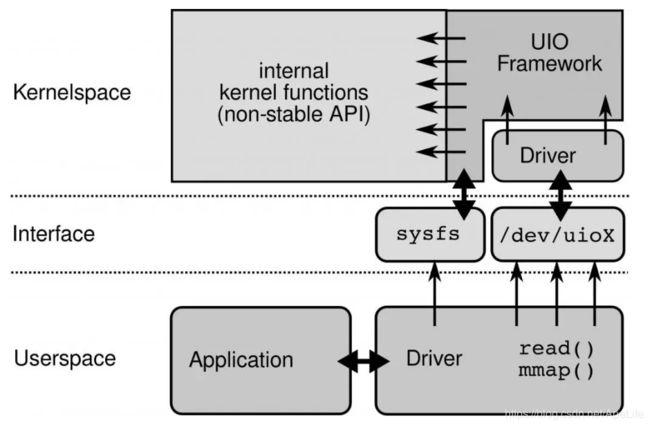

I. The DPDK UIO driver framework

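After the igb_uio driver is loaded, each time a NIC is bound to igb_uio a UIO device is created under /dev, for example /dev/uio1. The UIO device is an interface layer that exposes the PCI NIC's memory space and I/O space to user space, so accessing the UIO device from an application is equivalent to accessing the NIC. Concretely, when a NIC is bound to the driver, igb_uio records the NIC's memory and I/O regions in the UIO sysfs directory, for example in /sys/class/uio/uio1/maps, and the same entries are also reachable through the uio directory under the PCI device. The application can read the region information from either place and then call mmap() on the UIO device to map the NIC's physical memory into its virtual address space; accessing that virtual address range is equivalent to accessing the PCI device.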

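As the figure shows, the framework has three parts: the user-space poll mode driver (PMD), the igb_uio driver running in the kernel, and the Linux UIO framework. The PMD sends and receives packets directly from the NIC by polling, bypassing the kernel and its protocol stack and avoiding the cost of copying data between kernel and user space. The in-kernel igb_uio driver exposes the PCI NIC's memory and I/O space to user space and handles the NIC's hardware interrupt. The Linux UIO framework, implemented in drivers/uio/uio.c in the kernel source, provides the registration interface igb_uio calls (uio_register_device()) and implements the /dev/uioX file operations: uio_open opens the device, uio_release closes it, uio_read waits for and reads interrupt events, uio_write enables or disables the interrupt, and uio_mmap maps device memory. The UIO framework in turn relies on other kernel APIs.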

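The PMD accesses /dev/uioX through system calls such as read(), write() and mmap(). These enter the UIO framework's file operations, which call back into igb_uio where needed; for example, write() ends up in igb_uio's irqcontrol callback.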

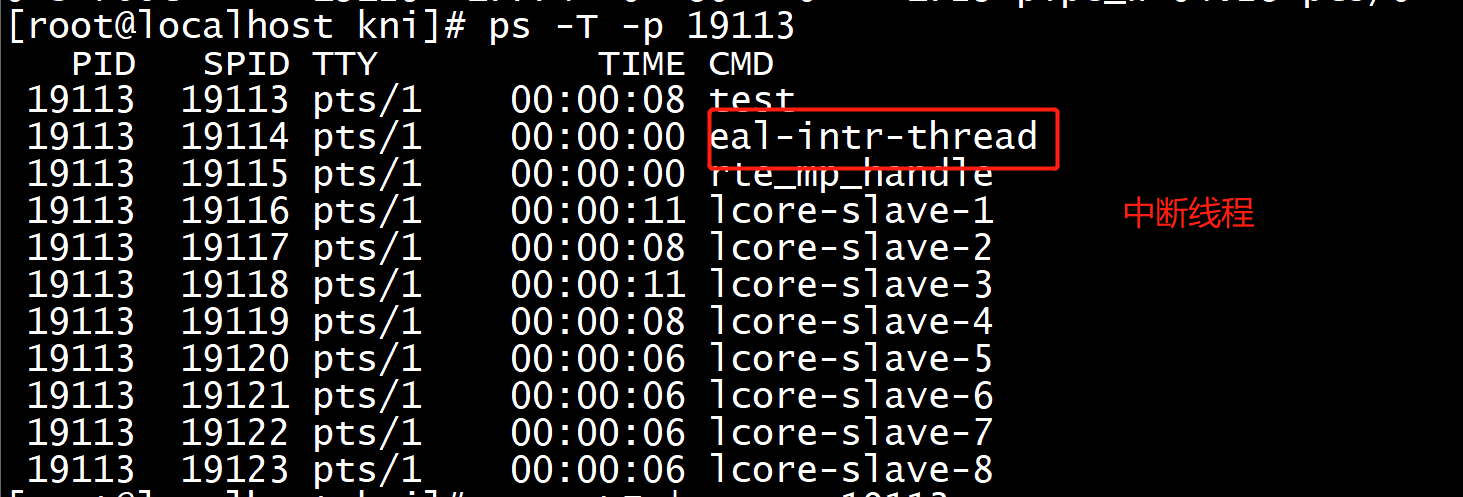

II. How PMD polling relates to UIO interrupts

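The PMD sends and receives packets by polling the NIC directly, bypassing the kernel and the protocol stack. So why is UIO needed at all? In some cases the application needs to learn about NIC state, such as link status, and that requires hardware interrupts. Hardware interrupts can only be taken in the kernel, so DPDK implements a small part of the interrupt handling in the in-kernel igb_uio driver (for example, counting interrupts) and then wakes up the /dev/uioX descriptor the application has registered with epoll. The application performs most of the interrupt handling, for example reading the NIC's status.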
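On the application side, waiting for such an interrupt comes down to waiting until /dev/uioX becomes readable and then reading the 4-byte event count. DPDK registers the descriptor with epoll, as described above; the sketch below uses poll() instead to stay short, and the function name is hypothetical.

```c
#include <fcntl.h>
#include <poll.h>
#include <stdint.h>
#include <unistd.h>

/* Hypothetical helper: wait up to timeout_ms for an interrupt on an open
 * /dev/uioX. Returns the cumulative event count (>= 0), -2 on timeout,
 * or -1 on error. */
int64_t uio_wait_event(int uio_fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = uio_fd, .events = POLLIN };
    int32_t count;
    int ret = poll(&pfd, 1, timeout_ms);

    if (ret < 0)
        return -1;
    if (ret == 0)
        return -2;                 /* no interrupt within the timeout */
    if (!(pfd.revents & POLLIN))
        return -1;                 /* POLLERR, POLLHUP or POLLNVAL */
    /* uio_read() accepts only reads of exactly 4 bytes */
    if (read(uio_fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return -1;
    return (uint32_t)count;
}
```

A real event loop calls this repeatedly; with igb_uio the interrupt may also need to be re-enabled through write() after each event.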

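A natural question: when packets arrive, do the resulting hardware interrupts also reach the /dev/uioX interrupt? They do not. The /dev/uioX interrupt carries only control interrupts; the data interrupts for packet reception and transmission never arrive here. Why can't a data interrupt wake the epoll event, and how does DPDK tell data interrupts from control interrupts? When the PMD enables interrupts through igb_intr_enable, it specifies an interrupt mask, for example E1000_ICR_LSC (link status change), E1000_ICR_RXQ0 (receive queue 0) or E1000_ICR_TXQ0 (transmit queue 0). A cause whose bit is not in the mask does not raise an interrupt. The DPDK PMD sets only E1000_ICR_LSC, so the RX/TX interrupts are disabled and only a link status change triggers the epoll event; packets arriving at the NIC do not wake it. These mask bits are defined in e1000_defines.h.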
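A small model of the masking argument, as a sketch: a cause can raise an interrupt only if its bit is set in the mask the PMD writes to IMS. The bit values below are our reading of the e1000 headers (E1000_ICR_LSC is bit 2, E1000_ICR_RXQ0 bit 20) and should be checked against the e1000_defines.h you build with; update_lsc_mask() is a hypothetical name for the igb_ethdev.c logic that ORs E1000_ICR_LSC into intr->mask or clears it.

```c
#include <stdint.h>

/* Bit values as we read them from e1000_defines.h; verify locally. */
#define E1000_ICR_LSC  0x00000004u  /* link status change */
#define E1000_ICR_RXQ0 0x00100000u  /* Rx queue 0 interrupt */

/* Hypothetical helper: set or clear the LSC bit in the interrupt mask
 * that is later written to the E1000_IMS register. */
uint32_t update_lsc_mask(uint32_t mask, int enable_lsc)
{
    if (enable_lsc)
        mask |= E1000_ICR_LSC;
    else
        mask &= ~E1000_ICR_LSC;
    return mask;
}

/* A cause can raise an interrupt only if its bit is set in the IMS mask. */
int cause_can_interrupt(uint32_t ims_mask, uint32_t cause)
{
    return (ims_mask & cause) != 0;
}
```

With only LSC enabled, a receive-queue cause never passes the mask, which is why packet arrival does not wake the epoll event.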

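Note also that when igb_uio registers its interrupt callback, it sets the handler to igbuio_pci_irqhandler; that is, it takes over the NIC's hardware interrupt, which would otherwise be handled by the kernel's regular NIC driver. Because the interrupt callback is taken over, when an interrupt occurs the Linux UIO framework wakes up the epoll event, and the application can read the interrupt event or perform control operations on the NIC. Taking over the interrupt callback matters only for these control operations; it has no effect on the PMD polling for packets. In other words, whether or not igb_uio intercepts the interrupt callback, PMD polling works the same way; the interception exists only so that the application can respond to hardware interrupts and perform control operations on the NIC.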

User space can also mask INTx directly through PCI configuration space; DPDK's EAL does this for uio_pci_generic:

```c
static int
uio_intx_intr_disable(const struct rte_intr_handle *intr_handle)
{
	unsigned char command_high;

	/* use UIO config file descriptor for uio_pci_generic;
	 * config byte 5 is the high byte of the PCI Command register */
	if (pread(intr_handle->uio_cfg_fd, &command_high, 1, 5) != 1) {
		RTE_LOG(ERR, EAL,
			"Error reading interrupts status for fd %d\n",
			intr_handle->uio_cfg_fd);
		return -1;
	}
	/* disable interrupts: set the Interrupt Disable bit (bit 10 of Command) */
	command_high |= 0x4;
	if (pwrite(intr_handle->uio_cfg_fd, &command_high, 1, 5) != 1) {
		RTE_LOG(ERR, EAL,
			"Error disabling interrupts for fd %d\n",
			intr_handle->uio_cfg_fd);
		return -1;
	}

	return 0;
}
```
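Why 0x4 at byte 5: the PCI Command register is the 16-bit field at configuration-space offset 4, so byte 5 is its high byte, and bit 2 of that byte is bit 10 of the register, the Interrupt Disable bit (PCI_COMMAND_INTX_DISABLE, 0x400, in linux/pci_regs.h). A sketch of the bit manipulation with hypothetical helper names; the enabling direction clears the same bit.

```c
#include <stdint.h>

#define PCI_CMD_INTX_DISABLE  0x0400u  /* bit 10 of the Command register */
#define CMD_HIGH_INTX_DISABLE 0x04u    /* the same bit within config byte 5 */

/* Hypothetical helper: new value for config byte 5 (Command high byte). */
uint8_t intx_command_high(uint8_t command_high, int disable)
{
    if (disable)
        return (uint8_t)(command_high | CMD_HIGH_INTX_DISABLE);
    return (uint8_t)(command_high & ~CMD_HIGH_INTX_DISABLE);
}

/* Is INTx masked, given the full 16-bit Command register value? */
int intx_disabled(uint16_t command)
{
    return (command & PCI_CMD_INTX_DISABLE) != 0;
}
```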

```
drivers/net/e1000/igb_ethdev.c:553:  E1000_WRITE_REG(hw, E1000_IMS, intr->mask);
drivers/net/e1000/igb_ethdev.c:2787: intr->mask |= E1000_ICR_LSC;
drivers/net/e1000/igb_ethdev.c:2789: intr->mask &= ~E1000_ICR_LSC;
drivers/net/e1000/igb_ethdev.c:5604: uint32_t tmpval, regval, intr_mask;
drivers/net/e1000/igb_ethdev.c:5652: intr_mask = RTE_LEN2MASK(intr_handle->nb_efd, uint32_t) <<
drivers/net/e1000/igb_ethdev.c:5656: intr_mask |= (1 << IGB_MSIX_OTHER_INTR_VEC);
drivers/net/e1000/igb_ethdev.c:5659: E1000_WRITE_REG(hw, E1000_EIAC, regval | intr_mask);
drivers/net/e1000/igb_ethdev.c:5663: E1000_WRITE_REG(hw, E1000_EIMS, regval | intr_mask);
drivers/net/e1000/igb_ethdev.c:5671: intr_mask = RTE_LEN2MASK(intr_handle->nb_efd, uint32_t) <<
drivers/net/e1000/igb_ethdev.c:5675: intr_mask |= (1 << IGB_MSIX_OTHER_INTR_VEC);
drivers/net/e1000/igb_ethdev.c:5678: E1000_WRITE_REG(hw, E1000_EIAM, regval | intr_mask);
```

```c
#define IXGBE_EIMC_RTX_QUEUE	IXGBE_EICR_RTX_QUEUE	/* RTx Queue Interrupt */
#define IXGBE_EIMC_FLOW_DIR	IXGBE_EICR_FLOW_DIR	/* FDir Exception */
#define IXGBE_EIMC_RX_MISS	IXGBE_EICR_RX_MISS	/* Packet Buffer Overrun */
#define IXGBE_EIMC_PCI		IXGBE_EICR_PCI		/* PCI Exception */
#define IXGBE_EIMC_MAILBOX	IXGBE_EICR_MAILBOX	/* VF to PF Mailbox Int */
#define IXGBE_EIMC_LSC		IXGBE_EICR_LSC		/* Link Status Change */
```

III. How the DPDK UIO driver is implemented

First, an overview of what the igb_uio driver does.

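(1) Operations on the UIO device itself: it creates the UIO device structure and registers a UIO device. This creates a device node under /dev, for example /dev/uiox, and a directory under /sys/class/uio, for example /sys/class/uio/uio1. Because the PCI device is passed as the parent when the UIO device is created, the uio directory also appears under the NIC's device directory, for example /sys/bus/pci/devices/0000:02:06.0/uio.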

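(2) It reserves the PCI NIC's physical memory and I/O regions and records them in the UIO device, which amounts to exposing those regions through the UIO device. Accessing the UIO device from user space is then equivalent to accessing the NIC.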

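(3) It registers a hardware interrupt callback in igb_uio. The in-kernel interrupt code should be as small as possible; most of the interrupt handling is done in user space.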
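The PCI address in these paths, 0000:02:06.0, has the form domain:bus:device.function, with every field in hexadecimal. A sketch of splitting it, using a hypothetical helper that returns the conventional bus/devfn encoding (bus << 8 | device << 3 | function) with the domain in the upper bits; the device number is limited to 5 bits and the function to 3.

```c
#include <stdio.h>

/* Hypothetical helper: parse "DDDD:BB:DD.F" and return
 * (domain << 16) | (bus << 8) | (device << 3) | function,
 * or -1 if the text is not a valid PCI address. */
long long pci_bdf_pack(const char *text)
{
    unsigned int domain, bus, dev, func;
    char extra;

    /* exactly four fields; a fifth (%c) match means trailing characters */
    if (sscanf(text, "%x:%x:%x.%x%c", &domain, &bus, &dev, &func, &extra) != 4)
        return -1;
    if (domain > 0xffff || bus > 0xff || dev > 0x1f || func > 0x7)
        return -1;
    return ((long long)domain << 16) | (bus << 8) | (dev << 3) | func;
}
```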

1. igb_uio driver initialization

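When the igb_uio module is loaded with insmod, its initialization function registers the driver with the kernel, passing the driver operations structure igbuio_pci_driver. Its probe callback runs when a NIC is bound to igb_uio: the driver sees that a device is being bound and probe is invoked. Likewise, when a NIC is unbound from igb_uio, remove is invoked.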

```c
static struct pci_driver igbuio_pci_driver = {
	.name = "igb_uio",
	.id_table = NULL,
	.probe = igbuio_pci_probe,	/* called when a PCI device is bound to igb_uio */
	.remove = igbuio_pci_remove,	/* called when a PCI device is unbound from igb_uio */
};

/* igb_uio driver initialization */
static int __init igbuio_pci_init_module(void)
{
	return pci_register_driver(&igbuio_pci_driver);
}
```

2. Driver probe

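As mentioned above, this callback runs when a NIC is bound to igb_uio. Let's walk through what it does. It calls into the Linux UIO framework and a number of kernel APIs; when reading this code, focus on the main flow and don't get distracted by the kernel APIs.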

2.1 Enabling the PCI device

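Before igb_uio can operate the PCI NIC, the device must be enabled (woken up). A driver must call pci_enable_device() before it can access any of the device's resources (I/O regions or interrupts). In other words, only after the device is enabled can igb_uio, and the application on top of it, access the NIC's memory or I/O space.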

int igbuio_pci_probe(struct pci_dev *dev, const struct pci_device_id *id)

{

//激活PCI设备,在驱动程序可以访问PCI设备的任何设备资源之前(I/O区域或者中断),驱动程序必须调用该函数

err = pci_enable_device(dev);

}

int pci_enable_device(struct pci_dev *dev)

{

//使得驱动能够访问pci设备的内存与io空间

return __pci_enable_device_flags(dev, IORESOURCE_MEM | IORESOURCE_IO);

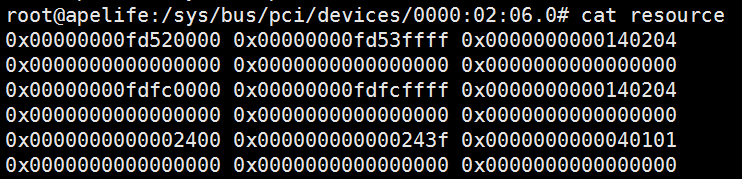

}2.2 为pci设备预留内存与io空间

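igb_uio uses the start address and size of each of the NIC's I/O and memory regions (its BARs, the same information shown in the resource file under /sys/bus/pci/devices/0000:02:06.0/) and reserves these regions for the device. The call does not allocate memory: it marks the address ranges as owned by igb_uio in the kernel's resource tree, so no other driver can claim them. User space can then reach these regions through /dev/uiox, which amounts to accessing the NIC's space.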

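```c
int igbuio_pci_probe(struct pci_dev *dev, const struct pci_device_id *id)
{
	/* ... */
	/* reserve the PCI device's regions under the name "igb_uio" */
	err = pci_request_regions(dev, "igb_uio");
	/* ... */
}

int _kc_pci_request_regions(struct pci_dev *dev, char *res_name)
{
	int i;

	/* walk the 6 standard BARs, using the start/length recorded for each
	 * (also visible in /sys/bus/pci/devices/0000:02:06.0/resource) */
	for (i = 0; i < 6; i++) {
		if (pci_resource_len(dev, i) == 0)
			continue;

		if (pci_resource_flags(dev, i) & IORESOURCE_IO) {
			/* reserve the BAR's I/O port range */
			if (!request_region(pci_resource_start(dev, i),
					    pci_resource_len(dev, i), res_name)) {
				pci_release_regions(dev);
				return -EBUSY;
			}
		} else if (pci_resource_flags(dev, i) & IORESOURCE_MEM) {
			/* reserve the BAR's physical memory range */
			if (!request_mem_region(pci_resource_start(dev, i),
						pci_resource_len(dev, i), res_name)) {
				pci_release_regions(dev);
				return -EBUSY;
			}
		}
	}
	return 0;
}
```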
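The start, end and flags used in this loop are also visible from user space: each line of /sys/bus/pci/devices/<address>/resource describes one BAR as three hexadecimal numbers (start, end, flags). A sketch with hypothetical helper names that classifies a line the same way the loop does, using the IORESOURCE_IO (0x100) and IORESOURCE_MEM (0x200) flag values from linux/ioport.h.

```c
#include <stdio.h>

#define IORESOURCE_IO  0x00000100ULL  /* values from linux/ioport.h */
#define IORESOURCE_MEM 0x00000200ULL

/* Parse one "start end flags" line; returns 0 on success, -1 otherwise. */
static int parse_resource_line(const char *line, unsigned long long *start,
                               unsigned long long *end,
                               unsigned long long *flags)
{
    return sscanf(line, "%llx %llx %llx", start, end, flags) == 3 ? 0 : -1;
}

/* Hypothetical helper: BAR length (end - start + 1), or 0 if unused. */
unsigned long long bar_length(const char *line)
{
    unsigned long long start, end, flags;

    if (parse_resource_line(line, &start, &end, &flags) != 0 ||
        start == 0 || end < start)
        return 0;
    return end - start + 1;
}

/* Hypothetical helper: 'M' for a memory BAR, 'I' for I/O ports, else 0. */
char bar_kind(const char *line)
{
    unsigned long long start, end, flags;

    if (parse_resource_line(line, &start, &end, &flags) != 0)
        return 0;
    if (flags & IORESOURCE_MEM)
        return 'M';
    if (flags & IORESOURCE_IO)
        return 'I';
    return 0;
}
```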

2.3 Setting up DMA for the PCI NIC

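Bus mastering is enabled so the NIC can perform DMA. The user-space PMD can then poll for packets that the NIC has written to memory by DMA, and hand packets to the NIC to be transmitted by DMA.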

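```c
int igbuio_pci_probe(struct pci_dev *dev, const struct pci_device_id *id)
{
	/* ... */
	/* enable bus mastering so the NIC can DMA, letting the PMD receive
	 * and send packets directly through DMA */
	pci_set_master(dev);

	/* allow DMA addresses anywhere in the 64-bit address space */
	err = pci_set_dma_mask(dev, DMA_BIT_MASK(64));
	err = pci_set_consistent_dma_mask(dev, DMA_BIT_MASK(64));
	/* ... */
}
```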
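DMA_BIT_MASK(64) means the NIC may be given any 64-bit bus address. A user-space restatement of the kernel macro's values (hypothetical function names): the kernel definition special-cases 64 because shifting a 64-bit value by 64 is undefined in C. The second function checks whether a buffer lies entirely within a given mask.

```c
#include <stdint.h>

/* Same values as the kernel's DMA_BIT_MASK(n) for 0 <= n <= 64. */
uint64_t dma_bit_mask(unsigned int n)
{
    return n >= 64 ? ~0ULL : ((1ULL << n) - 1);
}

/* Hypothetical check: can a device limited to `mask` reach a buffer of
 * len bytes at bus address addr? Written to avoid overflow. */
int dma_range_ok(uint64_t addr, uint64_t len, uint64_t mask)
{
    return len != 0 && addr <= mask && len - 1 <= mask - addr;
}
```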

2.4 Exposing the PCI NIC's physical and I/O space through the UIO device

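The NIC's physical memory regions and I/O regions are stored in the mem and port members of the UIO device structure struct uio_info, so the UIO device knows where the NIC's memory and I/O space are. Accessing this physical and I/O space through the UIO device from user space is then equivalent to accessing the PCI device's space. In essence, the NIC's regions are exposed through the UIO device.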

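```c
int igbuio_pci_probe(struct pci_dev *dev, const struct pci_device_id *id)
{
	struct rte_uio_pci_dev *udev;
	/* ... */
	/* hand the PCI memory and port regions over to the UIO device */
	err = igbuio_setup_bars(dev, &udev->info);
	/* ... */
}

static int igbuio_setup_bars(struct pci_dev *dev, struct uio_info *info)
{
	int i, iom = 0, iop = 0, ret = 0;
	unsigned long flags;
	static const char *bar_names[] = {
		"BAR0", "BAR1", "BAR2", "BAR3", "BAR4", "BAR5",
	};

	/* put each populated BAR into the next free uio_info slot */
	for (i = 0; i != sizeof(bar_names) / sizeof(bar_names[0]); i++) {
		if (pci_resource_len(dev, i) != 0 &&
		    pci_resource_start(dev, i) != 0) {
			flags = pci_resource_flags(dev, i);
			if (flags & IORESOURCE_MEM) {
				/* expose a memory BAR as info->mem[iom] */
				ret = igbuio_pci_setup_iomem(dev, info, iom,
							     i, bar_names[i]);
				if (ret != 0)
					return ret;
				iom++;
			} else if (flags & IORESOURCE_IO) {
				/* expose an I/O-port BAR as info->port[iop] */
				ret = igbuio_pci_setup_ioport(dev, info, iop,
							      i, bar_names[i]);
				if (ret != 0)
					return ret;
				iop++;
			}
		}
	}

	return (iom != 0 || iop != 0) ? ret : -ENOENT;
}
```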
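A consequence worth spelling out: the slot index passed to igbuio_pci_setup_iomem counts populated memory BARs only (assuming, as in the DPDK source, that it advances once per memory BAR), so mapN is not necessarily BAR N. The sketch below uses a hypothetical helper to compute the map index of a BAR from the BAR flags, treating flags of 0 as an unused BAR; user space would then pass index times the page size to mmap().

```c
#define IORESOURCE_IO  0x00000100UL  /* values from linux/ioport.h */
#define IORESOURCE_MEM 0x00000200UL

/* Hypothetical helper: which uio map (mapN) a memory BAR lands in, given
 * the flags of each BAR in order (0 = unused). Returns -1 if `bar` is
 * not a memory BAR. */
int bar_to_map_index(const unsigned long *bar_flags, int nbars, int bar)
{
    int map = 0;

    if (bar < 0 || bar >= nbars || !(bar_flags[bar] & IORESOURCE_MEM))
        return -1;
    for (int i = 0; i < bar; i++)
        if (bar_flags[i] & IORESOURCE_MEM)
            map++;
    return map;
}
```

For a device with memory BARs 0 and 2 and an I/O BAR 1, BAR 2 is exposed as map1, mapped at offset 1 * page size.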

========================================================

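igbuio_pci_setup_iomem fills in uio_info (one mem[] entry per memory BAR).

uio_register_device calls uio_dev_add_attributes, which creates the sysfs files (maps/mapN/addr, size, offset) from uio_info.

uio_mmap selects mem[N] from the mmap offset (N * page size) and, for a UIO_MEM_PHYS region, maps it with remap_pfn_range.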

```c
static int igbuio_pci_setup_iomem(struct pci_dev *dev, struct uio_info *info,
				  int n, int pci_bar, const char *name)
{
	unsigned long addr, len;
	void *internal_addr;

	if (n >= ARRAY_SIZE(info->mem))
		return -EINVAL;

	addr = pci_resource_start(dev, pci_bar);
	len = pci_resource_len(dev, pci_bar);
	if (addr == 0 || len == 0)
		return -1;
	if (wc_activate == 0) {
		/* kernel-side mapping, kept in internal_addr */
		internal_addr = ioremap(addr, len);
		if (internal_addr == NULL)
			return -1;
	} else {
		internal_addr = NULL;
	}
	info->mem[n].name = name;
	info->mem[n].addr = addr;		/* physical address, shown in maps/mapN/addr */
	info->mem[n].internal_addr = internal_addr;
	info->mem[n].size = len;
	info->mem[n].memtype = UIO_MEM_PHYS;	/* uio_mmap() will use remap_pfn_range() */
	return 0;
}
```

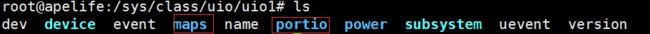

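Once the PCI device's physical memory and I/O space are stored in the mem and port members of struct uio_info, registering the UIO device with uio_register_device (described below) writes the information in mem and port to the maps and portio directories under /sys/class/uio/uiox. By reading the files in these two directories, user space can reach the PCI device's physical memory and I/O space; this is the point where the regions are actually exposed to user space.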

A quick look at the kernel source shows that __uio_register_device calls uio_dev_add_attributes, which writes the NIC's physical memory and I/O regions to these files.

```c
static int uio_dev_add_attributes(struct uio_device *idev)
{
	/* simplified excerpt: the kernel creates each directory only once,
	 * when the first non-empty region is found */
	for (mi = 0; mi < MAX_UIO_MAPS; mi++) {
		/* record the PCI memory regions under /sys/class/uio/uio1/maps */
		mem = &idev->info->mem[mi];
		idev->map_dir = kobject_create_and_add("maps", &idev->dev->kobj);
	}

	for (pi = 0; pi < MAX_UIO_PORT_REGIONS; pi++) {
		/* record the PCI I/O port regions under /sys/class/uio/uio1/portio */
		port = &idev->info->port[pi];
		idev->portio_dir = kobject_create_and_add("portio", &idev->dev->kobj);
	}
	/* ... */
}
```

2.5 Setting up the UIO device's interrupt

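igb_uio registers the UIO device's interrupt callback, which is the interrupt takeover mentioned above. Once the interrupt is taken over, a firing interrupt does not keep re-entering the kernel handler (in legacy INTx mode igbuio_pci_irqhandler masks it until user space re-enables it); this is what allows the interrupt to be processed in user space. Note again that this concerns only control interrupts, not the data interrupts for packet RX/TX. Data interrupts never arrive here, because when the PMD enables interrupts it sets only the link-status-change bit in the mask, not the RX/TX bits.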

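```c
int igbuio_pci_probe(struct pci_dev *dev, const struct pci_device_id *id)
{
	/* ... */
	/* fill in the UIO info */
	udev->info.name = "igb_uio";
	udev->info.version = "0.1";
	/* entry point for the (control) interrupt; replaces the normal handler */
	udev->info.handler = igbuio_pci_irqhandler;
	/* called when user space enables/disables the interrupt via write() */
	udev->info.irqcontrol = igbuio_pci_irqcontrol;
	/* ... */
}
```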

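```c
static irqreturn_t igbuio_pci_irqhandler(int irq, struct uio_info *info)
{
	struct rte_uio_pci_dev *udev = info->priv;

	/* legacy INTx may be shared: claim the interrupt only if this device
	 * raised it, and mask it in hardware until user space re-enables it */
	if (udev->mode == RTE_INTR_MODE_LEGACY &&
	    !pci_check_and_mask_intx(udev->pdev))
		return IRQ_NONE;

	/* on IRQ_HANDLED the UIO framework wakes up processes waiting for the
	 * UIO interrupt, so the epoll event registered for it fires */
	/* Message signal mode, no share IRQ and automasked */
	return IRQ_HANDLED;
}
```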

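```c
static int igbuio_pci_irqcontrol(struct uio_info *info, s32 irq_state)
{
	struct rte_uio_pci_dev *udev = info->priv;
	struct pci_dev *pdev = udev->pdev;
	struct msi_desc *desc;

	/* ... */
	/* enable or disable the interrupt through kernel APIs */
	if (udev->mode == RTE_INTR_MODE_LEGACY) {
		pci_intx(pdev, !!irq_state);
	} else if (udev->mode == RTE_INTR_MODE_MSIX) {
		list_for_each_entry(desc, &pdev->msi_list, list)
			igbuio_msix_mask_irq(desc, irq_state);
	}
	/* ... */
	return 0;
}
```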
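From user space, igbuio_pci_irqcontrol is reached by writing a 4-byte integer to /dev/uioX: uio_write() requires exactly sizeof(s32) bytes and passes the value to the irqcontrol callback. A minimal sketch with a hypothetical helper name; in legacy INTx mode this is how the interrupt masked by igbuio_pci_irqhandler gets re-enabled.

```c
#include <fcntl.h>
#include <stdint.h>
#include <unistd.h>

/* Hypothetical helper: enable (1) or disable (0) the device interrupt.
 * uio_write() accepts exactly sizeof(int32_t) bytes and hands the value
 * to the driver's irqcontrol callback. Returns 0 on success, -1 on error. */
int uio_irq_set(int uio_fd, int enable)
{
    int32_t value = enable ? 1 : 0;

    if (write(uio_fd, &value, sizeof(value)) != (ssize_t)sizeof(value))
        return -1;
    return 0;
}
```

A typical loop alternates between waiting for an event on the descriptor and calling uio_irq_set(fd, 1) to unmask the next interrupt.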

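When uio_register_device (below) registers the UIO device, it installs the UIO framework's hardware interrupt entry point, uio_interrupt. That callback calls the handler registered by igb_uio, igbuio_pci_irqhandler. igbuio_pci_irqhandler normally returns IRQ_HANDLED, so processes blocked on the UIO device's interrupt are woken and the UIO interrupt event the application registered with epoll is dispatched. For example, the e1000 user-space PMD registers the interrupt handler eth_igb_interrupt_handler in eth_igb_dev_init; that handler then runs and reads the NIC's status.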

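To summarize the interrupt flow: the Linux UIO interrupt callback fires ----> igb_uio's interrupt callback runs ----> the UIO interrupt callback registered by user space is woken up.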

```c
int __uio_register_device(struct module *owner, struct device *parent,
			  struct uio_info *info)
{
	/* ... */
	/* register the UIO framework's interrupt entry point */
	ret = request_irq(idev->info->irq, uio_interrupt,
			  idev->info->irq_flags, idev->info->name, idev);
	/* ... */
}
```

```c
static irqreturn_t uio_interrupt(int irq, void *dev_id)
{
	struct uio_device *idev = (struct uio_device *)dev_id;
	/* call the handler registered by igb_uio */
	irqreturn_t ret = idev->info->handler(irq, idev->info);

	/* wake every process blocked on the UIO interrupt; the epoll event
	 * registered for the UIO descriptor fires */
	if (ret == IRQ_HANDLED)
		uio_event_notify(idev->info);

	return ret;
}
```

2.6 Registering the UIO device

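Finally, the UIO device is registered. This creates the device node under /dev, for example /dev/uio1, and a directory under /sys/class/uio, for example /sys/class/uio/uio1. Because the NIC's PCI device is passed as the parent, the device also appears under the NIC's directory, for example /sys/bus/pci/devices/0000:02:06.0/uio.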

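Registration also performs the step described above: the NIC memory and I/O regions recorded in the UIO device are written out to sysfs files so that user space can access the NIC's space. It also registers the UIO framework's handler for the NIC's hardware interrupt.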

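```c
int __uio_register_device(struct module *owner, struct device *parent,
			  struct uio_info *info)
{
	/* ... */
	/* create the UIO device /dev/uiox */
	idev->dev = device_create(uio_class->class, parent,
				  MKDEV(uio_major, idev->minor), idev,
				  "uio%d", idev->minor);

	/* write the NIC memory and I/O regions recorded in the UIO device
	 * to sysfs files */
	ret = uio_dev_add_attributes(idev);

	/* register the UIO framework's interrupt entry point */
	ret = request_irq(idev->info->irq, uio_interrupt,
			  idev->info->irq_flags, idev->info->name, idev);
	/* ... */
}
```

This completes the analysis of how the UIO device driver relates to the PMD. The next article will analyze in detail how the user-space PMD sends and receives packets.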