https://luohao-brian.gitbooks.io/interrupt-virtualization/content/kvm-run-processzhi-qemu-he-xin-liu-cheng.html

kvm_cpu_thread

void *kvm_cpu_thread(void *data)
{
    struct kvm *kvm = (struct kvm *)data;
    int ret = 0;

    kvm_reset_vcpu(kvm->vcpus);

    while (1) {
        printf("KVM start run\n");
        ret = ioctl(kvm->vcpus->vcpu_fd, KVM_RUN, 0);
        if (ret < 0) {
            fprintf(stderr, "KVM_RUN failed\n");
            exit(1);
        }

        switch (kvm->vcpus->kvm_run->exit_reason) {
        case KVM_EXIT_UNKNOWN:
            printf("KVM_EXIT_UNKNOWN\n");
            break;
        case KVM_EXIT_DEBUG:
            printf("KVM_EXIT_DEBUG\n");
            break;
        case KVM_EXIT_IO:
            printf("KVM_EXIT_IO\n");
            printf("out port: %d, data: %d\n",
                   kvm->vcpus->kvm_run->io.port,
                   *(int *)((char *)(kvm->vcpus->kvm_run) + kvm->vcpus->kvm_run->io.data_offset));
            sleep(1);
            break;
        case KVM_EXIT_MMIO:
            printf("KVM_EXIT_MMIO\n");
            break;
        case KVM_EXIT_INTR:
            printf("KVM_EXIT_INTR\n");
            break;
        case KVM_EXIT_SHUTDOWN:
            printf("KVM_EXIT_SHUTDOWN\n");
            goto exit_kvm;
            break;
        default:
            printf("KVM PANIC\n");
            goto exit_kvm;
        }
    }

exit_kvm:
    return 0;
}

kvm->vcpus = kvm_init_vcpu(kvm, 0, kvm_cpu_thread);

struct vcpu *kvm_init_vcpu(struct kvm *kvm, int vcpu_id, void *(*fn)(void *))
{
    struct vcpu *vcpu = malloc(sizeof(struct vcpu));

    /* use the id passed by the caller instead of a hard-coded 0 */
    vcpu->vcpu_id = vcpu_id;
    vcpu->vcpu_fd = ioctl(kvm->vm_fd, KVM_CREATE_VCPU, vcpu->vcpu_id);
    if (vcpu->vcpu_fd < 0) {
        perror("can not create vcpu");
        return NULL;
    }

    /* the size of the shared kvm_run area is queried on the /dev/kvm descriptor */
    vcpu->kvm_run_mmap_size = ioctl(kvm->dev_fd, KVM_GET_VCPU_MMAP_SIZE, 0);
    if (vcpu->kvm_run_mmap_size < 0) {
        perror("can not get vcpu mmsize");
        return NULL;
    }
    printf("%d\n", vcpu->kvm_run_mmap_size);

    /* map kvm_run from the vcpu descriptor so that user space and KVM share it */
    vcpu->kvm_run = mmap(NULL, vcpu->kvm_run_mmap_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpu->vcpu_fd, 0);
    if (vcpu->kvm_run == MAP_FAILED) {
        perror("can not mmap kvm_run");
        return NULL;
    }

    vcpu->vcpu_thread_func = fn;
    return vcpu;
}

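void kvm_run_vm(struct kvm *kvm)
{
    int i = 0;

    /* start one thread per vcpu, each with its own thread handle */
    for (i = 0; i < kvm->vcpu_number; i++) {
        if (pthread_create(&(kvm->vcpus[i].vcpu_thread), (const pthread_attr_t *)NULL, kvm->vcpus[i].vcpu_thread_func, kvm) != 0) {
            perror("can not create kvm thread");
            exit(1);
        }
    }

    /* wait for every vcpu thread, not only the first one */
    for (i = 0; i < kvm->vcpu_number; i++)
        pthread_join(kvm->vcpus[i].vcpu_thread, NULL);
}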

QEMU core flow

Phase 1: Argument parsing

The QEMU version used here is qemu-kvm-1.2.0. The command that starts a virtual machine with QEMU is:

$sudo /usr/local/kvm/bin/qemu-system-x86_64 -hda vdisk_linux.img -m 1024

This launches the qemu-system-x86_64 program, whose entry point is:

int main(int argc, char **argv, char **envp) <------file: vl.c,line: 2345

In the first phase, main() parses the arguments passed on the command line. They fall into the following groups:

QEMU_OPTION_M: machine type and architecture

QEMU_OPTION_hda/mtdblock/pflash: storage media

QEMU_OPTION_numa: NUMA topology

QEMU_OPTION_kernel: kernel image

QEMU_OPTION_initrd: initramdisk

QEMU_OPTION_append: kernel boot parameters

QEMU_OPTION_net/netdev: networking

QEMU_OPTION_smp: SMP

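Phase 2: Creating the VM

The VM is created through configure_accelerator()->kvm_init() (file: kvm-all.c, line: 1281).

kvm_init() first opens /dev/kvm to obtain kvmfd, the first of the three key descriptors. It then verifies the API version with KVM_GET_API_VERSION, and finally creates a VM object with KVM_CREATE_VM, which returns the second of the three descriptors: the VM descriptor, vmfd.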

s->fd = qemu_open("/dev/kvm", O_RDWR); kvm_init()/line: 1309

ret = kvm_ioctl(s, KVM_GET_API_VERSION, 0); kvm_init()/line: 1316

s->vmfd = kvm_ioctl(s, KVM_CREATE_VM, 0); kvm_init()/line: 1339

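Phase 3: Initializing the VM

After the command-line arguments have been parsed and the related subsystems initialized, QEMU looks up the matching machine type and runs the third-phase initialization: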

machine->init(ram_size, boot_devices, kernel_filename, kernel_cmdline, initrd_filename, cpu_model);

file: vl.c, line: 3651

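The arguments are values parsed from the command line: the RAM size, the kernel image file name, the kernel boot parameters, the initramdisk file name, the CPU model, and so on.

With the default machine type, the init function is pc_init_pci(), which leads through the following chain of calls: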

pc_init_pci() file: pc_piix.c, line: 294

--->pc_init1() file: pc_piix.c, line: 123

--->pc_cpus_init() file: pc.c, line: 941

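This is where the smp option given on the command line takes effect: QEMU runs the CPU initialization n times according to the configured CPU count, which yields n execution entities, one per core.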

void pc_cpus_init(const char *cpu_model)

{

int i;

/* init CPUs */

for(i = 0; i < smp_cpus; i++) {

pc_new_cpu(cpu_model);

}

}

CPU initialization continues:

pc_new_cpu() file: hw/pc.c, line: 915

--->cpu_x86_init() file: target-i386/helper.c, line: 1150

--->x86_cpu_realize() file: target-i386/cpu.c, line: 1767

--->qemu_init_vcpu() file: cpus.c, line: 1039

--->qemu_kvm_start_vcpu() file: cpus.c, line: 1011

qemu_kvm_start_vcpu is an important function: it shows what actually executes the VM.

Phase 4: VM RUN

static void qemu_kvm_start_vcpu(CPUArchState *env) <--------file: cpus.c, line: 1011

{

CPUState *cpu = ENV_GET_CPU(env);

......

qemu_cond_init(env->halt_cond);

qemu_thread_create(cpu->thread, qemu_kvm_cpu_thread_fn, env, QEMU_THREAD_JOINABLE);

......

}

void qemu_thread_create(QemuThread *thread,

void *(*start_routine)(void*),

void *arg, int mode) <--------file: qemu-thread-posix.c, line: 118

{

......

err = pthread_attr_init(&attr);

......

err = pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_DETACHED);

......

pthread_sigmask(SIG_SETMASK, &set, &oldset);

......

pthread_create(&thread->thread, &attr, start_routine, arg);

......

pthread_attr_destroy(&attr);

}

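As the code shows, what actually executes the VM is a set of POSIX threads created by the QEMU process, and the thread function is qemu_kvm_cpu_thread_fn.

Inside that thread function, kvm_init_vcpu() uses KVM_CREATE_VCPU to create the third of the three descriptors: the vcpu descriptor, vcpufd.

The thread then enters a while (1) loop that calls kvm_cpu_exec() over and over.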

static void *qemu_kvm_cpu_thread_fn(void *arg) file: cpus.c, line: 732

{

......

r = kvm_init_vcpu(env); <--------file: kvm-all.c, line: 213

......

qemu_kvm_init_cpu_signals(env);

/* signal CPU creation */

env->created = 1;

qemu_cond_signal(&qemu_cpu_cond);

while (1) {

if (cpu_can_run(env)) {

r = kvm_cpu_exec(env); <--------file: kvm-all.c, line: 1550

if (r == EXCP_DEBUG) {

cpu_handle_guest_debug(env);

}

}

qemu_kvm_wait_io_event(env);

}

return NULL;

}

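kvm_cpu_exec() in turn contains a do { } while (ret == 0) loop. The loop body starts the VM with KVM_RUN, at which point execution enters the KVM kernel code and waits for it to return. It then handles the exit according to the reported reason and finally returns the result. Because the caller shown above is itself a loop, execution soon re-enters this function, and all CPU work for the VM takes place inside these loops.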

int kvm_cpu_exec(CPUArchState *env)

{

do {

run_ret = kvm_vcpu_ioctl(env, KVM_RUN, 0); <-------- calls KVM and enters the kernel

// KVM has returned; execution is back in QEMU

switch (run->exit_reason) {

case KVM_EXIT_IO:

kvm_handle_io();

......

case KVM_EXIT_MMIO:

cpu_physical_memory_rw();

......

case KVM_EXIT_IRQ_WINDOW_OPEN:

ret = EXCP_INTERRUPT;

......

case KVM_EXIT_SHUTDOWN:

ret = EXCP_INTERRUPT;

......

case KVM_EXIT_UNKNOWN:

ret = -1;

......

case KVM_EXIT_INTERNAL_ERROR:

ret = kvm_handle_internal_error(env, run);

......

default:

ret = kvm_arch_handle_exit(env, run);

......

}

} while (ret == 0);

env->exit_request = 0;

return ret;

}
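The shape of this loop can be tried out without /dev/kvm. The following is a minimal sketch, not QEMU code: fake_kvm_run(), struct fake_vcpu, the EXIT_* values and RET_INTERRUPT are hypothetical stand-ins for the KVM_RUN ioctl, the vcpu state, the KVM_EXIT_* reasons and EXCP_INTERRUPT. It only illustrates that the loop keeps re-entering the "guest" while an exit was handled with ret == 0 and leaves as soon as a handler sets a non-zero result.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for the KVM_EXIT_* reasons used above. */
enum exit_reason { EXIT_IO, EXIT_MMIO, EXIT_SHUTDOWN };

/* Hypothetical stand-in for EXCP_INTERRUPT. */
#define RET_INTERRUPT 1

/* A scripted "guest": every call to fake_kvm_run() yields the next exit. */
struct fake_vcpu {
    const enum exit_reason *script;
    size_t len;
    size_t pos;
    int io_handled;   /* number of I/O or MMIO exits serviced in "user space" */
};

/* Plays the role of the KVM_RUN ioctl: returns when the "guest" exits. */
static enum exit_reason fake_kvm_run(struct fake_vcpu *v)
{
    assert(v->pos < v->len);
    return v->script[v->pos++];
}

/* Same control flow as the do { } while (ret == 0) loop above. */
int fake_cpu_exec(struct fake_vcpu *v)
{
    int ret;

    do {
        switch (fake_kvm_run(v)) {
        case EXIT_IO:
        case EXIT_MMIO:
            v->io_handled++;      /* device emulation would happen here */
            ret = 0;              /* handled: go back into the guest */
            break;
        case EXIT_SHUTDOWN:
        default:
            ret = RET_INTERRUPT;  /* leave the loop, let the caller decide */
            break;
        }
    } while (ret == 0);

    return ret;
}
```

With a script of three I/O-type exits followed by a shutdown, fake_cpu_exec() services the three exits inside one call and returns only when the shutdown exit arrives.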

Conclusion

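To summarize the core flow of a KVM run inside QEMU:

- Parse the arguments.

- Create the three key descriptors (kvmfd, vmfd, vcpufd) and perform the related initialization, which sets up what the VM needs in order to run.

- Start n POSIX threads, one per configured CPU, to run the VM. The VM's execution environment therefore originates in threads created by QEMU.

- Start the VM through the KVM_RUN API that KVM provides. From here execution enters kernel space, and QEMU waits for the call to return.

- Loop back into the run phase again and again.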

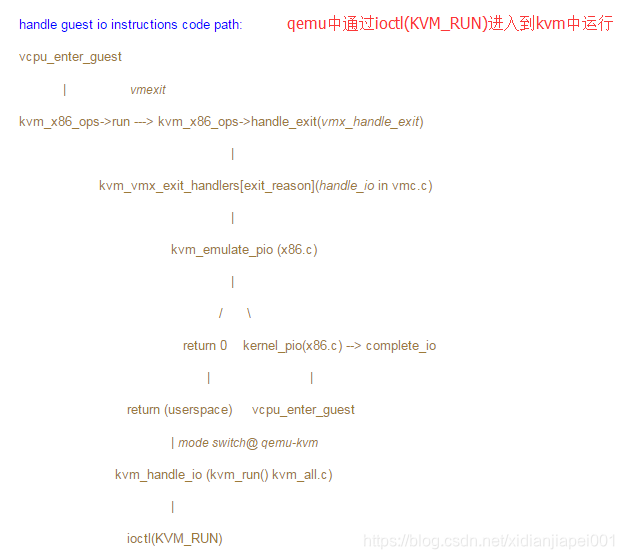

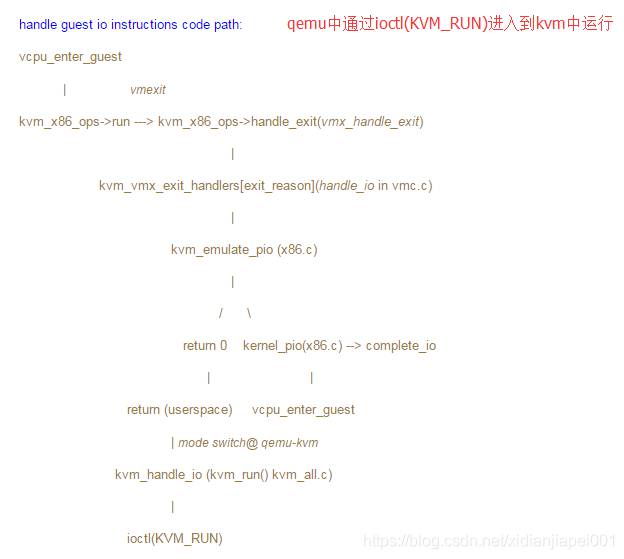

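KVM core flow

QEMU starts KVM by calling a series of interfaces that KVM provides. QEMU's entry point is main() in vl.c, which initializes KVM by calling kvm_init and machine->init. machine->init creates the vcpus, each backed by one thread whose function is qemu_kvm_cpu_thread_fn. That thread eventually calls kvm_cpu_exec, which calls kvm_vcpu_ioctl to switch into KVM. When KVM returns, execution continues with the code after kvm_vcpu_ioctl, which checks exit_reason and handles it accordingly.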

int kvm_cpu_exec(CPUState *cpu) --> run_ret = kvm_vcpu_ioctl(cpu, KVM_RUN, 0);

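When the argument is KVM_RUN, execution enters KVM and runs __vcpu_run, which eventually calls vcpu_enter_guest. vcpu_enter_guest calls kvm_x86_ops->run(vcpu), which on Intel processors is implemented by vmx_vcpu_run. After setting up the register state, vmx_vcpu_run executes VMLAUNCH or VMRESUME to enter the guest VM. When a VM exit occurs, execution continues from that point.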

static void vmx_vcpu_run(struct kvm_vcpu *vcpu)
{
    /* After setting up the register state, vmx_vcpu_run executes VMLAUNCH or
       VMRESUME to enter the guest VM; when a VM exit occurs, execution
       continues from here. */
    asm(
        /* Enter guest mode */
        "jne .Llaunched \n\t"
        __ex(ASM_VMX_VMLAUNCH) "\n\t"
        "jmp .Lkvm_vmx_return \n\t"
        ".Llaunched: " __ex(ASM_VMX_VMRESUME) "\n\t"
        ".Lkvm_vmx_return: "
        ......
    );

    vmx->launched = 1;

    /* When the guest VM performs I/O that needs device access,
       a VM exit is triggered and control returns to vmx_vcpu_run. */
    vmx_complete_interrupts(vmx);
}

static int vcpu_run(struct kvm_vcpu *vcpu)
{
    int r;
    struct kvm *kvm = vcpu->kvm;

    vcpu->srcu_idx = srcu_read_lock(&kvm->srcu);

    for (;;) {
        if (kvm_vcpu_running(vcpu))
            r = vcpu_enter_guest(vcpu); // enter the guest
        else
            r = vcpu_block(kvm, vcpu);
        if (r <= 0)
            break;

        clear_bit(KVM_REQ_PENDING_TIMER, &vcpu->requests);
        if (kvm_cpu_has_pending_timer(vcpu))
            kvm_inject_pending_timer_irqs(vcpu);

        if (dm_request_for_irq_injection(vcpu) &&
            kvm_vcpu_ready_for_interrupt_injection(vcpu)) {
            r = 0;
            vcpu->run->exit_reason = KVM_EXIT_IRQ_WINDOW_OPEN;
            ++vcpu->stat.request_irq_exits;
            break;
        }

        kvm_check_async_pf_completion(vcpu);

        if (signal_pending(current)) {
            r = -EINTR;
            vcpu->run->exit_reason = KVM_EXIT_INTR;
            ++vcpu->stat.signal_exits;
            break;
        }
        if (need_resched()) {
            srcu_read_unlock(&kvm->srcu, vcpu->srcu_idx);
            cond_resched();
            vcpu->srcu_idx = srcu_read_lock(&kvm->srcu);
        }
    }

    srcu_read_unlock(&kvm->srcu, vcpu->srcu_idx);

    return r;
}

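When the guest VM performs an I/O operation that needs device access, a VM exit is triggered and control returns to vmx_vcpu_run. The VMX code saves the VMCS state, records VM_EXIT_REASON, and returns to its caller, vcpu_enter_guest.

vmx_complete_interrupts --> vmx->exit_reason = vmcs_read32(VM_EXIT_REASON);

Back in vcpu_enter_guest, the end of the function calls r = kvm_x86_ops->handle_exit(vcpu), which corresponds to vmx_handle_exit.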

static int vmx_handle_exit(struct kvm_vcpu *vcpu)

{

struct vcpu_vmx *vmx = to_vmx(vcpu);

u32 exit_reason = vmx->exit_reason;

u32 vectoring_info = vmx->idt_vectoring_info;

...

if (exit_reason < kvm_vmx_max_exit_handlers

&& kvm_vmx_exit_handlers[exit_reason])

return kvm_vmx_exit_handlers[exit_reason](vcpu);

else {

vcpu->run->exit_reason = KVM_EXIT_UNKNOWN;

vcpu->run->hw.hardware_exit_reason = exit_reason;

}

return 0;

}

vmx_handle_exit calls kvm_vmx_exit_handlers[exit_reason](vcpu), which selects a function according to exit_reason. The table is defined as follows:

static int (*const kvm_vmx_exit_handlers[])(struct kvm_vcpu *vcpu) = {

[EXIT_REASON_EXCEPTION_NMI] = handle_exception,

[EXIT_REASON_EXTERNAL_INTERRUPT] = handle_external_interrupt,

[EXIT_REASON_TRIPLE_FAULT] = handle_triple_fault,

[EXIT_REASON_NMI_WINDOW] = handle_nmi_window,

[EXIT_REASON_IO_INSTRUCTION] = handle_io,

[EXIT_REASON_CR_ACCESS] = handle_cr,

[EXIT_REASON_DR_ACCESS] = handle_dr,

[EXIT_REASON_CPUID] = handle_cpuid,

[EXIT_REASON_MSR_READ] = handle_rdmsr,

[EXIT_REASON_MSR_WRITE] = handle_wrmsr,

[EXIT_REASON_PENDING_INTERRUPT] = handle_interrupt_window,

[EXIT_REASON_HLT] = handle_halt,

[EXIT_REASON_INVD] = handle_invd,

[EXIT_REASON_INVLPG] = handle_invlpg,

[EXIT_REASON_RDPMC] = handle_rdpmc,

[EXIT_REASON_VMCALL] = handle_vmcall,

[EXIT_REASON_VMCLEAR] = handle_vmclear,

[EXIT_REASON_VMLAUNCH] = handle_vmlaunch,

[EXIT_REASON_VMPTRLD] = handle_vmptrld,

[EXIT_REASON_VMPTRST] = handle_vmptrst,

[EXIT_REASON_VMREAD] = handle_vmread,

[EXIT_REASON_VMRESUME] = handle_vmresume,

[EXIT_REASON_VMWRITE] = handle_vmwrite,

[EXIT_REASON_VMOFF] = handle_vmoff,

[EXIT_REASON_VMON] = handle_vmon,

[EXIT_REASON_TPR_BELOW_THRESHOLD] = handle_tpr_below_threshold,

[EXIT_REASON_APIC_ACCESS] = handle_apic_access,

[EXIT_REASON_WBINVD] = handle_wbinvd,

[EXIT_REASON_XSETBV] = handle_xsetbv,

[EXIT_REASON_TASK_SWITCH] = handle_task_switch,

[EXIT_REASON_MCE_DURING_VMENTRY] = handle_machine_check,

[EXIT_REASON_EPT_VIOLATION] = handle_ept_violation,

[EXIT_REASON_EPT_MISCONFIG] = handle_ept_misconfig,

[EXIT_REASON_PAUSE_INSTRUCTION] = handle_pause,

[EXIT_REASON_MWAIT_INSTRUCTION] = handle_invalid_op,

[EXIT_REASON_MONITOR_INSTRUCTION] = handle_invalid_op,

};
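The table above relies on two C features: designated initializers, which leave every unlisted slot NULL, and a bounds check plus NULL check before the indirect call. The following is a minimal sketch of that pattern in isolation. Everything in it (struct vcpu, the REASON_* values, the on_* handlers, dispatch_exit) is a hypothetical name chosen for illustration, not kernel code; the fallback of returning 0 for an unknown reason mirrors what vmx_handle_exit does above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, minimal vcpu state: remembers which handler ran last. */
struct vcpu { int last; };

/* Hypothetical exit reasons; the gaps in the numbering are deliberate. */
enum { REASON_IO = 2, REASON_CPUID = 5, REASON_HLT = 7 };

static int on_io(struct vcpu *v)    { v->last = REASON_IO;    return 0; }
static int on_cpuid(struct vcpu *v) { v->last = REASON_CPUID; return 1; }
static int on_hlt(struct vcpu *v)   { v->last = REASON_HLT;   return 1; }

/* Designated initializers: slots that are not listed stay NULL. */
static int (*const handlers[])(struct vcpu *) = {
    [REASON_IO]    = on_io,
    [REASON_CPUID] = on_cpuid,
    [REASON_HLT]   = on_hlt,
};

#define MAX_HANDLERS (sizeof(handlers) / sizeof(handlers[0]))

/* Call the handler for `reason`; unknown reasons are flagged and return 0. */
int dispatch_exit(struct vcpu *v, unsigned reason)
{
    if (reason < MAX_HANDLERS && handlers[reason])
        return handlers[reason](v);

    v->last = -1;   /* plays the role of KVM_EXIT_UNKNOWN */
    return 0;
}
```

Both checks matter: the range check protects against reasons past the end of the array, and the NULL check protects against holes inside it.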

If the VM exit was caused by I/O, the handler that gets called is handle_io.

static int handle_io(struct kvm_vcpu *vcpu)

{

unsigned long exit_qualification;

int size, in, string;

unsigned port;

++vcpu->stat.io_exits;

exit_qualification = vmcs_readl(EXIT_QUALIFICATION); // read the exit qualification

string = (exit_qualification & 16) != 0; // is this string I/O (ins, outs)?

if (string) {

if (emulate_instruction(vcpu, 0) == EMULATE_DO_MMIO)

return 0;

return 1;

}

size = (exit_qualification & 7) + 1; // access size in bytes

in = (exit_qualification & 8) != 0; // I/O direction: in or out

port = exit_qualification >> 16; // port number

skip_emulated_instruction(vcpu);

return kvm_emulate_pio(vcpu, in, size, port);

}

Here we can see that the handling differs depending on the direction of the I/O and on whether it is string I/O.
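The bit tests in handle_io can be collected into one small decoder. The sketch below uses the same masks and shifts as the code above (bits 2:0 hold the size minus one, bit 3 the direction, bit 4 the string flag, the port starts at bit 16). The struct io_exit type and the decode_io_qualification name are assumptions made for this example; the port is additionally truncated to 16 bits, which handle_io leaves implicit.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container for the decoded fields. */
struct io_exit {
    unsigned size;      /* access width in bytes */
    int      in;        /* 1 for in/ins, 0 for out/outs */
    int      string;    /* 1 for ins/outs */
    uint16_t port;      /* I/O port number */
};

/* Decode an I/O exit qualification with the masks used by handle_io. */
struct io_exit decode_io_qualification(uint64_t q)
{
    struct io_exit e;

    e.size   = (unsigned)(q & 7) + 1;
    e.in     = (q & 8) != 0;
    e.string = (q & 16) != 0;
    e.port   = (uint16_t)(q >> 16);
    return e;
}
```

For example, a one-byte out to port 0x3f8 has all low bits clear, so the qualification is simply 0x3f8 shifted left by 16.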

int kvm_emulate_pio(struct kvm_vcpu *vcpu, int in, int size, unsigned port)

{

unsigned long val;

trace_kvm_pio(!in, port, size, 1);

vcpu->run->exit_reason = KVM_EXIT_IO;

vcpu->run->io.direction = in ? KVM_EXIT_IO_IN : KVM_EXIT_IO_OUT;

vcpu->run->io.size = vcpu->arch.pio.size = size;

vcpu->run->io.data_offset = KVM_PIO_PAGE_OFFSET * PAGE_SIZE;

vcpu->run->io.count = vcpu->arch.pio.count = vcpu->arch.pio.cur_count = 1;

vcpu->run->io.port = vcpu->arch.pio.port = port;

vcpu->arch.pio.in = in;

vcpu->arch.pio.string = 0;

vcpu->arch.pio.down = 0;

vcpu->arch.pio.rep = 0;

val = kvm_register_read(vcpu, VCPU_REGS_RAX);

memcpy(vcpu->arch.pio_data, &val, 4);

/* If the I/O can be completed inside the kernel module, finish it here; there is no need to return to QEMU */

if (!kernel_pio(vcpu, vcpu->arch.pio_data)) {

complete_pio(vcpu);

return 1;

}

return 0;

}

After returning to QEMU, kvm_cpu_exec continues:

int kvm_cpu_exec(CPUArchState *env)

{

struct kvm_run *run = env->kvm_run;

int ret, run_ret;

…

switch (run->exit_reason) {

// choose the handling path based on the exit reason stored in kvm_run

case KVM_EXIT_IO:

DPRINTF("handle_io\n");

kvm_handle_io(run->io.port,

(uint8_t *)run + run->io.data_offset,

run->io.direction,

run->io.size,

run->io.count);

// here QEMU handles the exit using the data stored in kvm_run

ret = 0;

break;

…

}

static void kvm_handle_io(uint16_t port, void *data, int direction, int size, uint32_t count)

{

// In this function we can see that functions such as cpu_inb and cpu_outb

// are ultimately called to interact with the actual device.

int i;

uint8_t *ptr = data;

for (i = 0; i < count; i++) {

if (direction == KVM_EXIT_IO_IN) {

switch (size) {

case 1:

stb_p(ptr, cpu_inb(port));

break;

...

}

} else {

switch (size) {

case 1:

cpu_outb(port, ldub_p(ptr));

break;

...

}

}

ptr += size;

}

}

With that, KVM has finished emulating one out instruction executed by the guest.

Once QEMU has finished the I/O operation, the loop in kvm_cpu_exec calls kvm_vcpu_ioctl to re-enter KVM.
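The expression (uint8_t *)run + run->io.data_offset used by kvm_cpu_exec is the key to how the data travels: the value is not a field of kvm_run but lives at a byte offset inside the same shared mapping. The sketch below illustrates the pointer arithmetic on an ordinary buffer. struct fake_run is a hypothetical, cut-down stand-in for the io part of struct kvm_run, and read_io_value assumes a little-endian host (as on x86). Unlike the *(int *) read in the sample at the top of these notes, it copies only `size` bytes.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, cut-down stand-in for the io fields of struct kvm_run. */
struct fake_run {
    uint8_t  direction;
    uint8_t  size;         /* bytes per access: 1, 2 or 4 */
    uint16_t port;
    uint32_t count;
    uint64_t data_offset;  /* byte offset from the start of the mapping */
};

/* Locate the I/O data inside the shared area. */
static uint8_t *io_data(struct fake_run *run)
{
    return (uint8_t *)run + run->data_offset;
}

/* Read one value of run->size bytes without assuming alignment.
 * Assumes a little-endian host, so the low bytes of val are filled. */
uint32_t read_io_value(struct fake_run *run)
{
    uint32_t val = 0;

    assert(run->size == 1 || run->size == 2 || run->size == 4);
    memcpy(&val, io_data(run), run->size);
    return val;
}
```

In the gdb backtrace later in these notes, the guest writes the one-byte value 0x20 to port 0x1f7; with size set to 1 and that byte stored at data_offset, read_io_value() returns 0x20.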

[<ffffffffb66a4d89>] schedule+0x39/0x80
[<ffffffffc0606a06>] ? kvm_irq_delivery_to_apic+0x56/0x220 [kvm]
[<ffffffffb66a7447>] rwsem_down_read_failed+0xc7/0x120
[<ffffffffb63cb594>] call_rwsem_down_read_failed+0x14/0x30
[<ffffffffb66a6af7>] ? down_read+0x17/0x20
[<ffffffffc05d1480>] kvm_host_page_size+0x60/0xa0 [kvm]
[<ffffffffc05ea9bc>] mapping_level+0x5c/0x130 [kvm]
[<ffffffffc05f1b1b>] tdp_page_fault+0x9b/0x260 [kvm]
[<ffffffffc05eba21>] kvm_mmu_page_fault+0x31/0x120 [kvm]
[<ffffffffc0678db4>] handle_ept_violation+0xa4/0x170 [kvm_intel]
[<ffffffffc067fd07>] vmx_handle_exit+0x257/0x490 [kvm_intel]
[<ffffffffb60b2081>] ? __vtime_account_system+0x31/0x40
[<ffffffffc05e662f>] vcpu_enter_guest+0x6af/0xff0 [kvm]
[<ffffffffc06034ad>] ? kvm_apic_local_deliver+0x5d/0x60 [kvm]
[<ffffffffc05e8564>] kvm_arch_vcpu_ioctl_run+0xc4/0x3c0 [kvm]
[<ffffffffc05cf844>] kvm_vcpu_ioctl+0x324/0x5d0 [kvm]
[<ffffffffb611a4cc>] ? acct_account_cputime+0x1c/0x20
[<ffffffffb60b1f23>] ? account_user_time+0x73/0x80
[<ffffffffb61da203>] do_vfs_ioctl+0x83/0x4e0
[<ffffffffb600261f>] ? enter_from_user_mode+0x1f/0x50
[<ffffffffb6002711>] ? syscall_trace_enter_phase1+0xc1/0x110
[<ffffffffb61da6ac>] SyS_ioctl+0x4c/0x80
[<ffffffffb66a892e>] entry_SYSCALL_64_fastpath+0x12/0x7

Preparing for KVM RUN

When QEMU issues the KVM_RUN command with kvm_vcpu_ioctl(env, KVM_RUN, 0);, the ioctl traps into the kernel and reaches kvm_vcpu_ioctl():

kvm_vcpu_ioctl() file: virt/kvm/kvm_main.c, line: 1958

--->kvm_arch_vcpu_ioctl_run() file: arch/x86/kvm, line: 6305

--->__vcpu_run() file: arch/x86/kvm/x86.c, line: 6156

__vcpu_run() also contains a while () { } main loop:

static int __vcpu_run(struct kvm_vcpu *vcpu)

{

......

r = 1;

while (r > 0) {

if (vcpu->arch.mp_state == KVM_MP_STATE_RUNNABLE && !vcpu->arch.apf.halted)

r = vcpu_enter_guest(vcpu);

else {

......

}

if (r <= 0) <-------- when r <= 0, leave the loop and return to QEMU

break;

......

}

return r;

}

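Once KVM has entered the main loop in __vcpu_run(), it calls vcpu_enter_guest(), which, as the name suggests, is the entry point into guest mode.

While r is greater than 0, the KVM kernel code keeps calling vcpu_enter_guest() and re-entering guest mode.

When r is less than or equal to 0, the loop ends and execution unwinds step by step to the original entry point, kvm_vcpu_ioctl(), and from there back to the QEMU process in user space (leaving KVM and returning to QEMU). The previous article covers this in detail; the relevant code fragment is repeated here: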

int kvm_cpu_exec(CPUArchState *env)

{

do {

run_ret = kvm_vcpu_ioctl(env, KVM_RUN, 0);

switch (run->exit_reason) { <---------- QEMU handles the exit according to its reason, mostly I/O-related work

case KVM_EXIT_IO:

kvm_handle_io(); <---------- not the same function as the kernel's handle_io

......

case KVM_EXIT_MMIO:

cpu_physical_memory_rw();

......

case KVM_EXIT_IRQ_WINDOW_OPEN:

ret = EXCP_INTERRUPT;

......

case KVM_EXIT_SHUTDOWN:

ret = EXCP_INTERRUPT;

......

case KVM_EXIT_UNKNOWN:

ret = -1;

......

case KVM_EXIT_INTERNAL_ERROR:

ret = kvm_handle_internal_error(env, run);

......

default:

ret = kvm_arch_handle_exit(env, run);

......

}

} while (ret == 0);

env->exit_request = 0;

return ret;

}

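QEMU handles the exit according to its reason, mostly I/O-related work, and afterwards calls kvm_vcpu_ioctl(env, KVM_RUN, 0) to run KVM again.

Back in kernel space, we arrive at static int vcpu_enter_guest(struct kvm_vcpu *vcpu), which performs several important preparation steps: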

static int vcpu_enter_guest(struct kvm_vcpu *vcpu) file: arch/x86/kvm/x86.c, line: 5944

{

......

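kvm_check_request(); <------- check for pending requests that affect guest entry

......

kvm_mmu_reload(vcpu); <------- set up the guest MMU in preparation for memory virtualization

......

preempt_disable(); <------- disable kernel preemption

......

kvm_x86_ops->run(vcpu); <------- architecture-specific run operation

...... <------- reaching this point means guest mode has been left

kvm_x86_ops->handle_external_intr(vcpu); <------- the host handles external interrupts

......

preempt_enable(); <------- re-enable kernel preemption

......

r = kvm_x86_ops->handle_exit(vcpu); <------ handle the exit according to its specific reason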

return r;

......

}

Entering the guest

kvm_x86_ops is a set of x86-specific functions; it is defined in file: arch/x86/kvm/vmx.c, line: 8693

static struct kvm_x86_ops vmx_x86_ops = {

......

.run = vmx_vcpu_run,

.handle_exit = vmx_handle_exit,

......

}

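The core assembly sequence in vmx_vcpu_run() switches from root mode to non-root mode. Its main steps are:

- Store host registers: saves the host state context into the VMCS structure that belongs to the VM;

- Load guest registers: loads the guest state;

- Enter guest mode: switches to the VM with the ASM_VMX_VMLAUNCH instruction, entering another world, the guest OS;

- Save guest registers, load host registers: when a VM exit occurs, the guest state must be saved and the host state loaded.

Once step 4 completes, the guest has dropped from non-root mode back to root mode and the host resumes execution.

Handling guest exits

A guest exit does not end there. Every exit has a reason, and to keep the guest running smoothly KVM must handle the exit according to that reason. The key function here is vmx_handle_exit():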

static int vmx_handle_exit(struct kvm_vcpu *vcpu) file: arch/x86/kvm/vmx.c, line: 6877

{

......

if (exit_reason < kvm_vmx_max_exit_handlers

&& kvm_vmx_exit_handlers[exit_reason])

return kvm_vmx_exit_handlers[exit_reason](vcpu); <----- call the handler registered for this reason

else {

vcpu->run->exit_reason = KVM_EXIT_UNKNOWN;

vcpu->run->hw.hardware_exit_reason = exit_reason;

}

return 0; <-------- returns 0 when the exit reason has no handler predefined in KVM

}

The handlers for the many exit reasons are:

static int (*const kvm_vmx_exit_handlers[])(struct kvm_vcpu *vcpu) = {

[EXIT_REASON_EXCEPTION_NMI] = handle_exception, <------ exceptions

[EXIT_REASON_EXTERNAL_INTERRUPT] = handle_external_interrupt, <------ external interrupts

[EXIT_REASON_TRIPLE_FAULT] = handle_triple_fault,

[EXIT_REASON_NMI_WINDOW] = handle_nmi_window,

[EXIT_REASON_IO_INSTRUCTION] = handle_io, <------ I/O instructions

[EXIT_REASON_CR_ACCESS] = handle_cr,

[EXIT_REASON_DR_ACCESS] = handle_dr,

[EXIT_REASON_CPUID] = handle_cpuid,

[EXIT_REASON_MSR_READ] = handle_rdmsr,

[EXIT_REASON_MSR_WRITE] = handle_wrmsr,

[EXIT_REASON_PENDING_INTERRUPT] = handle_interrupt_window,

[EXIT_REASON_HLT] = handle_halt,

[EXIT_REASON_INVD] = handle_invd,

[EXIT_REASON_INVLPG] = handle_invlpg,

[EXIT_REASON_RDPMC] = handle_rdpmc,

[EXIT_REASON_VMCALL] = handle_vmcall, <----- VMX instructions

[EXIT_REASON_VMCLEAR] = handle_vmclear,

[EXIT_REASON_VMLAUNCH] = handle_vmlaunch,

[EXIT_REASON_VMPTRLD] = handle_vmptrld,

[EXIT_REASON_VMPTRST] = handle_vmptrst,

[EXIT_REASON_VMREAD] = handle_vmread,

[EXIT_REASON_VMRESUME] = handle_vmresume,

[EXIT_REASON_VMWRITE] = handle_vmwrite,

[EXIT_REASON_VMOFF] = handle_vmoff,

[EXIT_REASON_VMON] = handle_vmon,

[EXIT_REASON_TPR_BELOW_THRESHOLD] = handle_tpr_below_threshold,

[EXIT_REASON_APIC_ACCESS] = handle_apic_access,

[EXIT_REASON_APIC_WRITE] = handle_apic_write,

[EXIT_REASON_EOI_INDUCED] = handle_apic_eoi_induced,

[EXIT_REASON_WBINVD] = handle_wbinvd,

[EXIT_REASON_XSETBV] = handle_xsetbv,

[EXIT_REASON_TASK_SWITCH] = handle_task_switch, <---- task switch

[EXIT_REASON_MCE_DURING_VMENTRY] = handle_machine_check,

[EXIT_REASON_EPT_VIOLATION] = handle_ept_violation, <---- EPT page fault

[EXIT_REASON_EPT_MISCONFIG] = handle_ept_misconfig,

[EXIT_REASON_PAUSE_INSTRUCTION] = handle_pause,

[EXIT_REASON_MWAIT_INSTRUCTION] = handle_invalid_op,

[EXIT_REASON_MONITOR_INSTRUCTION] = handle_invalid_op,

[EXIT_REASON_INVEPT] = handle_invept,

};

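A handler returns a value greater than 0 when KVM has dealt with the exit entirely in the kernel, 0 when user space has to finish the work, and a negative value when handling failed. Control then returns to the main loop in __vcpu_run():

When vcpu_enter_guest() > 0: the loop continues and prepares to enter guest mode again.

When vcpu_enter_guest() <= 0: the loop ends and control returns to user space, where QEMU handles the exit according to its reason.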
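The effect of this return-value convention on the kernel-side loop can be modelled in a few lines. This is a sketch only: struct loop_vcpu, fake_enter_guest() and fake_vcpu_run() are hypothetical names, and the scripted array stands in for the values that vcpu_enter_guest() would return after each exit handler has run.

```c
#include <assert.h>
#include <stddef.h>

/* Scripted results of successive vcpu_enter_guest() calls (hypothetical). */
struct loop_vcpu {
    const int *results;
    size_t len;
    size_t pos;
    int entries;      /* how many times the "guest" was entered */
};

/* Stand-in for vcpu_enter_guest(): enter the guest, return the handler result. */
static int fake_enter_guest(struct loop_vcpu *v)
{
    assert(v->pos < v->len);
    v->entries++;
    return v->results[v->pos++];
}

/* Same shape as the __vcpu_run() loop: stay in the kernel while r > 0. */
int fake_vcpu_run(struct loop_vcpu *v)
{
    int r = 1;

    while (r > 0)
        r = fake_enter_guest(v);

    return r;   /* 0: user space must act; < 0: error */
}
```

With the results 1, 1, 1, 0 the guest is entered four times during a single KVM_RUN call, and only the last exit is visible to user space. This is why exits that KVM can resolve itself never appear in QEMU's switch statement.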

handle_io

vmx_handle_exit() {
    /* kvm_vmx_exit_handlers[exit_reason](vcpu); */
    handle_io() {
        kvm_emulate_pio() {
            kernel_pio() {
                if (read) {
                    kvm_io_bus_read() { }
                } else {
                    kvm_io_bus_write() {
                        ioeventfd_write();
                    }
                }
            }
        }
    }
}

vmx_handle_exit-->kvm_vmx_exit_handlers[exit_reason]-->handle_io-->kvm_fast_pio_out-->emulator_pio_out_emulated-->emulator_pio_in_out-->kernel_pio-->kvm_io_bus_write-->kvm_iodevice_write(dev->ops->write)-->ioeventfd_write-->eventfd_signal

-->wake_up_locked_poll-->__wake_up_locked_key-->__wake_up_common-->vhost_poll_wakeup-->vhost_poll_queue-->vhost_work_queue-->wake_up_process

————————————————————

static int handle_io(struct kvm_vcpu *vcpu)
{
    unsigned long exit_qualification;
    int size, in, string;
    unsigned port;

    exit_qualification = vmcs_readl(EXIT_QUALIFICATION);
    string = (exit_qualification & 16) != 0;
    in = (exit_qualification & 8) != 0;

    ++vcpu->stat.io_exits;

    if (string || in)
        return emulate_instruction(vcpu, 0) == EMULATE_DONE;

    port = exit_qualification >> 16;
    size = (exit_qualification & 7) + 1;
    skip_emulated_instruction(vcpu);

    return kvm_fast_pio_out(vcpu, size, port);
}

kvm_handle_io

static void kvm_handle_io(uint16_t port, MemTxAttrs attrs, void *data, int direction, int size, uint32_t count)
{
    int i;
    uint8_t *ptr = data;

    for (i = 0; i < count; i++) {
        address_space_rw(&address_space_io, port, attrs, ptr, size, direction == KVM_EXIT_IO_OUT);
        ptr += size;
    }
}

#0  blk_aio_prwv (blk=0x555556a6fc60, offset=0x0, bytes=0x200, qiov=0x7ffff0059e70, co_entry=0x555555b58df1 <blk_aio_read_entry>, flags=0, cb=0x555555997813 <ide_buffered_readv_cb>, opaque=0x7ffff0059e50) at block/block-backend.c:995
#1  blk_aio_preadv (blk=0x555556a6fc60, offset=0x0, qiov=0x7ffff0059e70, flags=0, cb=0x555555997813 <ide_buffered_readv_cb>, opaque=0x7ffff0059e50) at block/block-backend.c:1100
#2  ide_buffered_readv (s=0x555557f66a68, sector_num=0x0, iov=0x555557f66d60, nb_sectors=0x1, cb=0x555555997b41 <ide_sector_read_cb>, opaque=0x555557f66a68) at hw/ide/core.c:637
#3  ide_sector_read (s=0x555557f66a68) at hw/ide/core.c:760
#4  cmd_read_pio (s=0x555557f66a68, cmd=0x20) at hw/ide/core.c:1452
#5  ide_exec_cmd (bus=0x555557f669f0, val=0x20) at hw/ide/core.c:2043
#6  ide_ioport_write (opaque=0x555557f669f0, addr=0x7, val=0x20) at hw/ide/core.c:1249
#7  portio_write (opaque=0x555558044e00, addr=0x7, data=0x20, size=0x1) at /home/jaycee/qemu-io_test/qemu-2.8.0/ioport.c:202
#8  memory_region_write_accessor (mr=0x555558044e00, addr=0x7, value=0x7ffff5f299b8, size=0x1, shift=0x0, mask=0xff, attrs=...) at /home/jaycee/qemu-io_test/qemu-2.8.0/memory.c:526
#9  access_with_adjusted_size (addr=0x7, value=0x7ffff5f299b8, size=0x1, access_size_min=0x1, access_size_max=0x4, access=0x5555557abd17 <memory_region_write_accessor>, mr=0x555558044e00, attrs=...) at /home/jaycee/qemu-io_test/qemu-2.8.0/memory.c:592
#10 memory_region_dispatch_write (mr=0x555558044e00, addr=0x7, data=0x20, size=0x1, attrs=...) at /home/jaycee/qemu-io_test/qemu-2.8.0/memory.c:1323
#11 address_space_write_continue (as=0x555556577d20 <address_space_io>, addr=0x1f7, attrs=..., buf=0x7ffff7fef000, len=0x1, addr1=0x7, l=0x1, mr=0x555558044e00) at /home/jaycee/qemu-io_test/qemu-2.8.0/exec.c:2608
#12 address_space_write (as=0x555556577d20 <address_space_io>, addr=0x1f7, attrs=..., buf=0x7ffff7fef000, len=0x1) at /home/jaycee/qemu-io_test/qemu-2.8.0/exec.c:2653
#13 address_space_rw (as=0x555556577d20 <address_space_io>, addr=0x1f7, attrs=..., buf=0x7ffff7fef000, len=0x1, is_write=0x1) at /home/jaycee/qemu-io_test/qemu-2.8.0/exec.c:2755
#14 kvm_handle_io (port=0x1f7, attrs=..., data=0x7ffff7fef000, direction=0x1, size=0x1, count=0x1) at /home/jaycee/qemu-io_test/qemu-2.8.0/kvm-all.c:1800
#15 kvm_cpu_exec (cpu=0x555556a802a0) at /home/jaycee/qemu-io_test/qemu-2.8.0/kvm-all.c:1958
#16 qemu_kvm_cpu_thread_fn (arg=0x555556a802a0) at /home/jaycee/qemu-io_test/qemu-2.8.0/cpus.c:998
#17 start_thread (arg=0x7ffff5f2a700) at pthread_create.c:333
#18 clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:109

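2. Interaction between KVM and QEMU

QEMU creates the virtual machine and enters KVM: main() initializes KVM by calling kvm_init and machine->init. machine->init creates the vcpus, each backed by one thread whose function is qemu_kvm_cpu_thread_fn. That thread eventually calls kvm_cpu_exec, which calls kvm_vcpu_ioctl to switch into KVM.

KVM runs and exits because of I/O: on seeing the KVM_RUN argument, KVM ends up calling vcpu_enter_guest, and vmx_vcpu_run then sets up the register state and executes VMLAUNCH or VMRESUME to enter the guest VM. When the VM performs an I/O operation that needs device access, a VM exit is triggered and control returns to vmx_vcpu_run. The VMX code saves the VMCS state, records VM_EXIT_REASON, and returns to its caller, vcpu_enter_guest. The end of vcpu_enter_guest calls r = kvm_x86_ops->handle_exit(vcpu), which corresponds to vmx_handle_exit. vmx_handle_exit calls kvm_vmx_exit_handlers[exit_reason](vcpu), which selects a function according to exit_reason. For an I/O operation, handle_io fills the data into vcpu->run and the call chain returns all the way up to kvm_vcpu_ioctl, so that the ioctl returns into QEMU's kvm_cpu_exec.

Handling in QEMU after KVM returns: in kvm_cpu_exec, QEMU inspects run->exit_reason in kvm_run; if it is KVM_EXIT_IO, kvm_handle_io handles it. Once QEMU has finished the I/O operation, the loop in kvm_cpu_exec calls kvm_vcpu_ioctl to re-enter KVM.

kvm_run is the structure used for communication between a vcpu and the user-space program (typically QEMU). The user-space program obtains its size with the KVM_GET_VCPU_MMAP_SIZE ioctl and then maps it into user space.

3. KVM I/O handling flow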

static int handle_io(struct kvm_vcpu *vcpu)

{

unsigned long exit_qualification;

int size, in, string;

unsigned port;

exit_qualification = vmcs_readl(EXIT_QUALIFICATION); // read the exit qualification

string = (exit_qualification & 16) != 0; // is this string I/O (ins, outs)?

in = (exit_qualification & 8) != 0; // I/O direction: in or out

++vcpu->stat.io_exits;

if (string || in) // input instructions and string I/O are handed to the emulator

return emulate_instruction(vcpu, 0) == EMULATE_DONE;

port = exit_qualification >> 16; // port number

size = (exit_qualification & 7) + 1; // access size in bytes

skip_emulated_instruction(vcpu); // skip this instruction

return kvm_fast_pio_out(vcpu, size, port); // perform the out operation

}

Guest executes an I/O instruction -> VM exit occurs -> return to QEMU -> I/O is handled

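1. Emulating the out instruction: for a single out instruction, KVM can hand the output data straight to QEMU, and QEMU completes the out operation.

Flow: in KVM, handle_io -> kvm_fast_pio_out -> emulator_pio_out_emulated. Right after that call, kvm_fast_pio_out sets vcpu->arch.pio.count = 0, because a non-string out can be completed in one step; once QEMU has handled it and control returns to KVM, there is no need to enter the emulator again. emulator_pio_out_emulated memcpy's the I/O data into the buffer shared by KVM and QEMU, then emulator_pio_in_out stores the relevant fields in kvm_run and control returns to QEMU. There the switch in kvm_cpu_exec checks run->exit_reason; if it is KVM_EXIT_IO, kvm_handle_io is entered to interact with the device.

2. Emulating string or in instructions: for an in instruction, QEMU can only write the data it obtained into kvm_run, and KVM must move that data to the right place at the next VM entry. For this reason handle_io does not call skip_emulated_instruction for in or string instructions. After QEMU has completed the in, or one iteration of the out, a VM exit therefore occurs again at the same instruction, and the emulator then finishes the job. For string instructions the emulator decodes the instruction to determine the number of I/O operations, the source operand, the destination operand, and so on.

Flow: handle_io -> emulate_instruction -> x86_emulate_instruction decodes the instruction, and during this process em_in or em_out is called. (The emulator_pio_in_emulated function that these eventually call first goes through emulator_pio_in_out, the same function as in the PIO path above. A successful return means QEMU has already placed the emulated data in the parameter val, so a plain memcpy moves the data obtained from QEMU to its proper place, vcpu->arch.pio_data.) For an out, the next entry into KVM goes straight into the emulator. For an in, vcpu->arch.complete_userspace_io = complete_emulated_pio is registered, because the I/O has to be completed the next time QEMU enters KVM, which in practice means writing the data QEMU obtained to the right place. On that next entry, kvm_arch_vcpu_ioctl_run calls the registered complete_emulated_pio, which calls emulate_instruction again to write the data to the right place (this time without decoding, going straight to em_in).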
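The two-step handling of an in instruction is a deferred-completion pattern: the exit path registers a callback, user space fills in the data, and the next entry runs the callback once before doing anything else. The sketch below shows that pattern in isolation. All names (struct vcpu, start_in, reenter, complete_in) are hypothetical, the register write is simplified to overwriting the whole value rather than only the low bytes, and a little-endian host is assumed.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct vcpu;
typedef int (*complete_fn)(struct vcpu *);

/* Hypothetical, minimal vcpu state for the sketch. */
struct vcpu {
    uint8_t     pio_data[4];            /* buffer that user space fills in */
    uint32_t    rax;                    /* destination of the in instruction */
    unsigned    size;                   /* access width in bytes */
    complete_fn complete_userspace_io;  /* pending completion, or NULL */
};

/* Second half of the in: move the data from the shared buffer to the register. */
static int complete_in(struct vcpu *v)
{
    uint32_t val = 0;

    memcpy(&val, v->pio_data, v->size);  /* little-endian host assumed */
    v->rax = val;
    return 1;                            /* handled, keep running the guest */
}

/* Exit path: the in cannot finish yet, so register the completion
 * and return 0 to send the request to user space. */
int start_in(struct vcpu *v, unsigned size)
{
    v->size = size;
    v->complete_userspace_io = complete_in;
    return 0;
}

/* Re-entry path: run a pending completion exactly once before entering the guest. */
int reenter(struct vcpu *v)
{
    if (v->complete_userspace_io) {
        complete_fn cb = v->complete_userspace_io;

        v->complete_userspace_io = NULL;
        return cb(v);
    }
    return 1;
}
```

Clearing the pointer before calling the callback ensures that a completion never runs twice, even if the callback itself registers a new one.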

- In the guest, during virtio-blk initialization, or more precisely before virtio-blk is probed:

virtio_dev_probe

|-->add_status

|-->dev->config->set_status[vp_set_status]

|-->iowrite8(status, vp_dev->ioaddr + VIRTIO_PCI_STATUS)

This write triggers a VM exit into QEMU, where it is handled as follows.

The flow in QEMU that sets up the ioeventfd:

virtio_pci_config_write

|-->virtio_ioport_write

|-->virtio_pci_start_ioeventfd

|-->virtio_pci_set_host_notifier_internal

|-->virtio_queue_set_host_notifier_fd_handler

|-->memory_region_add_eventfd

|-->memory_region_transaction_commit

|-->address_space_update_ioeventfds

|-->address_space_add_del_ioeventfds

|-->eventfd_add[kvm_mem_ioeventfd_add]

|-->kvm_set_ioeventfd_mmio

|-->kvm_vm_ioctl(...,KVM_IOEVENTFD,...)

This last step switches into the KVM kernel module, which sets up the ioeventfd through KVM_IOEVENTFD.

Setting up the ioeventfd in the KVM kernel module:

kvm_ioeventfd

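|-->kvm_assign_ioeventfd

This flow creates an ioeventfd for a given region. When the guest later accesses that region, the ioeventfd is triggered (through the filesystem's eventfd mechanism) and QEMU is notified. QEMU's main loop, which was blocked until then, can run once the event fires and can do the work that virtio-blk requires.

How KVM uses ioeventfd

When the guest writes to that memory region, it must first exit to the KVM kernel module. How does the KVM kernel module know that an event has been registered for the region, and what does the flow look like?

With EPT in use, a guest read or write to a region that belongs to MMIO causes an exit whose handler in KVM is handle_ept_misconfig. The concrete flow is: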

handle_ept_misconfig

|-->x86_emulate_instruction

|-->x86_emulate_insn

|-->writeback

|-->segmented_write

|-->write_emulated[emulator_write_emulated]

|-->emulator_read_write

|-->emulator_read_write_onepage

|-->ops->read_write_mmio[write_mmio]

|-->vcpu_mmio_write

|-->kvm_io_bus_write

|-->__kvm_io_bus_write

|-->kvm_iodevice_write

|-->ops->write[ioeventfd_write]

ioeventfd_write calls eventfd_signal, part of the filesystem's eventfd mechanism, to trigger the corresponding event.

That is the whole ioeventfd flow, from creation to triggering.
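The user-space side of the eventfd mechanism is small enough to try directly. The sketch below is not QEMU or KVM code: it uses the Linux eventfd(2) system call, and a plain write() plays the part that eventfd_signal() plays inside the kernel. The function name signal_and_drain is an assumption made for this example. It shows the property that makes eventfd suitable for notifications: signals accumulate in a 64-bit counter, and a single read returns the total and resets it.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Signal an eventfd `times` times, then read the accumulated counter.
 * Returns the counter value, or 0 if nothing was signalled or on failure. */
uint64_t signal_and_drain(unsigned times)
{
    uint64_t one = 1, counter = 0;
    int fd = eventfd(0, 0);   /* initial counter 0, blocking reads */

    if (fd < 0)
        return 0;

    for (unsigned i = 0; i < times; i++) {
        /* each 8-byte write adds its value to the counter */
        if (write(fd, &one, sizeof(one)) != (ssize_t)sizeof(one)) {
            close(fd);
            return 0;
        }
    }

    /* a read on a zero counter would block, so only read after a signal */
    if (times > 0 &&
        read(fd, &counter, sizeof(counter)) != (ssize_t)sizeof(counter))
        counter = 0;

    close(fd);
    return counter;
}
```

A waiter blocked in read() or poll() on the descriptor wakes up on the first signal, which matches the description above of QEMU's main loop being woken once the guest touches the registered region.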

Conclusion

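This concludes the analysis of the core call flow in the KVM kernel code. As the flow above shows, the KVM kernel code has three main jobs:

- Preparing for guest entry.

- Entering the guest.

- Handling the guest exit according to its reason: KVM handles what it can by itself, and returns to the QEMU process in user space for whatever it cannot handle.

In short, KVM and QEMU work together to keep the guest running normally; by handling these exits and exceptions they ensure that the guest need not be aware of the virtual environment it runs in.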