Kafka References

- http://research.microsoft.com/en-us/um/people/srikanth/netdb11/netdb11papers/netdb11-final12.pdf

- http://incubator.apache.org/kafka

- http://prezi.com/sj433kkfzckd/kafka-bringing-reliable-stream-processing-to-a-cold-dark-world/

- http://sna-projects.com/blog/2011/08/kafka/

- http://sna-projects.com/sna/media/kafka_hadoop.pdf

- https://github.com/kafka-dev/kafka/tree/master/clients, Kafka clients for various languages

- Chinese translation of the design document, http://www.oschina.net/translate/kafka-design

Kafka: a Distributed Messaging System for Log Processing

1. Introduction

We have built a novel messaging system for log processing called Kafka [18] that combines the benefits of traditional log aggregators and messaging systems.

On the one hand, Kafka is distributed and scalable, and offers high throughput.

On the other hand, Kafka provides an API similar to a messaging system and allows applications to consume log events in real time.

It can be thought of as a distributed producer-consumer architecture.

2. Related Work

Given that so many log aggregators and messaging systems already existed, why was Kafka needed?

Comparison with traditional messaging systems

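1. MQ and JMS provide strong delivery guarantees, which a log aggregator does not need: losing some log entries is acceptable, and these features add considerable complexity to the system.

2. Because big data was not a concern when they were designed, they did not focus on throughput; for example, they do not support sending messages in batches.

3. They lack support for distribution.

4. They are not built for this kind of analysis workload: messages must be consumed very quickly, otherwise an overly long queue causes performance problems.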

Traditional messaging systems tend not to be a good fit for log processing.

First, there is a mismatch in features offered by enterprise systems.

For example, IBM Websphere MQ [7] has transactional supports that allow an application to insert messages into multiple queues atomically. The JMS [14] specification allows each individual message to be acknowledged after consumption, potentially out of order.

Second, many systems do not focus as strongly on throughput as their primary design constraint.

Third, those systems are weak in distributed support.

Finally, many messaging systems assume near immediate consumption of messages, so the queue of unconsumed messages is always fairly small.

Comparison with existing log aggregators: the pull model

A number of specialized log aggregators have been built over the last few years.

Facebook uses a system called Scribe. Each frontend machine can send log data to a set of Scribe machines over sockets. Each Scribe machine aggregates the log entries and periodically dumps them to HDFS [9] or an NFS device.

Yahoo’s data highway project has a similar dataflow. A set of machines aggregate events from the clients and roll out “minute” files, which are then added to HDFS.

Flume is a relatively new log aggregator developed by Cloudera. It supports extensible “pipes” and “sinks”, and makes streaming log data very flexible. It also has more integrated distributed support. However, most of those systems are built for consuming the log data offline, and often expose implementation details unnecessarily (e.g. “minute files”) to the consumer.

Most of them use a “push” model in which the broker forwards data to consumers. At LinkedIn, we find the “pull” model more suitable for our applications since each consumer can retrieve the messages at the maximum rate it can sustain and avoid being flooded by messages pushed faster than it can handle.

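Why use pull instead of push? Only the consumer knows how much it can handle, so letting the consumer fetch data itself makes more sense than having the broker force data onto it.

Did earlier systems simply fail to think of this? No, it is not hard to think of. The point is that earlier systems were built around offline consumers, which write data straight into HDFS without any online analysis, so consumers are normally in no danger of being flooded. Under that assumption, push is simpler.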

3. Kafka Architecture and Design Principles

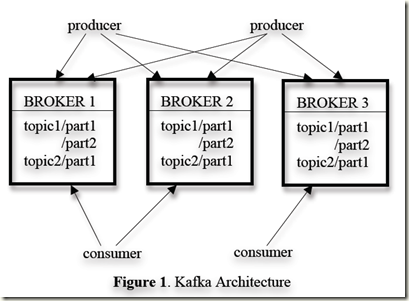

We first introduce the basic concepts in Kafka.

A stream of messages of a particular type is defined by a topic.

A producer can publish messages to a topic.

The published messages are then stored at a set of servers called brokers.

A consumer can subscribe to one or more topics from the brokers, and consume the subscribed messages by pulling data from the brokers.

To balance load, a topic is divided into multiple partitions and each broker stores one or more of those partitions.

通过topic划分partition的策略, 来保证load balance

这种分区相对比较合理, topic的热度不一样, 所以如果把不同的topic放到不同的broker上的话, 容易导致负载失衡.

默认是使用random partition, 可以定制更为合理的partition策略

3.1 Efficiency on a Single Partition

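Simple storage

Kafka has a very simple storage structure.

1. The unit of storage is the partition, and each partition is really a set of segment files; several files are used so that no single file grows too large.

So logically, you can think of a partition as a single log file, with new messages appended to the end of the file.

A message becomes visible to consumers only after it has been flushed to disk.

2. A logical offset is used instead of a message id, which reduces storage overhead.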

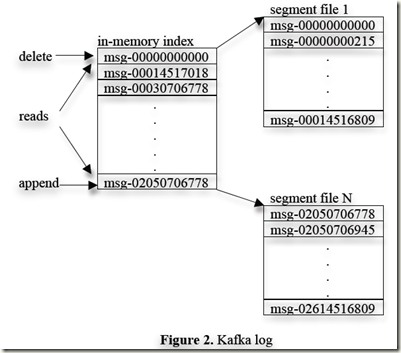

Kafka has a very simple storage layout.

1. Each partition of a topic corresponds to a logical log.

Physically, a log is implemented as a set of segment files of approximately the same size (e.g., 1GB).

Every time a producer publishes a message to a partition, the broker simply appends the message to the last segment file.

A message is only exposed to the consumers after it is flushed.

2. A message stored in Kafka doesn’t have an explicit message id. Instead, each message is addressed by its logical offset in the log. This avoids the overhead of maintaining auxiliary, seek-intensive random-access index structures that map the message ids to the actual message locations.
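The layout above can be illustrated with a toy in-memory model. This is a sketch under assumptions, not Kafka's code: segments are `bytearray`s instead of files, each entry uses a hypothetical 4-byte length prefix, and `SegmentedLog`, `append` and `read` are illustrative names. It shows why no id index is needed: the logical offset is a byte position, the next offset is the current one plus the entry length, and locating a message only requires a binary search over segment base offsets.

```python
import bisect
from typing import List, Tuple


class SegmentedLog:
    """Toy model of one partition: a list of (base_offset, data) segments."""

    def __init__(self, segment_bytes: int = 1 << 30) -> None:
        self.segment_bytes = segment_bytes  # roll to a new segment past ~1 GB
        self.segments: List[Tuple[int, bytearray]] = [(0, bytearray())]

    def append(self, message: bytes) -> int:
        """Append to the last segment and return the message's offset."""
        entry = len(message).to_bytes(4, "big") + message  # length-prefixed entry
        base, data = self.segments[-1]
        if data and len(data) + len(entry) > self.segment_bytes:
            base, data = base + len(data), bytearray()      # roll a new segment
            self.segments.append((base, data))
        offset = base + len(data)  # logical offset = byte position in the log
        data += entry              # bytearray += extends in place
        return offset

    def read(self, offset: int) -> Tuple[bytes, int]:
        """Return (message, next_offset); offset must come from a prior append/read."""
        bases = [b for b, _ in self.segments]
        i = bisect.bisect_right(bases, offset) - 1  # last segment with base <= offset
        base, data = self.segments[i]
        pos = offset - base
        if pos >= len(data):
            raise IndexError("no message at this offset yet")
        size = int.from_bytes(data[pos:pos + 4], "big")
        return bytes(data[pos + 4:pos + 4 + size]), offset + 4 + size
```

Because `read` returns the next offset, a consumer never needs anything but the offset it was last given.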

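Efficient transfer

1. Messages are sent in batches to improve throughput.

2. The file system page cache is used instead of an in-process memory cache.

3. sendfile is used to bypass the application-layer buffer and transfer data directly from the file to the socket (this requires that the application logic does not need to inspect the data being sent).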
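Batching (point 1) can be sketched as follows. `BatchingProducer`, `send_request` and `max_batch_bytes` are hypothetical names, the callback stands in for one network request, and the default limit is an arbitrary example value; this is not the Kafka client API. Messages accumulate until the next one would push the batch over the size limit, so many small log events share a single request.

```python
from typing import Callable, List


class BatchingProducer:
    """Hypothetical producer that groups messages into one request per batch."""

    def __init__(self, send_request: Callable[[List[bytes]], None],
                 max_batch_bytes: int = 200_000) -> None:
        self.send_request = send_request      # stands in for one network round trip
        self.max_batch_bytes = max_batch_bytes
        self._batch: List[bytes] = []
        self._batch_bytes = 0

    def send(self, message: bytes) -> None:
        # Flush first if adding this message would overflow the current batch.
        if self._batch and self._batch_bytes + len(message) > self.max_batch_bytes:
            self.flush()
        self._batch.append(message)
        self._batch_bytes += len(message)

    def flush(self) -> None:
        """Send whatever is buffered as a single request."""
        if self._batch:
            self.send_request(self._batch)
            self._batch = []
            self._batch_bytes = 0
```

A real producer would typically also flush on a timer so that a quiet stream does not hold messages indefinitely; that is left out here.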

We are very careful about transferring data in and out of Kafka.

1. Producer can submit a set of messages in a single send request. Consumer also retrieves multiple messages up to a certain size, typically hundreds of kilobytes.

2. Another unconventional choice that we made is to avoid explicitly caching messages in memory at the Kafka layer. Instead, we rely on the underlying file system page cache.

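What are the advantages of using the file system page cache? (See the Kafka design document.)

First, using the page cache directly is simple and efficient: nothing special has to be done, and there is no need to build a dedicated in-memory cache.

Second, the page cache belongs to the operating system rather than the broker process, so it survives a broker restart or crash (as long as the machine itself stays up).

Finally, and most importantly, it suits this workload, since Kafka's reads and writes are all sequential.

This has the main benefit of avoiding double buffering: messages are only cached in the page cache.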

This has the additional benefit of retaining warm cache even when a broker process is restarted.

Since both the producer and the consumer access the segment files sequentially, with the consumer often lagging the producer by a small amount, normal operating system caching heuristics are very effective.

3. We optimize the network access for consumers.

On Linux and other Unix operating systems, there exists a sendfile API [5] that can directly transfer bytes from a file channel to a socket channel.

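The usual way to send bytes from a local file to a remote socket takes four steps: (1) read data from the storage media into the page cache, (2) copy data in the page cache to an application buffer, (3) copy the application buffer to another kernel buffer, (4) send the kernel buffer to the socket. sendfile eliminates steps (2) and (3), saving two of the four copies and one of the two system calls.

Since Kafka avoids an application-level memory buffer in the first place, this is all the more efficient: data goes directly from the page cache to the kernel's socket buffer.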
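The sendfile path can be tried from Python. In this minimal sketch `serve_segment` is a hypothetical helper that serves a byte range of a segment file to a consumer's socket; `socket.socket.sendfile` is the standard-library call, which uses `os.sendfile` (the sendfile system call) where the platform supports it and falls back to ordinary `send()` otherwise.

```python
import socket


def serve_segment(sock: socket.socket, path: str, offset: int, count: int) -> int:
    """Send `count` bytes of the file at `path`, starting at byte `offset`.

    On platforms with os.sendfile, the bytes travel from the page cache to
    the socket inside the kernel and are never copied into a Python buffer.
    Returns the number of bytes sent.
    """
    with open(path, "rb") as f:  # sendfile requires a file opened in binary mode
        return sock.sendfile(f, offset, count)
```

The broker only has to know which file and byte range to serve, which is exactly what the logical offset gives it.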

Stateless broker

Unlike most other messaging systems, in Kafka, the information about how much each consumer has consumed is not maintained by the broker, but by the consumer itself.

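Because consumption is pull-based, the broker does not need to know how much each consumer has read. With push, it would have to know.

The resulting problem is that the broker does not know when consumers will come to pull, so when can it delete a message? Kafka uses a very simple approach, a time-based SLA: a message is deleted once it has been retained for a certain period, for example 7 days.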
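Time-based retention needs no knowledge of consumers at all. A minimal sketch, assuming retention is applied to whole segment files by their last-modified time (an assumption of this example; the text above only says messages are deleted after a period); `Segment`, `expired_segments` and `SEVEN_DAYS` are illustrative names.

```python
from dataclasses import dataclass
from typing import List

SEVEN_DAYS = 7 * 24 * 3600  # retention window in seconds (example value)


@dataclass
class Segment:
    name: str
    last_modified: float  # seconds since the epoch, e.g. from os.stat().st_mtime


def expired_segments(segments: List[Segment], retention_seconds: float,
                     now: float) -> List[Segment]:
    """Segments whose newest message is older than the retention window.

    No consumer state is consulted: data is dropped purely by age, whether
    or not anyone has read it. `now` would normally be time.time().
    """
    return [s for s in segments if now - s.last_modified > retention_seconds]
```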

There is an important side benefit of this design. A consumer can deliberately rewind back to an old offset and re-consume data.

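This feature is very handy, for example for testing (speaking from experience). With a typical queue, a message is gone once it has been read, so testing repeatedly against the same data is painful; with Kafka you just change the offset.

Also, if a consumer crashes before its data has been written successfully, that is fine: it can re-read from the previous offset and get the data again. Quite nice.

The catch is that Kafka itself does not provide an interface for manipulating offsets, so while this looks great on paper, it is not very convenient in practice.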
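The consumer-side bookkeeping described above fits in a few lines. In this sketch `PullConsumer` is hypothetical, the broker's partition is modelled as a plain list, and offsets are list indices rather than positions in a log file. The broker keeps no state; the consumer decides how much to pull and where to read from, so rewinding is just assigning an old offset.

```python
from typing import List


class PullConsumer:
    """Hypothetical consumer that keeps its own position in one partition."""

    def __init__(self, log: List[bytes], offset: int = 0) -> None:
        self.log = log          # stands in for the broker's partition
        self.offset = offset    # lives in the consumer, not the broker

    def poll(self, max_messages: int) -> List[bytes]:
        """Pull at most `max_messages`, i.e. only as much as we can handle."""
        batch = self.log[self.offset:self.offset + max_messages]
        self.offset += len(batch)  # advance only past what was returned
        return batch

    def seek(self, offset: int) -> None:
        """Rewind (or skip ahead) to re-consume from an earlier offset."""
        self.offset = offset
```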

3.2 Distributed Coordination

We now describe how the producers and the consumers behave in a distributed setting.

Each producer can publish a message to either a randomly selected partition or a partition semantically determined by a partitioning key and a partitioning function. We will focus on how the consumers interact with the brokers.

For producers it is simple: each message goes to a partition chosen either at random or by some hash method.
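A producer-side partitioning function might look like this. `choose_partition` is a hypothetical helper, and using CRC32 as the hash is this sketch's choice, not necessarily what Kafka's own partitioner does. A stable hash matters: Python's built-in `hash()` on bytes is randomized per process, so it would route the same key to different partitions after a restart.

```python
import random
import zlib
from typing import Optional


def choose_partition(num_partitions: int, key: Optional[bytes] = None) -> int:
    """Pick a partition for one message.

    Without a key, pick uniformly at random. With a key, hash it so that
    every message with the same key lands on the same partition.
    """
    if key is None:
        return random.randrange(num_partitions)
    return zlib.crc32(key) % num_partitions  # stable across processes
```

Keyed partitioning keeps all events for one key in a single partition, and therefore in order (see the ordering guarantee in 3.3), at the cost of possible skew if a few keys are very hot.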

For consumers it is more complicated. A topic has many partitions, so for efficiency we certainly want several consumers reading it; how, then, are those consumers coordinated?

Kafka has the concept of consumer groups. Each consumer group consists of one or more consumers that jointly consume a set of subscribed topics, i.e., each message is delivered to only one of the consumers within the group.

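You can think of a consumer group as a single logical consumer: each message needs to be consumed only once, and the group exists so that consumption can run concurrently.

Different groups, on the other hand, are completely independent: a message can be consumed once by every group, so no coordination is needed between groups.

The problem is that consumers within the same group must coordinate to ensure each message is consumed only once, and the goal is to keep the overhead of this coordination as low as possible.

1. To simplify the design, there is no concurrency within a partition, only across partitions.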

Our first decision is to make a partition within a topic the smallest unit of parallelism. This means that at any given time, all messages from one partition are consumed only by a single consumer within each consumer group.

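A partition can have only one consumer (within a group), which avoids the locking and state-maintenance overhead of having several consumers read it.

Could every partition get its own dedicated consumer? It could, but that is too wasteful: there are usually far more partitions than consumers.

So one consumer has to cover several partitions. This raises a problem: when the number of partitions or consumers changes, a rebalance is needed to reassign which consumer reads which partition. Consumers only need to coordinate at that point, so the coordination overhead is fairly low.

The biggest problem with this design is that one or a few slow partitions can hold back the whole pipeline: since a partition can have only one consumer, the other consumers cannot help even when they are idle.

So you must make sure data is produced and consumed at roughly the same rate on every partition; otherwise you will run into trouble.

For example, the number of partitions has to be chosen carefully: if it is not divisible by the number of consumers, the load is uneven. With 10 partitions and 3 consumers, one consumer gets 4 partitions while the other two get 3 each.

Personally, I do not think this design is worth copying; there should be better options...

2. Use Zookeeper instead of a central master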

The second decision that we made is to not have a central “master” node, but instead let consumers coordinate among themselves in a decentralized fashion.

Kafka uses Zookeeper for the following tasks:

(1) detecting the addition and the removal of brokers and consumers,

(2) triggering a rebalance process in each consumer when the above events happen, and

(3) maintaining the consumption relationship and keeping track of the consumed offset of each partition.

Specifically, when each broker or consumer starts up, it stores its information in a broker or consumer registry in Zookeeper.

The broker registry (ephemeral) contains the broker’s host name and port, and the set of topics and partitions stored on it.

The consumer registry (ephemeral) includes the consumer group to which a consumer belongs and the set of topics that it subscribes to.

The ownership registry (ephemeral) has one path for every subscribed partition and the path value is the id of the consumer currently consuming from this partition (we use the terminology that the consumer owns this partition).

The offset registry (persistent) stores for each subscribed partition, the offset of the last consumed message in the partition (for each consumer group).

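When brokers or consumers are added or removed, the corresponding ephemeral registries change along with them automatically, which is simple.

At the same time, such a change triggers a rebalance event in the consumers, and the ownership registry is updated, extended, or pruned according to the result of the rebalance.

Only the offset registry is persistent. However the consumers change, as long as each group's offset on each partition is recorded, coordination within the group is preserved.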
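The split between ephemeral and persistent registries can be modelled without a real Zookeeper. `ToyRegistry` is an in-memory stand-in, not a Zookeeper client, and the paths and values below are hypothetical. The point it illustrates: when a consumer's session ends, its registration and ownership entries disappear automatically, while the group's offset for the partition survives.

```python
from typing import Dict, Optional, Tuple


class ToyRegistry:
    """In-memory stand-in for Zookeeper (a sketch, not a Zookeeper client).

    Each path maps to (value, session). Ephemeral nodes remember the session
    of the broker or consumer that created them and vanish when it goes
    away; persistent nodes are created with session=None and survive.
    """

    def __init__(self) -> None:
        self.nodes: Dict[str, Tuple[str, Optional[str]]] = {}

    def create(self, path: str, value: str, session: Optional[str] = None) -> None:
        self.nodes[path] = (value, session)

    def close_session(self, session: str) -> None:
        # A broker or consumer died: drop every ephemeral node it owned.
        self.nodes = {p: n for p, n in self.nodes.items() if n[1] != session}

    def get(self, path: str) -> Optional[str]:
        node = self.nodes.get(path)
        return node[0] if node is not None else None


# Hypothetical paths, one per registry type described above.
zk = ToyRegistry()
zk.create("/brokers/b1", "host1:9092 partitions=clicks-0,clicks-1", session="b1")
zk.create("/consumers/g1/ids/c1", "group=g1 topics=clicks", session="c1")
zk.create("/consumers/g1/owners/clicks/b1-0", "c1", session="c1")
zk.create("/consumers/g1/offsets/clicks/b1-0", "4096")  # persistent

zk.close_session("c1")  # consumer c1 crashes
# Its registration and ownership are gone, but the group's offset survives,
# so whichever consumer takes over b1-0 resumes from 4096.
```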

3. Consumer Rebalance

Algorithm 1: rebalance process for consumer Ci in group G #for one consumer in the group

For each topic T that Ci subscribes to { #done topic by topic, since different topics have different numbers of partitions

remove partitions owned by Ci from the ownership registry #first clear Ci's existing ownership

read the broker and the consumer registries from Zookeeper #read the broker and consumer registries

compute PT = partitions available in all brokers under topic T #the partition list for T

compute CT = all consumers in G that subscribe to topic T #the consumer list for T

sort PT and CT #sort both lists

let j be the index position of Ci in CT and let N = |PT|/|CT| #j is Ci's position in the consumer list

assign partitions from j*N to (j+1)*N - 1 in PT to consumer Ci

for each assigned partition p {

set the owner of p to Ci in the ownership registry #update ownership

let Op = the offset of partition p stored in the offset registry #read the offset

invoke a thread to pull data in partition p from offset Op #one thread per partition, so partitions are handled concurrently

}

}
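Algorithm 1 can be turned into runnable Python, as a sketch for a single topic. `assign_partitions` and `rebalance` are hypothetical names, plain dicts stand in for the ownership and offset registries, and the sketch returns start offsets instead of spawning fetcher threads. One deliberate deviation is labelled in the code: the paper's range leaves the remainder of |PT|/|CT| unassigned, so here the first |PT| mod |CT| consumers each take one extra partition.

```python
from typing import Dict, List


def assign_partitions(partitions: List[str], consumers: List[str], me: str) -> List[str]:
    """Partitions that consumer `me` should own for one topic.

    Every consumer sorts the same two lists, so each one independently
    computes the same, non-overlapping assignment. N = |PT| // |CT| as in
    Algorithm 1; the range j*N .. (j+1)*N - 1 alone would leave the
    remainder unassigned, so this sketch (an assumption) hands one extra
    partition to each of the first |PT| % |CT| consumers.
    """
    pt, ct = sorted(partitions), sorted(consumers)
    j = ct.index(me)
    n, extra = divmod(len(pt), len(ct))
    start = j * n + min(j, extra)
    end = start + n + (1 if j < extra else 0)
    return pt[start:end]


def rebalance(me: str, partitions: List[str], consumers: List[str],
              owners: Dict[str, str], offsets: Dict[str, int]) -> Dict[str, int]:
    """One pass of the rebalance for a single topic.

    Returns {partition: start offset}; a real consumer would start one
    fetcher thread per entry. Ownership conflicts are not checked here.
    """
    for p in [p for p, c in owners.items() if c == me]:
        del owners[p]                      # release what we owned before
    mine = assign_partitions(partitions, consumers, me)
    for p in mine:
        owners[p] = me                     # record the new ownership
    # Assumption: a partition with no stored offset starts from 0.
    return {p: offsets.get(p, 0) for p in mine}
```

With 10 partitions and 2 consumers this yields exactly the paper's ranges (partitions 0 to 4 and 5 to 9); with 3 consumers the split becomes 4, 3 and 3.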

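The key to the algorithm is the range j*N to (j+1)*N - 1.

It is actually simple. With 10 partitions and 2 consumers, each consumer handles N = 10/2 = 5 partitions.

Which 5 a consumer gets depends on its position j in the sorted consumer list: the consumer at j = 0 takes partitions 0 to 4, and the one at j = 1 takes partitions 5 to 9.

This gives Kafka automatic load balancing: partitions are always spread evenly across the consumers, and when a consumer dies, a rebalance lets the remaining consumers take over its partitions.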

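However, although Kafka's "make a partition within a topic the smallest unit of parallelism" policy reduces complexity, it also coarsens the granularity of load balancing. It cannot handle the case where one partition receives far more data than the others, because a partition can have at most one consumer. So producers must keep the partitions balanced when they publish.

The key point of the design: because the offset each group has read up to is recorded per partition, the consumer reading a partition can be switched at any time, so a rebalance is simply a reassignment with nothing else to worry about.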

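Rebalancing can, however, sometimes cause data to be read twice.

The reason is that, to cope with unreliable consumers, a consumer commits its offset (to the offset registry in Zookeeper) only after it has finished processing the data, so a consumer crash does not lose data.

But during a rebalance, the consumer that takes over a partition starts from the last committed offset, so it re-reads any messages the previous owner had processed but not yet committed.

Contention for partition ownership, caused by notification timing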

When there are multiple consumers within a group, each of them will be notified of a broker or a consumer change.

However, the notification may come at slightly different times at the consumers.

So, it is possible that one consumer tries to take ownership of a partition still owned by another consumer. When this happens, the first consumer simply releases all the partitions that it currently owns, waits a bit and retries the rebalance process. In practice, the rebalance process often stabilizes after only a few retries.
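The release-and-retry behaviour can be sketched like this. A dict stands in for the ownership registry, and `dict.setdefault` plays the role of "create the node only if it does not exist"; `try_claim`, `claim_with_retries`, the retry limit and the randomized back-off are all assumptions of this sketch, since the text only says the consumer "waits a bit and retries".

```python
import random
import time
from typing import Dict, List


def try_claim(owners: Dict[str, str], me: str, wanted: List[str]) -> bool:
    """Claim all of `wanted`, or end up owning nothing.

    Finding a partition still held by another consumer means that peer has
    not reacted to the change notification yet; release everything and fail.
    """
    for p in wanted:
        holder = owners.setdefault(p, me)  # like "create node only if absent"
        if holder != me:
            for q in [q for q, c in owners.items() if c == me]:
                del owners[q]
            return False
    return True


def claim_with_retries(owners: Dict[str, str], me: str, wanted: List[str],
                       max_retries: int = 10, backoff_s: float = 0.05) -> int:
    """Retry after a short randomized wait; return the attempt that succeeded."""
    for attempt in range(1, max_retries + 1):
        if try_claim(owners, me, wanted):
            return attempt
        time.sleep(backoff_s * random.uniform(0.5, 1.5))
    raise RuntimeError("rebalance did not stabilize")
```

Randomizing the wait makes it less likely that two consumers keep colliding on the same retry schedule.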

3.3 Delivery Guarantees

In general, Kafka only guarantees at-least-once delivery. Exactly once delivery typically requires two-phase commits and is not necessary for our applications.

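This is not Kafka's focus, and it needs no commit mechanism such as two-phase commit. Most of the time each message is delivered exactly once, but if a consumer crashes after reading data and before updating Zookeeper, the consumer that takes over may read duplicate data.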
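Why committing after processing gives at-least-once delivery can be shown in a small simulation. `consume_at_least_once` and `commit_every` are hypothetical, a dict stands in for the persistent offset registry, and a crash is simulated with an exception. Messages processed after the last commit are processed again when consumption resumes; none are skipped.

```python
from typing import Callable, Dict, List


def consume_at_least_once(
    log: List[str],
    committed: Dict[str, int],  # stands in for the persistent offset registry
    partition: str,
    process: Callable[[str], None],
    commit_every: int = 2,
) -> None:
    """Process from the last committed offset, committing only afterwards.

    A crash between processing and committing replays those messages on
    restart (duplicates are possible), but a message is never skipped.
    """
    offset = committed.get(partition, 0)
    while offset < len(log):
        process(log[offset])
        offset += 1
        if offset % commit_every == 0 or offset == len(log):
            committed[partition] = offset
```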

Kafka guarantees that messages from a single partition are delivered to a consumer in order. However, there is no guarantee on the ordering of messages coming from different partitions.

To avoid log corruption, Kafka stores a CRC for each message in the log. The CRC detects messages corrupted by I/O or network errors.
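Per-message CRC checking can be sketched with the standard `zlib.crc32`. The entry layout (a 4-byte big-endian CRC followed by the payload) and the helper names `encode` and `is_intact` are hypothetical, not Kafka's on-disk format. A CRC detects accidental corruption; it offers no protection against deliberate tampering, because anyone who changes the payload can recompute the checksum.

```python
import struct
import zlib


def encode(payload: bytes) -> bytes:
    """Hypothetical entry layout: 4-byte CRC32 of the payload, then the payload."""
    return struct.pack(">I", zlib.crc32(payload)) + payload


def is_intact(entry: bytes) -> bool:
    """Recompute the CRC over the payload and compare it with the stored one."""
    (stored,) = struct.unpack(">I", entry[:4])
    return zlib.crc32(entry[4:]) == stored
```

A broker could use such a check to find and discard corrupted entries, for example when recovering a log after an I/O error.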

If a broker goes down, any message stored on it not yet consumed becomes unavailable. If the storage system on a broker is permanently damaged, any unconsumed message is lost forever.

In short, a broker failure can make data unavailable or lose it outright.

In the future, we plan to add built-in replication in Kafka to redundantly store each message on multiple brokers.

4. Kafka Usage at LinkedIn

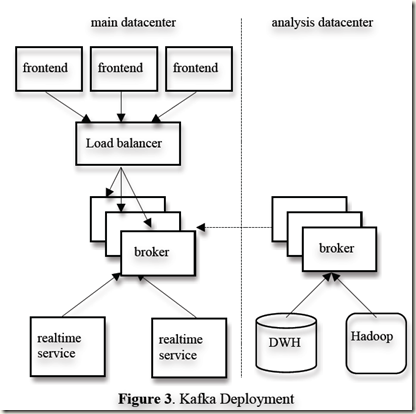

We have one Kafka cluster co-located with each datacenter where our user-facing services run.

First, each datacenter that runs services has its own Kafka cluster to collect data.

The frontend services generate various kinds of log data and publish it to the local Kafka brokers in batches.

We rely on a hardware load-balancer to distribute the publish requests to the set of Kafka brokers evenly.

This is important: publish requests must be distributed evenly, because any imbalance cannot be compensated for later on.

The online consumers of Kafka run in services within the same datacenter.

This cluster is read by online consumers for real-time analysis.

We also deploy a cluster of Kafka in a separate datacenter for offline analysis, located geographically close to our Hadoop cluster and other data warehouse infrastructure.

A separate Kafka cluster for offline analysis is built close to the Hadoop cluster and the data warehouse.

This instance of Kafka runs a set of embedded consumers to pull data from the Kafka instances in the live datacenters.

The consumer can itself be another Kafka cluster, which is a creative use...

We then run data load jobs to pull data from this replica cluster of Kafka into Hadoop and our data warehouse, where we run various reporting jobs and analytical process on the data.