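Assignment requirements:

• Implement addition of non-negative double-precision floating-point numbers below 10. For example, given 4.99 and 5.70, the network should predict 10.69.

• Use gprof to find the code's hot spots.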

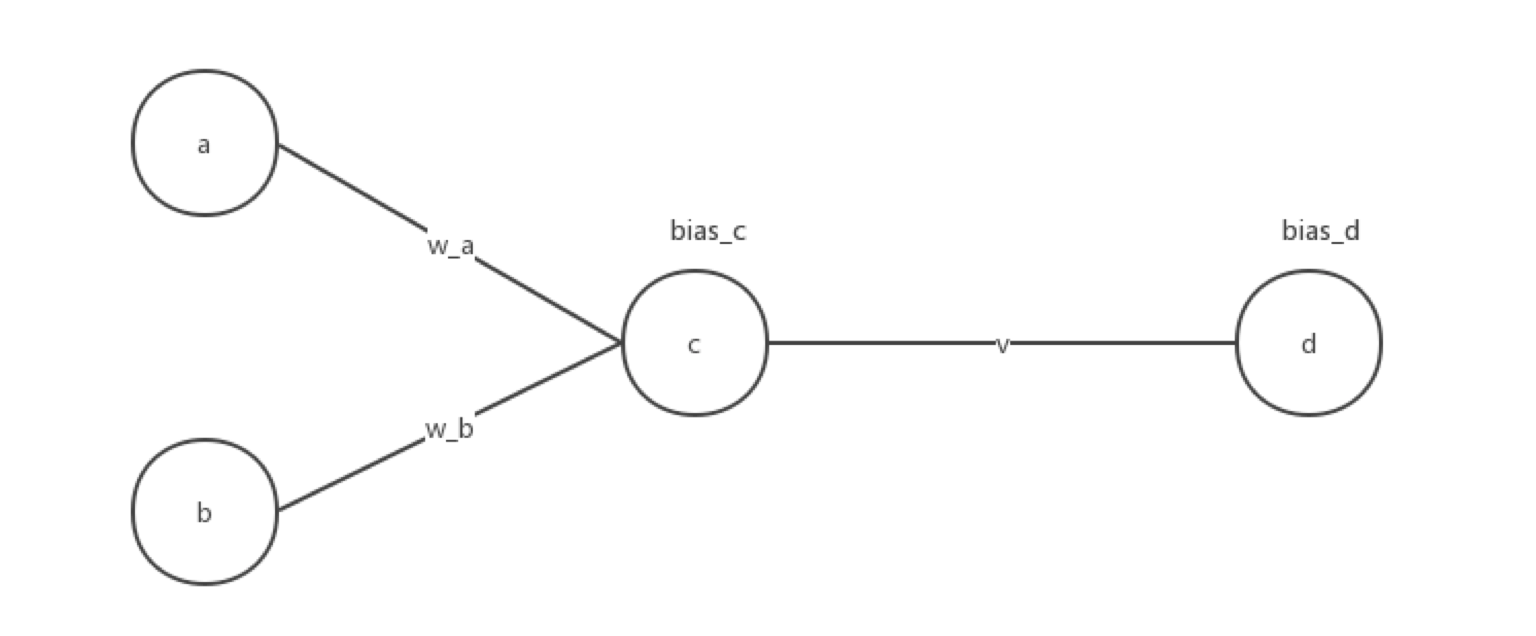

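Code framework

• Randomly initialize 1000 pairs of floating-point numbers in the range 0 to 10 and store them in the 2D array a[1000][2].

• Compute the sum of each pair and store it in the array b[1000], i.e. b[0] = a[0][0] + a[0][1], and so on. Arrays a and b then serve as the network's training samples.

• Define float arrays w and v to hold the hidden-layer and output-layer weights, and initialize their elements to random floats between -1 and 1.

• Run the 1000 inputs (a[1000][2]) through the forward pass one at a time, and use the difference between each output and the corresponding label in b[1000] to back-propagate and update the elements of w and v.

• After all 1000 inputs have been processed, feed in two random floats and check the result. If the prediction error is large, increase the number of training iterations (training samples).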
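The data-preparation steps listed above (random pairs, their sums as labels, weights in [-1, 1]) plus the min/max scaling the program applies can be sketched as a few small helpers. This is a hypothetical refactoring, not the author's code: `rand_uniform`, `struct norm_params`, `fit_norm`, `normalize`, `denormalize` and `make_samples` are illustrative names, and the scaling x' = (x - min + 1) / (max - min + 1) is taken from the author's normalization functions. Dividing by `RAND_MAX` is used for the weights because `rand() % 200001` can never exceed 32767 on platforms where `RAND_MAX` is 32767 (for example MSVC).

```c
#include <assert.h>
#include <stdlib.h>

/* Uniform float in [lo, hi]; works for any RAND_MAX (hypothetical helper) */
float rand_uniform(float lo, float hi) {
    return lo + (hi - lo) * ((float)rand() / (float)RAND_MAX);
}

/* Hypothetical container for the scaling parameters of one array */
struct norm_params {
    float min;
    float max;
};

/* Scan n contiguous values and record their min and max */
struct norm_params fit_norm(const float *x, int n) {
    assert(n > 0);
    struct norm_params p = { x[0], x[0] };
    for (int i = 1; i < n; i++) {
        if (x[i] < p.min) p.min = x[i];
        if (x[i] > p.max) p.max = x[i];
    }
    return p;
}

/* Author's scaling: x' = (x - min + 1) / (max - min + 1) */
float normalize(struct norm_params p, float x) {
    return (x - p.min + 1.0f) / (p.max - p.min + 1.0f);
}

/* Inverse of the scaling: x = x' * (max - min + 1) - 1 + min */
float denormalize(struct norm_params p, float y) {
    return y * (p.max - p.min + 1.0f) - 1.0f + p.min;
}

/* Fill n pairs in [0, 10] with two decimals, and their sums as labels */
void make_samples(float (*a)[2], float *b, int n) {
    for (int i = 0; i < n; i++) {
        a[i][0] = (rand() % 1001) / 100.0f;
        a[i][1] = (rand() % 1001) / 100.0f;
        b[i] = a[i][0] + a[i][1];
    }
}
```

For example, calling `struct norm_params pb = fit_norm(b, 1000);` before normalizing b keeps exactly what is needed to turn a network output y back into a sum with `denormalize(pb, y)`.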

```c
#include <stdio.h>
#include <stdlib.h>
#include <time.h>
#include <math.h>

#define N_SAMPLES 1000
#define N_TEST 200                    /* first 200 samples: test set */
#define N_TRAIN (N_SAMPLES - N_TEST)  /* remaining 800 samples: training set */

/* Uniform random float in [-1, 1]; also correct when RAND_MAX is only 32767 */
float rand_weight(void) {
    return (float)rand() / (float)RAND_MAX * 2.0f - 1.0f;
}

/* Normalize each column of a 2D array with x' = (x - min + 1) / (max - min + 1) */
void normalization(float num[N_SAMPLES][2]) {
    for (int col = 0; col < 2; col++) {
        float max = num[0][col], min = num[0][col];
        for (int i = 1; i < N_SAMPLES; i++) {
            if (num[i][col] > max) max = num[i][col];
            if (num[i][col] < min) min = num[i][col];
        }
        /* each column is scaled with its own min and max */
        for (int i = 0; i < N_SAMPLES; i++)
            num[i][col] = (num[i][col] - min + 1) / (max - min + 1);
        printf("a[][%d] max and min: %f %f\n", col, max, min);
    }
}

/* Normalize a 1D array the same way and return its max/min for de-normalization */
void normalization_b(float num[N_SAMPLES], float *max_out, float *min_out) {
    float max = num[0], min = num[0];
    for (int i = 1; i < N_SAMPLES; i++) {
        if (num[i] > max) max = num[i];
        if (num[i] < min) min = num[i];
    }
    for (int i = 0; i < N_SAMPLES; i++)
        num[i] = (num[i] - min + 1) / (max - min + 1);
    printf("b max and min before normalization: %f, %f\n", max, min);
    *max_out = max;
    *min_out = min;
}

float sigmoid(float z) {
    return 1.0f / (1.0f + expf(-z));
}

/* Forward pass, hidden layer (a single sigmoid neuron) */
float compute_hidden(float a, float b, const float *w_a, const float *w_b, const float *bias_c) {
    return sigmoid(a * (*w_a) + b * (*w_b) + *bias_c);
}

/* Forward pass, output layer */
float compute_output(float c, const float *w_c, const float *bias_d) {
    return sigmoid(c * (*w_c) + *bias_d);
}

/* Backward pass, output layer: delta = y(1 - y)(t - y) */
float pro_output(float predict_num, float real_num) {
    return predict_num * (1 - predict_num) * (real_num - predict_num);
}

/* One BP step on a single sample */
void bp(float a, float b, float real_num, float *w_a, float *w_b, float *v, float *bias_c, float *bias_d) {
    const float learning_rate = 0.01f;
    /* forward pass */
    float output = compute_hidden(a, b, w_a, w_b, bias_c);
    float output_final = compute_output(output, v, bias_d);
    /* backward pass: output delta, then hidden delta using v before it is updated */
    float error_output = pro_output(output_final, real_num);
    float error_hidden = output * (1 - output) * error_output * (*v);
    /* update output-layer weight and bias */
    *v += learning_rate * error_output * output;
    *bias_d += learning_rate * error_output;
    /* update hidden-layer weights and bias */
    *w_a += learning_rate * error_hidden * a;
    *w_b += learning_rate * error_hidden * b;
    *bias_c += learning_rate * error_hidden;
}

int main(void) {
    /* 1000 random pairs in [0, 10] (two decimals) in a[1000][2]; their sums are the labels b[1000] */
    srand((unsigned)time(NULL));
    float a[N_SAMPLES][2], b[N_SAMPLES];
    for (int i = 0; i < N_SAMPLES; i++) {
        a[i][0] = (rand() % 1001) / 100.0f;
        a[i][1] = (rand() % 1001) / 100.0f;
        b[i] = a[i][0] + a[i][1];
    }
    /* normalization; b's max/min are kept to map predictions back to sums */
    float max, min;
    normalization(a);
    normalization_b(b, &max, &min);

    /* weights and biases initialized randomly in [-1, 1] */
    float w_a = rand_weight(), w_b = rand_weight(), v = rand_weight();
    float bias_c = rand_weight(), bias_d = rand_weight();
    printf("initial w_a, w_b, v: %f %f %f\n", w_a, w_b, v);

    /* first 200 samples are the test set, the remaining 800 the training set */
    float (*test_data_a)[2] = a;
    float *test_data_b = b;
    float (*training_data_a)[2] = a + N_TEST;
    float *training_data_b = b + N_TEST;

    /* 600 epochs, each running bp() once on every training sample */
    for (int epoch = 0; epoch < 600; epoch++)
        for (int i = 0; i < N_TRAIN; i++)
            bp(training_data_a[i][0], training_data_a[i][1], training_data_b[i],
               &w_a, &w_b, &v, &bias_c, &bias_d);

    /* predict on the test set */
    float mse = 0;
    for (int i = 0; i < N_TEST; i++) {
        float hidden = compute_hidden(test_data_a[i][0], test_data_a[i][1], &w_a, &w_b, &bias_c);
        float predict_value = compute_output(hidden, &v, &bias_d);
        float true_value = test_data_b[i];
        /* squared error in normalized units */
        mse += (predict_value - true_value) * (predict_value - true_value);
        /* invert x' = (x - min + 1) / (max - min + 1) */
        predict_value = predict_value * (max - min + 1) - 1 + min;
        true_value = true_value * (max - min + 1) - 1 + min;
        printf("predicted: %f  actual: %f\n", predict_value, true_value);
    }
    printf("mean squared error: %f\n", mse / N_TEST);
    return 0;
}
```
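The update rules in `bp()` follow from gradient descent on the squared error of one sample. A short derivation for the single-hidden-neuron network, using shorthand introduced here: c for `bias_c`, d for `bias_d`, t for `real_num`, η for `learning_rate`, h for the hidden output and y for the final output; δ_o is what `pro_output` returns.

$$
\begin{aligned}
h &= \sigma(w_a a + w_b b + c), \qquad y = \sigma(v h + d), \qquad E = \tfrac{1}{2}(t - y)^2, \qquad \sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr),\\
\delta_o &= -\frac{\partial E}{\partial (v h + d)} = y(1 - y)(t - y),\\
\delta_h &= -\frac{\partial E}{\partial (w_a a + w_b b + c)} = h(1 - h)\,\delta_o\,v,\\
v &\leftarrow v + \eta\,\delta_o\,h, \qquad d \leftarrow d + \eta\,\delta_o,\\
w_a &\leftarrow w_a + \eta\,\delta_h\,a, \qquad w_b \leftarrow w_b + \eta\,\delta_h\,b, \qquad c \leftarrow c + \eta\,\delta_h.
\end{aligned}
$$

Since δ_h is defined with the current value of v, it has to be computed before v is updated; otherwise the hidden layer receives a gradient that mixes old and new weights.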

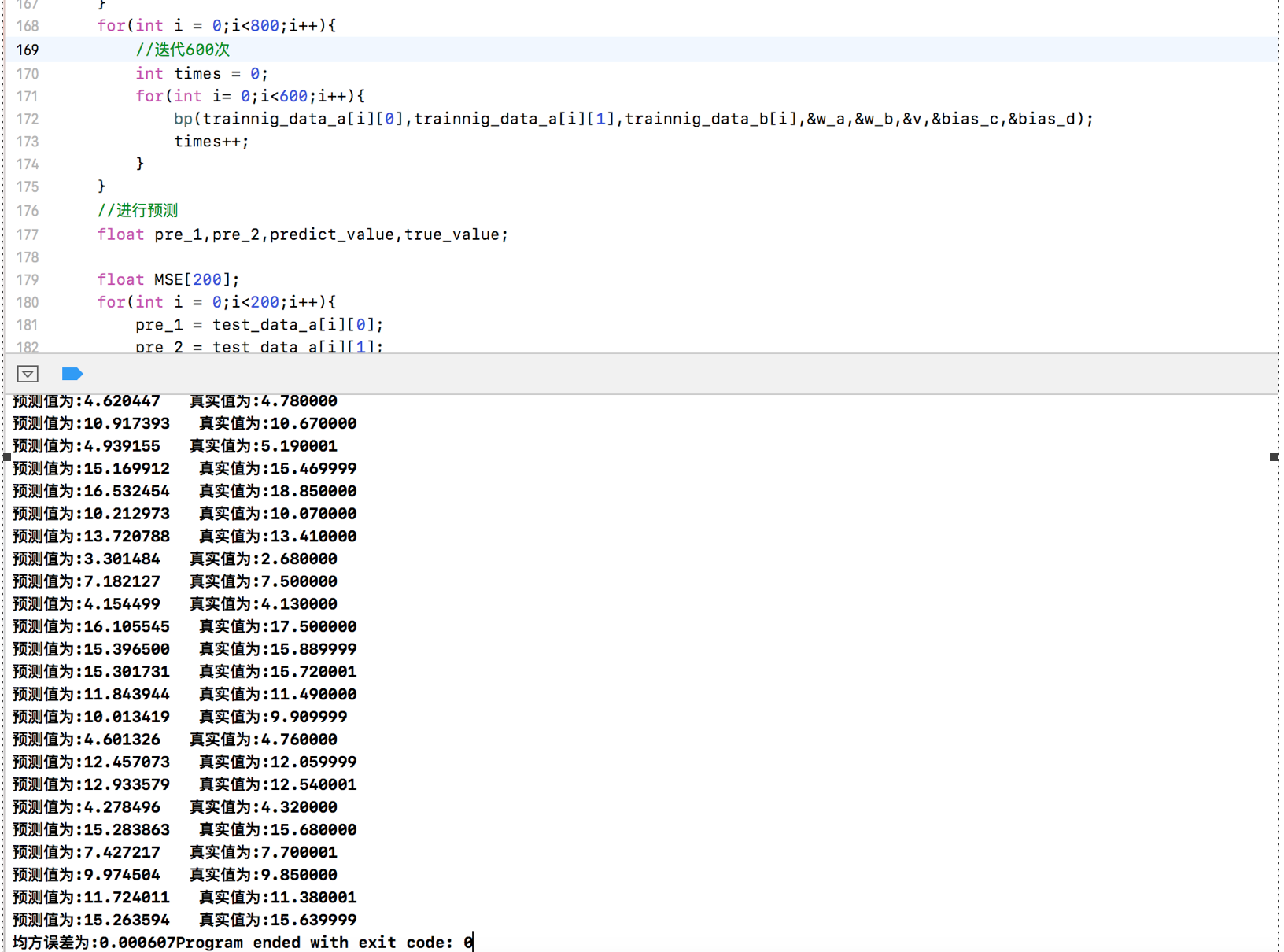

Screenshot of the run results:

Changing the hidden layer to 5 neurons

```c
#include <stdio.h>
#include <stdlib.h>
#include <time.h>
#include <math.h>

#define N_SAMPLES 1000
#define N_TEST 200                    /* first 200 samples: test set */
#define N_TRAIN (N_SAMPLES - N_TEST)  /* remaining 800 samples: training set */
#define HIDDEN 5                      /* number of hidden neurons */

/* Hidden-layer activations */
struct tag {
    float value[HIDDEN];
};

/* Uniform random float in [-1, 1]; also correct when RAND_MAX is only 32767 */
float rand_weight(void) {
    return (float)rand() / (float)RAND_MAX * 2.0f - 1.0f;
}

/* Normalize each column of a 2D array with x' = (x - min + 1) / (max - min + 1) */
void normalization(float num[N_SAMPLES][2]) {
    for (int col = 0; col < 2; col++) {
        float max = num[0][col], min = num[0][col];
        for (int i = 1; i < N_SAMPLES; i++) {
            if (num[i][col] > max) max = num[i][col];
            if (num[i][col] < min) min = num[i][col];
        }
        /* each column is scaled with its own min and max */
        for (int i = 0; i < N_SAMPLES; i++)
            num[i][col] = (num[i][col] - min + 1) / (max - min + 1);
        printf("a[][%d] max and min: %f %f\n", col, max, min);
    }
}

/* Normalize a 1D array the same way and return its max/min for de-normalization */
void normalization_b(float num[N_SAMPLES], float *max_out, float *min_out) {
    float max = num[0], min = num[0];
    for (int i = 1; i < N_SAMPLES; i++) {
        if (num[i] > max) max = num[i];
        if (num[i] < min) min = num[i];
    }
    for (int i = 0; i < N_SAMPLES; i++)
        num[i] = (num[i] - min + 1) / (max - min + 1);
    printf("b max and min before normalization: %f, %f\n", max, min);
    *max_out = max;
    *min_out = min;
}

float sigmoid(float z) {
    return 1.0f / (1.0f + expf(-z));
}

/* Forward pass, hidden layer: HIDDEN sigmoid neurons */
struct tag compute_hidden(float a, float b, const float *w_a, const float *w_b, const float *bias_c) {
    struct tag x;
    for (int i = 0; i < HIDDEN; i++)
        x.value[i] = sigmoid(a * w_a[i] + b * w_b[i] + bias_c[i]);
    return x;
}

/* Forward pass, output layer: weighted sum of hidden activations plus bias, through a sigmoid */
float compute_output(struct tag c, const float *w_c, const float *bias_d) {
    float sum = *bias_d;
    for (int i = 0; i < HIDDEN; i++)
        sum += c.value[i] * w_c[i];
    return sigmoid(sum);
}

/* Backward pass, output layer: delta = y(1 - y)(t - y) */
float pro_output(float predict_num, float real_num) {
    return predict_num * (1 - predict_num) * (real_num - predict_num);
}

/* One BP step on a single sample */
void bp(float a, float b, float real_num, float *w_a, float *w_b, float *v, float *bias_c, float *bias_d) {
    const float learning_rate = 0.00003f;
    /* forward pass */
    struct tag output = compute_hidden(a, b, w_a, w_b, bias_c);
    float output_final = compute_output(output, v, bias_d);
    /* backward pass: output delta, then hidden deltas using v before it is updated */
    float error_output = pro_output(output_final, real_num);
    float error_hidden[HIDDEN];
    for (int i = 0; i < HIDDEN; i++)
        error_hidden[i] = output.value[i] * (1 - output.value[i]) * error_output * v[i];
    /* update weights and biases */
    for (int i = 0; i < HIDDEN; i++) {
        v[i] += learning_rate * error_output * output.value[i];
        w_a[i] += learning_rate * error_hidden[i] * a;
        w_b[i] += learning_rate * error_hidden[i] * b;
        bias_c[i] += learning_rate * error_hidden[i];
    }
    *bias_d += learning_rate * error_output;
}

int main(void) {
    /* 1000 random pairs in [0, 10] (two decimals) in a[1000][2]; their sums are the labels b[1000] */
    srand((unsigned)time(NULL));
    float a[N_SAMPLES][2], b[N_SAMPLES];
    for (int i = 0; i < N_SAMPLES; i++) {
        a[i][0] = (rand() % 1001) / 100.0f;
        a[i][1] = (rand() % 1001) / 100.0f;
        b[i] = a[i][0] + a[i][1];
    }
    /* normalization; b's max/min are kept to map predictions back to sums */
    float max, min;
    normalization(a);
    normalization_b(b, &max, &min);

    /* weights and biases initialized randomly in [-1, 1] */
    float w_a[HIDDEN], w_b[HIDDEN], v[HIDDEN], bias_c[HIDDEN];
    for (int i = 0; i < HIDDEN; i++) {
        w_a[i] = rand_weight();
        w_b[i] = rand_weight();
        v[i] = rand_weight();
        bias_c[i] = rand_weight();
    }
    float bias_d = rand_weight();
    printf("initial w_a, w_b, v:\n");
    for (int i = 0; i < HIDDEN; i++)
        printf("w_a[%d]=%f, w_b[%d]=%f, v[%d]=%f\n", i, w_a[i], i, w_b[i], i, v[i]);

    /* first 200 samples are the test set, the remaining 800 the training set */
    float (*test_data_a)[2] = a;
    float *test_data_b = b;
    float (*training_data_a)[2] = a + N_TEST;
    float *training_data_b = b + N_TEST;

    /* epochs in the outer loop and samples in the inner loop, so every epoch visits all 800
       training samples instead of running many consecutive updates on a single sample */
    for (int epoch = 0; epoch < 300000; epoch++)
        for (int i = 0; i < N_TRAIN; i++)
            bp(training_data_a[i][0], training_data_a[i][1], training_data_b[i],
               w_a, w_b, v, bias_c, &bias_d);

    /* predict on the test set */
    float mse = 0;
    for (int i = 0; i < N_TEST; i++) {
        struct tag hidden = compute_hidden(test_data_a[i][0], test_data_a[i][1], w_a, w_b, bias_c);
        float predict_value = compute_output(hidden, v, &bias_d);
        float true_value = test_data_b[i];
        /* squared error in normalized units */
        mse += (predict_value - true_value) * (predict_value - true_value);
        /* invert x' = (x - min + 1) / (max - min + 1) */
        predict_value = predict_value * (max - min + 1) - 1 + min;
        true_value = true_value * (max - min + 1) - 1 + min;
        printf("predicted: %f  actual: %f\n", predict_value, true_value);
    }
    printf("mean squared error: %f\n", mse / N_TEST);
    return 0;
}
```

However, the results did not improve much; my guess is that batch training would make them better.