III. The configuration file in detail (config.py)

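    import os

    # Dataset paths and model checkpoint paths
    #
    # path and dataset parameter
    #
    DATA_PATH = 'data'  # root directory for all data
    PASCAL_PATH = os.path.join(DATA_PATH, 'pascal_voc')  # directory holding the VOC2012 dataset
    CACHE_PATH = os.path.join(PASCAL_PATH, 'cache')  # folder for the generated label cache files
    OUTPUT_DIR = os.path.join(PASCAL_PATH, 'output')  # folder for saved models and log files
    WEIGHTS_DIR = os.path.join(PASCAL_PATH, 'weights')  # directory holding checkpoint files
    WEIGHTS_FILE = None
    # WEIGHTS_FILE = os.path.join(DATA_PATH, 'weights', 'YOLO_small.ckpt')

    # VOC2012 class names
    CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',
               'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse',
               'motorbike', 'person', 'pottedplant', 'sheep', 'sofa',
               'train', 'tvmonitor']

    # Use horizontal flipping to double the size of the dataset?
    FLIPPED = True

    # Network model parameters
    #
    # model parameter
    #
    # Input image size
    IMAGE_SIZE = 448
    # Grid size: the image is divided into 7x7 cells
    CELL_SIZE = 7
    # Number of bounding boxes per cell, B = 2
    BOXES_PER_CELL = 2
    # Slope of the leaky ReLU activation (the lReLU coefficient)
    ALPHA = 0.1
    # Print information to the console
    DISP_CONSOLE = False

    # Loss function weights
    OBJECT_SCALE = 1.0    # confidence weight when a cell contains an object
    NOOBJECT_SCALE = 1.0  # confidence weight when a cell contains no object
    CLASS_SCALE = 2.0     # class weight
    COORD_SCALE = 5.0     # bounding-box coordinate weight

    # Training parameters
    #
    # solver parameter
    #
    GPU = ''
    # Initial learning rate
    LEARNING_RATE = 0.0001
    # Number of steps between learning-rate decays
    DECAY_STEPS = 30000
    # Decay rate
    DECAY_RATE = 0.1
    STAIRCASE = True
    # Batch size
    BATCH_SIZE = 45
    # Maximum number of iterations
    MAX_ITER = 15000
    # Interval (in steps) between summary/log writes
    SUMMARY_ITER = 10
    # Interval (in steps) between model saves
    SAVE_ITER = 1000

    # Parameters used at test time
    #
    # test parameter
    #
    # Confidence threshold for a cell to count as containing an object
    THRESHOLD = 0.2
    # IOU threshold for non-maximum suppression
    IOU_THRESHOLD = 0.5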
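To make the interaction between LEARNING_RATE, DECAY_STEPS, DECAY_RATE and STAIRCASE concrete, here is a minimal sketch. It assumes these settings feed a standard exponential decay schedule of the kind `tf.train.exponential_decay` computes; the helper name `decayed_lr` is hypothetical and not part of config.py.

```python
# Values copied from config.py above.
LEARNING_RATE = 0.0001
DECAY_STEPS = 30000
DECAY_RATE = 0.1
STAIRCASE = True
MAX_ITER = 15000


def decayed_lr(step):
    """Learning rate at a given step under exponential decay (assumed schedule)."""
    if STAIRCASE:
        # Staircase mode: the exponent only changes every DECAY_STEPS steps.
        exponent = step // DECAY_STEPS
    else:
        exponent = step / DECAY_STEPS
    return LEARNING_RATE * DECAY_RATE ** exponent


for step in (0, MAX_ITER - 1, DECAY_STEPS):
    print(step, decayed_lr(step))
```

Under that assumption, and with STAIRCASE enabled, the first decay would only happen at step 30000, which is beyond MAX_ITER = 15000, so the rate would stay at its initial value for the whole run.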

IV. The yolo folder in detail

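The YOLO network is built by the code in yolo_net.py, located in the yolo folder. The file defines the YOLONet class, whose methods include network initialization (__init__()), network construction (build_network()) and the loss function (loss_layer()).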

    import numpy as np
    import tensorflow as tf
    import yolo.config as cfg

    slim = tf.contrib.slim


    class YOLONet(object):

1. Network parameter initialization

All of the network's initialization parameters are set in the __init__() method.

    # Network parameter initialization
    def __init__(self, is_training=True):
        '''
        The constructor initializes the network parameters from the cfg module and
        defines the input and output sizes of the network.
        offset appears to act as a fixed offset. boundary1 and boundary2 mark where
        each kind of information (classes, confidences, etc.) ends in the output.
        boundary1 is the size of the class predictions for all cells, so it is
        self.cell_size * self.cell_size * self.num_class.
        boundary2 adds, after the classes, the total number of bounding boxes over
        all cells, so it is
        self.boundary1 + self.cell_size * self.cell_size * self.boxes_per_cell.
        '''
        # VOC 2012 class names
        self.classes = cfg.CLASSES
        # Number of classes, C = 20
        self.num_class = len(self.classes)
        # Network input image size 448, i.e. 448 x 448
        self.image_size = cfg.IMAGE_SIZE
        # Grid size S = 7: the image is divided into S x S cells
        self.cell_size = cfg.CELL_SIZE
        # Number of bounding boxes per cell, B = 2
        self.boxes_per_cell = cfg.BOXES_PER_CELL
        # Network output size S*S*(B*5 + C) = 1470
        self.output_size = (self.cell_size * self.cell_size) * \
            (self.num_class + self.boxes_per_cell * 5)
        # Image-to-grid scale: 448 / 7 = 64 pixels per cell
        self.scale = 1.0 * self.image_size / self.cell_size
        # Split the network output into classes, confidences and box coordinates:
        # 7*7*20 + 7*7*2 + 7*7*2*4 = 1470
        # 7*7*20
        self.boundary1 = self.cell_size * self.cell_size * self.num_class
        # 7*7*20 + 7*7*2
        self.boundary2 = self.boundary1 + \
            self.cell_size * self.cell_size * self.boxes_per_cell

        # Loss function weights
        self.object_scale = cfg.OBJECT_SCALE      # 1.0
        self.noobject_scale = cfg.NOOBJECT_SCALE  # 1.0
        self.class_scale = cfg.CLASS_SCALE        # 2.0
        self.coord_scale = cfg.COORD_SCALE        # 5.0

        # Learning rate 0.0001
        self.learning_rate = cfg.LEARNING_RATE
        # Batch size 45
        self.batch_size = cfg.BATCH_SIZE
        # Leaky ReLU slope 0.1
        self.alpha = cfg.ALPHA

        # Offset, shape [7, 7, 2]
        self.offset = np.transpose(np.reshape(np.array(
            [np.arange(self.cell_size)] * self.cell_size * self.boxes_per_cell),
            (self.boxes_per_cell, self.cell_size, self.cell_size)), (1, 2, 0))

        # Input image placeholder [None, image_size, image_size, 3]
        self.images = tf.placeholder(
            tf.float32, [None, self.image_size, self.image_size, 3],
            name='images')
        # Build the network and get the YOLO output (before any activation),
        # shape [None, 1470]
        self.logits = self.build_network(
            self.images, num_outputs=self.output_size, alpha=self.alpha,
            is_training=is_training)

        if is_training:
            # Label placeholder [None, S, S, 5 + C], i.e. [None, 7, 7, 25]
            self.labels = tf.placeholder(
                tf.float32,
                [None, self.cell_size, self.cell_size, 5 + self.num_class])
            # Set up the loss function
            self.loss_layer(self.logits, self.labels)
            # Total loss, including weight regularization
            self.total_loss = tf.losses.get_total_loss()
            # Log the loss as a scalar summary named total_loss
            tf.summary.scalar('total_loss', self.total_loss)
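The role of boundary1, boundary2 and offset is easier to see on a concrete array. The following is a minimal NumPy sketch, not the author's code: the function `split_output` and the reshape targets are assumptions made for illustration, while the boundary arithmetic and the offset expression are taken from __init__() above.

```python
import numpy as np

S, B, C = 7, 2, 20                      # cell_size, boxes_per_cell, num_class
boundary1 = S * S * C                   # end of the class scores
boundary2 = boundary1 + S * S * B       # end of the box confidences


def split_output(flat):
    """Split a [batch, 1470] output into classes, confidences and boxes.

    The reshape targets are assumed for illustration.
    """
    classes = flat[:, :boundary1].reshape(-1, S, S, C)
    confidences = flat[:, boundary1:boundary2].reshape(-1, S, S, B)
    boxes = flat[:, boundary2:].reshape(-1, S, S, B, 4)
    return classes, confidences, boxes


# Same expression as self.offset in __init__().
offset = np.transpose(
    np.reshape(np.array([np.arange(S)] * S * B), (B, S, S)), (1, 2, 0))

flat = np.zeros((1, S * S * (C + B * 5)), dtype=np.float32)
classes, confidences, boxes = split_output(flat)
print(boundary1, boundary2, flat.shape[1])
print(classes.shape, confidences.shape, boxes.shape)
print(offset.shape, offset[3, 5])
```

Each entry offset[i, j, b] holds the column index j of its cell, repeated for both boxes, which is consistent with the docstring's description of a fixed offset.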

2. Building the network

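The network is built by the build_network() function. It consists of convolutional, pooling and fully connected layers; its input has shape [None,448,448,3] and its output has shape [None,1470].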

    def build_network(self, images, num_outputs, alpha, keep_prob=0.5,
                      is_training=True, scope='yolo'):
        '''
        Build the YOLO network.

        args:
            images: input image placeholder [None, image_size, image_size, 3],
                    here [None, 448, 448, 3]
            num_outputs: scalar, number of network output nodes, 1470
            alpha: leaky ReLU slope, 0.1
            keep_prob: dropout keep probability
            is_training: whether the network is being trained
            scope: variable scope name
        return:
            the output of the last layer, before any activation function,
            shape [None, 1470]
        '''
        # Define the variable scope
        with tf.variable_scope(scope):
            # Shared arguments, with L2 regularization.
            # leaky_relu(alpha) is assumed to be a helper defined elsewhere in yolo_net.py.
            with slim.arg_scope(
                [slim.conv2d, slim.fully_connected],
                activation_fn=leaky_relu(alpha),
                weights_regularizer=slim.l2_regularizer(0.0005),
                weights_initializer=tf.truncated_normal_initializer(0.0, 0.01)
            ):
                # pad_1: padding, 454x454x3
                net = tf.pad(
                    images, np.array([[0, 0], [3, 3], [3, 3], [0, 0]]),
                    name='pad_1')
                # conv_2: 7x7x64, s=2, ceil((n-f+1)/s), 224x224x64
                net = slim.conv2d(
                    net, 64, 7, 2, padding='VALID', scope='conv_2')
                # pool_3: 112x112x64
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_3')
                # conv_4: 3x3x192, s=1, ceil(n/s), 112x112x192
                net = slim.conv2d(net, 192, 3, scope='conv_4')
                # pool_5: 56x56x192
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_5')
                # conv_6: 1x1x128, s=1, 56x56x128
                net = slim.conv2d(net, 128, 1, scope='conv_6')
                # conv_7: 3x3x256, s=1, 56x56x256
                net = slim.conv2d(net, 256, 3, scope='conv_7')
                # conv_8: 1x1x256, s=1, 56x56x256
                net = slim.conv2d(net, 256, 1, scope='conv_8')
                # conv_9: 3x3x512, s=1, 56x56x512
                net = slim.conv2d(net, 512, 3, scope='conv_9')
                # pool_10: 28x28x512
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_10')
                # conv_11: 1x1x256, s=1, 28x28x256
                net = slim.conv2d(net, 256, 1, scope='conv_11')
                # conv_12: 3x3x512, s=1, 28x28x512
                net = slim.conv2d(net, 512, 3, scope='conv_12')
                # conv_13: 1x1x256, s=1, 28x28x256
                net = slim.conv2d(net, 256, 1, scope='conv_13')
                # conv_14: 3x3x512, s=1, 28x28x512
                net = slim.conv2d(net, 512, 3, scope='conv_14')
                net = slim.conv2d(net, 256, 1, scope='conv_15')
                net = slim.conv2d(net, 512, 3, scope='conv_16')
                net = slim.conv2d(net, 256, 1, scope='conv_17')
                net = slim.conv2d(net, 512, 3, scope='conv_18')
                # conv_19: 1x1x512, s=1, 28x28x512
                net = slim.conv2d(net, 512, 1, scope='conv_19')
                # conv_20: 3x3x1024, s=1, 28x28x1024
                net = slim.conv2d(net, 1024, 3, scope='conv_20')
                # pool_21: 14x14x1024
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_21')
                # conv_22: 1x1x512, s=1, 14x14x512
                net = slim.conv2d(net, 512, 1, scope='conv_22')
                # conv_23: 3x3x1024, s=1, 14x14x1024
                net = slim.conv2d(net, 1024, 3, scope='conv_23')
                net = slim.conv2d(net, 512, 1, scope='conv_24')
                net = slim.conv2d(net, 1024, 3, scope='conv_25')
                net = slim.conv2d(net, 1024, 3, scope='conv_26')
                # pad_27: padding, 16x16x1024
                net = tf.pad(
                    net, np.array([[0, 0], [1, 1], [1, 1], [0, 0]]),
                    name='pad_27')
                # conv_28: 3x3x1024, s=2, ceil((n-f+1)/s), 7x7x1024
                net = slim.conv2d(
                    net, 1024, 3, 2, padding='VALID', scope='conv_28')
                # conv_29: 3x3x1024, s=1, 7x7x1024
                net = slim.conv2d(net, 1024, 3, scope='conv_29')
                net = slim.conv2d(net, 1024, 3, scope='conv_30')
                # trans_31: transpose to [None, 1024, 7, 7]
                net = tf.transpose(net, [0, 3, 1, 2], name='trans_31')
                # flat_32: flatten, 50176 = 1024*7*7
                net = slim.flatten(net, scope='flat_32')
                # fc_33: fully connected, 512
                net = slim.fully_connected(net, 512, scope='fc_33')
                # fc_34: fully connected, 4096
                net = slim.fully_connected(net, 4096, scope='fc_34')
                # dropout_35: dropout, 4096
                net = slim.dropout(
                    net, keep_prob=keep_prob, is_training=is_training,
                    scope='dropout_35')
                # fc_36: fully connected, num_outputs = 1470, no activation
                net = slim.fully_connected(
                    net, num_outputs, activation_fn=None, scope='fc_36')
        return net
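The spatial sizes quoted in the comments can be checked by hand with the two rules they mention: ceil((n-f+1)/s) for 'VALID' padding and ceil(n/s) for 'SAME' padding. Below is a small sketch that traces only the layers that change the spatial size; the helper name `out_size` is hypothetical, and the pooling stride of 2 is the default of slim.max_pool2d.

```python
import math


def out_size(n, f, s, padding):
    """Spatial output size of a conv/pool layer under TensorFlow padding rules."""
    if padding == 'VALID':
        return math.ceil((n - f + 1) / s)
    return math.ceil(n / s)  # 'SAME'


size = 448
size += 2 * 3                            # pad_1: 3 pixels on each side
trace = [('pad_1', size)]
size = out_size(size, 7, 2, 'VALID')     # conv_2
trace.append(('conv_2', size))
for name in ('pool_3', 'pool_5', 'pool_10', 'pool_21'):
    size = out_size(size, 2, 2, 'SAME')  # 2x2 max pooling, stride 2
    trace.append((name, size))
size += 2 * 1                            # pad_27: 1 pixel on each side
trace.append(('pad_27', size))
size = out_size(size, 3, 2, 'VALID')     # conv_28
trace.append(('conv_28', size))

flat_len = size * size * 1024            # length of flat_32
for name, n in trace:
    print(name, n)
print('flat_32', flat_len)
```

The stride-1 'SAME' convolutions in between are left out because they keep the spatial size unchanged and only alter the channel count.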

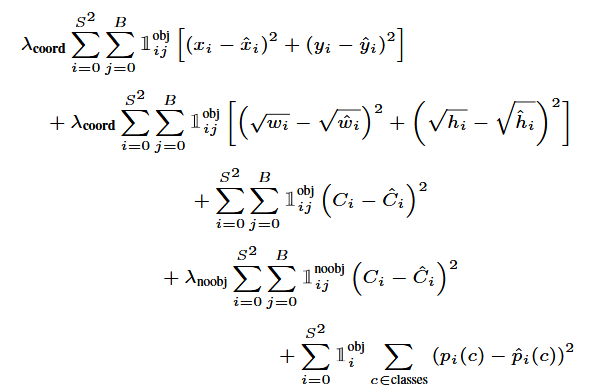

3. Cost function