1. Planning

1.1. Node roles

| NameNode | SecondaryNameNode | DataNodes |
| --- | --- | --- |
| 192.168.1.121 | 192.168.1.122 | 192.168.1.111 |
| | | 192.168.1.112 |
| | | 192.168.1.113 |

1.2. Host list

| IP address | Hostname | Group/User |
| --- | --- | --- |
| 192.168.1.121 | nameNode.smartmap.com | hadoop/hadoop |
| 192.168.1.122 | secondaryNameNode.smartmap.com | hadoop/hadoop |
| 192.168.1.111 | dataNode101.smartmap.com | hadoop/hadoop |
| 192.168.1.112 | dataNode102.smartmap.com | hadoop/hadoop |
| 192.168.1.113 | dataNode103.smartmap.com | hadoop/hadoop |

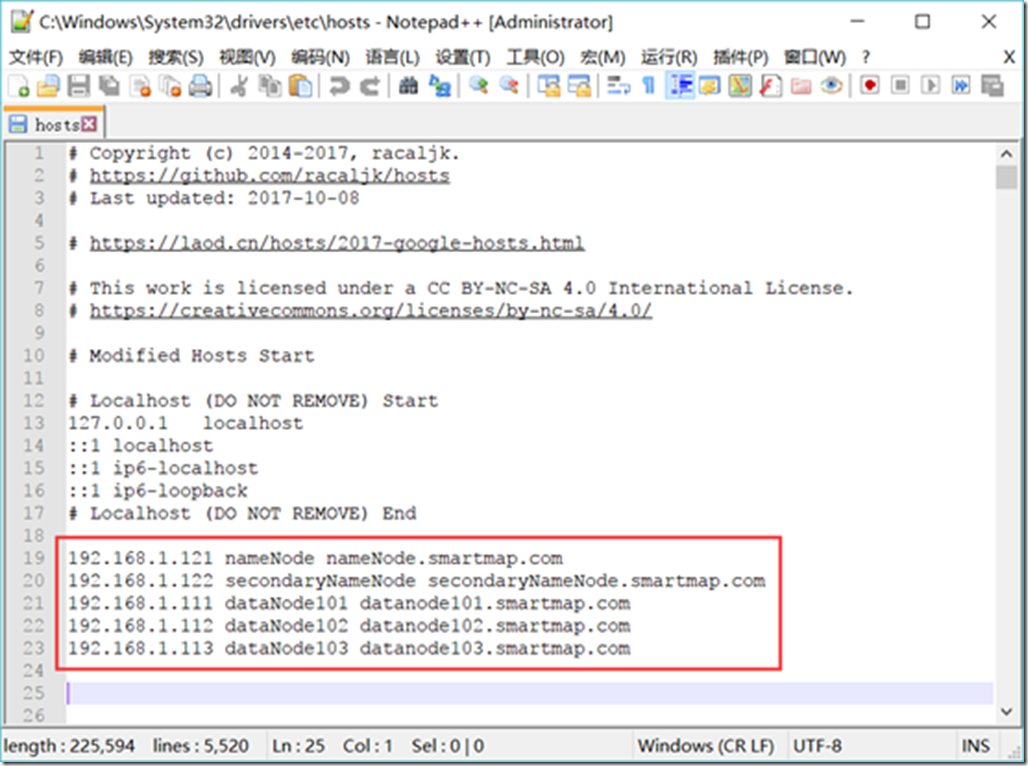

2. Map IP addresses to hostnames

[root@datanode103 ~]# vi /etc/hosts

192.168.1.121 nameNode nameNode.smartmap.com

192.168.1.122 secondaryNameNode secondaryNameNode.smartmap.com

192.168.1.111 dataNode101 datanode101.smartmap.com

192.168.1.112 dataNode102 datanode102.smartmap.com

192.168.1.113 dataNode103 datanode103.smartmap.com

Also edit the hosts file on the Windows machine, located in:

C:\Windows\System32\drivers\etc
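The same five entries are needed in /etc/hosts on every node. A minimal sketch for adding them without creating duplicates on a re-run, assuming the IP and hostname plan from section 1; `add_hosts_entries` is a hypothetical helper, and it takes the target file as an argument so it can be tried on a scratch copy before touching /etc/hosts:

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any IP that already has a line. Defaults to /etc/hosts.
add_hosts_entries() {
    hosts_file=${1:-/etc/hosts}
    while read -r ip short_name fqdn; do
        [ -n "$ip" ] || continue
        # awk compares the first field literally, so dots in the IP are not regex wildcards.
        if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
            continue
        fi
        printf '%s %s %s\n' "$ip" "$short_name" "$fqdn" >> "$hosts_file"
    done <<'EOF'
192.168.1.121 nameNode nameNode.smartmap.com
192.168.1.122 secondaryNameNode secondaryNameNode.smartmap.com
192.168.1.111 dataNode101 datanode101.smartmap.com
192.168.1.112 dataNode102 datanode102.smartmap.com
192.168.1.113 dataNode103 datanode103.smartmap.com
EOF
}
```

Run as root on each node, for example `add_hosts_entries /etc/hosts`; a second run leaves the file unchanged.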

3. Create the hadoop user

[root@datanode101 ~]# useradd hadoop

[root@datanode101 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@datanode101 ~]#

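4. Passwordless login

4.1. Scope of passwordless login

Passwordless login must work whether the target is addressed by IP address or by hostname:

1) NameNode can log in to every DataNode without a password

2) SecondaryNameNode can log in to every DataNode without a password

3) NameNode can log in to itself without a password

4) SecondaryNameNode can log in to itself without a password

5) NameNode can log in to SecondaryNameNode without a password

6) SecondaryNameNode can log in to NameNode without a password

7) Each DataNode can log in to itself without a password

8) DataNodes do not need passwordless login to NameNode, SecondaryNameNode, or other DataNodes.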

4.2. Passwordless SSH configuration

4.2.1. Prepare keys on all nodes

4.2.1.1. Generate a key pair on every node

[hadoop@namenode ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:piJOz+czSaGsxsEsa4ellzJfCOCB8jDE+fLor5PhKsM hadoop@namenode.smartmap.com

The key's randomart image is:

+---[RSA 2048]----+

|... |

|oo |

|*.. |

|+=.. . |

| ==. . .S |

|.o=o+ .o |

|++O+oo.. |

|+E+B..= |

|=+@ooo.o |

+----[SHA256]-----+

[hadoop@namenode ~]$ ls -la .ssh/

total 8

drwx------ 2 hadoop hadoop 38 Apr 14 23:45 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:45 ..

-rw------- 1 hadoop hadoop 1679 Apr 14 23:45 id_rsa

-rw-r--r-- 1 hadoop hadoop 410 Apr 14 23:45 id_rsa.pub

[hadoop@namenode ~]$

4.2.1.2. On every node, create the authorized_keys file from the node's own public key

[hadoop@datanode103 ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hadoop@datanode103 ~]$ chmod 600 ~/.ssh/authorized_keys

[hadoop@datanode103 ~]$ ls -la ~/.ssh/

total 12

drwx------ 2 hadoop hadoop 61 Apr 15 00:07 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:54 ..

-rw------- 1 hadoop hadoop 413 Apr 15 00:07 authorized_keys

-rw------- 1 hadoop hadoop 1679 Apr 14 23:54 id_rsa

-rw-r--r-- 1 hadoop hadoop 413 Apr 14 23:54 id_rsa.pub

[hadoop@datanode103 ~]$

4.2.2. Allow NameNode and SecondaryNameNode to log in to all nodes without a password

4.2.2.1. Send the NameNode's public key to all other nodes

[hadoop@namenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.111:~/.ssh/authorized_keys_namenode

The authenticity of host '192.168.1.111 (192.168.1.111)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.111' (ECDSA) to the list of known hosts.

hadoop@192.168.1.111's password:

authorized_keys 100% 410 324.7KB/s 00:00

[hadoop@namenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.112:~/.ssh/authorized_keys_namenode

The authenticity of host '192.168.1.112 (192.168.1.112)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.112' (ECDSA) to the list of known hosts.

hadoop@192.168.1.112's password:

authorized_keys 100% 410 272.7KB/s 00:00

[hadoop@namenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.113:~/.ssh/authorized_keys_namenode

The authenticity of host '192.168.1.113 (192.168.1.113)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.113' (ECDSA) to the list of known hosts.

hadoop@192.168.1.113's password:

authorized_keys 100% 410 402.9KB/s 00:00

[hadoop@namenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.122:~/.ssh/authorized_keys_namenode

The authenticity of host '192.168.1.122 (192.168.1.122)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.122' (ECDSA) to the list of known hosts.

hadoop@192.168.1.122's password:

authorized_keys 100% 410 382.4KB/s 00:00

[hadoop@namenode ~]$

4.2.2.2. Send the SecondaryNameNode's public key to all other nodes

[hadoop@secondarynamenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.111:~/.ssh/authorized_keys_secondarynamenode

The authenticity of host '192.168.1.111 (192.168.1.111)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.111' (ECDSA) to the list of known hosts.

hadoop@192.168.1.111's password:

authorized_keys 100% 419 294.8KB/s 00:00

[hadoop@secondarynamenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.112:~/.ssh/authorized_keys_secondarynamenode

The authenticity of host '192.168.1.112 (192.168.1.112)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.112' (ECDSA) to the list of known hosts.

hadoop@192.168.1.112's password:

authorized_keys 100% 419 383.6KB/s 00:00

[hadoop@secondarynamenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.113:~/.ssh/authorized_keys_secondarynamenode

The authenticity of host '192.168.1.113 (192.168.1.113)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.113' (ECDSA) to the list of known hosts.

hadoop@192.168.1.113's password:

authorized_keys 100% 419 377.1KB/s 00:00

[hadoop@secondarynamenode ~]$ scp ~/.ssh/authorized_keys hadoop@192.168.1.121:~/.ssh/authorized_keys_secondarynamenode

The authenticity of host '192.168.1.121 (192.168.1.121)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.121' (ECDSA) to the list of known hosts.

hadoop@192.168.1.121's password:

authorized_keys 100% 419 335.7KB/s 00:00

[hadoop@secondarynamenode ~]$
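As an alternative, the scp-then-append routine in 4.2.2 and 4.2.3 can be collapsed into OpenSSH's `ssh-copy-id`, which appends a public key to the remote user's `~/.ssh/authorized_keys` (creating it if needed). A sketch, assuming the key is at `~/.ssh/id_rsa.pub` and the remote user is `hadoop`; `push_public_key`, `PUB_KEY` and the `DRY_RUN` switch are hypothetical, and by default the helper only prints the commands:

```shell
# Hypothetical helper: install this node's public key on each target host.
# DRY_RUN=1 (the default) prints the commands instead of running them.
push_public_key() {
    pub_key=${PUB_KEY:-$HOME/.ssh/id_rsa.pub}
    for host in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id -i $pub_key hadoop@$host"
        else
            # Prompts once for the hadoop password on each host.
            ssh-copy-id -i "$pub_key" "hadoop@$host"
        fi
    done
}
```

For example, on the NameNode: `DRY_RUN=0 push_public_key 192.168.1.111 192.168.1.112 192.168.1.113 192.168.1.122`, and on the SecondaryNameNode the same with 192.168.1.121 in place of 192.168.1.122.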

4.2.3. On each node, append the public keys received from NameNode and SecondaryNameNode to the end of its authorized_keys file

4.2.3.1. datanode101

[hadoop@datanode101 .ssh]$ ls -la ~/.ssh/

total 20

drwx------ 2 hadoop hadoop 134 Apr 15 00:39 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:53 ..

-rw-rw-r-- 1 hadoop hadoop 413 Apr 15 00:39 authorized_keys

-rw------- 1 hadoop hadoop 410 Apr 15 00:21 authorized_keys_namenode

-rw------- 1 hadoop hadoop 419 Apr 15 00:27 authorized_keys_secondarynamenode

-rw------- 1 hadoop hadoop 1679 Apr 14 23:53 id_rsa

-rw-r--r-- 1 hadoop hadoop 413 Apr 14 23:53 id_rsa.pub

[hadoop@datanode101 .ssh]$ cd ..

[hadoop@datanode101 ~]$ cat ~/.ssh/authorized_keys_namenode >> ~/.ssh/authorized_keys

[hadoop@datanode101 ~]$ cat ~/.ssh/authorized_keys_secondarynamenode >> ~/.ssh/authorized_keys

[hadoop@datanode101 ~]$

4.2.3.2. datanode102

[hadoop@datanode102 ~]$ ls -la ~/.ssh/

total 20

drwx------ 2 hadoop hadoop 134 Apr 15 00:39 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:53 ..

-rw-rw-r-- 1 hadoop hadoop 413 Apr 15 00:39 authorized_keys

-rw------- 1 hadoop hadoop 410 Apr 15 00:17 authorized_keys_namenode

-rw------- 1 hadoop hadoop 419 Apr 15 00:27 authorized_keys_secondarynamenode

-rw------- 1 hadoop hadoop 1675 Apr 14 23:53 id_rsa

-rw-r--r-- 1 hadoop hadoop 413 Apr 14 23:53 id_rsa.pub

[hadoop@datanode102 ~]$ cat ~/.ssh/authorized_keys_namenode >> ~/.ssh/authorized_keys

[hadoop@datanode102 ~]$ cat ~/.ssh/authorized_keys_secondarynamenode >> ~/.ssh/authorized_keys

[hadoop@datanode102 ~]$

4.2.3.3. datanode103

[hadoop@datanode103 ~]$ ls -la ~/.ssh/

total 20

drwx------ 2 hadoop hadoop 134 Apr 15 00:28 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:54 ..

-rw------- 1 hadoop hadoop 413 Apr 15 00:07 authorized_keys

-rw------- 1 hadoop hadoop 410 Apr 15 00:17 authorized_keys_namenode

-rw------- 1 hadoop hadoop 419 Apr 15 00:28 authorized_keys_secondarynamenode

-rw------- 1 hadoop hadoop 1679 Apr 14 23:54 id_rsa

-rw-r--r-- 1 hadoop hadoop 413 Apr 14 23:54 id_rsa.pub

[hadoop@datanode103 ~]$ cat ~/.ssh/authorized_keys_namenode >> ~/.ssh/authorized_keys

[hadoop@datanode103 ~]$ cat ~/.ssh/authorized_keys_secondarynamenode >> ~/.ssh/authorized_keys

[hadoop@datanode103 ~]$

4.2.3.4. namenode

[hadoop@namenode ~]$ ls -la ~/.ssh/

total 20

drwx------ 2 hadoop hadoop 121 Apr 15 00:28 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:45 ..

-rw------- 1 hadoop hadoop 410 Apr 14 23:45 authorized_keys

-rw------- 1 hadoop hadoop 419 Apr 15 00:28 authorized_keys_secondarynamenode

-rw------- 1 hadoop hadoop 1679 Apr 14 23:45 id_rsa

-rw-r--r-- 1 hadoop hadoop 410 Apr 15 00:03 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 700 Apr 15 00:17 known_hosts

[hadoop@namenode ~]$ cat ~/.ssh/authorized_keys_secondarynamenode >> ~/.ssh/authorized_keys

[hadoop@namenode ~]$

4.2.3.5. secondarynamenode

[hadoop@secondarynamenode ~]$ ls -la ~/.ssh/

total 20

drwx------ 2 hadoop hadoop 112 Apr 15 00:26 .

drwx------ 3 hadoop hadoop 74 Apr 14 23:54 ..

-rw------- 1 hadoop hadoop 419 Apr 15 00:26 authorized_keys

-rw------- 1 hadoop hadoop 410 Apr 15 00:17 authorized_keys_namenode

-rw------- 1 hadoop hadoop 1675 Apr 14 23:54 id_rsa

-rw-r--r-- 1 hadoop hadoop 419 Apr 14 23:54 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 700 Apr 15 00:28 known_hosts

[hadoop@secondarynamenode ~]$ cat ~/.ssh/authorized_keys_namenode >> ~/.ssh/authorized_keys

[hadoop@secondarynamenode ~]$
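Two details in the listings above are worth guarding against: on datanode101 and datanode102 `authorized_keys` is `-rw-rw-r--`, and sshd with its default `StrictModes yes` may refuse keys from a group-writable file; and re-running `cat >>` appends the same key twice. A sketch of the append step that skips keys already present and then resets the permissions, assuming the received files follow the `authorized_keys_<sender>` naming used here; `merge_received_keys` is a hypothetical helper:

```shell
# Hypothetical helper: append every ~/.ssh/authorized_keys_* file to
# authorized_keys, skipping keys that are already present, then fix modes.
merge_received_keys() {
    ssh_dir=${1:-$HOME/.ssh}
    for received in "$ssh_dir"/authorized_keys_*; do
        [ -f "$received" ] || continue   # the glob matched nothing
        while IFS= read -r key; do
            [ -n "$key" ] || continue
            # -x: whole-line match, -F: literal string (keys contain + and /)
            if ! grep -qxF -- "$key" "$ssh_dir/authorized_keys" 2>/dev/null; then
                printf '%s\n' "$key" >> "$ssh_dir/authorized_keys"
            fi
        done < "$received"
    done
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}
```

Run it as the hadoop user on each node after the key files have arrived; running it twice does not duplicate keys.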

4.2.4. Verification

[hadoop@secondarynamenode ~]$ ssh hadoop@192.168.1.111

Last login: Sun Apr 15 00:55:33 2018 from 192.168.1.121

[hadoop@datanode101 ~]$ exit

logout

Connection to 192.168.1.111 closed.

[hadoop@secondarynamenode ~]$ ssh hadoop@192.168.1.112

Last login: Sun Apr 15 00:55:38 2018 from 192.168.1.121

[hadoop@datanode102 ~]$ exit

logout

Connection to 192.168.1.112 closed.

[hadoop@secondarynamenode ~]$ ssh hadoop@192.168.1.113

Last login: Sun Apr 15 00:55:47 2018 from 192.168.1.121

[hadoop@datanode103 ~]$ exit

logout

Connection to 192.168.1.113 closed.

[hadoop@secondarynamenode ~]$ ssh hadoop@192.168.1.121

Last login: Sun Apr 15 00:55:59 2018 from 192.168.1.121

[hadoop@namenode ~]$ exit

logout

Connection to 192.168.1.121 closed.

[hadoop@secondarynamenode ~]$ ssh hadoop@192.168.1.122

The authenticity of host '192.168.1.122 (192.168.1.122)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.122' (ECDSA) to the list of known hosts.

Last login: Sun Apr 15 00:55:55 2018 from 192.168.1.121

[hadoop@secondarynamenode ~]$ exit

logout

Connection to 192.168.1.122 closed.

[hadoop@secondarynamenode ~]$

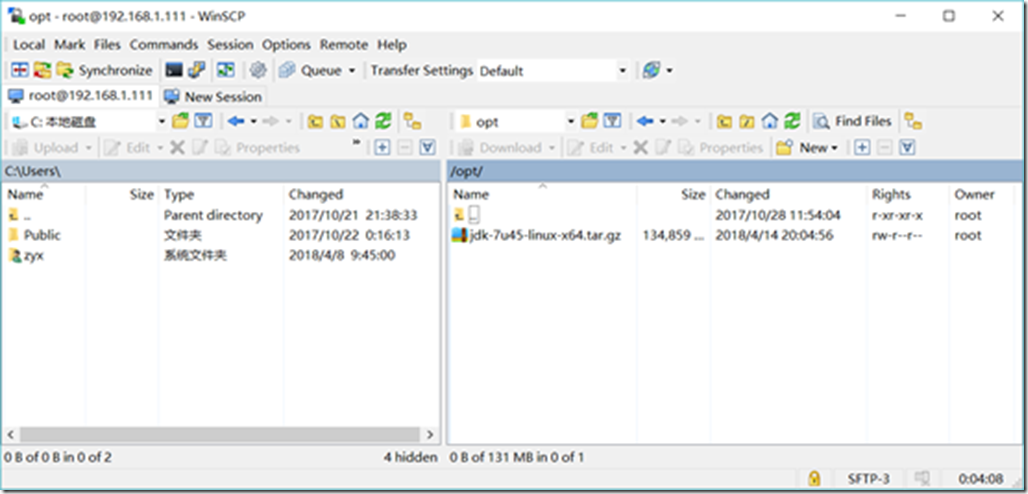
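Section 4.1 asks for passwordless login by both IP address and hostname, while the check above only uses IP addresses. A sketch that tries every required target non-interactively, assuming the addresses and hostnames from section 1; `ssh_targets` and `check_ssh` are hypothetical helpers. `BatchMode=yes` makes ssh fail instead of prompting, so a first connection by hostname whose host key has not been accepted yet also shows up as FAIL:

```shell
# Hypothetical helper: print the targets a node must reach without a
# password (section 4.1). Usage: ssh_targets ROLE [SELF_IP SELF_HOSTNAME]
ssh_targets() {
    case "$1" in
        namenode|secondarynamenode)
            # Masters reach every node, themselves and each other included.
            echo "192.168.1.121 nameNode 192.168.1.122 secondaryNameNode" \
                 "192.168.1.111 dataNode101 192.168.1.112 dataNode102" \
                 "192.168.1.113 dataNode103"
            ;;
        datanode)
            # A DataNode only needs to reach itself.
            echo "$2 $3"
            ;;
        *)
            echo "unknown role: $1" >&2
            return 1
            ;;
    esac
}

# Try each target without prompting; report OK or FAIL per target.
check_ssh() {
    for target in $(ssh_targets "$@"); do
        if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$target" true; then
            echo "OK   $target"
        else
            echo "FAIL $target"
        fi
    done
}
```

For example `check_ssh namenode` on the NameNode, or `check_ssh datanode 192.168.1.111 dataNode101` on datanode101.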

5. Install the JDK

5.1. Upload the downloaded JDK package to one of the servers

Upload it as root to the /opt/ directory.

5.2. Extract the JDK package

[hadoop@datanode101 ~]$ su - root

Password:

Last login: Sat Apr 14 22:49:00 CST 2018 from 192.168.1.101 on pts/0

[root@datanode101 ~]# cd /opt/

[root@datanode101 opt]# ll

total 134860

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]# tar -zxvf jdk-7u45-linux-x64.tar.gz

jdk1.7.0_45/

jdk1.7.0_45/db/

jdk1.7.0_45/db/lib/

jdk1.7.0_45/db/lib/derbyLocale_pl.jar

jdk1.7.0_45/db/lib/derbyLocale_zh_TW.jar

jdk1.7.0_45/db/lib/derbyLocale_cs.jar

jdk1.7.0_45/db/lib/derbyLocale_de_DE.jar

jdk1.7.0_45/db/lib/derbytools.jar

jdk1.7.0_45/db/lib/derbyrun.jar

jdk1.7.0_45/db/lib/derbyLocale_hu.jar

jdk1.7.0_45/db/lib/derbynet.jar

jdk1.7.0_45/db/lib/derby.jar

……

jdk1.7.0_45/man/man1/javadoc.1

jdk1.7.0_45/THIRDPARTYLICENSEREADME.txt

jdk1.7.0_45/COPYRIGHT

[root@datanode101 opt]# ls -la

total 134860

drwxr-xr-x. 3 root root 58 Apr 15 01:10 .

dr-xr-xr-x. 17 root root 244 Oct 28 11:54 ..

drwxr-xr-x 8 10 143 233 Oct 8 2013 jdk1.7.0_45

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]# ls -la jdk1.7.0_45/

total 19744

drwxr-xr-x 8 10 143 233 Oct 8 2013 .

drwxr-xr-x. 3 root root 58 Apr 15 01:10 ..

drwxr-xr-x 2 10 143 4096 Oct 8 2013 bin

-r--r--r-- 1 10 143 3339 Oct 8 2013 COPYRIGHT

drwxr-xr-x 4 10 143 122 Oct 8 2013 db

drwxr-xr-x 3 10 143 132 Oct 8 2013 include

drwxr-xr-x 5 10 143 185 Oct 8 2013 jre

drwxr-xr-x 5 10 143 250 Oct 8 2013 lib

-r--r--r-- 1 10 143 40 Oct 8 2013 LICENSE

drwxr-xr-x 4 10 143 47 Oct 8 2013 man

-r--r--r-- 1 10 143 114 Oct 8 2013 README.html

-rw-r--r-- 1 10 143 499 Oct 8 2013 release

-rw-r--r-- 1 10 143 19891378 Oct 8 2013 src.zip

-rw-r--r-- 1 10 143 123324 Oct 8 2013 THIRDPARTYLICENSEREADME-JAVAFX.txt

-r--r--r-- 1 10 143 173559 Oct 8 2013 THIRDPARTYLICENSEREADME.txt

[root@datanode101 opt]#

[root@datanode101 opt]# mkdir java

[root@datanode101 opt]# ll

total 134860

drwxr-xr-x 2 root root 6 Apr 15 01:25 java

drwxr-xr-x 8 10 143 233 Oct 8 2013 jdk1.7.0_45

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]# mv jdk1.7.0_45/ java/

[root@datanode101 opt]# cd java

[root@datanode101 java]# ls -la

total 0

drwxr-xr-x 3 root root 25 Apr 15 01:26 .

drwxr-xr-x. 3 root root 51 Apr 15 01:26 ..

drwxr-xr-x 8 10 143 233 Oct 8 2013 jdk1.7.0_45

[root@datanode101 java]# pwd

/opt/java

[root@datanode101 java]# cd jdk1.7.0_45/

[root@datanode101 jdk1.7.0_45]# pwd

/opt/java/jdk1.7.0_45

[root@datanode101 jdk1.7.0_45]#

5.3. Copy the JDK package to all other nodes

[root@datanode101 opt]# scp jdk-7u45-linux-x64.tar.gz root@192.168.1.112:/opt/

root@192.168.1.112's password:

jdk-7u45-linux-x64.tar.gz 100% 138MB 21.9MB/s 00:16

[root@datanode101 opt]# scp jdk-7u45-linux-x64.tar.gz root@192.168.1.113:/opt/

root@192.168.1.113's password:

jdk-7u45-linux-x64.tar.gz 100% 138MB 21.9MB/s 00:16

[root@datanode101 opt]# scp jdk-7u45-linux-x64.tar.gz root@192.168.1.121:/opt/

root@192.168.1.121's password:

jdk-7u45-linux-x64.tar.gz 100% 138MB 20.6MB/s 00:17

[root@datanode101 opt]# scp jdk-7u45-linux-x64.tar.gz root@192.168.1.122:/opt/

root@192.168.1.122's password:

jdk-7u45-linux-x64.tar.gz 100% 138MB 26.9MB/s 00:13

[root@datanode101 opt]#
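The transcript copies only the tarball. Since `JAVA_HOME` in 5.4 points at /opt/java/jdk1.7.0_45, each receiving node presumably also needs the archive extracted there, as in 5.2. A sketch that copies and extracts in one pass, assuming root SSH access to each host; `distribute_tarball`, `run` and `DRY_RUN` are hypothetical, and by default the commands are only printed:

```shell
# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: copy /opt/TARBALL to each host, extract it into
# DEST_DIR there, and optionally chown the result.
# Usage: distribute_tarball TARBALL DEST_DIR OWNER HOST...   (OWNER may be "")
distribute_tarball() {
    tarball=$1
    dest_dir=$2
    owner=$3
    shift 3
    remote_cmd="mkdir -p $dest_dir && tar -zxf /opt/$tarball -C $dest_dir"
    if [ -n "$owner" ]; then
        remote_cmd="$remote_cmd && chown -R $owner $dest_dir"
    fi
    for host in "$@"; do
        run scp "/opt/$tarball" "root@$host:/opt/"
        run ssh "root@$host" "$remote_cmd"
    done
}
```

For the JDK: `DRY_RUN=0 distribute_tarball jdk-7u45-linux-x64.tar.gz /opt/java "" 192.168.1.112 192.168.1.113 192.168.1.121 192.168.1.122`. The same helper would fit section 6.4 with `hadoop-2.9.0.tar.gz /opt/hadoop hadoop:hadoop` as the first three arguments.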

5.4. Configure environment variables on all nodes

[root@datanode101 opt]# vi /etc/profile

Append the following to the end of the file:

export JAVA_HOME=/opt/java/jdk1.7.0_45

export JRE_HOME=/opt/java/jdk1.7.0_45/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$PATH

[root@datanode101 jdk1.7.0_45]# source /etc/profile

[root@datanode101 jdk1.7.0_45]# java -version

java version "1.7.0_45"

Java(TM) SE Runtime Environment (build 1.7.0_45-b18)

Java HotSpot(TM) 64-Bit Server VM (build 24.45-b08, mixed mode)

[root@datanode101 jdk1.7.0_45]#

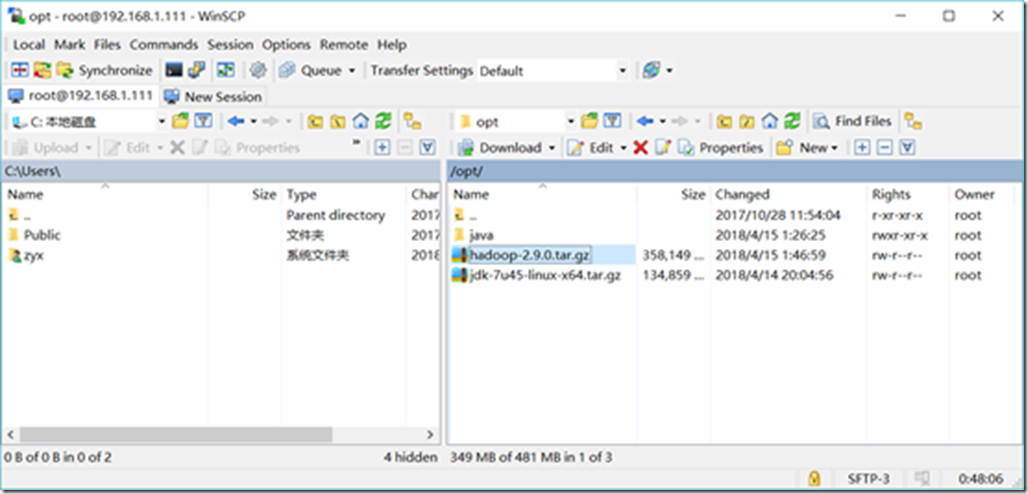
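Rather than editing /etc/profile by hand on every node, the same four lines can live in a file of their own. On CentOS-style systems /etc/profile sources every /etc/profile.d/*.sh at login; treat that as an assumption to confirm on your distribution. `write_java_profile` and the file name java.sh are hypothetical:

```shell
# Hypothetical helper: write the JDK settings from 5.4 to a profile fragment.
# The quoted heredoc keeps $JAVA_HOME etc. unexpanded until the file is sourced.
write_java_profile() {
    target=${1:-/etc/profile.d/java.sh}
    cat > "$target" <<'EOF'
# JDK settings (section 5.4); sourced by /etc/profile at login.
export JAVA_HOME=/opt/java/jdk1.7.0_45
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
export PATH=$JAVA_HOME/bin:$PATH
EOF
}
```

After `write_java_profile` as root, `source /etc/profile.d/java.sh` (or a new login) followed by `java -version` should show the same result as above.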

6. Install and configure Hadoop

6.1. Upload the downloaded Hadoop package to one of the servers

Upload it as root to the /opt/ directory.

6.2. Extract the Hadoop package

[hadoop@datanode101 opt]$ su - root

Password:

Last login: Sun Apr 15 01:41:52 CST 2018 on pts/0

[root@datanode101 ~]# cd /opt

[root@datanode101 opt]# mkdir hadoop

[root@datanode101 opt]#

[root@datanode101 opt]# ls -la

total 493012

drwxr-xr-x. 4 root root 92 Apr 15 10:11 .

dr-xr-xr-x. 17 root root 244 Oct 28 11:54 ..

drwxr-xr-x 3 root root 26 Apr 15 10:11 hadoop

-rw-r--r-- 1 root root 366744329 Apr 15 01:46 hadoop-2.9.0.tar.gz

drwxr-xr-x 3 root root 25 Apr 15 01:26 java

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]# tar -zxvf hadoop-2.9.0.tar.gz

……

hadoop-2.9.0/share/hadoop/common/jdiff/hadoop-core_0.20.0.xml

hadoop-2.9.0/share/hadoop/common/jdiff/hadoop-core_0.21.0.xml

hadoop-2.9.0/share/hadoop/common/jdiff/hadoop_0.19.2.xml

hadoop-2.9.0/share/hadoop/common/jdiff/Apache_Hadoop_Common_2.6.0.xml

hadoop-2.9.0/share/hadoop/common/jdiff/Apache_Hadoop_Common_2.7.2.xml

hadoop-2.9.0/share/hadoop/common/sources/

hadoop-2.9.0/share/hadoop/common/sources/hadoop-common-2.9.0-test-sources.jar

hadoop-2.9.0/share/hadoop/common/sources/hadoop-common-2.9.0-sources.jar

[root@datanode101 opt]# ls -la

total 493012

drwxr-xr-x. 5 root root 112 Apr 15 10:08 .

dr-xr-xr-x. 17 root root 244 Oct 28 11:54 ..

drwxr-xr-x 2 root root 6 Apr 15 10:02 hadoop

drwxr-xr-x 9 hadoop hadoop 149 Nov 14 07:28 hadoop-2.9.0

-rw-r--r-- 1 root root 366744329 Apr 15 01:46 hadoop-2.9.0.tar.gz

drwxr-xr-x 3 root root 25 Apr 15 01:26 java

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]# mv hadoop-2.9.0 hadoop/

[root@datanode101 opt]# ls -la

total 493012

drwxr-xr-x. 4 root root 92 Apr 15 10:11 .

dr-xr-xr-x. 17 root root 244 Oct 28 11:54 ..

drwxr-xr-x 3 root root 26 Apr 15 10:11 hadoop

-rw-r--r-- 1 root root 366744329 Apr 15 01:46 hadoop-2.9.0.tar.gz

drwxr-xr-x 3 root root 25 Apr 15 01:26 java

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@datanode101 opt]#

6.3. Change the owner of the Hadoop directory to hadoop

[root@datanode101 opt]# chown hadoop:hadoop -R hadoop/

[root@datanode101 opt]#

6.4. Copy the Hadoop package to all other nodes

[root@datanode101 opt]# scp hadoop-2.9.0.tar.gz root@192.168.1.112:/opt/

root@192.168.1.112's password:

hadoop-2.9.0.tar.gz 100% 350MB 21.9MB/s 00:16

[root@datanode101 opt]# scp hadoop-2.9.0.tar.gz root@192.168.1.113:/opt/

root@192.168.1.113's password:

hadoop-2.9.0.tar.gz 100% 350MB 21.9MB/s 00:16

[root@datanode101 opt]# scp hadoop-2.9.0.tar.gz root@192.168.1.121:/opt/

root@192.168.1.121's password:

hadoop-2.9.0.tar.gz 100% 350MB 20.6MB/s 00:17

[root@datanode101 opt]# scp hadoop-2.9.0.tar.gz root@192.168.1.122:/opt/

root@192.168.1.122's password:

hadoop-2.9.0.tar.gz 100% 350MB 26.9MB/s 00:13

[root@datanode101 opt]#

6.5. Hadoop environment configuration

6.5.1. Add the Hadoop installation path to /etc/profile

[root@datanode101 opt]# vi /etc/profile

export JAVA_HOME=/opt/java/jdk1.7.0_45

export HADOOP_HOME=/opt/hadoop/hadoop-2.9.0

export CLASSPATH=.:$JAVA_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

# export JAVA_HOME=/opt/java/jdk1.7.0_45

# export HADOOP_HOME=/opt/hadoop/hadoop-2.9.0

# export CLASSPATH=.:$JAVA_HOME/lib:$CLASSPATH

# export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$PATH

export JAVA_HOME=/opt/java/jdk1.7.0_45

export CLASSPATH=.:$JAVA_HOME/lib:$CLASSPATH

export HADOOP_HOME=/opt/hadoop/hadoop-2.9.0

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_HOME=$HADOOP_HOME

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export LD_LIBRARY_PATH=$JAVA_HOME/jre/lib/amd64/server:/usr/local/lib:$HADOOP_HOME/lib/native

export JAVA_LIBRARY_PATH=$LD_LIBRARY_PATH:$JAVA_LIBRARY_PATH

[root@datanode101 opt]# source /etc/profile

[root@datanode101 opt]#
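Because these lines are sourced into every login shell, a malformed entry (a stray space after a colon, or a trailing colon) silently changes `PATH` for every user. A sketch of a quick sanity check to run after `source /etc/profile`; `check_path_entries` is a hypothetical helper that lists empty entries (which the shell searches as the current directory) and entries that are not existing directories:

```shell
# Hypothetical helper: report PATH entries that are empty or are not
# existing directories. Returns non-zero if any problem is found.
check_path_entries() {
    path_value=${1-$PATH}
    # One entry per line; an empty line is an empty PATH entry.
    printf '%s\n' "$path_value" | tr ':' '\n' | (
        status=0
        while IFS= read -r entry; do
            if [ -z "$entry" ]; then
                echo "empty entry (searched as the current directory)"
                status=1
            elif [ ! -d "$entry" ]; then
                echo "not a directory: '$entry'"
                status=1
            fi
        done
        exit "$status"
    )
}
```

For example: `source /etc/profile && check_path_entries && echo "PATH looks fine"`.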

6.5.2. Create directories

[hadoop@datanode101 opt]$ mkdir /opt/hadoop/tmp

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/data/hdfs/name

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/data/hdfs/data

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/data/hdfs/checkpoint

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/data/yarn/local

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/pid

[hadoop@datanode101 opt]$ mkdir -p /opt/hadoop/log
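The same directory tree is needed on every node, since the XML files in 6.5.5 to 6.5.8 point at these paths. Note that hadoop-env.sh in 6.5.3 sets HADOOP_PID_DIR and HADOOP_LOG_DIR to /home/hadoop/pid and /home/hadoop/log, so the pid and log directories created here are not the ones the daemons use unless those two settings are aligned. A sketch, with `make_hadoop_dirs` a hypothetical helper that takes the base directory as an argument:

```shell
# Hypothetical helper: create the local directories referenced by the
# Hadoop configuration under BASE (default /opt/hadoop). Run as hadoop.
make_hadoop_dirs() {
    base=${1:-/opt/hadoop}
    for sub in tmp data/hdfs/name data/hdfs/data data/hdfs/checkpoint \
               data/yarn/local pid log; do
        mkdir -p "$base/$sub" || return 1
    done
}
```

On each node, `make_hadoop_dirs /opt/hadoop` reproduces the seven mkdir commands above; `mkdir -p` makes it safe to re-run.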

6.5.3. Configure hadoop-env.sh

[root@datanode101 opt]# exit

logout

[hadoop@datanode101 ~]$ cd /opt/

[hadoop@datanode101 opt]$ vi /opt/hadoop/hadoop-2.9.0/etc/hadoop/hadoop-env.sh

[hadoop@datanode101 opt]$

# export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/opt/java/jdk1.7.0_45

export HADOOP_PID_DIR=/home/hadoop/pid

export HADOOP_LOG_DIR=/home/hadoop/log

[hadoop@datanode101 opt]$ source /etc/profile

[hadoop@datanode101 opt]$ source /opt/hadoop/hadoop-2.9.0/etc/hadoop/hadoop-env.sh

[hadoop@datanode101 opt]$

[hadoop@datanode101 opt]$ hadoop version

Hadoop 2.9.0

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 756ebc8394e473ac25feac05fa493f6d612e6c50

Compiled by arsuresh on 2017-11-13T23:15Z

Compiled with protoc 2.5.0

From source with checksum 0a76a9a32a5257331741f8d5932f183

This command was run using /opt/hadoop/hadoop-2.9.0/share/hadoop/common/hadoop-common-2.9.0.jar

[hadoop@datanode101 opt]$

6.5.4. Configure yarn-env.sh

[hadoop@datanode101 opt]$ vi /opt/hadoop/hadoop-2.9.0/etc/hadoop/yarn-env.sh

[hadoop@datanode101 opt]$

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/opt/java/jdk1.7.0_45

6.5.5. Configure etc/hadoop/core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.121:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

</configuration>

6.5.6. Configure etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.1.121:50070</value>

</property>

<property>

<name>dfs.namenode.http-bind-host</name>

<value>192.168.1.121</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.1.122:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop/data/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop/data/hdfs/data</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/opt/hadoop/data/hdfs/checkpoint</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>67108864</value>

</property>

</configuration>
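`dfs.blocksize` is given in bytes: 67108864 = 64 × 1024 × 1024, i.e. 64 MiB, half of the Hadoop 2.x default of 128 MiB. Once the files are in place, `hdfs getconf -confKey` prints the value Hadoop actually resolves for a key, which is a quick way to confirm the XML was picked up. A sketch; `bytes_to_mib` and `show_hdfs_conf` are hypothetical helpers:

```shell
# Convert a byte count to MiB (integer division).
bytes_to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}

# Hypothetical helper: print the effective value of selected HDFS keys.
# Requires the hdfs command on PATH (section 6.5.1).
show_hdfs_conf() {
    for key in fs.defaultFS dfs.replication dfs.blocksize \
               dfs.namenode.name.dir dfs.datanode.data.dir \
               dfs.namenode.checkpoint.dir; do
        printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}
```

Running `show_hdfs_conf` on any node should echo the values from core-site.xml and hdfs-site.xml; a default value in the output suggests the file was not found in HADOOP_CONF_DIR.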

6.5.7. Configure etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

6.5.8. Configure etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.121</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/opt/hadoop/data/yarn/local</value>

</property>

</configuration>

6.5.9. Configure etc/hadoop/slaves

[hadoop@namenode hadoop]$ vi /opt/hadoop/hadoop-2.9.0/etc/hadoop/slaves

192.168.1.111

192.168.1.112

192.168.1.113

6.6. Copy the configuration files to all other nodes

[hadoop@namenode hadoop]$ scp core-site.xml hadoop@192.168.1.111:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

core-site.xml 100% 1042 233.7KB/s 00:00

[hadoop@namenode hadoop]$ scp core-site.xml hadoop@192.168.1.112:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

core-site.xml 100% 1042 529.6KB/s 00:00

[hadoop@namenode hadoop]$ scp core-site.xml hadoop@192.168.1.113:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

core-site.xml 100% 1042 435.4KB/s 00:00

[hadoop@namenode hadoop]$ scp core-site.xml hadoop@192.168.1.122:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

core-site.xml 100% 1042 331.9KB/s 00:00

[hadoop@namenode hadoop]$ scp hdfs-site.xml hadoop@192.168.1.111:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

hdfs-site.xml 100% 1655 349.8KB/s 00:00

[hadoop@namenode hadoop]$ scp hdfs-site.xml hadoop@192.168.1.112:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

hdfs-site.xml 100% 1655 380.2KB/s 00:00

[hadoop@namenode hadoop]$ scp hdfs-site.xml hadoop@192.168.1.113:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

hdfs-site.xml 100% 1655 953.8KB/s 00:00

[hadoop@namenode hadoop]$ scp hdfs-site.xml hadoop@192.168.1.122:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

hdfs-site.xml 100% 1655 1.6MB/s 00:00

[hadoop@namenode hadoop]$ scp mapred-site.xml hadoop@192.168.1.111:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

mapred-site.xml 100% 844 241.9KB/s 00:00

[hadoop@namenode hadoop]$ scp mapred-site.xml hadoop@192.168.1.112:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

mapred-site.xml 100% 844 251.0KB/s 00:00

[hadoop@namenode hadoop]$ scp mapred-site.xml hadoop@192.168.1.113:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

mapred-site.xml 100% 844 211.0KB/s 00:00

[hadoop@namenode hadoop]$ scp mapred-site.xml hadoop@192.168.1.122:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

mapred-site.xml 100% 844 256.3KB/s 00:00

[hadoop@namenode hadoop]$ scp yarn-site.xml hadoop@192.168.1.111:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 1016 254.6KB/s 00:00

[hadoop@namenode hadoop]$ scp yarn-site.xml hadoop@192.168.1.112:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 1016 531.6KB/s 00:00

[hadoop@namenode hadoop]$ scp yarn-site.xml hadoop@192.168.1.113:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 1016 378.3KB/s 00:00

[hadoop@namenode hadoop]$ scp yarn-site.xml hadoop@192.168.1.122:/opt/hadoop/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 1016 303.9KB/s 00:00

[hadoop@namenode hadoop]$
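The sixteen scp commands above follow one pattern: four files to four hosts. A loop version, assuming the same paths and that the hadoop user already has passwordless SSH from the NameNode (section 4); `push_configs`, `CONF_DIR`, `run` and `DRY_RUN` are hypothetical, and by default the commands are only printed:

```shell
# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: copy the site files from this node's config
# directory to the same directory on each host given as an argument.
push_configs() {
    conf_dir=${CONF_DIR:-/opt/hadoop/hadoop-2.9.0/etc/hadoop}
    for host in "$@"; do
        for file in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
            run scp "$conf_dir/$file" "hadoop@$host:$conf_dir/"
        done
    done
}
```

For example: `DRY_RUN=0 push_configs 192.168.1.111 192.168.1.112 192.168.1.113 192.168.1.122`.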

7. Starting the cluster

7.1. Start HDFS

7.1.1. Format the NameNode

[hadoop@namenode hadoop]$ hdfs namenode -format

18/04/15 19:47:45 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = namenode.smartmap.com/192.168.1.121

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.9.0
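Formatting is a one-time step: running `hdfs namenode -format` again generates a new cluster ID, and DataNodes that still hold data from the old one will typically refuse to register. A guard sketch, assuming the `dfs.namenode.name.dir` from 6.5.6 and that a formatted directory contains `current/VERSION`; `format_namenode_once` is a hypothetical helper:

```shell
# Hypothetical helper: format the NameNode only if its metadata directory
# has not been formatted yet.
format_namenode_once() {
    name_dir=${1:-/opt/hadoop/data/hdfs/name}
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "already formatted ($name_dir/current/VERSION exists), skipping"
        return 0
    fi
    hdfs namenode -format
}
```

Run it as the hadoop user on the NameNode in place of the bare format command.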

7.1.2. Start and stop HDFS

[hadoop@namenode hadoop]$ . /opt/hadoop/hadoop-2.9.0/sbin/start-dfs.sh

/usr/bin/which: invalid option -- 'b'

/usr/bin/which: invalid option -- 's'

/usr/bin/which: invalid option -- 'h'

Usage: /usr/bin/which [options] [--] COMMAND [...]

Write the full path of COMMAND(s) to standard output.

……

Report bugs to <which-bugs@gnu.org>.

Starting namenodes on [nameNode]

nameNode: starting namenode, logging to /home/hadoop/log/hadoop-hadoop-namenode-namenode.smartmap.com.out

192.168.1.112: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode102.smartmap.com.out

192.168.1.111: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode101.smartmap.com.out

192.168.1.113: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode103.smartmap.com.out

Starting secondary namenodes [secondaryNameNode]

secondaryNameNode: starting secondarynamenode, logging to /home/hadoop/log/hadoop-hadoop-secondarynamenode-secondarynamenode.smartmap.com.out

[hadoop@namenode hadoop]$ . /opt/hadoop/hadoop-2.9.0/sbin/stop-dfs.sh

/usr/bin/which: invalid option -- 'b'

/usr/bin/which: invalid option -- 's'

/usr/bin/which: invalid option -- 'h'

Usage: /usr/bin/which [options] [--] COMMAND [...]

Write the full path of COMMAND(s) to standard output.

……

Report bugs to <which-bugs@gnu.org>.

Stopping namenodes on [nameNode]

nameNode: stopping namenode

192.168.1.113: stopping datanode

192.168.1.111: stopping datanode

192.168.1.112: stopping datanode

Stopping secondary namenodes [secondaryNameNode]

secondaryNameNode: stopping secondarynamenode

[hadoop@namenode hadoop]$
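The `/usr/bin/which: invalid option` lines appear to be a side effect of starting the scripts with `. ` (sourcing them into the login shell) rather than executing them: a sourced script sees the caller's `$0`, which is `-bash` in a login shell, and the rejected options b, s and h are exactly the letters of that name apart from `a`, which `which` accepts. This is an inference from the output, not something the transcript confirms. Executing the scripts directly (`start-dfs.sh`, `stop-dfs.sh`, both on `PATH` through `$HADOOP_HOME/sbin`) is the usual form. The small demo below only shows the `$0` difference:

```shell
# Demo: $0 inside a script depends on how the script is started.
demo_script=$(mktemp)
printf 'echo "$0"\n' > "$demo_script"

executed=$(sh "$demo_script")   # run as a program: $0 is the script path
sourced=$(. "$demo_script")     # sourced: $0 is the calling shell's $0 (e.g. -bash)

echo "executed: $executed"
echo "sourced:  $sourced"
rm -f "$demo_script"

# Form suggested for the Hadoop control scripts (not run here):
#   /opt/hadoop/hadoop-2.9.0/sbin/start-dfs.sh
#   /opt/hadoop/hadoop-2.9.0/sbin/stop-dfs.sh
```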

7.2. Start YARN

[hadoop@namenode sbin]$ . /opt/hadoop/hadoop-2.9.0/sbin/start-yarn.sh

starting yarn daemons

/usr/bin/which: invalid option -- 'b'

/usr/bin/which: invalid option -- 's'

/usr/bin/which: invalid option -- 'h'

Usage: /usr/bin/which [options] [--] COMMAND [...]

Write the full path of COMMAND(s) to standard output.

……

Report bugs to <which-bugs@gnu.org>.

starting resourcemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-resourcemanager-namenode.smartmap.com.out

192.168.1.113: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode103.smartmap.com.out

192.168.1.112: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode102.smartmap.com.out

192.168.1.111: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode101.smartmap.com.out

[hadoop@namenode sbin]$

To see the underlying cause in more detail, set the logger level to DEBUG:

export HADOOP_ROOT_LOGGER=DEBUG,console

7.3. Start all services

[hadoop@namenode sbin]$ . /opt/hadoop/hadoop-2.9.0/sbin/start-all..sh

-bash: /opt/hadoop/hadoop-2.9.0/sbin/start-all..sh: No such file or directory

[hadoop@namenode sbin]$ . /opt/hadoop/hadoop-2.9.0/sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [nameNode]

nameNode: starting namenode, logging to /home/hadoop/log/hadoop-hadoop-namenode-namenode.smartmap.com.out

192.168.1.111: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode101.smartmap.com.out

192.168.1.113: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode103.smartmap.com.out

192.168.1.112: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-datanode102.smartmap.com.out

Starting secondary namenodes [secondaryNameNode]

secondaryNameNode: starting secondarynamenode, logging to /home/hadoop/log/hadoop-hadoop-secondarynamenode-secondarynamenode.smartmap.com.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-resourcemanager-namenode.smartmap.com.out

192.168.1.111: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode101.smartmap.com.out

192.168.1.112: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode102.smartmap.com.out

192.168.1.113: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.0/logs/yarn-hadoop-nodemanager-datanode103.smartmap.com.out

[hadoop@namenode sbin]$

[hadoop@namenode sbin]$ . /opt/hadoop/hadoop-2.9.0/sbin/stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [nameNode]

nameNode: stopping namenode

192.168.1.111: stopping datanode

192.168.1.112: stopping datanode

192.168.1.113: stopping datanode

Stopping secondary namenodes [secondaryNameNode]

secondaryNameNode: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

192.168.1.111: stopping nodemanager

192.168.1.113: stopping nodemanager

192.168.1.112: stopping nodemanager

192.168.1.111: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

192.168.1.113: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

192.168.1.112: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

no proxyserver to stop

[hadoop@namenode sbin]$

7.4. Check the Hadoop processes running on the server

[hadoop@namenode sbin]$ jps

3729 ResourceManager

4012 Jps

3444 NameNode
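Given the configuration above, the expected daemons are NameNode and ResourceManager on 192.168.1.121, SecondaryNameNode on 192.168.1.122, and DataNode plus NodeManager on 192.168.1.111 to 192.168.1.113. A sketch that reads `jps` output on standard input and prints whichever expected names are missing; `missing_daemons` is a hypothetical helper:

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    jps_out=$(cat)
    for name in "$@"; do
        # jps prints "<pid> <MainClass>", so match the second field exactly.
        printf '%s\n' "$jps_out" |
            awk -v n="$name" '$2 == n { f = 1 } END { exit !f }' || echo "$name"
    done
}
```

For example `jps | missing_daemons NameNode ResourceManager` on the NameNode, or `ssh hadoop@192.168.1.111 /opt/java/jdk1.7.0_45/bin/jps | missing_daemons DataNode NodeManager` from it; the full jps path is used because a non-interactive ssh session may not read /etc/profile. Empty output means everything expected is running.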

7.5. Check node status

[hadoop@namenode sbin]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Configured Capacity: 115907493888 (107.95 GB)

Present Capacity: 109141913600 (101.65 GB)

DFS Remaining: 109141876736 (101.65 GB)

DFS Used: 36864 (36 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.1.111:50010 (dataNode101)

Hostname: dataNode101

Decommission Status : Normal

Configured Capacity: 38635831296 (35.98 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 2255196160 (2.10 GB)

DFS Remaining: 36380622848 (33.88 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.16%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Apr 15 21:03:11 CST 2018

Last Block Report: Sun Apr 15 20:10:07 CST 2018

Name: 192.168.1.112:50010 (dataNode102)

Hostname: dataNode102

Decommission Status : Normal

Configured Capacity: 38635831296 (35.98 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 2255192064 (2.10 GB)

DFS Remaining: 36380626944 (33.88 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.16%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Apr 15 21:03:11 CST 2018

Last Block Report: Sun Apr 15 20:10:07 CST 2018

Name: 192.168.1.113:50010 (dataNode103)

Hostname: dataNode103

Decommission Status : Normal

Configured Capacity: 38635831296 (35.98 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 2255192064 (2.10 GB)

DFS Remaining: 36380626944 (33.88 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.16%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Apr 15 21:03:11 CST 2018

Last Block Report: Sun Apr 15 20:10:07 CST 2018

[hadoop@namenode sbin]$
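The report is long. The sketch below is an awk filter, summarize_report (a hypothetical helper), that keeps only the live-node count and each DataNode's hostname with its DFS Remaining%. It assumes the field labels shown above, which may differ in other Hadoop versions. Running `hdfs dfsadmin -report` instead of `hadoop dfsadmin -report` also avoids the DEPRECATED notice.

```shell
# summarize_report: condenses `hdfs dfsadmin -report` output (read on stdin)
# into "live=N" followed by one "hostname remaining%" line per DataNode.
summarize_report() {
  awk '
    /^Live datanodes \(/ { gsub(/[^0-9]/, ""); print "live=" $0 }
    /^Hostname:/         { host = $2 }
    /^DFS Remaining%:/   { if (host != "") print host, $3; host = "" }
  '
}
```

Usage: `hdfs dfsadmin -report | summarize_report`. With the report above, this prints live=3 followed by three lines such as `dataNode101 94.16%`.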

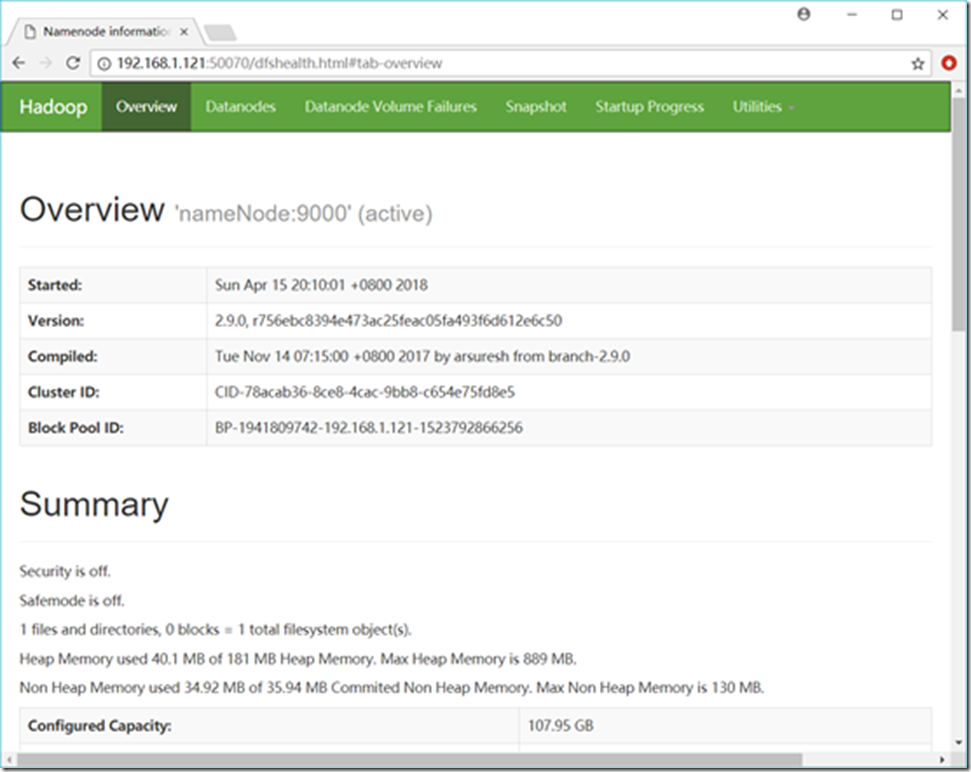

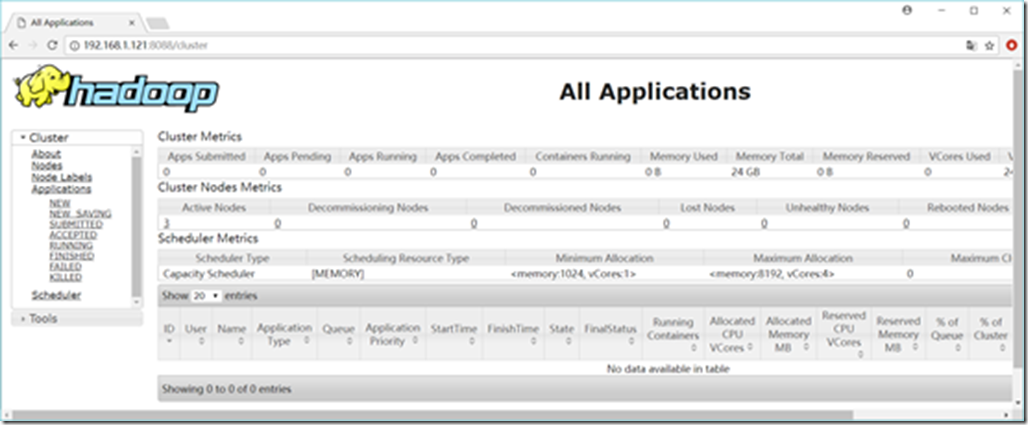

7.6. View the cluster in a web browser

HDFS (NameNode) web UI:

http://192.168.1.121:50070/dfshealth.html#tab-overview

YARN (ResourceManager) web UI:

http://192.168.1.121:8088/cluster
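The NameNode's JMX servlet also exposes the live-node count on the same web port. The sketch below assumes the FSNamesystemState bean and its NumLiveDataNodes attribute as found in Hadoop 2.x. The helper name is hypothetical, and it uses sed so that no JSON tool is required.

```shell
# live_datanodes_from_jmx: extracts NumLiveDataNodes from the NameNode's
# JMX JSON read on stdin. Assumes the attribute name and its value sit on
# one line; whitespace around the colon is tolerated.
live_datanodes_from_jmx() {
  sed -n 's/.*"NumLiveDataNodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}
```

Usage: `curl -s 'http://192.168.1.121:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState' | live_datanodes_from_jmx` should print 3 for this cluster.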

8. Running the bundled WordCount example

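8.1. Locate the example program

WordCount is packaged in hadoop-mapreduce-examples-2.9.0.jar, in the share/hadoop/mapreduce directory of the installation: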

[hadoop@namenode sbin]$ cd /opt/hadoop/hadoop-2.9.0/share/hadoop/mapreduce/

[hadoop@namenode mapreduce]$ ll

total 5196

-rw-r--r-- 1 hadoop hadoop 571621 Nov 14 07:28 hadoop-mapreduce-client-app-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 787871 Nov 14 07:28 hadoop-mapreduce-client-common-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 1611701 Nov 14 07:28 hadoop-mapreduce-client-core-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 200628 Nov 14 07:28 hadoop-mapreduce-client-hs-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 32802 Nov 14 07:28 hadoop-mapreduce-client-hs-plugins-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 71360 Nov 14 07:28 hadoop-mapreduce-client-jobclient-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 1623508 Nov 14 07:28 hadoop-mapreduce-client-jobclient-2.9.0-tests.jar

-rw-r--r-- 1 hadoop hadoop 85175 Nov 14 07:28 hadoop-mapreduce-client-shuffle-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 303317 Nov 14 07:28 hadoop-mapreduce-examples-2.9.0.jar

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 jdiff

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 lib

drwxr-xr-x 2 hadoop hadoop 30 Nov 14 07:28 lib-examples

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 sources

[hadoop@namenode mapreduce]$
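To avoid hard-coding the version in the jar path, the sketch below globs for the examples jar under a Hadoop install. The helper name, find_examples_jar, is hypothetical, and the default install path is assumed from the listing above.

```shell
# find_examples_jar: prints the path of the MapReduce examples jar under a
# Hadoop install (argument, else $HADOOP_HOME, else the assumed default).
# Returns non-zero if no such jar exists.
find_examples_jar() {
  local home="${1:-${HADOOP_HOME:-/opt/hadoop/hadoop-2.9.0}}"
  local jar
  for jar in "$home"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
    # With no match, bash leaves the pattern unexpanded; treat that as "not found".
    [ -e "$jar" ] || return 1
    # Skip auxiliary jars in case any share the prefix.
    case "$jar" in *-sources.jar|*-tests.jar) continue ;; esac
    printf '%s\n' "$jar"
    return 0
  done
  return 1
}
```

Usage: `hadoop jar "$(find_examples_jar)"` with no program name prints the list of bundled example programs, which includes wordcount.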

8.2. Prepare the data

8.2.1. Create the data directories in HDFS

[hadoop@namenode mapreduce]$ hadoop fs -mkdir -p /data/wordcount

[hadoop@namenode mapreduce]$ hadoop fs -mkdir -p /output/

[hadoop@namenode mapreduce]$

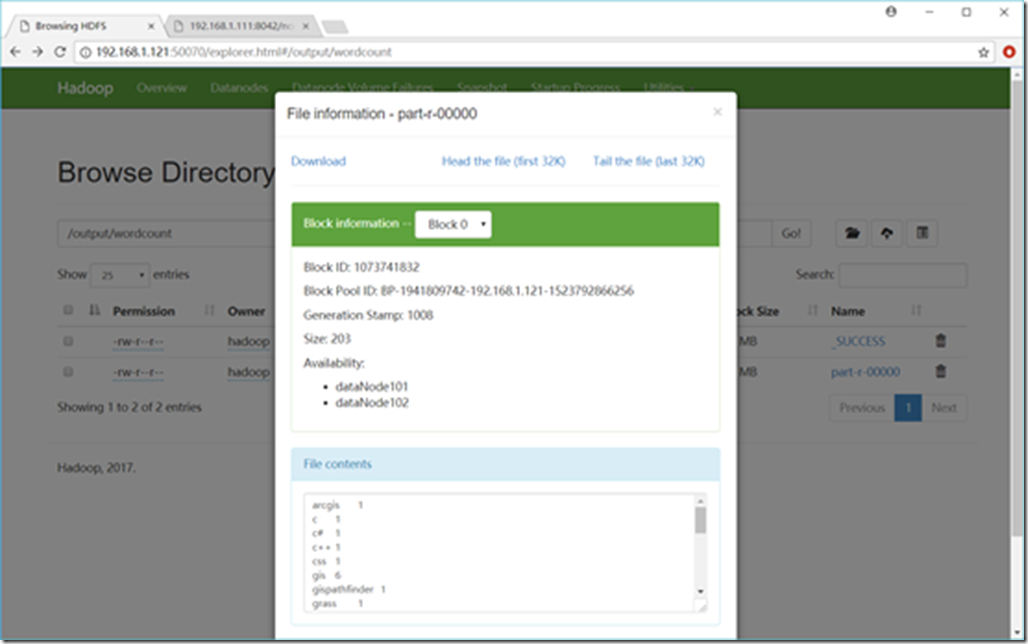

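The directory /data/wordcount holds the input file for Hadoop's bundled WordCount example, and the job writes its results to /output/wordcount. Only the parent directory /output/ is created here. The job creates /output/wordcount itself and fails if that directory already exists.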

8.2.2. Create the test data

[hadoop@namenode mapreduce]$ vi /opt/hadoop/inputWord
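The vi step is interactive. The sketch below writes the same 19 lines shown in 8.2.5 non-interactively, using a here-document. The helper name is hypothetical. The resulting file is 241 bytes, which matches the size reported by hadoop fs -ls in 8.2.4 and the job's Bytes Read counter.

```shell
# write_input_word: writes the WordCount sample input to the given path
# (default /opt/hadoop/inputWord), one "<word> <word>" pair per line.
write_input_word() {
  local path="${1:-/opt/hadoop/inputWord}"
  # Quoted 'EOF' turns off $-expansion, so the text is written exactly as typed.
  cat > "$path" <<'EOF'
hello smartmap
hello gispathfinder
language c
language c++
language c#
language java
language python
language r
web javascript
web html
web css
gis arcgis
gis supermap
gis mapinfo
gis qgis
gis grass
gis sage
webgis leaflet
webgis openlayers
EOF
}
```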

8.2.3. Upload the data

[hadoop@namenode mapreduce]$ hadoop fs -put /opt/hadoop/inputWord /data/wordcount/

8.2.4. List the file

[hadoop@namenode mapreduce]$ hadoop fs -ls /data/wordcount/

Found 1 items

-rw-r--r-- 2 hadoop supergroup 241 2018-04-15 22:01 /data/wordcount/inputWord

8.2.5. View the file contents

[hadoop@namenode mapreduce]$ hadoop fs -text /data/wordcount/inputWord

hello smartmap

hello gispathfinder

language c

language c++

language c#

language java

language python

language r

web javascript

web html

web css

gis arcgis

gis supermap

gis mapinfo

gis qgis

gis grass

gis sage

webgis leaflet

webgis openlayers

[hadoop@namenode mapreduce]$

8.3. Run the WordCount example

[hadoop@namenode mapreduce]$ hadoop jar /opt/hadoop/hadoop-2.9.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.0.jar wordcount /data/wordcount /output/wordcount

18/04/15 22:10:08 INFO client.RMProxy: Connecting to ResourceManager at /192.168.1.121:8032

18/04/15 22:10:09 INFO input.FileInputFormat: Total input files to process : 1

18/04/15 22:10:09 INFO mapreduce.JobSubmitter: number of splits:1

18/04/15 22:10:09 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

18/04/15 22:10:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1523794216379_0001

18/04/15 22:10:10 INFO impl.YarnClientImpl: Submitted application application_1523794216379_0001

18/04/15 22:10:10 INFO mapreduce.Job: The url to track the job: http://nameNode:8088/proxy/application_1523794216379_0001/

18/04/15 22:10:10 INFO mapreduce.Job: Running job: job_1523794216379_0001

18/04/15 22:10:20 INFO mapreduce.Job: Job job_1523794216379_0001 running in uber mode : false

18/04/15 22:10:20 INFO mapreduce.Job: map 0% reduce 0%

18/04/15 22:10:30 INFO mapreduce.Job: map 100% reduce 0%

18/04/15 22:10:40 INFO mapreduce.Job: map 100% reduce 100%

18/04/15 22:10:40 INFO mapreduce.Job: Job job_1523794216379_0001 completed successfully

18/04/15 22:10:40 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=305

FILE: Number of bytes written=404363

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=356

HDFS: Number of bytes written=203

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=7775

Total time spent by all reduces in occupied slots (ms)=6275

Total time spent by all map tasks (ms)=7775

Total time spent by all reduce tasks (ms)=6275

Total vcore-milliseconds taken by all map tasks=7775

Total vcore-milliseconds taken by all reduce tasks=6275

Total megabyte-milliseconds taken by all map tasks=7961600

Total megabyte-milliseconds taken by all reduce tasks=6425600

Map-Reduce Framework

Map input records=19

Map output records=38

Map output bytes=393

Map output materialized bytes=305

Input split bytes=115

Combine input records=38

Combine output records=24

Reduce input groups=24

Reduce shuffle bytes=305

Reduce input records=24

Reduce output records=24

Spilled Records=48

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=1685

CPU time spent (ms)=2810

Physical memory (bytes) snapshot=476082176

Virtual memory (bytes) snapshot=1810911232

Total committed heap usage (bytes)=317718528

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=241

File Output Format Counters

Bytes Written=203

[hadoop@namenode mapreduce]$
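Running the same command a second time fails because /output/wordcount already exists. The sketch below is a re-runnable wrapper, run_wordcount (a hypothetical helper), that deletes the old output before resubmitting the job. Its defaults are the paths used in this section. Deleting the output discards the previous results.

```shell
# run_wordcount [jar] [input] [output]: removes the output directory,
# then submits the WordCount job with the same arguments as above.
run_wordcount() {
  local jar="${1:-/opt/hadoop/hadoop-2.9.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.0.jar}"
  local in="${2:-/data/wordcount}"
  local out="${3:-/output/wordcount}"
  # -r: recursive; -f: no error if the directory does not exist yet.
  hadoop fs -rm -r -f "$out" || return 1
  hadoop jar "$jar" wordcount "$in" "$out"
}
```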

8.4. View the results

8.4.1. From the command line on the server

[hadoop@namenode mapreduce]$ hadoop fs -ls /output/wordcount

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2018-04-15 22:10 /output/wordcount/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 203 2018-04-15 22:10 /output/wordcount/part-r-00000

[hadoop@namenode mapreduce]$ hadoop fs -text /output/wordcount/part-r-00000

arcgis 1

c 1

c# 1

c++ 1

css 1

gis 6

gispathfinder 1

grass 1

hello 2

html 1

java 1

javascript 1

language 6

leaflet 1

mapinfo 1

openlayers 1

python 1

qgis 1

r 1

sage 1

smartmap 1

supermap 1

web 3

webgis 2

[hadoop@namenode mapreduce]$
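To check the result independently of Hadoop, you can recompute the same counts locally with standard tools. This approximates WordCount rather than reproducing its implementation. It splits on whitespace, as the example's tokenizer does, and sorts bytewise (LC_ALL=C) to match the key order shown above. The helper name is hypothetical.

```shell
# local_wordcount FILE: prints "word<TAB>count" for every word in FILE,
# sorted bytewise, in the same layout as part-r-00000.
local_wordcount() {
  tr -s '[:space:]' '\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | LC_ALL=C uniq -c \
    | awk '{ print $2 "\t" $1 }'
}
```

Usage (process substitution requires bash): `diff <(hadoop fs -cat /output/wordcount/part-r-00000) <(local_wordcount /opt/hadoop/inputWord) && echo identical`. An empty diff means the cluster and the local pipeline agree.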

8.4.2. In the Web UI

http://192.168.1.121:50070/explorer.html#/output/wordcount
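You can also fetch the same file over HTTP through WebHDFS. The sketch below is a URL builder. It assumes WebHDFS is enabled (dfs.webhdfs.enabled, which is true by default in Hadoop 2.x) and uses the NameNode HTTP address from above. NAMENODE_HTTP and webhdfs_url are hypothetical names.

```shell
# webhdfs_url PATH [OP]: prints the WebHDFS REST URL for an HDFS path.
# OP defaults to OPEN; NAMENODE_HTTP (hypothetical) overrides host:port.
webhdfs_url() {
  local path="$1" op="${2:-OPEN}"
  local nn="${NAMENODE_HTTP:-192.168.1.121:50070}"
  printf 'http://%s/webhdfs/v1%s?op=%s\n' "$nn" "$path" "$op"
}
```

Usage: `curl -L "$(webhdfs_url /output/wordcount/part-r-00000)"` prints the result file. The -L flag is needed because OPEN redirects to a DataNode, which may be addressed by hostname, so the client must resolve the dataNode names through the hosts entries from section 2. `curl -s "$(webhdfs_url /output/wordcount LISTSTATUS)"` lists the directory as JSON.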