1.urllib库中request,parse的学习

1.1 简单的请求页面获取,并下载到本地 request的使用

from urllib import request # 获取此网页的demout resp = request.urlopen('http://www.baidu.com') # 读出10个字符 # 结果为 b'<!DOCTYPE ' b代表bytes 是一个字节流 # '<!DOCTYPE ' 包括空格 正好十个字符 # print(resp.read(10)) # 读取一行 # 结果为 b'<!DOCTYPE html> ' # 代表换行 # ()里面可以写读几行 # print(resp.readline()) # 全部读取 二者对应的返回类型不同 # <class 'list'> readlines # <class 'bytes'> read # print(type(resp.readlines())) # print(type(resp.read())) # 下载到本地文件夹 request.urlretrieve('http://www.baidu.com', 'baidu.html')

1.2 parse的使用

1.2.1 解决中文与码的对应问题

例:中文变成码 name=%E9%AB%98%E8%BE%BE 这些带%和中文之间的转换

1 # 主要解决网址中中文是解析不了的问题 2 3 from urllib import request 4 from urllib import parse 5 6 # 中文变成码 name=%E9%AB%98%E8%BE%BE&age=21 7 8 # urlencode函数 把字典转化为字符串 9 params = {'name': '高达', 'age': '21'} 10 result = parse.urlencode(params) 11 # 结果为 12 # <class 'str'> 13 # name=%E9%AB%98%E8%BE%BE&age=21 14 print(type(result)) 15 print(result) 16 17 # 如果直接在网址写中文会报错,虽然我们看到的是中文但是实际上是中文对应的码 18 # resp = request.urlopen('https://www.baidu.com/s?wd=刘德华') 19 20 21 # 写出一个字典 22 params = {'wd': '刘德华'} 23 print(params) 24 # 转化成正确的网站上的格式 25 qs = parse.urlencode(params) 26 print(qs) 27 # 这样请求就能成功 28 resp = request.urlopen('https://www.baidu.com/s?'+qs) 29 # print(resp.readlines()) 30 # parse.parse_qs函数 还原解码 31 result = parse.parse_qs(qs) 32 # 结果为 33 # <class 'dict'> 34 # {'wd': ['刘德华']} 35 print(type(result)) 36 print(result)

1.2.2 解析网址

# 解析网址 from urllib import parse def test(): # 测试用的 url url = 'http://www.baidu.com/s;sssas?wd=python&username=abc#1' url1 = 'http://www.baidu.com/s?wd=python&username=abc#1' url2 = 'https://api.bilibili.com/x/v2/reply?callback=jQuery17204184039578913048_1580701507886&jsonp=jsonp&pn=1&type=1&oid=70059391&sort=2&_=1580701510617' result1 = parse.urlparse(url) # <class 'urllib.parse.ParseResult'> print(type(result1)) # 结果为 ParseResult(scheme='http', netloc='www.baidu.com', path='/s', params='sssas', query='wd=python&username=abc', # fragment='1') # print(result1) # print('scheme:', result1.scheme) # print('netloc:', result1.netloc) # print('path: ', result1.path) # print('params: ', result1.params) # print('query: ', result1.query) # print('fragment: ', result1.fragment) result2 = parse.urlsplit(url) print(result2) # urlparse函数与 urlsplit函数的对比 # urlparse函数多一个params result1 = parse.urlparse(url1) result2 = parse.urlsplit(url1) print(result1) print(result2) # result3 = parse.urlparse(url2) # print('r3:', result3.query) # 格式化输出函数 def myself_urlparse(url): result = parse.urlparse(url) print('scheme:', result.scheme) print('netloc:', result.netloc) print('path: ', result.path) print('params: ', result.params) print('query: ', result.query) print('fragment: ', result.fragment) def myself_urlsplit(url): result = parse.urlsplit(url) print('scheme:', result.scheme) print('netloc:', result.netloc) print('path: ', result.path) print('query: ', result.query) print('fragment: ', result.fragment) if __name__ == '__main__': test()

1.3 使用代理IP

# ip代理 from urllib import request # 没有代理是用本地ip url = 'http://www.baidu.com' resp = request.urlopen(url) # print(resp.read()) # 使用代理 handler = request.ProxyHandler({'http': '60.170.234.221:65309'}) opener = request.build_opener(handler) resp = opener.open(url) print(resp.read())

1.4CookieJar

cokkie信息可以直接写在headers请求头里面,也可以创建CookieJar对象

# cookie信息 from urllib import request from http.cookiejar import CookieJar from urllib import parse # 登录 cookiejar = CookieJar() handler = request.HTTPCookieProcessor(cookiejar) opener = request.build_opener(handler) # 最简单的就是在handers中写cookie handers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400' } data = { 'login-username': '15932639701', 'login-passwd': 'w5153300' } url = 'https://passport.bilibili.com/login' req = request.Request(url, data=parse.urlencode(data).encode('utf-8'), headers=handers, method='GET') # 自动保存cokie信息 opener.open(req) # 访问个人主页 url1 = 'https://space.bilibili.com/141417102' # 使用之前的opener req = request.Request(url1, headers=handers) resp = opener.open(url1) # 写入本地 with open('a.html', 'w', encoding='utf-8')as fp: fp.write(resp.read().decode('utf-8'))

2.1 requests的使用 它是对urllib的再次封装,它们使用的主要区别:

requests可以直接构建常用的get和post请求并发起,urllib一般要先构建get或者post请求,然后再发起请求。

import requests resp = requests.get("https://www.baidu.com/") # # # 返回响应内容 源码 返回对象str 有乱码就要decode解码 # print(type(resp.text)) # print(resp.text.decode('utf-8')) # 返回响应内容 源码 返回对象bytes # print(type(resp.content)) # print(resp.content) # print(resp.url) # https://www.baidu.com/ print(resp.encoding) # ISO-8859-1 print(resp.status_code)# 200 print(resp.headers) print(resp.cookies)

import requests # 写入请求头 # params = { # 'wd': '中国' # } headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400' } resp = requests.get("https://www.baidu.com/s?wd=%E4%B8%AD%E5%9B%BD&rsv_spt=1&rsv_iqid=0xc673aab900009518&issp=1&f=8&rsv_bp=1&rsv_idx=2&ie=utf-8&tn=56060048_11_pg&ch=6&rsv_enter=0&rsv_dl=tb&rsv_sug3=4&rsv_sug1=2&rsv_sug7=101&prefixsug=%25E4%25B8%25AD%25E5%259B%25BD%2520&rsp=1&inputT=5214&rsv_sug4=5933",headers=headers) with open('a.html', 'w', encoding='utf-8')as fp: fp.write(resp.content.decode('utf-8')) print(resp.url)

import requests # 使用代理ip proxy = { 'http': 'http://47.100.124.14/' } resp = requests.get('https://www.baidu.com', proxies=proxy) print(resp.content.decode('utf-8'))

import requests resp = requests.get("https://www.baidu.com/") # 查看cookies信息 print(resp.cookies.get_dict()) session = requests.Session()

# 处理不信任的SSL证书 # 网站是https需要 # 出现错误是因为这个网站不是这个证书 import requests resp = requests.post("https://www.baidu.com/", verify=False)

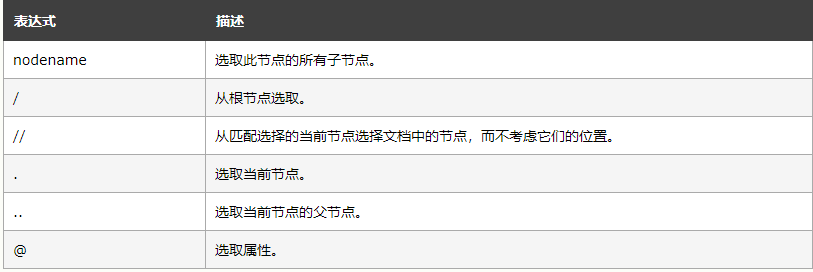

3.1 XPath

XPath语法

补充 :text()是提取该标签下的文本

图片来自于https://www.w3school.com.cn/xpath/xpath_syntax.asp

import requests from lxml import html etree = html.etree parser = etree.HTMLParser(encoding='utf-8') html = etree.parse('a.html', parser=parser) divs = html.xpath('//div') # 返回一个列表 for div in divs: print(etree.tostring(div, encoding='utf-8').decode('utf-8'))

import requests from lxml import html etree = html.etree url = 'https://www.dytt8.net/html/gndy/dyzz/list_23_1.html' headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400', 'Referer': 'https://movie.douban.com/' } resp = requests.get(url, headers=headers) # print(text) text = resp.content.decode('gbk') # print(text) html = etree.HTML(text) # []里面@XXX=XXX是寻找特定的属性,在/后面@属性是要这个值的 urls = html.xpath("//table[@class='tbspan']//a/@href") # nead_urls = [] for url in urls: # nead_urls.append('https://www.dytt8.net'+url) print('https://www.dytt8.net'+url)

import requests from lxml import html etree = html.etree # 请求头 网站url url = 'https://movie.douban.com/cinema/nowplaying/langfang/' headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400', 'Referer': 'https://movie.douban.com/' } resp = requests.get(url,headers=headers) # text str content bytes # text 解码过的 # print(resp.content.decode('utf-8')) # print(resp.text) # 转化为html 对象 <class 'lxml.etree._Element'> html = etree.HTML(resp.text) print(type(html)) ul = html.xpath("//ul[@class='lists']")[0] # print(ul) # print(etree.tostring(ul,encoding='utf-8').decode('utf-8') # 取出li标签存入lis lis = ul.xpath("./li") # print(etree.tostring(li,encoding='utf-8').decode('utf-8')) a = html.xpath("//div[@id='upcoming']//a[@data-psource='title']") for x in a: name = x.xpath('@title') href = x.xpath('@href') print(href) print(name) # for li in lis: # # 取出@data-title属性的值 # name = li.xpath("@data-title") # print(name) # # 取出图片链接 # img = li.xpath(".//img/@src") # print(img)

3.2 正则表达式

import re import requests def parse_page(url): headers = { 'User-Agent': 'Mozilla / 5.0(Windows NT 10.0;WOW64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 79.0.3945.130Safari / 537.36' } resp = requests.get(url,headers) text = resp.text # re.DOTALL .也可以匹配换行符 titles = re.findall(r'<divsclass="cont">.*?<b>(.*?)</b>', text, re.DOTALL) print(titles) def main(): # url ='https://www.gushiwen.org/default_1.aspx' for x in range(1, 10): url ='https://www.gushiwen.org/default_%s.aspx' % x parse_page(url) if __name__ == '__main__': main()