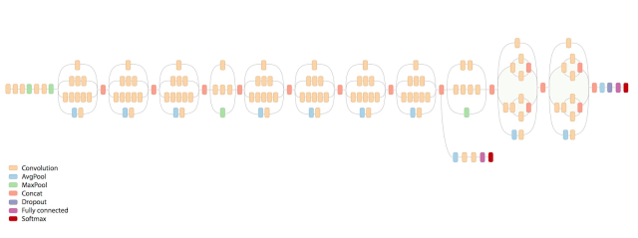

Inception V3 is an extremely large network, although the ResNet covered in the next section is not small either.

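The network is assembled sequentially. Its overall structure is roughly:

Plain convolution stem -> Inception module group 1 -> Inception module group 2 -> Inception module group 3 -> pooling -> 1x1 convolution (acts as a linear transformation) -> classifier

|_> auxiliary classifier (branches off the output of Inception module group 2)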
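To make the pipeline above more concrete, the spatial size of the feature map can be traced stage by stage with plain arithmetic. This is only a sketch, not part of the original script: `conv_out`, `size_changing_layers`, and `trace` are names made up here, and the kernel, stride, and padding values are read off the listing in this post, assuming a 299x299 input.

```python
def conv_out(size, kernel, stride=1, padding='VALID'):
    """Output length of one spatial dimension after a conv or pooling layer."""
    if padding == 'SAME':
        return -(-size // stride)          # ceil(size / stride)
    return (size - kernel) // stride + 1   # VALID: no padding


# (name, kernel, stride, padding) for the layers that can change the spatial size.
# The modules inside each Inception group use stride 1 with SAME padding,
# so only the two reduction modules appear here.
size_changing_layers = [
    ('Conv2d_1a_3x3', 3, 2, 'VALID'),
    ('Conv2d_2a_3x3', 3, 1, 'VALID'),
    ('Conv2d_2b_3x3', 3, 1, 'SAME'),
    ('MaxPool_3a_3x3', 3, 2, 'VALID'),
    ('Conv2d_3b_1x1', 1, 1, 'VALID'),
    ('Conv2d_4a_3x3', 3, 1, 'VALID'),
    ('MaxPool_5a_3x3', 3, 2, 'VALID'),
    ('Mixed_6a', 3, 2, 'VALID'),
    ('Mixed_7a', 3, 2, 'VALID'),
    # An 8x8 window on an 8x8 map gives 1x1 whatever the stride is.
    ('AvgPool_1a_8x8', 8, 1, 'VALID'),
]


def trace(size=299):
    """Return [(layer name, output size), ...] for a square input."""
    sizes = []
    for name, kernel, stride, padding in size_changing_layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


for name, size in trace():
    print('%-16s -> %d x %d' % (name, size, size))
```

The traced sizes can be compared against the shape comments next to each layer in the code.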

The code is as follows:

# Author : Hellcat
# Time   : 2017/12/12
# refer  : https://github.com/tensorflow/models/blob/master/research/inception/inception/slim/inception_model.py

import time
import math
import tensorflow as tf
from datetime import datetime

# tf.contrib was removed in TensorFlow 2.x, so this script needs TensorFlow 1.x
slim = tf.contrib.slim

# Factory for truncated-normal initializers with the given standard deviation
trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)

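def inception_v3_arg_scope(weight_decay=0.00004,
                           stddev=0.1,
                           batch_norm_var_collection='moving_vars'):
    '''
    Build the default arguments shared by the layers of the network.
    :param weight_decay: coefficient of the L2 weight regularizer
    :param stddev: standard deviation of the truncated-normal weight initializer
    :param batch_norm_var_collection: collection that holds the batch-norm moving statistics
    :return: an arg_scope to be used with slim.arg_scope
    '''
    batch_norm_params = {
        'decay': 0.9997,  # decay of the moving averages
        'epsilon': 0.001,  # added to the variance to avoid division by zero
        'updates_collections': tf.GraphKeys.UPDATE_OPS,
        'variables_collections': {
            'beta': None,
            'gamma': None,
            'moving_mean': [batch_norm_var_collection],  # moving mean
            'moving_variance': [batch_norm_var_collection]  # moving variance
        }
    }
    # Outer scope: defaults shared by conv and fully connected layers
    with slim.arg_scope([slim.conv2d, slim.fully_connected],
                        # weight regularizer
                        weights_regularizer=slim.l2_regularizer(weight_decay)):
        # Inner scope: defaults for conv layers only
        with slim.arg_scope([slim.conv2d],
                            # weight initializer
                            weights_initializer=tf.truncated_normal_initializer(stddev=stddev),
                            # activation function (slim's default is nn.relu)
                            activation_fn=tf.nn.relu,
                            # normalization function (slim's default is None)
                            normalizer_fn=slim.batch_norm,
                            # arguments of the normalization function, as a dict
                            normalizer_params=batch_norm_params) as sc:
            return sc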
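The `decay` of 0.9997 controls how slowly the batch-norm moving statistics follow new batches. The sketch below illustrates the exponential-moving-average rule in plain Python so the effect of that number is easier to see. It is not slim's implementation; `moving_average` and `steps_to_forget` are hypothetical helper names introduced only for this illustration.

```python
import math


def moving_average(values, decay=0.9997, init=0.0):
    """Exponential moving average: avg <- decay * avg + (1 - decay) * value."""
    avg = init
    for v in values:
        avg = decay * avg + (1.0 - decay) * v
    return avg


def steps_to_forget(decay, fraction=0.5):
    """Number of updates until the weight of the initial value falls to `fraction`."""
    # After n updates the initial value is weighted by decay ** n.
    return math.ceil(math.log(fraction) / math.log(decay))


# Feeding a constant 1.0 for 1000 steps moves the average only part of the way.
print(moving_average([1.0] * 1000))
# Updates needed before the initial value contributes less than half.
print(steps_to_forget(0.9997))
```

With a decay this close to 1, the statistics need thousands of training steps to settle, which matters when a checkpoint is evaluated early in training.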

def inception_v3_base(inputs, scope=None):
    # Key intermediate tensors that are exposed to the caller
    end_points = {}
    # Use `scope` as the scope name, falling back to 'Inception_v3' when it is None;
    # [inputs] lists the input tensors
    with tf.variable_scope(scope, 'Inception_v3', [inputs]):
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='VALID'):
            # Input: 299*299*3
            net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')  # 149*149*32
            net = slim.conv2d(net, 32, [3, 3], scope='Conv2d_2a_3x3')  # 147*147*32
            net = slim.conv2d(net, 64, [3, 3], padding='SAME', scope='Conv2d_2b_3x3')  # 147*147*64
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3')  # 73*73*64
            net = slim.conv2d(net, 80, [1, 1], scope='Conv2d_3b_1x1')  # 73*73*80
            # The kernel must be 3x3 (not 1x1) to match the scope name and the 71*71 output
            net = slim.conv2d(net, 192, [3, 3], scope='Conv2d_4a_3x3')  # 71*71*192
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_5a_3x3')  # 35*35*192

        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='SAME'):
            # ---- Inception module group 1 ----
            # Inception_Module_1
            with tf.variable_scope('Mixed_5b'):  # 35*35*256
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_2
            with tf.variable_scope('Mixed_5c'):  # 35*35*288
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_3
            with tf.variable_scope('Mixed_5d'):  # 35*35*288
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)

            # ---- Inception module group 2 ----
            # Inception_Module_1 (grid reduction: 35*35 -> 17*17)
            with tf.variable_scope('Mixed_6a'):  # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 384, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='Max_Pool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], axis=3)
            # Inception_Module_2
            with tf.variable_scope('Mixed_6b'):  # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 128, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 128, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_3
            with tf.variable_scope('Mixed_6c'):  # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_4
            with tf.variable_scope('Mixed_6d'):  # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_5
            with tf.variable_scope('Mixed_6e'):  # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Saved as the input of the auxiliary classifier
            end_points['Mixed_6e'] = net

            # ---- Inception module group 3 ----
            # Inception_Module_1 (grid reduction: 17*17 -> 8*8)
            with tf.variable_scope('Mixed_7a'):  # 8*8*1280
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0, 320, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1, 192, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], axis=3)
            # Inception_Module_2
            with tf.variable_scope('Mixed_7b'):  # 8*8*2048
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0b_3x1')], axis=3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], axis=3)
                with tf.variable_scope('Branch_3'):
                    # Average pooling, as the scope name says (the original called max_pool2d)
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
            # Inception_Module_3
            with tf.variable_scope('Mixed_7c'):  # 8*8*2048
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0b_3x1')], axis=3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], axis=3)
                with tf.variable_scope('Branch_3'):
                    # Average pooling, as the scope name says (the original called max_pool2d)
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], axis=3)
    return net, end_points
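Two pieces of arithmetic sit behind the module definitions above: the channel count after each `tf.concat`, and the weight savings from splitting a 7x7 convolution into a 1x7 followed by a 7x1. The sketch below checks both in plain Python. It is an illustration only: `branch_channels` and `conv_weights` are names introduced here, the 7x7 layer is a hypothetical comparison that does not appear in the network, and batch-norm parameters are ignored.

```python
# Output channels of each branch, read off the module definitions above.
branch_channels = {
    'Mixed_5b': (64, 64, 96, 32),
    'Mixed_5c': (64, 64, 96, 64),
    'Mixed_6a': (384, 96, 288),          # the max-pool branch keeps its 288 input channels
    'Mixed_6b': (192, 192, 192, 192),
    'Mixed_7a': (320, 192, 768),         # the max-pool branch keeps its 768 input channels
    'Mixed_7b': (320, 384 * 2, 384 * 2, 192),
}

for name, channels in branch_channels.items():
    # Concatenating along axis 3 simply adds the channel counts.
    print('%s: %d channels' % (name, sum(channels)))


def conv_weights(kernel_h, kernel_w, c_in, c_out):
    """Number of weights in a convolution kernel (no bias, no batch norm)."""
    return kernel_h * kernel_w * c_in * c_out


# Hypothetical full 7x7 layer versus the 1x7 + 7x1 pair used in Mixed_6e.
full_7x7 = conv_weights(7, 7, 192, 192)
factorized = conv_weights(1, 7, 192, 192) + conv_weights(7, 1, 192, 192)
print('7x7: %d weights, 1x7 + 7x1: %d weights' % (full_7x7, factorized))
```

The factorized pair covers the same 7x7 receptive field with fewer weights, which is one reason the 17x17 modules are built from 1x7 and 7x1 layers.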

def inception_v3(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='Inception_v3'):
    with tf.variable_scope(scope, 'Inception_v3', [inputs, num_classes], reuse=reuse) as scope:
        # batch_norm and dropout behave differently in training and inference
        with slim.arg_scope([slim.batch_norm, slim.dropout],
                            is_training=is_training):
            net, end_points = inception_v3_base(inputs, scope=scope)
            with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                                stride=1, padding='SAME'):
                # Auxiliary classifier, fed from Mixed_6e: 17*17*768
                aux_logits = end_points['Mixed_6e']
                with tf.variable_scope('AuxLogits'):
                    aux_logits = slim.avg_pool2d(aux_logits, [5, 5], stride=3, padding='VALID',
                                                 scope='AvgPool_1a_5x5')  # 5*5*768
                    aux_logits = slim.conv2d(aux_logits, 128, [1, 1],
                                             scope='Conv2d_1b_1x1')  # 5*5*128
                    aux_logits = slim.conv2d(aux_logits, 768, [5, 5],
                                             weights_initializer=trunc_normal(0.01),
                                             padding='VALID',
                                             scope='Conv2d_2a_5x5')  # 1*1*768
                    aux_logits = slim.conv2d(aux_logits, num_classes, [1, 1], activation_fn=None,
                                             normalizer_fn=None,
                                             weights_initializer=trunc_normal(0.001),
                                             scope='Conv2d_2b_1x1')  # 1*1*num_classes
                    if spatial_squeeze:
                        aux_logits = tf.squeeze(aux_logits, [1, 2],
                                                name='SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits
            # Main classifier
            with tf.variable_scope('Logits'):
                net = slim.avg_pool2d(net, [8, 8], padding='VALID',
                                      scope='AvgPool_1a_8x8')  # 1*1*2048
                net = slim.dropout(net, keep_prob=dropout_keep_prob, scope='Dropout_1b')
                end_points['PreLogits'] = net
                logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                                     normalizer_fn=None, scope='Conv2d_1c_1x1')
                if spatial_squeeze:
                    logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')
            end_points['Logits'] = logits
            end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
    return logits, end_points
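The final 1x1 convolution works on a 1x1 feature map, so it behaves like a fully connected (linear) layer, and the squeeze afterwards only drops the two singleton spatial axes. The NumPy sketch below checks that equivalence on random data. It does not use TensorFlow, and the small `num_classes` and the random weights are assumptions chosen to keep the demo light.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, channels, num_classes = 4, 2048, 10  # 10 classes only to keep the demo small

features = rng.standard_normal((batch, 1, 1, channels))      # pooled map, NHWC layout
kernel = rng.standard_normal((1, 1, channels, num_classes))  # 1x1 kernel, HWIO layout
bias = rng.standard_normal(num_classes)

# 1x1 convolution: each spatial position is multiplied by the same weight matrix.
conv = np.tensordot(features, kernel[0, 0], axes=([3], [0])) + bias  # (batch, 1, 1, classes)

# Equivalent of tf.squeeze(logits, [1, 2]): remove the 1x1 spatial axes.
logits = np.squeeze(conv, axis=(1, 2))                                # (batch, classes)

# The same result from an ordinary dense layer on the flattened features.
dense = features.reshape(batch, channels) @ kernel[0, 0] + bias

print(conv.shape, logits.shape)
print(np.allclose(logits, dense))
```

Keeping the classifier as a convolution means the same weights can still be applied if the pooled map is larger than 1x1, which a dense layer would not allow.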

def time_tensorflow_run(session, target, info_string):
    '''
    Measure how long the network takes to run.
    :param session: session object
    :param target: node to run
    :param info_string: label printed with the results
    :return: None
    '''
    # Note: num_batches is read from module scope (it is set in the __main__ block)
    num_steps_burn_in = 10  # warm-up steps, excluded from the statistics
    total_duration = 0.0  # sum of the step durations
    total_duration_squared = 0.0  # sum of the squared step durations
    for i in range(num_steps_burn_in + num_batches):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time  # duration of this step
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration ** 2
    mn = total_duration / num_batches  # mean duration per batch
    vr = total_duration_squared / num_batches - mn ** 2  # variance
    sd = math.sqrt(max(vr, 0.0))  # clamp tiny negative values caused by rounding
    print('%s:%s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mn, sd))
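The timing function gets the standard deviation from two running sums, using the identity Var(x) = E[x^2] - (E[x])^2, so it never has to store the individual durations. The sketch below isolates that calculation and compares it with the standard library. `mean_and_std` is a name introduced here, and the sample durations are made up.

```python
import math
import statistics


def mean_and_std(durations):
    """Mean and population standard deviation from running sums."""
    total = 0.0
    total_squared = 0.0
    for d in durations:
        total += d
        total_squared += d ** 2
    n = len(durations)
    mean = total / n
    # Rounding can push the difference slightly below zero, so clamp it.
    variance = max(total_squared / n - mean ** 2, 0.0)
    return mean, math.sqrt(variance)


sample = [0.52, 0.48, 0.50, 0.55, 0.45]  # made-up durations in seconds
mean, std = mean_and_std(sample)
print('%.3f +/- %.3f sec / batch' % (mean, std))
# Dividing by n (not n - 1) gives the population value, which matches pstdev.
print(statistics.pstdev(sample))
```

Because the divisor is the number of batches, the reported spread is the population standard deviation rather than the sample one.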

if __name__ == '__main__':
    batch_size = 32
    height, width = 299, 299
    inputs = tf.random_uniform((batch_size, height, width, 3))
    with slim.arg_scope(inception_v3_arg_scope()):
        logits, end_points = inception_v3(inputs, is_training=False)
    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)
    num_batches = 100
    time_tensorflow_run(sess, logits, 'Forward')

The run takes too long, so the output is not pasted here.