学hadoop有些时日了,一直都是把写好的Job push到cluster中的一个Node上,然后再来

hadoop jar XXX.jar YYY arg1 arg2

一直都用这个命令来提交Job。如果我们要开发hadoop的相关APP怎么办?那应该要远程向JobTracker提交Job才对呀。既然本地的Java代码是可以读写远程HDFS的,那应该也能远程提交代码。有什么方法呢?在经过一番google之后,似乎找到了一些方法:

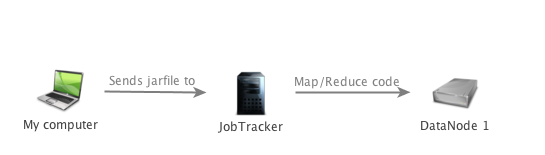

整个过程如上图所示。

准备工作:确保你的hadoop不是localhost,也就是说你的core-site.xml 等等那些文件填写的是你的真实IP,你可以从另外一台机器上访问到你的HDFS管理网页。

然后,在你的代码中,加入

Configuration conf = new Configuration();

conf.set("fs.default.name", "192.168.102.131:9000");

config.set("mapred.job.tracker", "192.168.102.131:9001");

你可以运行一下你的Job代码试试看,会有异常:

java.lang.ClassNotFoundException: com.pcbje.hadoopjobs.MyFirstJob$MyFirstMapper

怎么办?

出现这个异常的原因,应该是你的Job提交的时候没有把所依赖的类和一些jar包等提交上去,现在有解决办法:

java -jar myfirstjob-jar-with-dependencies.jar /input/path /output/path

可能还有好的解决办法,不知道能不能在程序中显式地写出所要提交的文件。

—————————————————————————————————————————————————————————————————————————

具体可以参考一下原文,这篇英文比我写的要详细很多,老外写东西就是详细。

Submitting a Hadoop MapReduce job to a remote JobTracker

While messing around with MapReduce code, I’ve found it to be a bit tedious having to generate the jarfile, copy it to the machine running the JobTracker, and then run the job every time the job has been altered. I should be able to run my jobs directly from my development environment, as illustrated in the figure below. This post explains how I’ve “solved” this problem. This may also help when integrating Hadoop with other applications. I do by no means claim that this is the proper way to do it, but it does the trick for me.

I assume that you have a (single-node) Hadoop 1.0.3 cluster properly installed on a dedicated or virtual machine. In this example, the JobTracker and HDFS resides on IP address 192.168.102.131.

Let’s start out with a simple job that does nothing except to start up and terminate:

package com.pcbje.hadoopjobs;

import java.io.IOException;

import java.util.Date;

import java.util.Iterator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapred.FileInputFormat;

import org.apache.hadoop.mapred.FileOutputFormat;

import org.apache.hadoop.mapred.JobClient;

import org.apache.hadoop.mapred.JobConf;

import org.apache.hadoop.mapred.MapReduceBase;

import org.apache.hadoop.mapred.Mapper;

import org.apache.hadoop.mapred.OutputCollector;

import org.apache.hadoop.mapred.Reporter;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapred.Reducer;

public class MyFirstJob {

public static void main(String[] args) throws Exception {

Configuration config = new Configuration();

JobConf job = new JobConf(config);

job.setJarByClass(MyFirstJob.class);

job.setJobName("My first job");

FileInputFormat.setInputPaths(job, new Path(args[0));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.setMapperClass(MyFirstJob.MyFirstMapper.class);

job.setReducerClass(MyFirstJob.MyFirstReducer.class);

JobClient.runJob(job);

}

private static class MyFirstMapper extends MapReduceBase implements Mapper<longwritable, text,="" intwritable=""> {

public void map(LongWritable key, Text value, OutputCollector<text, intwritable=""> output, Reporter reporter) throws IOException {

}

}

private static class MyFirstReducer extends MapReduceBase implements Reducer<text, intwritable,="" text,="" intwritable=""> {

public void reduce(Text key, Iterator values, OutputCollector<text, intwritable=""> output, Reporter reporter) throws IOException {

}

}

}

Now, most of the examples you find online typically shows you a local mode setup where all the components of Hadoop (HDFS, JobTracker, etc) run on the same machine. A typical mapred-site.xml configuration might look like:

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>

As far as I can tell, such a configuration requires that jobs are submitted from the same node as the JobTracker. This is what I want to avoid. The first thing to do is to change the fs.default.name attribute to the IP address of my NameNode.

Configuration conf = new Configuration();

conf.set("fs.default.name", "192.168.102.131:9000");

And in core-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>192.168.102.131:9000</value>

</property>

</configuration>

This tells the job to connect to the HDFS residing on a different machine. Running the job with this configuration will read from and write to the remote HDFS correctly, but the JobTracker at 192.168.102.131:9001 will not notice it. This means that the admin panel at 192.168.102.131:50030 wont list the job either. So the next thing to do is to tell the job configuration to submit the job to the appropriate JobTracker like this:

config.set("mapred.job.tracker", "192.168.102.131:9001");

You also need to change mapred-site.xml to allow external connections, this can be done by replacing “localhost” with the JobTracker’s IP address:

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>192.168.102.131:9001</value>

</property>

</configuration>

Restart hadoop.

Upon trying to run your job, you may get an exception like this:

SEVERE: PriviledgedActionException as:[user] cause:org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=[user], access=WRITE, inode="mapred":root:supergroup:rwxr-xr-x

If you do, this may be solved by adding the following mapred-site.xml:

<configuration>

<property>

<name>mapreduce.jobtracker.staging.root.dir</name>

<value>/user</value>

</property>

</configuration>

And then execute the following commands:

stop-mapred.sh start-mapred.sh

When you now submit your job, it should be picked up by the admin page over at :50030. However, it will most probably fail and the log will be telling you something like:

java.lang.ClassNotFoundException: com.pcbje.hadoopjobs.MyFirstJob$MyFirstMapper

In order to fix this, you have to ensure that all dependencies of the submitted job are available to the JobTracker. This can be achieved by exporting the project in as a runnable jar, and then execute something like:

java -jar myfirstjob-jar-with-dependencies.jar /input/path /output/path

If your user has the appropriate permissions to the input and out directory on HDFS, the job should now run successfully. This can be verified in the console and on the administration panel.

Manually exporting runnable jars requires a lot of clicks in IDEs such as Eclipse. If you are using Maven, you can tell it to build the jar with its dependencies (See this answer for details). This would make the process a whole lot easier.

Finally, to make it even easier, place a tiny bash-script in the same folder as pom.xml for building the maven project and executing the jar:

#!/bin/sh mvn assembly:assembly java -jar $1 $2 $3

After making the script executable, you can build and submit the job with the following command:

./build-and-run-job target/myfirstjob-jar-with-dependencies.jar /input/path /output/path