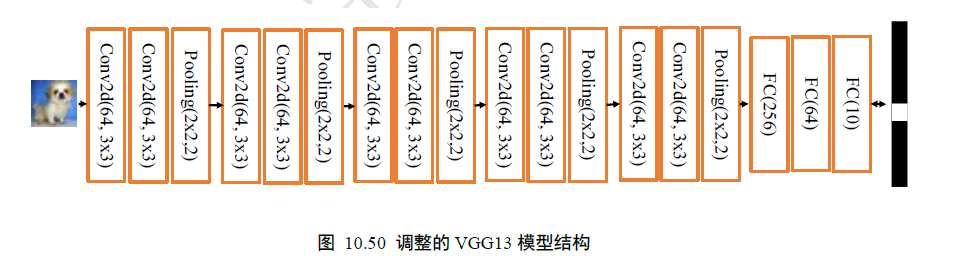

The network structure is as follows:

The code is as follows:

    # encoding: utf-8
    import tensorflow as tf
    from tensorflow.keras import layers, Sequential, losses, optimizers, datasets
    import matplotlib.pyplot as plt

    # Load data; labels arrive as (N, 1), so squeeze them to (N,)
    (x, y), (x_test, y_test) = datasets.cifar10.load_data()
    y = tf.squeeze(y, axis=1)
    y_test = tf.squeeze(y_test, axis=1)

    # print(x.shape, y.shape, x_test.shape, y_test.shape)
    # (50000, 32, 32, 3) (50000,) (10000, 32, 32, 3) (10000,)


    def pre_process(X, Y):
        x_reshape = tf.cast(X, dtype=tf.float32) / 255.  # cast to float32, then scale to [0, 1]
        y_reshape = tf.cast(Y, dtype=tf.int32)  # cast labels to int32
        y_onehot = tf.one_hot(y_reshape, depth=10)  # one-hot encoding needed for the loss
        return x_reshape, y_onehot


    # Create the datasets (shuffling is only useful for training)
    train_db = tf.data.Dataset.from_tensor_slices((x, y))
    train_db = train_db.shuffle(1000).map(pre_process).batch(128)

    test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
    test_db = test_db.map(pre_process).batch(128)

    # Inspect one batch
    # sample = next(iter(train_db))
    # print('sample: ', sample[0].shape, sample[1].shape,
    #       tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))
    # sample:  (128, 32, 32, 3) (128, 10)
    # tf.Tensor(0.0, shape=(), dtype=float32)
    # tf.Tensor(1.0, shape=(), dtype=float32)


    # Convolutional sub-network
    CONV_Net = Sequential([
        # 1
        layers.Conv2D(64, kernel_size=3, padding='SAME', activation='relu'),
        layers.Conv2D(64, kernel_size=3, padding='SAME', activation='relu'),
        layers.MaxPooling2D(pool_size=[2, 2], strides=2, padding='SAME'),
        # 2
        layers.Conv2D(128, kernel_size=3, padding='SAME', activation='relu'),
        layers.Conv2D(128, kernel_size=3, padding='SAME', activation='relu'),
        layers.MaxPooling2D(pool_size=[2, 2], strides=2, padding='SAME'),
        # 3
        layers.Conv2D(256, kernel_size=3, padding='SAME', activation='relu'),
        layers.Conv2D(256, kernel_size=3, padding='SAME', activation='relu'),
        layers.MaxPooling2D(pool_size=[2, 2], strides=2, padding='SAME'),
        # 4
        layers.Conv2D(512, kernel_size=3, padding='SAME', activation='relu'),
        layers.Conv2D(512, kernel_size=3, padding='SAME', activation='relu'),
        layers.MaxPooling2D(pool_size=[2, 2], strides=2, padding='SAME'),
        # 5
        layers.Conv2D(512, kernel_size=3, padding='SAME', activation='relu'),
        layers.Conv2D(512, kernel_size=3, padding='SAME', activation='relu'),
        layers.MaxPooling2D(pool_size=[2, 2], strides=2, padding='SAME'),
    ])

    # Fully connected sub-network; the last layer outputs raw logits
    FC_Net = Sequential([
        layers.Dense(256, activation='relu'),
        layers.Dense(128, activation='relu'),
        layers.Dense(10, activation=None),
    ])

    CONV_Net.build(input_shape=[None, 32, 32, 3])
    CONV_Net.summary()

    FC_Net.build(input_shape=[None, 512])
    FC_Net.summary()


    def main():
        optimizer = optimizers.RMSprop(0.001)  # create the optimizer with its learning rate
        criteon = losses.CategoricalCrossentropy(from_logits=True)
        Epoch = 50
        # Per-epoch accuracy on the training and test sets
        train_acc = []
        test_acc = []

        for epoch in range(Epoch):
            cor, tot = 0, 0
            for step, (x, y) in enumerate(train_db):  # (128, 32, 32, 3), (128, 10)
                with tf.GradientTape() as tape:  # record the forward pass for gradients
                    out_conv = CONV_Net(x)  # (128, 1, 1, 512)
                    out = tf.reshape(out_conv, [-1, 512])  # (128, 512)
                    out_fc = FC_Net(out)  # (128, 10), float32 logits
                    loss = criteon(y, out_fc)

                # Backward pass and parameter update
                variables = CONV_Net.trainable_variables + FC_Net.trainable_variables
                grads = tape.gradient(loss, variables)
                optimizer.apply_gradients(zip(grads, variables))

                # Training accuracy: argmax of the logits equals argmax of their
                # softmax, and argmax of a one-hot label recovers the class index
                train_out = tf.argmax(out_fc, axis=1)
                train_y = tf.argmax(y, axis=1)
                train_cor = tf.reduce_sum(tf.cast(tf.equal(train_y, train_out), tf.float32))
                cor += float(train_cor)
                tot += x.shape[0]

            print('After %d Epoch' % epoch)
            print('training acc is ', cor / tot)
            train_acc.append(cor / tot)

            correct, total = 0, 0
            for x, y in test_db:
                # Forward pass on the test batch
                pred_conv = CONV_Net(x)
                pred_conv = tf.reshape(pred_conv, [-1, 512])
                pred = FC_Net(pred_conv)

                # Test accuracy
                test_out = tf.argmax(pred, axis=1)
                test_y = tf.argmax(y, axis=1)
                test_cor = tf.reduce_sum(tf.cast(tf.equal(test_y, test_out), tf.float32))
                correct += float(test_cor)
                total += x.shape[0]

            print('testing acc is : ', correct / total)
            test_acc.append(correct / total)

        plt.figure()
        plt.plot(train_acc, 'b', label='train')
        plt.plot(test_acc, 'r', label='test')
        plt.xlabel('Epoch')
        plt.ylabel('ACC')
        plt.legend()
        # plt.savefig('exam8.3_train_test_CNN1.png')
        plt.show()


    if __name__ == "__main__":
        main()
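The listing reshapes the convolutional output to `[-1, 512]` before the fully connected layers. A rough way to check that number is to trace the feature-map size by hand: a stride-1 convolution with `padding='SAME'` keeps height and width, and a stride-2 pooling layer with `padding='SAME'` gives `ceil(size / 2)`. The sketch below is plain Python, not part of the original program, and the helper names `same_pool_output` and `trace_feature_maps` are made up for illustration.

```python
import math


def same_pool_output(size, stride=2):
    """Spatial size after pooling with padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_feature_maps(input_size=32, channels=(64, 128, 256, 512, 512)):
    """Return the (height, width, channels) shape after each of the five blocks."""
    shapes = []
    size = input_size
    for ch in channels:
        # The two SAME-padded, stride-1 convolutions leave the size unchanged;
        # only the pooling layer at the end of the block halves it.
        size = same_pool_output(size)
        shapes.append((size, size, ch))
    return shapes


shapes = trace_feature_maps()
for block, shape in enumerate(shapes, start=1):
    print("block %d: %s" % (block, shape))

# Number of features per image after flattening the last feature map
h, w, c = shapes[-1]
flat_features = h * w * c
print("flattened features:", flat_features)
```

The spatial size goes 32, 16, 8, 4, 2, 1, so the last feature map is 1 x 1 x 512 and flattening it gives the 512 inputs that `FC_Net.build` expects.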

Notes:

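(1) Because of my laptop's limited hardware, the program did not finish running; I will run it again when a suitable machine is available.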

(2) My understanding of the CIFAR dataset is still not good enough and needs to be deepened.

(3) The next update will cover a hands-on ResNet18 network with the CIFAR10 dataset.
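As a small aid for note (2), here is a sketch of what the preprocessing does to CIFAR-10 labels and pixels, written in plain Python so it runs without downloading the dataset. The label values in `raw_labels` are made up for illustration, and the helper names are hypothetical; only the class order (index 0 to 9) and the `(N, 1)` label layout come from the dataset itself.

```python
# CIFAR-10 class names in label order (index 0..9)
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def squeeze_labels(labels):
    """Flatten labels shaped (N, 1) into a flat list of N ints."""
    return [row[0] for row in labels]


def one_hot(label, depth=10):
    """One-hot encode a class index as a list of floats."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


def normalize_pixel(value):
    """Scale a uint8 pixel value (0..255) into the range [0, 1]."""
    return value / 255.0


# Made-up labels in the (N, 1) layout the dataset loader returns
raw_labels = [[6], [9], [3]]
flat_labels = squeeze_labels(raw_labels)
names = [CIFAR10_CLASSES[i] for i in flat_labels]

print(flat_labels)
print(names)
print(one_hot(flat_labels[0]))
print(normalize_pixel(255), normalize_pixel(0))
```

Each image is a 32 x 32 RGB array of such pixel values, and each label becomes a length-10 vector with a single 1.0 at the class index.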

2020.5.16 -------- Update ------

(1) I ran the code online with Google Colab, which solved the problem of insufficient hardware.

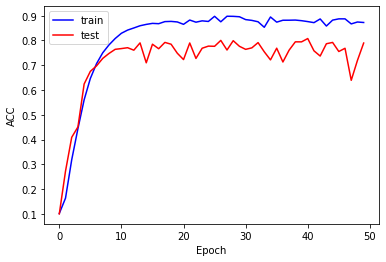

The training and test results are as follows:

The final test accuracy reached 78.95%, close to the 77.5% reported in the book.
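For reference, the accuracy figure is the number of correctly classified images divided by the total number of images, accumulated over all batches. The sketch below shows that bookkeeping in plain Python on made-up logits and one-hot labels (three classes instead of ten, to keep it short); `argmax` and `batch_correct` are hypothetical helpers, not functions from the program. It also relies on the fact that softmax is monotonic, so the argmax of the raw logits already gives the predicted class.

```python
def argmax(values):
    """Index of the largest element in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def batch_correct(logits, one_hot_labels):
    """Count samples whose predicted class matches the one-hot label."""
    return sum(argmax(l) == argmax(y) for l, y in zip(logits, one_hot_labels))


# Two made-up batches of (logits, one-hot labels)
batches = [
    ([[2.0, 0.1, -1.0], [0.3, 0.2, 0.9]], [[1, 0, 0], [0, 1, 0]]),
    ([[0.0, 1.5, 0.2]], [[0, 1, 0]]),
]

correct, total = 0, 0
for logits, labels in batches:
    correct += batch_correct(logits, labels)
    total += len(logits)  # the last batch may be smaller than the others

accuracy = correct / total
print("accuracy: %.4f" % accuracy)
```

Counting `len(logits)` per batch rather than assuming a fixed batch size matters here because neither 50000 nor 10000 is a multiple of 128.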