Environment: Hadoop 2.6.0 + hive-0.14.0

Background: a table was created in Hive with the DDL below. Its columns include a collection type (the map<string,string> column props).

CREATE EXTERNAL TABLE agnes_app_hour(

start_id string,

current_time string,

app_name string,

app_version string,

app_store string,

send_time string,

letv_uid string,

app_run_id string,

start_from string,

props map<string,string>,

ip string,

server_time string)

PARTITIONED BY (

dt string,

hour string,

product string)

ROW FORMAT DELIMITED

COLLECTION ITEMS TERMINATED BY ','

MAP KEYS TERMINATED BY ':'

STORED AS RCFILE ;

Then run the following Hive query:

hive -e "select count(1) from temp_agnes_app_hour;"

When the MapReduce job is submitted to YARN, it fails with the following exception:

Diagnostic Messages for this Task:

Error: java.io.IOException: java.lang.reflect.InvocationTargetException

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerChain.handleRecordReaderCreationException(HiveIOExceptionHandlerChain.java:97)

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerUtil.handleRecordReaderCreationException(HiveIOExceptionHandlerUtil.java:57)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:312)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.<init>(HadoopShimsSecure.java:259)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileInputFormatShim.getRecordReader(HadoopShimsSecure.java:386)

at org.apache.hadoop.hive.ql.io.CombineHiveInputFormat.getRecordReader(CombineHiveInputFormat.java:652)

at org.apache.hadoop.mapred.MapTask$TrackedRecordReader.<init>(MapTask.java:169)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:429)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:163)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1628)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:298)

... 11 more

Caused by: java.lang.RuntimeException: native-lzo library not available

at com.hadoop.compression.lzo.LzoCodec.getDecompressorType(LzoCodec.java:187)

at org.apache.hadoop.hive.ql.io.CodecPool.getDecompressor(CodecPool.java:122)

at org.apache.hadoop.hive.ql.io.RCFile$Reader.init(RCFile.java:1518)

at org.apache.hadoop.hive.ql.io.RCFile$Reader.<init>(RCFile.java:1363)

at org.apache.hadoop.hive.ql.io.RCFile$Reader.<init>(RCFile.java:1343)

at org.apache.hadoop.hive.ql.io.RCFileRecordReader.<init>(RCFileRecordReader.java:100)

at org.apache.hadoop.hive.ql.io.RCFileInputFormat.getRecordReader(RCFileInputFormat.java:57)

at org.apache.hadoop.hive.ql.io.CombineHiveRecordReader.<init>(CombineHiveRecordReader.java:65)

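The root cause, "native-lzo library not available", means the native LZO library is missing or was not installed correctly on the nodes that run the tasks.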
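Before reinstalling anything, it can help to confirm which native library is actually missing on a node. The following is a minimal sketch, not part of the original procedure: `find_native_lib` is a hypothetical helper, and the directories in the usage comments are assumptions that should be adjusted to the local layout. `liblzo2` is the shared library built by LZO, and `libgplcompression` is the JNI library that hadoop-lzo loads.

```shell
#!/usr/bin/env bash
# find_native_lib NAME DIR...
# Prints the first file matching NAME.so* found in the given directories.
# Returns non-zero when no directory contains the library.
find_native_lib() {
  lib="$1"
  shift
  for dir in "$@"; do
    for f in "$dir"/"$lib".so*; do
      # An unmatched glob stays literal, so test that the file really exists.
      if [ -e "$f" ]; then
        echo "$f"
        return 0
      fi
    done
  done
  return 1
}

# Example usage (directories are assumptions):
#   find_native_lib liblzo2 /usr/lib64 /usr/local/hadoop/lzo/lib || echo "liblzo2 not found"
#   find_native_lib libgplcompression /usr/local/hadoop/lib/native || echo "libgplcompression not found"
```

If neither library turns up in any directory that the task JVMs search, the exception above is the expected result.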

===========================================

####Installing LZO

1. Install the build prerequisites (run as root):

yum -y install lzo-devel zlib-devel gcc autoconf automake libtool

2. Install LZO (run the following as the hadoop user)

wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.06.tar.gz

tar -zxvf lzo-2.06.tar.gz

cd lzo-2.06

./configure --enable-shared --prefix=/usr/local/hadoop/lzo/

make && make test && make install

3. Install LZOP

wget http://www.lzop.org/download/lzop-1.03.tar.gz

tar -zxvf lzop-1.03.tar.gz

cd lzop-1.03

./configure --enable-shared --prefix=/usr/local/hadoop/lzop

make && make install

4. Symlink lzop into /usr/bin/

ln -s /usr/local/hadoop/lzop/bin/lzop /usr/bin/lzop

5. Test lzop

lzop /home/hadoop/data/access_20131219.log

This produces a compressed file with an .lzo suffix: /home/hadoop/data/access_20131219.log.lzo

Steps 2, 3, 4 and 5 can be batched into a single script.
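A minimal sketch of such a batch script follows. It only strings together the commands from steps 2 to 5; the function names and the `DRY_RUN` switch are hypothetical additions. By default it prints each command instead of running it, so the sequence can be reviewed first. The symlink into /usr/bin typically needs root privileges.

```shell
#!/usr/bin/env bash
# Batch version of steps 2-5. Prints commands unless DRY_RUN=0 is set.

LZO_PREFIX=/usr/local/hadoop/lzo/
LZOP_PREFIX=/usr/local/hadoop/lzop

# run CMD ARGS...: echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Step 2: download, build and install LZO.
build_lzo() {
  run wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.06.tar.gz &&
  run tar -zxvf lzo-2.06.tar.gz &&
  (
    run cd lzo-2.06 &&
    run ./configure --enable-shared --prefix="$LZO_PREFIX" &&
    run make && run make test && run make install
  )
}

# Step 3: download, build and install LZOP.
build_lzop() {
  run wget http://www.lzop.org/download/lzop-1.03.tar.gz &&
  run tar -zxvf lzop-1.03.tar.gz &&
  (
    run cd lzop-1.03 &&
    run ./configure --enable-shared --prefix="$LZOP_PREFIX" &&
    run make && run make install
  )
}

# Steps 4 and 5: expose lzop on the PATH and compress a sample file.
link_and_test() {
  run ln -s "$LZOP_PREFIX/bin/lzop" /usr/bin/lzop &&
  run lzop /home/hadoop/data/access_20131219.log
}

build_lzo_stack() {
  build_lzo && build_lzop && link_and_test
}
```

Calling `build_lzo_stack` prints the plan; `DRY_RUN=0 build_lzo_stack` executes it and stops at the first failing command.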

####Installing Hadoop-LZO

1. Download the Hadoop-LZO source code.

hadoop-lzo download location:

https://github.com/twitter/hadoop-lzo

git clone https://github.com/twitter/hadoop-lzo

PS: the download sometimes times out; retrying a few times usually gets it through.

2. Build the hadoop-lzo source

cd hadoop-lzo

mvn clean package

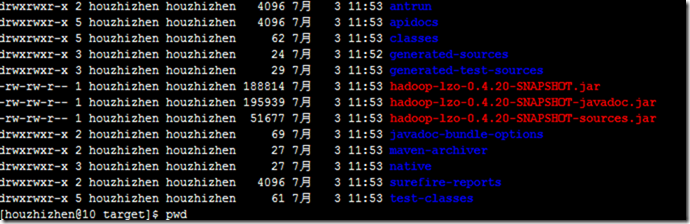

cd ~/twiter-hadoop-lzo/hadoop-lzo/target/

ls

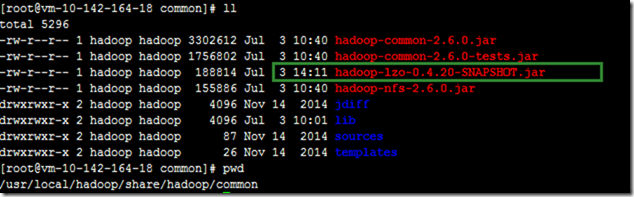

3. Upload hadoop-lzo-0.4.20-SNAPSHOT.jar to the /usr/local/hadoop/share/hadoop/common/ directory of the Hadoop installation on every node

Check that the hadoop-lzo jar is in place:

ls /usr/local/hadoop/share/hadoop/common/

4. Edit the Hadoop configuration files on every Hadoop node:

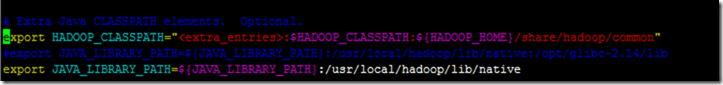

vi hadoop-env.sh :

Add the following settings:

# Extra Java CLASSPATH elements. Optional.

export HADOOP_CLASSPATH="<extra_entries>:$HADOOP_CLASSPATH:${HADOOP_HOME}/share/hadoop/common"

#export JAVA_LIBRARY_PATH=${JAVA_LIBRARY_PATH}:/usr/local/hadoop/lib/native:/opt/glibc-2.14/lib

export JAVA_LIBRARY_PATH=${JAVA_LIBRARY_PATH}:/usr/local/hadoop/lib/native

vi core-site.xml :

Add the following settings:

<property>
  <name>io.compression.codec.lzo.class</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
<property>
  <name>io.compression.codecs</name>
  <value>org.apache.hadoop.io.compress.DefaultCodec,com.hadoop.compression.lzo.LzoCodec,org.apache.hadoop.io.compress.GzipCodec,com.hadoop.compression.lzo.LzopCodec,org.apache.hadoop.io.compress.BZip2Codec</value>
</property>

vi mapred-site.xml

<property>
  <name>mapred.compress.map.output</name>
  <value>true</value>
</property>
<property>
  <name>mapred.map.output.compression.codec</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
<property>
  <name>mapreduce.map.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
<property>
  <name>mapreduce.reduce.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
<property>
  <name>mapred.child.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
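A stray space inside an LD_LIBRARY_PATH value is easy to miss and leaves the task JVMs without the LZO library. The sketch below is one way to sanity-check the file before distributing it. `check_env_paths` is a hypothetical helper; it assumes each value holds a single directory, as in the settings above, and that `<value>` and `</value>` sit on the same line.

```shell
#!/usr/bin/env bash
# check_env_paths FILE
# Extracts every LD_LIBRARY_PATH=... value from FILE and reports values that
# contain whitespace or point at a directory that does not exist locally.
check_env_paths() {
  conf_file="$1"
  status=0
  values="$(sed -n 's|.*<value[[:space:]]*>[[:space:]]*LD_LIBRARY_PATH=\([^<]*\)</value>.*|\1|p' "$conf_file" | sort -u)"
  while IFS= read -r value; do
    [ -n "$value" ] || continue
    case "$value" in
      *[[:space:]]*)
        echo "BAD (contains whitespace): '$value'"
        status=1
        ;;
      *)
        if [ -d "$value" ]; then
          echo "OK: $value"
        else
          echo "MISSING: $value"
          status=1
        fi
        ;;
    esac
  done <<EOF
$values
EOF
  return $status
}

# Example usage (path is an assumption):
#   check_env_paths /usr/local/hadoop/etc/hadoop/mapred-site.xml
```

The function returns non-zero as soon as any value looks wrong, so it can gate the distribution step.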

5. Distribute the jar and the configuration files to all Hadoop server nodes

# Distribute hadoop-lzo.jar

./upgrade.sh distribute temp/allnodes_hosts /letv/setupHadoop/hadoop-2.6.0/share/hadoop/common/hadoop-lzo-0.4.20-SNAPSHOT.jar /usr/local/hadoop/share/hadoop/common/

# Distribute the modified Hadoop configuration files

./upgrade.sh distribute cluster_nodes hadoop-2.6.0/etc/hadoop/mapred-site.xml /usr/local/hadoop/etc/hadoop/

./upgrade.sh distribute cluster_nodes hadoop-2.6.0/etc/hadoop/core-site.xml /usr/local/hadoop/etc/hadoop/

./upgrade.sh distribute cluster_nodes hadoop-2.6.0/etc/hadoop/hadoop-env.sh /usr/local/hadoop/etc/hadoop/
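upgrade.sh is a site-specific script and is not shown here. For readers without it, a rough stand-in could look like the sketch below. This is not the author's script: `distribute` is a hypothetical function, it assumes the hosts file lists one hostname per line, and it assumes passwordless scp to every node. Like any dry run, it prints the commands by default.

```shell
#!/usr/bin/env bash
# distribute HOSTS_FILE SRC DEST_DIR
# Copies SRC to DEST_DIR on every host listed in HOSTS_FILE.
# Prints the scp commands unless DRY_RUN=0 is set.
distribute() {
  hosts_file="$1"
  src="$2"
  dest_dir="$3"
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip blank lines and comment lines in the hosts file.
    case "$host" in
      ''|'#'*) continue ;;
    esac
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp $src $host:$dest_dir"
    else
      # Detach stdin so scp does not consume the rest of the hosts file.
      scp "$src" "$host:$dest_dir" < /dev/null || echo "FAILED: $host" >&2
    fi
  done < "$hosts_file"
}

# Example usage, mirroring the commands above:
#   distribute cluster_nodes hadoop-2.6.0/etc/hadoop/core-site.xml /usr/local/hadoop/etc/hadoop/
```

Failures are reported per host rather than aborting the loop, so one unreachable node does not block the rest.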