These are my study notes on the CNN code in DeepLearnToolbox, a deep learning toolbox for MATLAB.

The toolbox can be downloaded from GitHub: https://github.com/rasmusbergpalm/DeepLearnToolbox

Recommended companion notes analyzing the CNN code: https://blog.csdn.net/u013007900/article/details/51428186

Notes explaining error backpropagation: https://blog.csdn.net/viatorsun/article/details/82696475

Notes on convolution in MATLAB: https://blog.csdn.net/baoxiao7872/article/details/80435214

Recommended reading: *Deep Learning*

The CNN example trains and tests the network on the data bundled with the toolbox, which consists of images of handwritten digits.

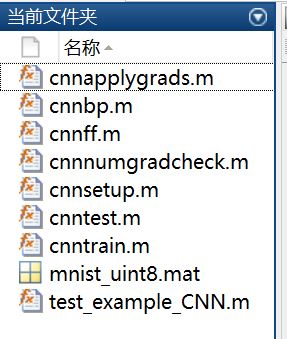

The figure shows the names of all the code files.

Among these files, test_example_CNN is the example script and mnist_uint8 is the data. The code of test_example_CNN, with comments, is as follows:

```matlab
function test_example_CNN
load mnist_uint8;   % handwritten digit samples; each sample is a 784-element (28*28) vector of pixels

train_x = double(reshape(train_x', 28, 28, 60000)) / 255;  % training data: reshape into 60000 maps of 28x28 and scale to [0, 1]
test_x  = double(reshape(test_x', 28, 28, 10000)) / 255;   % test data: 10000 maps
train_y = double(train_y');   % labels as one-hot columns, 10 x N
test_y  = double(test_y');

%% ex1 Train a 6c-2s-12c-2s Convolutional neural network
% will run 1 epoch in about 200 second and get around 11% error.
% With 100 epochs you'll get around 1.2% error

rand('state', 0)   % fixed seed: the same random numbers are generated on every run

cnn.layers = {
    struct('type', 'i')                                      % input layer
    struct('type', 'c', 'outputmaps', 6, 'kernelsize', 5)    % convolution layer: 6 output feature maps, 5x5 kernels
    struct('type', 's', 'scale', 2)                          % subsampling layer (mean pooling), scale 2
    struct('type', 'c', 'outputmaps', 12, 'kernelsize', 5)   % convolution layer: 12 output feature maps, 5x5 kernels
    struct('type', 's', 'scale', 2)                          % subsampling layer, scale 2
};
% The network has 5 layers: input - convolution - subsampling - convolution - subsampling

opts.alpha = 1;        % learning rate
opts.batchsize = 50;   % number of samples per mini-batch
opts.numepochs = 1;    % number of epochs (full passes over the training set)

cnn = cnnsetup(cnn, train_x, train_y);         % initialize the CNN
cnn = cnntrain(cnn, train_x, train_y, opts);   % train the CNN
[er, bad] = cnntest(cnn, test_x, test_y);      % test the CNN

% plot mean squared error (cnn.rL is the smoothed training loss)
figure; plot(cnn.rL);
assert(er < 0.12, 'Too big error');
```
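To see what the preprocessing lines do, here is a small sketch on synthetic data. It assumes, as the `reshape(train_x', ...)` call implies, that `train_x` stores one 784-pixel sample per row and `train_y` one one-hot label row per sample. `X`, `Y`, `N` and `k` are hypothetical stand-ins, not the real MNIST arrays.

```matlab
% Hypothetical stand-in for train_x: N samples stored as rows of 784 uint8 pixels
N = 3;
X = uint8(mod(reshape(0:N*784-1, 784, N)', 256));   % N x 784, values 0..255
I = eye(10);
Y = uint8(I([3 1 8], :));                           % N x 10 one-hot label rows

% Same preprocessing as test_example_CNN
x = double(reshape(X', 28, 28, N)) / 255;   % 28 x 28 x N, scaled to [0, 1]
y = double(Y');                             % 10 x N, one column per sample

% Sample k of x is row k of X, refilled column by column into a 28 x 28 map
k = 2;
same = isequal(x(:, :, k), reshape(double(X(k, :)), 28, 28) / 255);
[~, label] = max(y(:, k));                  % position of the 1 in column k
```

Note that `reshape` fills the 28×28 map column by column (MATLAB's column-major order).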

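Following the order in which the code runs, cnnsetup is called next. It initializes the CNN's parameters, mainly the initial values of the convolution kernels and the biases. The code with comments: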

```matlab
%% Initialize CNN parameters: convolution kernels, biases, and the final single-layer perceptron
function net = cnnsetup(net, x, y)
    assert(~isOctave() || compare_versions(OCTAVE_VERSION, '3.8.0', '>='), ['Octave 3.8.0 or greater is required for CNNs as there is a bug in convolution in previous versions. See http://savannah.gnu.org/bugs/?39314. Your version is ' myOctaveVersion]);
    inputmaps = 1;                          % number of input maps to the current layer
    mapsize = size(squeeze(x(:, :, 1)));    % squeeze one sample to 2-D and take its size: [28, 28]

    for l = 1 : numel(net.layers)           % initialize the parameters layer by layer
        if strcmp(net.layers{l}.type, 's')  % subsampling layer
            mapsize = mapsize / net.layers{l}.scale;
            % l = 3: mapsize = [24, 24] / 2 = [12, 12]
            % l = 5: mapsize = [8, 8] / 2 = [4, 4]
            assert(all(floor(mapsize)==mapsize), ['Layer ' num2str(l) ' size must be integer. Actual: ' num2str(mapsize)]);
            for j = 1 : inputmaps
                net.layers{l}.b{j} = 0;     % one bias per input map of the subsampling layer, initialized to 0
            end
        end
        if strcmp(net.layers{l}.type, 'c')  % convolution layer
            mapsize = mapsize - net.layers{l}.kernelsize + 1;
            % output size of a 'valid' convolution with stride 1:
            % (input size - kernel size) / stride + 1
            % l = 2: mapsize = [28, 28] - 5 + 1 = [24, 24]
            % l = 4: mapsize = [12, 12] - 5 + 1 = [8, 8]
            fan_out = net.layers{l}.outputmaps * net.layers{l}.kernelsize ^ 2;
            % fan-out: number of kernel weights each input unit connects to
            % l = 2: fan_out = 6 * 5^2 = 150
            % l = 4: fan_out = 12 * 5^2 = 300
            for j = 1 : net.layers{l}.outputmaps  %  output map
                fan_in = inputmaps * net.layers{l}.kernelsize ^ 2;
                % fan-in: number of kernel weights feeding each unit of an output map
                % l = 2: fan_in = 1 * 5^2 = 25
                % l = 4: fan_in = 6 * 5^2 = 150
                for i = 1 : inputmaps  %  input map
                    net.layers{l}.k{i}{j} = (rand(net.layers{l}.kernelsize) - 0.5) * 2 * sqrt(6 / (fan_in + fan_out));
                    % each 5x5 kernel is drawn uniformly from
                    % [-sqrt(6/(fan_in + fan_out)), +sqrt(6/(fan_in + fan_out))]
                    % l = 2: 1 * 6 = 6 kernels
                    % l = 4: 6 * 12 = 72 kernels; each of the 12 output maps is
                    %        connected to all 6 input maps through 6 kernels
                end
                net.layers{l}.b{j} = 0;  % one bias per output map (not one per kernel), initialized to 0
            end
            inputmaps = net.layers{l}.outputmaps;  % number of input maps for the next layer
            % l = 2: inputmaps = 6
            % l = 4: inputmaps = 12
        end
    end
    % 'onum' is the number of labels, that's why it is calculated using size(y, 1).
    % If you have 20 labels, the output of the network will be 20 neurons.
    % 'fvnum' is the number of output neurons at the last layer, the layer just before the output layer.
    % 'ffb' is the biases of the output neurons.
    % 'ffW' is the weights between the last layer and the output neurons. Note that the last layer
    % is fully connected to the output layer, that's why the size of the weights is (onum * fvnum)
    fvnum = prod(mapsize) * inputmaps;  % neurons in the last layer (before the output layer): 4 * 4 * 12 = 192
    onum = size(y, 1);                  % number of labels: onum = 10
    net.ffb = zeros(onum, 1);           % biases of the output neurons
    net.ffW = (rand(onum, fvnum) - 0.5) * 2 * sqrt(6 / (onum + fvnum));
    % weights between the last layer and the output neurons: a 10 x 192 matrix
    % drawn uniformly from [-sqrt(6/(10 + 192)), +sqrt(6/(10 + 192))]
end
```
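The size bookkeeping in cnnsetup can be reproduced without any data. The following sketch repeats the same size and fan-in/fan-out arithmetic for the 6c-2s-12c-2s network, using plain arrays (`types`, `kernel`, `scale`, `outmaps`, all hypothetical names) in place of the `cnn.layers` structs. The bound `sqrt(6/(fan_in + fan_out))` is the Glorot (Xavier) uniform initialization.

```matlab
% Layer description mirroring cnn.layers in test_example_CNN
types   = {'i', 'c', 's', 'c', 's'};
kernel  = [0 5 0 5 0];     % kernelsize of the convolution layers
scale   = [0 0 2 0 2];     % scale of the subsampling layers
outmaps = [0 6 0 12 0];    % outputmaps of the convolution layers

mapsize = [28 28];
inputmaps = 1;
sizes = zeros(numel(types), 2);
sizes(1, :) = mapsize;
nkernels = zeros(1, numel(types));
bound = zeros(1, numel(types));
for l = 2:numel(types)
    if strcmp(types{l}, 's')
        mapsize = mapsize / scale(l);              % pooling shrinks each side by the scale
    elseif strcmp(types{l}, 'c')
        mapsize = mapsize - kernel(l) + 1;         % 'valid' convolution, stride 1
        fan_in  = inputmaps * kernel(l)^2;
        fan_out = outmaps(l) * kernel(l)^2;
        bound(l) = sqrt(6 / (fan_in + fan_out));   % kernels drawn from U(-bound, bound)
        nkernels(l) = inputmaps * outmaps(l);      % one kernel per (input map, output map) pair
        inputmaps = outmaps(l);
    end
    sizes(l, :) = mapsize;
end
fvnum = prod(mapsize) * inputmaps;   % length of the feature vector multiplied by ffW
```

The result confirms the sizes 28 → 24 → 12 → 8 → 4 and a feature vector of length 4 × 4 × 12 = 192.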

Next, cnntrain trains the network on mini-batches of the data. The code with comments:

```matlab
%% Train the CNN
function net = cnntrain(net, x, y, opts)
    m = size(x, 3);                    % total number of training samples: m = 60000
    numbatches = m / opts.batchsize;   % number of mini-batches: 60000 / 50 = 1200
    if rem(numbatches, 1) ~= 0
        error('numbatches not integer');
    end
    net.rL = [];
    for i = 1 : opts.numepochs         % loop over epochs
        disp(['epoch ' num2str(i) '/' num2str(opts.numepochs)]);
        tic;                           % start timer
        kk = randperm(m);              % random permutation of 1..m (shuffles the samples)
        for l = 1 : numbatches
            % take the next batch of shuffled samples
            batch_x = x(:, :, kk((l - 1) * opts.batchsize + 1 : l * opts.batchsize));
            batch_y = y(:, kk((l - 1) * opts.batchsize + 1 : l * opts.batchsize));

            net = cnnff(net, batch_x);        % forward pass
            net = cnnbp(net, batch_y);        % backpropagate the error and compute gradients
            net = cnnapplygrads(net, opts);   % update kernels, biases and output weights
            if isempty(net.rL)
                net.rL(1) = net.L;
            end
            net.rL(end + 1) = 0.99 * net.rL(end) + 0.01 * net.L;
            % net.L is the loss (half the squared error, averaged over the batch)
            % net.rL is its exponentially smoothed history
        end
        toc;
    end
end
```
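Here is a toy-sized sketch of the two bookkeeping pieces in cnntrain. The first is slicing a random permutation into batches, which visits every sample exactly once per epoch. The second is the exponential smoothing that produces `net.rL`. The sizes and the per-batch losses `L` are made up for illustration.

```matlab
m = 12; batchsize = 4;                 % toy sizes standing in for 60000 and 50
numbatches = m / batchsize;
kk = randperm(m);                      % shuffled sample order for one epoch
seen = [];
for l = 1:numbatches
    idx = kk((l - 1) * batchsize + 1 : l * batchsize);   % same slicing as cnntrain
    seen = [seen idx];                 %#ok<AGROW>
end

% Smoothed loss curve as in cnntrain: rL(end+1) = 0.99 * rL(end) + 0.01 * L
L = [1.0 0.8 0.6 0.5];                 % hypothetical per-batch losses
rL = L(1);                             % first entry is initialized with the first loss
for t = 1:numel(L)
    rL(end + 1) = 0.99 * rL(end) + 0.01 * L(t);   %#ok<AGROW>
end
```

With a weight of 0.01 on each new loss, `rL` reacts slowly. That is why the plotted curve is much smoother than the raw per-batch losses.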

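Inside cnntrain, cnnff runs first. It performs the forward pass, feeding the data through the network to obtain the corresponding outputs. The code with comments: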

```matlab
%% Forward pass
function net = cnnff(net, x)
    n = numel(net.layers);     % number of layers: n = 5
    net.layers{1}.a{1} = x;    % layer 1 (input layer)
    % a holds a layer's output maps; here a{1} is [28, 28, 50] (rows x columns x batch size)
    inputmaps = 1;

    for l = 2 : n   %  for each layer
        if strcmp(net.layers{l}.type, 'c')   % convolution layer
            %  !!below can probably be handled by insane matrix operations
            for j = 1 : net.layers{l}.outputmaps   %  for each output map
                %  create temp output map
                z = zeros(size(net.layers{l - 1}.a{1}) - [net.layers{l}.kernelsize - 1 net.layers{l}.kernelsize - 1 0]);
                % z accumulates the output feature map
                % l = 2: size(net.layers{l - 1}.a{1}) = [28, 28, 50],
                % z = zeros([28, 28, 50] - [5 - 1, 5 - 1, 0]) = zeros([24, 24, 50])
                for i = 1 : inputmaps   %  for each input map
                    %  convolve with corresponding kernel and add to temp output map
                    z = z + convn(net.layers{l - 1}.a{i}, net.layers{l}.k{i}{j}, 'valid');
                    % 'valid' keeps only the part computed without zero padding
                end
                %  add bias, pass through nonlinearity
                net.layers{l}.a{j} = sigm(z + net.layers{l}.b{j});
                % add the bias and apply the sigmoid; the result is this layer's output
            end
            %  set number of input maps to this layers number of outputmaps
            inputmaps = net.layers{l}.outputmaps;
        elseif strcmp(net.layers{l}.type, 's')   % subsampling layer
            %  downsample
            for j = 1 : inputmaps
                z = convn(net.layers{l - 1}.a{j}, ones(net.layers{l}.scale) / (net.layers{l}.scale ^ 2), 'valid');   %  !! replace with variable
                % convolve the previous output with a 2x2 matrix of 1/4 (the mean of every 2x2 window),
                % keeping the 'valid' part; then keep every scale-th row and column
                net.layers{l}.a{j} = z(1 : net.layers{l}.scale : end, 1 : net.layers{l}.scale : end, :);
            end
        end
    end

    % final single-layer perceptron
    %  concatenate all end layer feature maps into vector
    net.fv = [];
    for j = 1 : numel(net.layers{n}.a)
        sa = size(net.layers{n}.a{j});   % size of a last-layer map: sa = [4, 4, 50]
        net.fv = [net.fv; reshape(net.layers{n}.a{j}, sa(1) * sa(2), sa(3))];
        % each map becomes a [16, 50] block; stacking all 12 gives net.fv of size [192, 50]
    end
    %  feedforward into output perceptrons
    net.o = sigm(net.ffW * net.fv + repmat(net.ffb, 1, size(net.fv, 2)));
    % output = sigmoid([10, 192] * [192, 50] + bias replicated for the 50 samples), size [10, 50]
end
```
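The subsampling branch of cnnff is mean pooling written as a convolution. A `'valid'` convolution with `ones(s)/s^2` averages every s×s window, overlapping ones included. The stride-s indexing then keeps only the non-overlapping windows. The sketch below checks this against explicit block means on a small hypothetical batch of two 4×4 maps. It then flattens the pooled maps the same way `net.fv` is built. Because `ones(s)` is symmetric, the kernel flip inside `convn` makes no difference here.

```matlab
A = reshape(1:16, 4, 4);   % one 4 x 4 feature map
A = cat(3, A, 2 * A);      % batch of 2 maps, batch in dimension 3 as in cnnff
s = 2;                     % scale

% Pooling as written in cnnff: average every s x s window, then keep every s-th one
z = convn(A, ones(s) / s^2, 'valid');
P = z(1:s:end, 1:s:end, :);

% Reference: explicit means over non-overlapping s x s blocks
R = zeros(2, 2, 2);
for n = 1:2
    for r = 1:2
        for c = 1:2
            blk = A((r-1)*s+1 : r*s, (c-1)*s+1 : c*s, n);
            R(r, c, n) = mean(blk(:));
        end
    end
end

% Flattening as in cnnff: each map becomes a (rows*cols) x batch block, stacked vertically
maps = {P, P + 1};         % hypothetical stand-in for net.layers{n}.a
fv = [];
for j = 1:numel(maps)
    sa = size(maps{j});
    fv = [fv; reshape(maps{j}, sa(1) * sa(2), sa(3))];   %#ok<AGROW>
end
```

With two 2×2 maps per sample, `fv` is 8 × 2. In the real network the same loop turns twelve 4×4 maps into a 192 × 50 matrix.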

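Inside cnntrain, cnnbp runs next. It backpropagates the error and computes the gradients. This part is harder to follow, so it helps to read up on how error backpropagation works first. The code with comments: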

```matlab
function net = cnnbp(net, y)
    n = numel(net.layers);

    %   error
    net.e = net.o - y;   % difference between the network output and the target output
    %  loss function
    net.L = 1/2* sum(net.e(:) .^ 2) / size(net.e, 2);
    % loss: half the squared error, averaged over the batch (the 1/2 simplifies the derivative)

    %%  backprop deltas
    net.od = net.e .* (net.o .* (1 - net.o));   %  output delta
    % error * output * (1 - output): derivative of the loss with respect to the output
    % neurons' pre-activations, since sigmoid'(z) = o .* (1 - o); the learning rate is applied later
    net.fvd = (net.ffW' * net.od);              %  feature vector delta
    % propagate the output delta back through ffW to the feature vector
    if strcmp(net.layers{n}.type, 'c')          %  only conv layers has sigm function
        % only needed if the last layer is a convolution layer, whose output went through a sigmoid
        net.fvd = net.fvd .* (net.fv .* (1 - net.fv));
    end

    %  reshape feature vector deltas into output map style
    sa = size(net.layers{n}.a{1});   % last-layer maps: 4x4, 12 maps, 50 samples
    fvnum = sa(1) * sa(2);           % 4 * 4 = 16
    for j = 1 : numel(net.layers{n}.a)   % j = 1:12
        net.layers{n}.d{j} = reshape(net.fvd(((j - 1) * fvnum + 1) : j * fvnum, :), sa(1), sa(2), sa(3));   % 4x4x50 delta
    end

    for l = (n - 1) : -1 : 1   % from back to front
        if strcmp(net.layers{l}.type, 'c')   % convolution layer: its delta comes from the following subsampling layer
            for j = 1 : numel(net.layers{l}.a)
                net.layers{l}.d{j} = net.layers{l}.a{j} .* (1 - net.layers{l}.a{j}) .* (expand(net.layers{l + 1}.d{j}, [net.layers{l + 1}.scale net.layers{l + 1}.scale 1]) / net.layers{l + 1}.scale ^ 2);
                % expand copies each element of the next layer's delta into a scale x scale block
                % (the reverse of subsampling); dividing by scale^2 accounts for the averaging.
                % The result again has the form error * output * (1 - output)
            end
        elseif strcmp(net.layers{l}.type, 's')   % subsampling layer: its delta comes from the following convolution layer
            for i = 1 : numel(net.layers{l}.a)
                z = zeros(size(net.layers{l}.a{1}));
                for j = 1 : numel(net.layers{l + 1}.a)
                     z = z + convn(net.layers{l + 1}.d{j}, rot180(net.layers{l + 1}.k{i}{j}), 'full');
                     % 'full' convolution with the kernel rotated by 180 degrees
                end
                net.layers{l}.d{i} = z;
            end
        end
    end

    %%  calc gradients
    for l = 2 : n   % from front to back
        if strcmp(net.layers{l}.type, 'c')   % convolution layer
            for j = 1 : numel(net.layers{l}.a)
                for i = 1 : numel(net.layers{l - 1}.a)   % maps of the previous layer
                    net.layers{l}.dk{i}{j} = convn(flipall(net.layers{l - 1}.a{i}), net.layers{l}.d{j}, 'valid') / size(net.layers{l}.d{j}, 3);
                    % kernel gradient: the flipped input maps convolved with this layer's delta;
                    % flipping the batch dimension too makes 'valid' sum over the batch, then divide by the batch size
                end
                net.layers{l}.db{j} = sum(net.layers{l}.d{j}(:)) / size(net.layers{l}.d{j}, 3);   % bias gradient
            end
        end
    end
    % gradients of the single-layer perceptron
    net.dffW = net.od * (net.fv)' / size(net.od, 2);   % weight gradient
    net.dffb = mean(net.od, 2);                        % bias gradient

    function X = rot180(X)
        X = flipdim(flipdim(X, 1), 2);   % rotate by 180 degrees in the first two dimensions
    end
end
```
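The two convolution formulas in cnnbp can be checked numerically. Take a single 2-D map with `z = convn(a, k, 'valid')` and define `L = sum(d .* z)`, so that dL/dz is exactly an arbitrary upstream delta `d`. Then dL/dk should equal `convn(rot180(a), d, 'valid')`, which is what `flipall` does to a 2-D map. Likewise, dL/da should equal `convn(d, rot180(k), 'full')`. The sketch compares both with central finite differences and uses `rot90(X, 2)` for the 180° rotation. It also includes a small `kron`-based stand-in for the pooling step, assuming that the toolbox's `expand` copies each element into a scale×scale block, as the comment in cnnbp describes. All sizes are arbitrary.

```matlab
a = rand(6, 6);            % input map (single sample)
k = rand(3, 3);            % kernel
d = rand(4, 4);            % arbitrary upstream delta dL/dz, z = convn(a, k, 'valid') is 4 x 4
rot180 = @(X) rot90(X, 2);
Lfun = @(a_, k_) sum(sum(d .* convn(a_, k_, 'valid')));   % L is linear in z, so dL/dz = d

% Formulas used in cnnbp
dk = convn(rot180(a), d, 'valid');     % kernel gradient, 3 x 3
da = convn(d, rot180(k), 'full');      % delta passed back to the previous layer, 6 x 6

% Central finite differences
h = 1e-6;
dk_num = zeros(size(k));
for i = 1:numel(k)
    kp = k; kp(i) = kp(i) + h;
    km = k; km(i) = km(i) - h;
    dk_num(i) = (Lfun(a, kp) - Lfun(a, km)) / (2 * h);
end
da_num = zeros(size(a));
for i = 1:numel(a)
    ap = a; ap(i) = ap(i) + h;
    am = a; am(i) = am(i) - h;
    da_num(i) = (Lfun(ap, k) - Lfun(am, k)) / (2 * h);
end

% Mean-pooling backprop: copy each delta into an s x s block and divide by s^2
s = 2;
dpool = [1 2; 3 4];
up = kron(dpool, ones(s)) / s^2;       % stand-in for expand(d, [s s 1]) / s^2
```

Both analytic gradients match the numerical ones to within rounding error. This is why the kernel is rotated in the `'full'` convolution but the input is flipped in the `'valid'` one.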

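Inside cnntrain, cnnapplygrads runs next. It uses the computed gradients to update the convolution kernels, the biases, and the output-layer weights. My copy of the toolbox did not include this file, so I copied it from code found online. The code with comments: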

```matlab
function net = cnnapplygrads(net, opts)   % apply the gradients
    % update the feature-extraction layers (only the convolution layers have parameters updated here)
    for l = 2 : numel(net.layers)   % start from the second layer
        if strcmp(net.layers{l}.type, 'c')   % for each convolution layer
            for j = 1 : numel(net.layers{l}.a)   % for each output map of this layer
                % loop over all kernels net.layers{l}.k{ii}{j}
                for ii = 1 : numel(net.layers{l - 1}.a)   % for each output map of the previous layer
                    net.layers{l}.k{ii}{j} = net.layers{l}.k{ii}{j} - opts.alpha * net.layers{l}.dk{ii}{j};   % update the kernel
                end
                % update the bias
                net.layers{l}.b{j} = net.layers{l}.b{j} - opts.alpha * net.layers{l}.db{j};
            end
        end
    end
    % update the weights of the single-layer perceptron
    net.ffW = net.ffW - opts.alpha * net.dffW;
    net.ffb = net.ffb - opts.alpha * net.dffb;
end
```
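cnnapplygrads is plain mini-batch gradient descent: every parameter moves by `-opts.alpha` times its gradient. The sketch below applies the same forward, delta, gradient and update formulas to the output perceptron alone. It uses toy sizes and random hypothetical features `fv`, and records `net.L`-style losses over 20 steps. With only 8 features in [0, 1], the loss is smooth enough that `alpha = 1` decreases it at every step. That is a property of this toy setup, not a guarantee for the full network.

```matlab
sigm = @(x) 1 ./ (1 + exp(-x));   % sigmoid, as computed by the toolbox's sigm
fvnum = 8; onum = 3; batch = 10;  % toy sizes (the real network uses 192, 10, 50)
fv = rand(fvnum, batch);          % hypothetical feature vectors in [0, 1]
y = zeros(onum, batch);
y(sub2ind(size(y), randi(onum, 1, batch), 1:batch)) = 1;   % random one-hot targets
ffW = (rand(onum, fvnum) - 0.5) * 2 * sqrt(6 / (onum + fvnum));   % init as in cnnsetup
ffb = zeros(onum, 1);
alpha = 1;

losses = zeros(1, 20);
for t = 1:20
    o = sigm(ffW * fv + repmat(ffb, 1, batch));   % forward, as in cnnff
    e = o - y;
    losses(t) = 1/2 * sum(e(:) .^ 2) / batch;     % net.L in cnnbp
    od = e .* (o .* (1 - o));                     % net.od
    dffW = od * fv' / batch;                      % net.dffW
    dffb = mean(od, 2);                           % net.dffb
    ffW = ffW - alpha * dffW;                     % update, as in cnnapplygrads
    ffb = ffb - alpha * dffb;
end
```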

This completes the training of the CNN. Next, cnntest measures how accurately the trained network classifies the test set.

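```matlab
function [er, bad] = cnntest(net, x, y)
    %  feedforward
    net = cnnff(net, x);     % forward pass
    [~, h] = max(net.o);     % predicted class: row index of the largest output in each column
    [~, a] = max(y);         % true class
    bad = find(h ~= a);      % indices of the misclassified samples
    er = numel(bad) / size(y, 2);   % error rate
end
```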
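Here is a hand-checkable example of the error-rate computation in cnntest. It uses a made-up output matrix for 3 classes and 4 samples:

```matlab
o = [0.9  0.1 0.2 0.3;   % hypothetical network outputs (net.o): 3 classes x 4 samples
     0.05 0.7 0.6 0.3;
     0.05 0.2 0.2 0.4];
y = [1 0 0 0;            % one-hot targets, one column per sample
     0 1 0 1;
     0 0 1 0];
[~, h] = max(o);         % predicted class per column
[~, a] = max(y);         % true class per column
bad = find(h ~= a);      % indices of misclassified samples
er = numel(bad) / size(y, 2);
```

Here samples 3 and 4 are misclassified, so `bad = [3 4]` and `er = 0.5`.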