平台及框架:python3 + anaconda + pytorch + pycharm

我主要是根据陈云的《深度学习框架PyTorch入门与实践》来学习的,书中第二章的一个示例是利用卷积神经网络LeNet进行CIFAR-10分类。

原书中的代码是在IPython或Jupyter Notebook中写的,在pycharm中写的时候遇到一些问题,在代码中有注释。

下面附上LeNet进行CIFAR-10分类的python代码:

import torch as t from torch.autograd import Variable import torch.nn as nn import torch.nn.functional as F import torchvision as tv import torchvision.transforms as transforms from torchvision.transforms import ToPILImage from torch import optim show = ToPILImage() # 数据预处理 transform = transforms.Compose([ transforms.ToTensor(), # 转为Tensor transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)), # 数据归一化 # ToTensor()能够把灰度范围从0-255变换到0-1之间,而后面的transform.Normalize()则把0-1变换到(-1,1) # image=(image-mean)/std # 其中mean和std分别通过(0.5,0.5,0.5)和(0.5,0.5,0.5)进行指定。原来的0-1最小值0则变成(0-0.5)/0.5=-1,而最大值1则变成(1-0.5)/0.5=1 # 前面的(0.5,0.5,0.5)是RGB三个通道上的均值, 后面(0.5, 0.5, 0.5)是三个通道的标准差 ]) if __name__ == '__main__': # 输出4张图片时,返回多线程出错的解决方法 # 训练集 trainset = tv.datasets.CIFAR10(root='E:/pycharm projects/book1/data', train=True, download=True, transform=transform) trainloader = t.utils.data.DataLoader( trainset, batch_size=4, shuffle=True, num_workers=2 ) # 测试集 testset = tv.datasets.CIFAR10( 'E:/pycharm projects/book1/data', train=False, download=True, transform=transform ) testloader = t.utils.data.DataLoader( testset, batch_size=4, shuffle=False, num_workers=2 ) classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck') # (data, label) = trainset[100] # print(classes[label]) # show((data + 1) / 2).resize((100, 100)).show() # 按照书中不加.show()无法打印出图片 # dataiter = iter(trainloader) # images, labels = dataiter.next() # print(' '.join('%11s' % classes[labels[j]] for j in range(4))) # show(tv.utils.make_grid((images + 1) / 2)).resize((400, 100)).show() # 定义网络 class Net(nn.Module): def __init__(self): # 执行父类的构造函数 super(Net, self).__init__() # '1':输入图片为单通道 # '6':输出通道数 # '5':卷积核5*5 # 输入层 self.conv1 = nn.Conv2d(3, 6, 5) # ‘3’表示三通道彩图 # 卷积层 self.conv2 = nn.Conv2d(6, 16, 5) # 全连接层 self.fc1 = nn.Linear(16*5*5, 120) self.fc2 = nn.Linear(120, 84) self.fc3 = nn.Linear(84, 10) def forward(self, x): # 卷积-激活-池化 x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2)) x = F.max_pool2d(F.relu(self.conv2(x)), 2) # reshape, "-1"表示自适应 x = x.view(x.size()[0], -1) x = F.relu(self.fc1(x)) x = F.relu(self.fc2(x)) x = self.fc3(x) return x net = Net() # 定义损失函数和优化器 criterion = nn.CrossEntropyLoss() # 交叉熵损失函数 optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9) for epoch in range(2): running_loss = 0.0 for i, data in enumerate(trainloader, 0): # 输入数据 inputs, labels = data inputs, labels = Variable(inputs), Variable(labels) # 梯度清零 optimizer.zero_grad() # 前向传播+反向传播 outputs = net(inputs) loss = criterion(outputs, labels) loss.backward() # 更新参数 optimizer.step() running_loss += loss.data if i % 2000 == 1999: print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000)) running_loss = 0.0 print('Finished Training') # 测试数据集 # dataiter = iter(testloader) # images, labels = dataiter.next() # print('实际的label:', ' '.join('%08s' % classes[labels[j]] for j in range(4))) # # show(tv.utils.make_grid(images / 2 - 0.5)).resize((400, 100)).show() # # outputs = net(Variable(images)) # _, predicted = t.max(outputs.data, 1) # print('预测结果:', ' '.join('%5s' % classes[predicted[j]] for j in range(4))) correct = 0 total = 0 for data in testloader: images, labels = data outputs = net(Variable(images)) _, predicted = t.max(outputs, 1) total += labels.size(0) correct += (predicted == labels).sum() print('10000张测试集中的准确率为: %d %%' % (100 * t.true_divide(correct, total))) # tensor和int之间的除法不能直接用'/',需要用t.true_divide(correct, total)

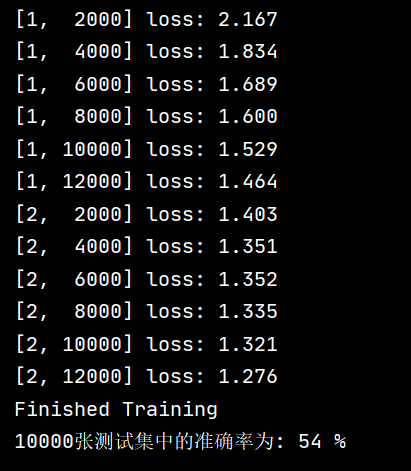

测试结果如下: