Introduction

There are currently two main ways to deploy a Kubernetes cluster in production:

- kubeadm deployment

- Binary deployment: download the release binaries from GitHub and deploy each component by hand to assemble a Kubernetes cluster.

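kubeadm lowers the barrier to entry but hides many details, which makes problems hard to troubleshoot. If you want more control, binary deployment is recommended. Deploying by hand takes more work, but you learn a lot about how the components work along the way, and it makes later maintenance easier.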

Version deployed in this article: v1.18.6. All images and installation packages used here can be downloaded from:

Link: https://pan.baidu.com/s/1au7bfc6O_T_Ja-ALiYzcjg

Extraction code: gspr

Environment

| Role | IP | Components |
|---|---|---|
| master | 192.168.1.14 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node1 | 192.168.1.15 | kubelet, kube-proxy, docker, etcd |
| node2 | 192.168.1.16 | kubelet, kube-proxy, docker, etcd |

Environment initialization

Every host in the cluster must be initialized.

Stop and disable the firewalld firewall on all servers:

systemctl stop firewalld; systemctl disable firewalld

Disable swap:

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
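To confirm that swap is really off after `swapoff -a`, you can inspect /proc/swaps. That file always contains a header line, plus one line for each active swap area. Below is a minimal sketch. `swap_is_off` is a hypothetical helper name, not a system command. The optional path argument exists only so the function can be tested against a sample file.

```shell
# Hypothetical helper: succeed only when no swap area is active.
# /proc/swaps has one header line; every further line is an active swap area.
swap_is_off() {
  local swaps_file=${1:-/proc/swaps}   # optional path argument, handy for testing
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}
```

Run `swap_is_off && echo "swap is off"` on each host before continuing.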

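Disable SELinux:

setenforce 0

# On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, and sed -i would replace the link instead of editing the real file, so edit the real file directly
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config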

Declare the hostnames in /etc/hosts on every host:

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.14 master

192.168.1.15 node1

192.168.1.16 node2

Set the hostname, upgrade the kernel, and install Docker CE

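Run the init.sh script below. It performs four tasks:

- Set the server hostname

- Install the dependencies Kubernetes needs

- Upgrade the system kernel

- Install docker-ce

Run init.sh on every machine, as shown after the script.

PS: init.sh only supports CentOS and is safe to run more than once.

The script: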

#!/usr/bin/env bash

function Check_linux_system(){
    linux_version=$(cat /etc/redhat-release 2>/dev/null)
    if [[ ${linux_version} =~ "CentOS" ]];then
        echo -e "\033[32;32m OS is ${linux_version} \033[0m\n"
    else
        echo -e "\033[32;32m This system is not CentOS; this script only supports CentOS \033[0m\n"
        exit 1
    fi
}

function Set_hostname(){
    if [ -n "$HostName" ];then
        grep -qx "$HostName" /etc/hostname && echo -e "\033[32;32m Hostname already set, skipping the hostname step \033[0m\n" && return
        case $HostName in
        help)
            echo -e "\033[32;32m Usage: bash init.sh <hostname> \033[0m\n"
            exit 1
            ;;
        *)
            hostname "$HostName"
            echo "$HostName" > /etc/hostname
            # Assumes the primary NIC is eth0. Only its IPv4 address is written, because
            # "ifconfig eth0 | grep inet" would also match the inet6 line, and ifconfig
            # (net-tools) is not installed until the next step
            echo "$(ip -4 -o addr show eth0 | awk '{print $4}' | cut -d/ -f1) $HostName" >> /etc/hosts
            ;;
        esac
    else
        echo -e "\033[32;32m No hostname given; usage: bash init.sh <hostname> \033[0m\n"
        exit 1
    fi
}

function Install_depend_environment(){
    rpm -qa | grep -q nfs-utils && echo -e "\033[32;32m Dependencies already installed, skipping this step \033[0m\n" && return
    yum install -y nfs-utils curl yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl telnet
    echo -e "\033[32;32m Upgrading the CentOS 7 kernel to 5.x to avoid Docker CE compatibility issues \033[0m\n"
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org &&
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm &&
    yum --disablerepo=* --enablerepo=elrepo-kernel repolist &&
    yum --disablerepo=* --enablerepo=elrepo-kernel install -y kernel-ml.x86_64 &&
    yum remove -y kernel-tools-libs.x86_64 kernel-tools.x86_64 &&
    yum --disablerepo=* --enablerepo=elrepo-kernel install -y kernel-ml-tools.x86_64 &&
    grub2-set-default 0
    modprobe br_netfilter
    # Load br_netfilter again after the reboot; without it the net.bridge.* keys do not exist
    echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
    cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
    sysctl -p /etc/sysctl.d/k8s.conf
    ls /proc/sys/net/bridge
}

function Install_docker(){
    rpm -qa | grep -q docker && echo -e "\033[32;32m Docker already installed, skipping this step \033[0m\n" && return
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum makecache fast
    yum -y install docker-ce-19.03.6 docker-ce-cli-19.03.6
    systemctl enable docker.service
    systemctl start docker.service
    systemctl stop docker.service
    echo '{"registry-mirrors": ["https://4xr1qpsp.mirror.aliyuncs.com"], "log-opts": {"max-size":"500m", "max-file":"3"}}' > /etc/docker/daemon.json
    systemctl daemon-reload
    systemctl start docker
}

HostName=$1
Check_linux_system &&
Set_hostname &&
Install_depend_environment &&
Install_docker

Run the script:

# On the master; the argument after init.sh is the hostname to give this server
chmod +x init.sh && ./init.sh master

# Reboot the server after init.sh finishes

reboot

### node1 ###

chmod +x init.sh && ./init.sh node1

reboot

### node2 ###

chmod +x init.sh && ./init.sh node2

reboot
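After the reboot, each host should be running the elrepo kernel-ml kernel that init.sh selected with `grub2-set-default 0`. Below is a minimal sketch of a check. It assumes, as the script's message says, that a 5.x or newer kernel is the goal. `kernel_major_at_least` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if the kernel's major version is >= the given minimum.
# $1: minimum major version; $2: release string (defaults to the running kernel, uname -r).
kernel_major_at_least() {
  local min=$1
  local release=${2:-$(uname -r)}   # illustrative form: 5.8.13-1.el7.elrepo.x86_64
  local major=${release%%.*}        # keep only the part before the first dot
  [ "$major" -ge "$min" ]
}
```

On each host, `kernel_major_at_least 5 || echo "still on the old kernel, check grub2-set-default"` flags a machine that booted the stock 3.10 kernel.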

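Deploy the etcd cluster

etcd is a distributed key-value store, and Kubernetes keeps all of its data in etcd, so we set up an etcd database first. To avoid a single point of failure, etcd should be deployed as a cluster. This guide uses a 3-member cluster, which tolerates the failure of 1 machine. A 5-member cluster would tolerate 2 failures.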
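These fault-tolerance numbers follow from etcd's majority quorum. A cluster of n members needs floor(n/2)+1 members alive, so it tolerates n - (floor(n/2)+1) failures. Here is a small shell sketch of that arithmetic; the function names are only illustrative.

```shell
# Majority quorum of an n-member etcd cluster: floor(n/2) + 1
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while a quorum survives: n - quorum
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

The loop reports a tolerance of 1 for both 3 and 4 members. That is why odd cluster sizes are the usual choice.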

| Node name | IP |
|---|---|
| etcd01 | 192.168.1.14 |
| etcd02 | 192.168.1.15 |
| etcd03 | 192.168.1.16 |

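PS: to save machines, the etcd cluster here shares hosts with the Kubernetes nodes. It can also run on separate machines outside the Kubernetes cluster.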

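Prepare the cfssl certificate tools

cfssl is an open-source certificate management tool that generates certificates from JSON files. It is easier to use than openssl.

Run the following on any one server; here we use the master node.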

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Generate the etcd certificates

- Create the working directories

[root@192.168.1.14 ~]# mkdir -pv /data/tls/{etcd,k8s}

mkdir: created directory ‘/data’

mkdir: created directory ‘/data/tls’

mkdir: created directory ‘/data/tls/etcd’

mkdir: created directory ‘/data/tls/k8s’

[root@192.168.1.14 ~]#cd /data/tls/etcd/

- Self-sign the TLS certificates

# Create the certificate.sh script

[root@192.168.1.14 /data/tls/etcd]#vim certificate.sh

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------
# Note: adjust the IP addresses below to your own environment;
# every etcd member's IP must be listed in "hosts"

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.1.14",

"192.168.1.15",

"192.168.1.16"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

Run the script to generate the certificates:

[root@192.168.1.14 /data/tls/etcd]#chmod +x certificate.sh && ./certificate.sh

[root@192.168.1.14 /data/tls/etcd]#ls *.pem

ca-key.pem ca.pem server-key.pem server.pem

Create the etcd working directories and extract the binaries

[root@192.168.1.14 /data/tls/etcd]#mkdir -pv /opt/etcd/{bin,cfg,ssl}

mkdir: created directory ‘/opt/etcd’

mkdir: created directory ‘/opt/etcd/bin’

mkdir: created directory ‘/opt/etcd/cfg’

mkdir: created directory ‘/opt/etcd/ssl’

[root@192.168.1.14 ~]#cd /usr/local/src/

[root@192.168.1.14 /usr/local/src]#tar xf offline-k8s-packages.tar.gz
[root@192.168.1.14 /usr/local/src]#cd offline-k8s-packages/
[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#cp -a etcd-v3.4.7-linux-amd64/etcd* /opt/etcd/bin/

### Write the script that generates the etcd configuration ###

[root@192.168.1.14 /opt/etcd/cfg]#mkdir -pv /data/etcd/

mkdir: created directory ‘/data/etcd/’

[root@192.168.1.14 /opt/etcd/cfg]#cd /data/etcd/

[root@192.168.1.14 /data/etcd]#vim etcd.sh

#!/bin/bash
# Usage: ./etcd.sh <member name> <member IP> <initial-cluster>
etcd_NAME=${1:-"etcd01"}
etcd_IP=${2:-"127.0.0.1"}
# initial-cluster lists peer URLs, which use port 2380
etcd_CLUSTER=${3:-"etcd01=https://127.0.0.1:2380"}

# The nested keys under *-transport-security must be indented for the YAML to parse correctly
cat <<EOF >/opt/etcd/cfg/etcd.yml
name: ${etcd_NAME}
data-dir: /var/lib/etcd/default.etcd
listen-peer-urls: https://${etcd_IP}:2380
listen-client-urls: https://${etcd_IP}:2379,https://127.0.0.1:2379
advertise-client-urls: https://${etcd_IP}:2379
initial-advertise-peer-urls: https://${etcd_IP}:2380
initial-cluster: ${etcd_CLUSTER}
initial-cluster-token: etcd-cluster
initial-cluster-state: new
client-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false
peer-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false
debug: false
logger: zap
log-outputs: [stderr]
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=etcd Server
Documentation=https://github.com/etcd-io/etcd
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
LimitNOFILE=65536
Restart=on-failure
RestartSec=5s
TimeoutStartSec=0
ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/cfg/etcd.yml

[Install]
WantedBy=multi-user.target
EOF

Run etcd.sh:

[root@192.168.1.14 /data/etcd]#chmod +x etcd.sh

[root@192.168.1.14 /data/etcd]#./etcd.sh etcd01 192.168.1.14 etcd01=https://192.168.1.14:2380,etcd02=https://192.168.1.15:2380,etcd03=https://192.168.1.16:2380

### Copy the certificates into the etcd working directory ###

[root@192.168.1.14 /data/etcd]#cp -a /data/tls/etcd/*pem /opt/etcd/ssl/

[root@192.168.1.14 /data/etcd]#ls /opt/etcd/ssl/

ca-key.pem ca.pem server-key.pem server.pem
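The third argument passed to etcd.sh above must list every member with its peer URL on port 2380, and it must be identical on all three nodes. Building it from name=IP pairs avoids typos. Below is a minimal sketch; `build_initial_cluster` is a hypothetical helper name.

```shell
# Hypothetical helper: print an etcd initial-cluster value from "name=IP" pairs.
# Peer URLs use port 2380; 2379 is the client port and does not belong here.
build_initial_cluster() {
  local out="" pair name ip
  for pair in "$@"; do
    name=${pair%%=*}
    ip=${pair#*=}
    out="${out:+$out,}${name}=https://${ip}:2380"
  done
  echo "$out"
}
```

For example: `./etcd.sh etcd01 192.168.1.14 "$(build_initial_cluster etcd01=192.168.1.14 etcd02=192.168.1.15 etcd03=192.168.1.16)"`.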

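PS: start the members of an etcd cluster at roughly the same time. Otherwise, error messages appear while a member waits for its peers to form the cluster.

Copy the etcd working directory and unit file to node1 and node2: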

[root@192.168.1.14 ~]#scp -r /opt/etcd/ node1:/opt/

[root@192.168.1.14 ~]#scp -r /opt/etcd/ node2:/opt/

[root@192.168.1.14 ~]#scp /usr/lib/systemd/system/etcd.service node1:/usr/lib/systemd/system/

[root@192.168.1.14 ~]#scp /usr/lib/systemd/system/etcd.service node2:/usr/lib/systemd/system/

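Switch to node1 and adjust the configuration. Replace the master's IP everywhere except on the initial-cluster line, which must keep all three member addresses:

[root@192.168.1.15 ~]#cd /opt/etcd/cfg/
[root@192.168.1.15 /opt/etcd/cfg]#sed -i '/^initial-cluster:/!s/192\.168\.1\.14/192.168.1.15/g' etcd.yml
[root@192.168.1.15 /opt/etcd/cfg]#sed -i 's/name: etcd01/name: etcd02/g' etcd.yml

### Check carefully: initial-cluster must list all three members and be identical on every node ###
[root@192.168.1.15 /opt/etcd/cfg]#vim etcd.yml
...
initial-cluster: etcd01=https://192.168.1.14:2380,etcd02=https://192.168.1.15:2380,etcd03=https://192.168.1.16:2380
...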

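Switch to node2 and adjust the configuration the same way:

[root@192.168.1.16 ~]#cd /opt/etcd/cfg/
[root@192.168.1.16 /opt/etcd/cfg]#sed -i '/^initial-cluster:/!s/192\.168\.1\.14/192.168.1.16/g' etcd.yml
[root@192.168.1.16 /opt/etcd/cfg]#sed -i 's/name: etcd01/name: etcd03/g' etcd.yml

### Check carefully: initial-cluster must list all three members and be identical on every node ###
[root@192.168.1.16 /opt/etcd/cfg]#vim etcd.yml
...
initial-cluster: etcd01=https://192.168.1.14:2380,etcd02=https://192.168.1.15:2380,etcd03=https://192.168.1.16:2380
...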
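Manual edits like these are easy to get wrong. Before starting etcd, it helps to check the initial-cluster value on each node: no member name may appear twice, and the node's own name=peer-URL entry must be present. Below is a minimal sketch; `check_initial_cluster` is a hypothetical helper name.

```shell
# Hypothetical check for an etcd initial-cluster value.
# $1: the initial-cluster string; $2: this member's "name=https://IP:2380" entry.
check_initial_cluster() {
  local cluster=$1 self=$2 dup
  # Member names are the part before "=" in each comma-separated entry
  dup=$(printf '%s\n' "$cluster" | tr ',' '\n' | cut -d= -f1 | sort | uniq -d)
  if [ -n "$dup" ]; then
    echo "duplicate member name(s): $dup" >&2
    return 1
  fi
  case ",$cluster," in
    *",$self,"*) return 0 ;;
    *) echo "missing own entry: $self" >&2; return 1 ;;
  esac
}
```

On node2, for example: `check_initial_cluster "$(awk '/^initial-cluster:/{print $2}' /opt/etcd/cfg/etcd.yml)" etcd02=https://192.168.1.15:2380`.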

Start etcd and verify

### Start etcd on all three nodes ###
systemctl enable etcd ; systemctl start etcd

### Add the etcd binaries to PATH (single quotes keep $PATH unexpanded in the file) ###
echo 'export PATH=/opt/etcd/bin:$PATH' > /etc/profile.d/etcd.sh
. /etc/profile.d/etcd.sh

### Verify ###
etcdctl --write-out=table \
  --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem \
  --endpoints=https://192.168.1.14:2379,https://192.168.1.15:2379,https://192.168.1.16:2379 endpoint health

+---------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+---------------------------+--------+-------------+-------+

| https://192.168.1.14:2379 | true | 13.341351ms | |

| https://192.168.1.15:2379 | true | 14.125986ms | |

| https://192.168.1.16:2379 | true | 18.895577ms | |

+---------------------------+--------+-------------+-------+

The etcd cluster is now up and running.

Deploy the master node

Generate the kube-apiserver certificates

[root@192.168.1.14 ~]#cd /data/tls/k8s/

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the CA certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@192.168.1.14 /data/tls/k8s]#ls *pem

ca-key.pem ca.pem

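Issue the kube-apiserver certificate

[root@192.168.1.14 ~]#cd /data/tls/k8s/

### The hosts field must contain every Master/LB/VIP IP, with none missing. Add a few spare IPs to make later scale-out easier. 10.0.0.1 is the first address of --service-cluster-ip-range, used by the in-cluster kubernetes Service. ###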

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.1.14",

"192.168.1.15",

"192.168.1.16",

"192.168.1.17",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

### Generate the certificate ###

[root@192.168.1.14 /data/tls/k8s]#cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

### Check the generated certificates ###

[root@192.168.1.14 /data/tls/k8s]#ls server*pem

server-key.pem server.pem
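The 10.0.0.1 entry in hosts is not arbitrary. kube-apiserver gives the in-cluster `kubernetes` Service the first address of --service-cluster-ip-range (10.0.0.0/24 in the kube-apiserver configuration below). Pods reach the apiserver through that address, so the certificate must cover it. Below is a minimal sketch of computing the address in shell; the function names are hypothetical.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1                     # split "a.b.c.d" on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert an integer back to dotted IPv4 notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Hypothetical helper: first host address of a CIDR.
first_service_ip() {
  local net=${1%/*} bits=${1#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

first_service_ip 10.0.0.0/24    # prints 10.0.0.1
```

If you choose a different Service range, put the address this prints into server-csr.json instead of 10.0.0.1.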

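Create the Kubernetes working directories and extract the binaries

The binaries are included in the download linked at the beginning of this article.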

[root@192.168.1.14 ~]#mkdir -pv /opt/kubernetes/{bin,cfg,ssl,logs}

[root@192.168.1.14 ~]#cd /usr/local/src/offline-k8s-packages

[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#tar xf kubernetes-server-linux-amd64.tar.gz
[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#cd kubernetes/server/bin/
[root@192.168.1.14 /usr/local/src/offline-k8s-packages/kubernetes/server/bin]#cp -a kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin/

### Add to PATH (single quotes keep $PATH unexpanded in the file) ###
[root@192.168.1.14 ~]#echo 'export PATH=/opt/kubernetes/bin:$PATH' > /etc/profile.d/k8s.sh

[root@192.168.1.14 ~]#. /etc/profile.d/k8s.sh

Deploy kube-apiserver

- Write the config file and systemd unit

[root@192.168.1.14 ~]#mkdir -p /data/k8s

[root@192.168.1.14 ~]#cd /data/k8s

### Script that generates kube-apiserver.conf ###

[root@192.168.1.14 /data/k8s]#vim apiserver.sh

#!/bin/bash

MASTER_ADDRESS=${1:-"192.168.1.14"}

ETCD_SERVERS=${2:-"https://127.0.0.1:2379"}

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--etcd-servers=${ETCD_SERVERS} \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=${MASTER_ADDRESS} \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

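Notes on the parameters used in the script above:

# --logtostderr: log to stderr; false here, so logs are written to --log-dir
# --v: log verbosity level
# --log-dir: log directory
# --etcd-servers: etcd cluster endpoints
# --bind-address: listen address
# --secure-port: HTTPS port
# --advertise-address: address advertised to the rest of the cluster
# --allow-privileged: allow privileged containers
# --service-cluster-ip-range: virtual IP range for Services
# --enable-admission-plugins: admission control plugins
# --authorization-mode: authorization modes; enables RBAC and Node authorization (nodes manage their own objects)
# --enable-bootstrap-token-auth: accept bootstrap-token Secrets for TLS bootstrapping (this guide authenticates kubelets with the static token in --token-auth-file)
# --token-auth-file: bootstrap token file
# --service-node-port-range: default port range for NodePort Services
# --kubelet-client-xxx: client certificate the apiserver uses to reach kubelets
# --tls-xxx-file: apiserver HTTPS certificate
# --etcd-xxxfile: certificates for connecting to the etcd cluster
# --audit-log-xxx: audit logging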

- Copy the generated certificates

### Copy the certificates into the Kubernetes working directory ###

[root@192.168.1.14 ~]#cp -a /data/tls/k8s/*pem /opt/kubernetes/ssl/

[root@192.168.1.14 ~]#ls /opt/kubernetes/ssl/

ca-key.pem ca.pem server-key.pem server.pem

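- Enable TLS bootstrapping

TLS bootstrapping: once the master's apiserver enables TLS authentication, the kubelet and kube-proxy on each node can only talk to kube-apiserver with a valid certificate signed by the CA. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complex. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically. The kubelet requests a certificate through the apiserver as a low-privilege user. Once the request is approved, the certificate is signed automatically by kube-controller-manager, using the CA given in --cluster-signing-cert-file. This approach is strongly recommended for nodes. It is currently used mainly for the kubelet; kube-proxy still uses a certificate that we issue ourselves.

Figure: TLS bootstrapping workflow

Create the token file referenced by --token-auth-file above: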

cat > /opt/kubernetes/cfg/token.csv << EOF

4fb823f13d1d21be5991b9e3a582718c,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

# Format: token,user name,UID,user group
# Note: this user does not need to be created with useradd
# The token is a random string; you can generate your own with:
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
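The token in token.csv and the TOKEN used later for bootstrap.kubeconfig must be the same value. Below is a minimal sketch that generates a fresh token with the same command and prints a complete token.csv line in the format described above. `make_bootstrap_token_line` is a hypothetical helper name.

```shell
# Hypothetical helper: print one token.csv line: token,user,UID,"group"
make_bootstrap_token_line() {
  local token
  # 16 random bytes rendered as 32 lowercase hex characters
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token"
}
```

For example, run `make_bootstrap_token_line > /opt/kubernetes/cfg/token.csv`, then reuse the first field of that file as TOKEN when generating bootstrap.kubeconfig.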

Run apiserver.sh:

[root@192.168.1.14 /data/k8s]#chmod +x apiserver.sh

[root@192.168.1.14 /data/k8s]#./apiserver.sh 192.168.1.14 https://192.168.1.14:2379,https://192.168.1.15:2379,https://192.168.1.16:2379

### Start the service and enable it at boot ###

[root@192.168.1.14 ~]#systemctl enable kube-apiserver ; systemctl start kube-apiserver

- Allow the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap

Deploy kube-controller-manager

- Create the config file and systemd unit

[root@192.168.1.14 ~]#cd /data/k8s/

[root@192.168.1.14 /data/k8s]#vim controller-manager.sh

#!/bin/bash

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect=true \
--master=127.0.0.1:8080 \
--bind-address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"
EOF

# \$ keeps the variable literal in the unit file so that systemd expands it at start time
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

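# --master: connect to the apiserver through the local insecure port 8080
# --leader-elect: elect a leader automatically when several instances run (HA)
# --cluster-signing-cert-file/--cluster-signing-key-file: CA used to issue kubelet certificates automatically; must be the same CA as the apiserver's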
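--cluster-cidr (the Pod network, 10.244.0.0/16) and --service-cluster-ip-range (10.0.0.0/24) must not overlap. The Pod range should also match the Network that flannel is configured with later. Below is a minimal sketch of an overlap check in shell; the helper names are hypothetical.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR as integers: "start end".
cidr_range() {
  local net=${1%/*} bits=${1#*/} size start
  size=$(( 1 << (32 - bits) ))
  start=$(( $(ip_to_int "$net") & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Hypothetical helper: succeed if the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = range A, $3 $4 = range B
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# prints: no overlap
if cidrs_overlap 10.244.0.0/16 10.0.0.0/24; then echo "overlap"; else echo "no overlap"; fi
```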

Run the script:

[root@192.168.1.14 /data/k8s]#chmod +x controller-manager.sh
[root@192.168.1.14 /data/k8s]#./controller-manager.sh

- Start the service and enable it at boot

[root@192.168.1.14 ~]#systemctl enable kube-controller-manager

[root@192.168.1.14 ~]#systemctl start kube-controller-manager

Deploy kube-scheduler

- Create the config file and systemd unit

[root@192.168.1.14 ~]#cd /data/k8s/

[root@192.168.1.14 /data/k8s]#vim scheduler.sh

#!/bin/bash

#

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --leader-elect --master=127.0.0.1:8080 --bind-address=127.0.0.1"

EOF

# --master: connect to the apiserver through the local insecure port 8080
# --leader-elect: elect a leader automatically when several instances run (HA)

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

### Run the script ###

[root@192.168.1.14 /data/k8s]#chmod +x scheduler.sh

[root@192.168.1.14 /data/k8s]#./scheduler.sh

- Start the service and enable it at boot

[root@192.168.1.14 /data/k8s]#systemctl enable kube-scheduler

[root@192.168.1.14 /data/k8s]#systemctl start kube-scheduler

Enable kubectl shell completion

[root@192.168.1.14 ~]#yum install bash-completion -y

[root@192.168.1.14 ~]#kubectl completion bash > /etc/bash_completion.d/kubectl

Start a new shell session to activate kubectl completion.

Check the cluster

# All components have started; check their status with kubectl:

[root@192.168.1.14 ~]#kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

Deploy the worker nodes

The following steps are performed on node1 (192.168.1.15).

Create the working directories and copy the binaries

[root@192.168.1.15 ~]#mkdir -pv /opt/kubernetes/{bin,cfg,ssl,logs}

[root@192.168.1.15 ~]#cd /usr/local/src/

[root@192.168.1.15 /usr/local/src]#tar xf offline-k8s-packages.tar.gz

[root@192.168.1.15 /usr/local/src]#cd offline-k8s-packages/

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#tar xf kubernetes-server-linux-amd64.tar.gz
[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#cd kubernetes/server/bin/
[root@192.168.1.15 /usr/local/src/offline-k8s-packages/kubernetes/server/bin]#cp -a kubelet kube-proxy /opt/kubernetes/bin/
[root@192.168.1.15 ~]#echo 'export PATH=/opt/kubernetes/bin:$PATH' > /etc/profile.d/k8s.sh
[root@192.168.1.15 ~]#. /etc/profile.d/k8s.sh

Deploy kubelet

- Load the Docker images Kubernetes needs

[root@192.168.1.15 ~]#cd /usr/local/src/offline-k8s-packages

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#tar xf k8s-imagesV1.18.6.tar.gz

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#docker load < k8s-imagesV1.18.6.tar

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.13.0-rc2 79dd6d6368e2 3 weeks ago 57.2MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 7 months ago 683kB

- Create the config file

[root@192.168.1.15 ~]#mkdir -pv /data/k8s

[root@192.168.1.15 ~]#cd /data/k8s

[root@192.168.1.15 /data/k8s]#vim kubelet.sh

#!/bin/bash

#

HostName=${1:-"node1"}

cat > /opt/kubernetes/cfg/kubelet.conf <<EOF

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--hostname-override=${HostName} \

--network-plugin=cni \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-config.yml \

--cert-dir=/opt/kubernetes/ssl \

--pod-infra-container-image=k8s.gcr.io/pause:3.2"

EOF

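# --hostname-override: the node's display name; must be unique within the cluster
# --network-plugin: enable CNI
# --kubeconfig: does not exist yet; it is generated automatically and then used to connect to the apiserver
# --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
# --config: configuration parameter file
# --cert-dir: directory where the kubelet certificates are generated
# --pod-infra-container-image: image of the infrastructure (pause) container that holds each Pod's network namespace

### systemd unit ###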

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

### Run the script ###

[root@192.168.1.15 /data/k8s]#chmod +x kubelet.sh

[root@192.168.1.15 /data/k8s]#./kubelet.sh node1

- Create the parameter file

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
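clusterDNS (10.0.0.2) has to be an address inside --service-cluster-ip-range (10.0.0.0/24). It must also equal the ClusterIP that the CoreDNS Service (kube-dns) receives when it is deployed later. Below is a minimal membership check; the helper names are hypothetical.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR $2.
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

ip_in_cidr 10.0.0.2 10.0.0.0/24 && echo "clusterDNS is inside the Service range"
```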

- Generate bootstrap.kubeconfig

Note: bootstrap.kubeconfig is generated on the master node and then copied to the node.

[root@192.168.1.14 ~]#cd /data/k8s/

KUBE_APISERVER="https://192.168.1.14:6443" # apiserver IP:PORT
TOKEN="4fb823f13d1d21be5991b9e3a582718c" # must match token.csv on the master

# Generate the kubelet bootstrap kubeconfig
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig
kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=bootstrap.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Copy it to the node's config directory

[root@192.168.1.14 /data/k8s]#scp bootstrap.kubeconfig node1:/opt/kubernetes/cfg/

- Copy the CA certificate to the node

From the master to the node:

[root@192.168.1.14 ~]#scp /opt/kubernetes/ssl/ca.pem node1:/opt/kubernetes/ssl/

- Start kubelet

[root@192.168.1.15 ~]#systemctl enable kubelet; systemctl start kubelet

- Approve the kubelet certificate so the node joins the cluster

On the master node:

# View the kubelet certificate requests

[root@192.168.1.14 ~]#kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-D_9vbQyKez8UqyNqYkVXrQ0fvpLyZ2LqSPqNhu65ZaQ 4m8s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Approve the request

[root@192.168.1.14 ~]#kubectl certificate approve node-csr-D_9vbQyKez8UqyNqYkVXrQ0fvpLyZ2LqSPqNhu65ZaQ

# List the nodes

[root@192.168.1.14 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 NotReady <none> 14s v1.18.6

# The node stays NotReady because the network plugin has not been deployed yet
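When several nodes bootstrap at once, you can pick the Pending requests out of the `kubectl get csr` listing instead of copying names by hand. Below is a minimal sketch with a hypothetical helper name. It reads the table on stdin and prints the names whose last (CONDITION) column is Pending. Review that list before approving, since approval grants the requester a client certificate.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# NAME of every request whose last column (CONDITION) is "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example on the listing shown above (on a real cluster: kubectl get csr | pending_csrs)
printf '%s\n' \
  'NAME AGE SIGNERNAME REQUESTOR CONDITION' \
  'node-csr-D_9vbQyKez8UqyNqYkVXrQ0fvpLyZ2LqSPqNhu65ZaQ 4m8s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending' |
  pending_csrs
```

Piping the result into `xargs -r kubectl certificate approve` approves the reviewed requests in one step. `-r` is the GNU xargs option that skips the command when the list is empty.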

Deploy kube-proxy

- Create the config file

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF

KUBE_PROXY_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

- Create the parameter file

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: node1
# clusterCIDR is the Pod network (--cluster-cidr), not the Service range
clusterCIDR: 10.244.0.0/16
EOF

- Generate the kubeconfig file

Run on the master node:

[root@192.168.1.14 ~]#cd /data/tls/k8s/

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the certificate

[root@192.168.1.14 /data/tls/k8s]#cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

[root@192.168.1.14 /data/tls/k8s]#ls kube-proxy*pem

kube-proxy-key.pem kube-proxy.pem

Generate the kubeconfig:

KUBE_APISERVER="https://192.168.1.14:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Copy the kubeconfig to the node's config directory

[root@192.168.1.14 /data/tls/k8s]#scp kube-proxy.kubeconfig node1:/opt/kubernetes/cfg/

- Create the kube-proxy systemd unit

Back on node1 (192.168.1.15):

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Start kube-proxy and enable it at boot

[root@192.168.1.15 ~]#systemctl enable kube-proxy; systemctl start kube-proxy

Deploy the CNI network

Note: kubelet and kube-proxy are not installed on the master node.

- Prepare the CNI plugin binaries

[root@192.168.1.15 ~]#mkdir -pv /opt/cni/bin

[root@192.168.1.15 ~]#cd /usr/local/src/offline-k8s-packages/

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#tar xf cni-plugins-linux-amd64-v0.8.7.tgz -C /opt/cni/bin/

- Load the images

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#tar xf k8s-imagesV1.18.6.tar.gz

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#docker load < k8s-imagesV1.18.6.tar

[root@192.168.1.15 /usr/local/src/offline-k8s-packages]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.13.0-rc2 79dd6d6368e2 3 weeks ago 57.2MB

coredns/coredns 1.7.0 bfe3a36ebd25 3 months ago 45.2MB

kubernetesui/metrics-scraper v1.0.4 86262685d9ab 6 months ago 36.9MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 7 months ago 683kB

- Deploy flannel

Run this step on the master node:

[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#kubectl apply -f kube-flannel.yaml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

- Verify

### Check the flannel pod ###

[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-sz7x7 1/1 Running 0 5s

### Check the node status ###

[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready <none> 4m46s v1.18.6

The worker node has changed from NotReady to Ready.

Deploy CoreDNS

- Apply the CoreDNS manifest

[root@192.168.1.14 /usr/local/src/offline-k8s-packages]#kubectl apply -f coredns.yaml

- Check

[root@192.168.1.14 ~]#kubectl get pods,service -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-85b4878f78-lrvpc 1/1 Running 0 68s

pod/kube-flannel-ds-sz7x7 1/1 Running 0 9m3s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP,9153/TCP 68s

- Verify

[root@192.168.1.14 ~]#kubectl run nginx-deploy --image=nginx:alpine

pod/nginx-deploy created

[root@192.168.1.14 ~]#kubectl expose pod nginx-deploy --name=nginx --port=80 --target-port=80 --protocol=TCP

service/nginx exposed

### Start a client pod and try to reach nginx by its Service name ###

[root@192.168.1.14 ~]#kubectl run -it --rm client --image=busybox

If you don't see a command prompt, try pressing enter.

/ # wget -O - -q nginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

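Add a new worker node

Add the new node2 to the cluster by copying the files of the already deployed node1 to it.

Note: node2 is assumed to be initialized already (init.sh run, docker-ce installed).

- Copy the files to the new node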

[root@192.168.1.15 ~]#scp -r /opt/cni/ node2:/opt/

[root@192.168.1.15 ~]#scp -r /opt/kubernetes/ node2:/opt/

[root@192.168.1.15 ~]#scp /usr/lib/systemd/system/kube* node2:/usr/lib/systemd/system/

- Load the images, edit the configuration, and start the services (the offline package must also be present on node2)

[root@192.168.1.16 /usr/local/src/offline-k8s-packages]#tar xf k8s-imagesV1.18.6.tar.gz
[root@192.168.1.16 /usr/local/src/offline-k8s-packages]#docker load < k8s-imagesV1.18.6.tar
[root@192.168.1.16 /usr/local/src/offline-k8s-packages]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.13.0-rc2 79dd6d6368e2 3 weeks ago 57.2MB

coredns/coredns 1.7.0 bfe3a36ebd25 3 months ago 45.2MB

kubernetesui/metrics-scraper v1.0.4 86262685d9ab 6 months ago 36.9MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 7 months ago 683kB

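# These files are generated automatically after the certificate request is approved. They differ on every node, so delete them and let node2 generate its own.
[root@192.168.1.16 ~]#rm -rf /opt/kubernetes/cfg/kubelet.kubeconfig
[root@192.168.1.16 ~]#cd /opt/kubernetes/cfg/
[root@192.168.1.16 /opt/kubernetes/cfg]#rm -rf /opt/kubernetes/ssl/kubelet*

# Change the node name; the two files must use the same name, otherwise errors occur
[root@192.168.1.16 /opt/kubernetes/cfg]#sed -i 's/node1/node2/g' kubelet.conf
[root@192.168.1.16 /opt/kubernetes/cfg]#sed -i 's/node1/node2/g' kube-proxy-config.yml

# Start the services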

[root@192.168.1.16 ~]#systemctl enable kubelet kube-proxy

[root@192.168.1.16 ~]#systemctl start kubelet kube-proxy
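A mismatch between the two node-name settings edited above is easy to miss. Below is a minimal sketch of a check, using a hypothetical helper name. It reads --hostname-override from kubelet.conf and hostnameOverride from kube-proxy-config.yml and compares them. It assumes the file layout used in this article.

```shell
# Hypothetical check: kubelet's --hostname-override must equal kube-proxy's hostnameOverride.
# $1: config directory (defaults to /opt/kubernetes/cfg as used in this article).
node_names_match() {
  local cfg_dir=${1:-/opt/kubernetes/cfg} kubelet_name proxy_name
  kubelet_name=$(sed -n 's/.*--hostname-override=\([^ "]*\).*/\1/p' "$cfg_dir/kubelet.conf")
  proxy_name=$(awk '$1 == "hostnameOverride:" { print $2 }' "$cfg_dir/kube-proxy-config.yml")
  echo "kubelet: ${kubelet_name:-<missing>}  kube-proxy: ${proxy_name:-<missing>}"
  [ -n "$kubelet_name" ] && [ "$kubelet_name" = "$proxy_name" ]
}
```

If `node_names_match` fails on node2, fix the file that still says node1 and restart kubelet and kube-proxy.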

- On the master, approve node2's kubelet certificate request

[root@192.168.1.14 ~]#kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-8Y-o-S-Ge3MR4Ico7cb93xpf1GAbwlwUreiH0tpqJR0 38m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-brk2tg384kUGQcDcAwXNzOtz0bhS5qYKSAoESIwV6qU 85s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@192.168.1.14 ~]#kubectl certificate approve node-csr-brk2tg384kUGQcDcAwXNzOtz0bhS5qYKSAoESIwV6qU

certificatesigningrequest.certificates.k8s.io/node-csr-brk2tg384kUGQcDcAwXNzOtz0bhS5qYKSAoESIwV6qU approved

# Wait a moment

[root@192.168.1.14 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready <none> 38m v1.18.6

node2 Ready <none> 11s v1.18.6

# Check

[root@192.168.1.14 ~]#kubectl get pods,svc -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/coredns-85b4878f78-lrvpc 1/1 Running 0 26m 10.244.0.2 node1 <none> <none>

pod/kube-flannel-ds-q4w4g 1/1 Running 0 73s 192.168.1.16 node2 <none> <none>

pod/kube-flannel-ds-sz7x7 1/1 Running 0 34m 192.168.1.15 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP,9153/TCP 26m k8s-app=kube-dns

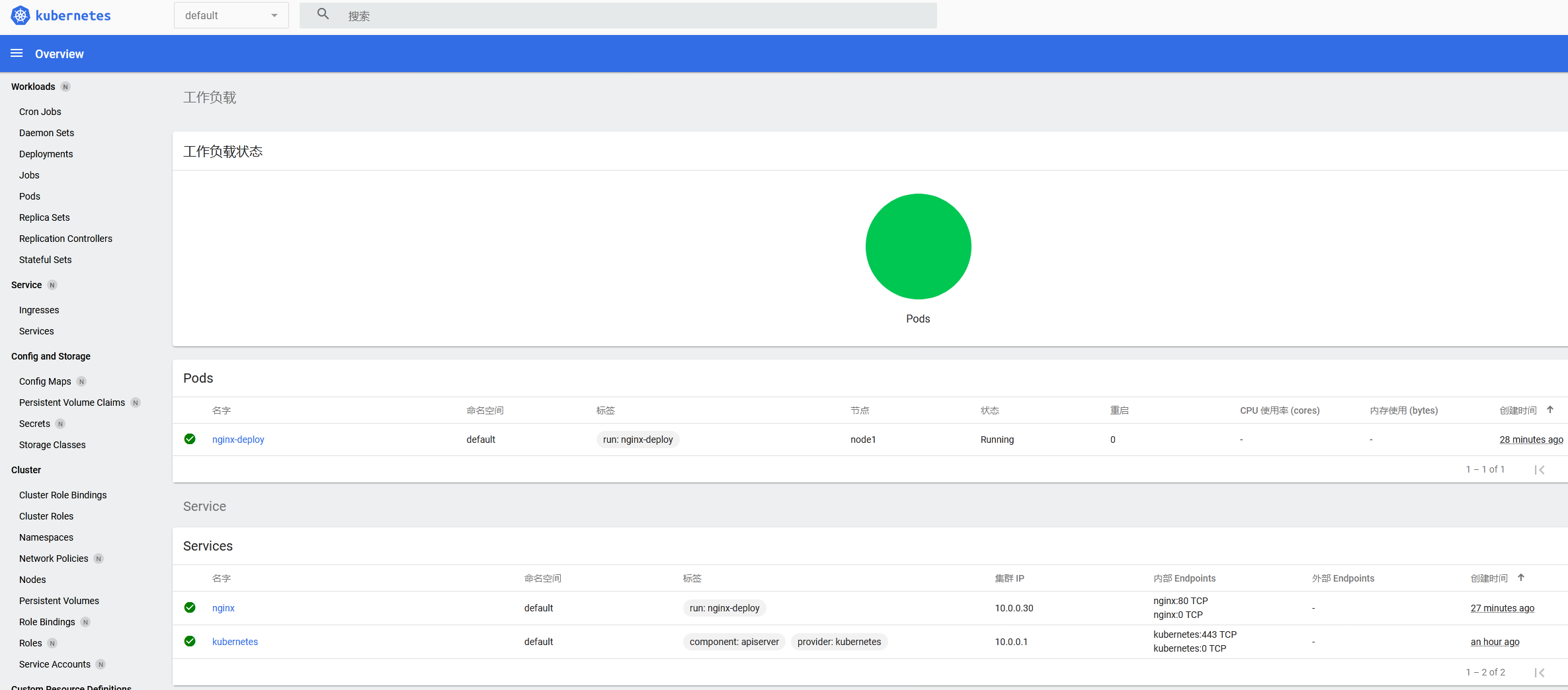

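Deploy the Dashboard

The manifest and images are included in the download package and can be used as-is.

- Edit the YAML to expose the Service outside the cluster

# By default the Dashboard is only reachable inside the cluster; change its Service to type NodePort to expose it:

[root@192.168.1.14 /usr/local/src/offline-k8s-packages/dashboard]#vim recommended.yaml
...
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001 # change here
  type: NodePort # change here
...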

[root@192.168.1.14 /usr/local/src/offline-k8s-packages/dashboard]#kubectl apply -f recommended.yaml

[root@192.168.1.14 ~]#kubectl get pods,svc -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dashboard-metrics-scraper-6b4884c9d5-kg98t 1/1 Running 0 42s 10.244.1.3 node2 <none> <none>

pod/kubernetes-dashboard-7d8574ffd9-dj6cx 1/1 Running 0 42s 10.244.1.2 node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dashboard-metrics-scraper ClusterIP 10.0.0.177 <none> 8000/TCP 42s k8s-app=dashboard-metrics-scraper

service/kubernetes-dashboard NodePort 10.0.0.3 <none> 443:30001/TCP 42s k8s-app=kubernetes-dashboard

- Create a service account and bind it to the built-in cluster-admin ClusterRole

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

# Next, open https://<node IP>:30001

# Paste the token printed above into the login page to access the Dashboard
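The describe output contains several fields, but the login page needs only the value after `token:`. Below is a minimal sketch that extracts it; `extract_token` is a hypothetical helper name.

```shell
# Hypothetical helper: print the value of the "token:" field from
# `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

For example: `kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token`.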