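Contents

OpenStack-Mitaka High Availability: Overview

OpenStack-Mitaka High Availability: Environment Initialization

OpenStack-Mitaka High Availability: Mariadb-Galera Cluster Deployment

OpenStack-Mitaka High Availability: Rabbitmq-server Cluster Deployment

OpenStack-Mitaka High Availability: memcache

OpenStack-Mitaka High Availability: Pacemaker+corosync+pcs High-Availability Cluster

OpenStack-Mitaka High Availability: Identity Service (keystone)

OpenStack-Mitaka High Availability: Image Service (glance)

OpenStack-Mitaka High Availability: Compute Service (Nova)

OpenStack-Mitaka High Availability: Networking Service (Neutron)

OpenStack-Mitaka High Availability: Dashboard

OpenStack-Mitaka High Availability: Launching an Instance

OpenStack-Mitaka High Availability: Testing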

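Introduction and Features

Pacemaker: works at the resource allocation layer and acts as the cluster resource manager.

Corosync: provides the cluster messaging layer, carrying heartbeat messages and cluster transaction messages.

Together, Pacemaker and Corosync are enough to build a high-availability cluster.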

Cluster Setup

Run the following on all three nodes:

# yum install pcs -y
# systemctl start pcsd ; systemctl enable pcsd
# echo 'hacluster' | passwd --stdin hacluster
# yum install haproxy rsyslog -y
# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf    # allow services to bind to the VIP even when it is not present on this node
# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf    # enable kernel IP forwarding
# sysctl -p
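Because `>>` appends unconditionally, re-running the two `echo` commands adds duplicate lines to /etc/sysctl.conf. The following is a minimal sketch of an idempotent variant. The function name `ensure_sysctl_line` is a hypothetical helper, not part of any package, and the file path is a parameter so the helper can be tried on a scratch file first.

```shell
#!/bin/sh
# Hypothetical helper: append "key = value" to a sysctl file only if
# no uncommented line for that key already exists there.
# It does not change the value of a key that is already present.
ensure_sysctl_line() {
    file="$1"; key="$2"; value="$3"
    # Escape dots so the key is matched literally, not as a regex wildcard.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if ! grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$file" 2>/dev/null; then
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Intended usage on each controller (as root), followed by `sysctl -p`:
#   ensure_sysctl_line /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1
#   ensure_sysctl_line /etc/sysctl.conf net.ipv4.ip_forward 1
```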

On any one node, create the MariaDB user that haproxy uses for health checks:

MariaDB [(none)]> CREATE USER 'haproxy'@'%' ;

Configure haproxy as the load balancer:

[root@controller1 ~]# egrep -v "^#|^$" /etc/haproxy/haproxy.cfg
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats
defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 4000
listen galera_cluster
    mode tcp
    bind 192.168.0.10:3306
    balance source
    option mysql-check user haproxy
    server controller1 192.168.0.11:3306 check inter 2000 rise 3 fall 3 backup
    server controller2 192.168.0.12:3306 check inter 2000 rise 3 fall 3
    server controller3 192.168.0.13:3306 check inter 2000 rise 3 fall 3 backup
listen memcache_cluster
    mode tcp
    bind 192.168.0.10:11211
    balance source
    option tcplog
    server controller1 192.168.0.11:11211 check inter 2000 rise 3 fall 3
    server controller2 192.168.0.12:11211 check inter 2000 rise 3 fall 3
    server controller3 192.168.0.13:11211 check inter 2000 rise 3 fall 3

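Notes:

(1) Make sure the haproxy configuration is correct. It is a good idea to first start haproxy with a modified IP and port to test that it works.

(2) By default Mariadb-Galera and rabbitmq listen on 0.0.0.0. Change them to listen on each node's own 192.168.0.x address, so that the same port on the VIP stays free for haproxy.

(3) Copy the verified haproxy configuration to the other nodes. There is no need to start the haproxy service manually.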
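One way to act on notes (1) and (3) is to let haproxy parse the file before it is copied anywhere. The sketch below wraps `haproxy -c -f`, which checks a configuration file and exits without starting the proxy. The function name `validate_haproxy_cfg` and the `HAPROXY_BIN` override are hypothetical, and the commented copy loop assumes root SSH access between the controllers.

```shell
#!/bin/sh
# Check an haproxy configuration file without starting the service.
# HAPROXY_BIN is a hypothetical override so the binary can be swapped out.
validate_haproxy_cfg() {
    cfg="${1:-/etc/haproxy/haproxy.cfg}"
    bin="${HAPROXY_BIN:-haproxy}"
    if ! command -v "$bin" >/dev/null 2>&1; then
        echo "haproxy binary not found: $bin" >&2
        return 2
    fi
    # -c parses the file and exits; -f names the configuration file.
    "$bin" -c -f "$cfg"
}

# Intended usage on controller1, copying only a file that parsed cleanly:
#   validate_haproxy_cfg && for n in controller2 controller3; do
#       scp /etc/haproxy/haproxy.cfg "$n":/etc/haproxy/haproxy.cfg
#   done
```

A clean parse only shows that the syntax is valid; it does not prove that the VIP or the backend ports are reachable.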

Configure logging for haproxy (run on all controller nodes):

# vim /etc/rsyslog.conf
…
$ModLoad imudp
$UDPServerRun 514
…
local2.* /var/log/haproxy/haproxy.log
…
# mkdir -pv /var/log/haproxy/
mkdir: created directory ‘/var/log/haproxy/’
# systemctl restart rsyslog
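As an alternative to editing /etc/rsyslog.conf by hand on every node, the same three directives can be written to a drop-in file. This is only a sketch: the helper name `write_haproxy_rsyslog_conf` is hypothetical, and it assumes that the main rsyslog.conf includes /etc/rsyslog.d/*.conf and does not already load imudp.

```shell
#!/bin/sh
# Hypothetical helper: write the haproxy logging rules as a drop-in file.
# Assumes the main rsyslog.conf includes <dir>/*.conf and does not already
# load imudp; loading the module twice makes rsyslog report an error.
write_haproxy_rsyslog_conf() {
    dir="${1:-/etc/rsyslog.d}"
    # The quoted 'EOF' keeps $ModLoad and $UDPServerRun from being expanded.
    cat > "$dir/haproxy.conf" <<'EOF'
$ModLoad imudp
$UDPServerRun 514
local2.* /var/log/haproxy/haproxy.log
EOF
}

# Intended usage on each controller (as root):
#   write_haproxy_rsyslog_conf
#   mkdir -p /var/log/haproxy && systemctl restart rsyslog
```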

Start haproxy to verify the configuration:

# systemctl start haproxy
[root@controller1 ~]# netstat -ntplu | grep ha
tcp 0 0 192.168.0.10:3306 0.0.0.0:* LISTEN 15467/haproxy
tcp 0 0 192.168.0.10:11211 0.0.0.0:* LISTEN 15467/haproxy
udp 0 0 0.0.0.0:43268 0.0.0.0:* 15466/haproxy

Verification succeeded. Stop haproxy:

# systemctl stop haproxy

Run on the controller1 node:

[root@controller1 ~]# pcs cluster auth controller1 controller2 controller3 -u hacluster -p hacluster --force

controller3: Authorized

controller2: Authorized

controller1: Authorized

Create the cluster:

[root@controller1 ~]# pcs cluster setup --name openstack-cluster controller1 controller2 controller3 --force
Destroying cluster on nodes: controller1, controller2, controller3...
controller3: Stopping Cluster (pacemaker)...
controller2: Stopping Cluster (pacemaker)...
controller1: Stopping Cluster (pacemaker)...
controller3: Successfully destroyed cluster
controller1: Successfully destroyed cluster
controller2: Successfully destroyed cluster
Sending 'pacemaker_remote authkey' to 'controller1', 'controller2', 'controller3'
controller3: successful distribution of the file 'pacemaker_remote authkey'
controller1: successful distribution of the file 'pacemaker_remote authkey'
controller2: successful distribution of the file 'pacemaker_remote authkey'
Sending cluster config files to the nodes...
controller1: Succeeded
controller2: Succeeded
controller3: Succeeded
Synchronizing pcsd certificates on nodes controller1, controller2, controller3...
controller3: Success
controller2: Success
controller1: Success
Restarting pcsd on the nodes in order to reload the certificates...
controller3: Success
controller2: Success
controller1: Success

Start all nodes of the cluster and enable them at boot:

[root@controller1 ~]# pcs cluster start --all
controller2: Starting Cluster...
controller1: Starting Cluster...
controller3: Starting Cluster...
[root@controller1 ~]# pcs cluster enable --all
controller1: Cluster Enabled
controller2: Cluster Enabled
controller3: Cluster Enabled

View the cluster status:

[root@controller1 ~]# pcs status
Cluster name: openstack-cluster
WARNING: no stonith devices and stonith-enabled is not false
Stack: corosync
Current DC: controller3 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Thu Nov 30 19:30:43 2017
Last change: Thu Nov 30 19:30:17 2017 by hacluster via crmd on controller3
3 nodes configured
0 resources configured
Online: [ controller1 controller2 controller3 ]
No resources
Daemon Status:
    corosync: active/enabled
    pacemaker: active/enabled
    pcsd: active/enabled
[root@controller1 ~]# pcs cluster status
Cluster Status:
    Stack: corosync
    Current DC: controller3 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
    Last updated: Thu Nov 30 19:30:52 2017
    Last change: Thu Nov 30 19:30:17 2017 by hacluster via crmd on controller3
    3 nodes configured
    0 resources configured
PCSD Status:
    controller2: Online
    controller3: Online
    controller1: Online

All three nodes are online.

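The default quorum rule recommends an odd number of cluster nodes, and no fewer than three. In a two-node cluster, if one node fails, the default quorum rule is no longer satisfied, so cluster resources do not fail over and the cluster as a whole remains unavailable. Setting no-quorum-policy="ignore" works around this two-node problem, but do not use it in production. In other words, a production environment still needs at least three nodes.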
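The arithmetic behind this rule can be checked with a short sketch. It assumes one vote per node and no special corosync options, so a partition needs floor(n / 2) + 1 votes to have quorum. The function names are hypothetical.

```shell
#!/bin/sh
# Votes needed for quorum when each node has one vote: floor(n / 2) + 1.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# Succeeds (exit 0) if the cluster keeps quorum after losing `failed` nodes.
has_quorum_after_failure() {
    total="$1"; failed="$2"
    [ $(( total - failed )) -ge "$(quorum_needed "$total")" ]
}

for n in 2 3 5; do
    if has_quorum_after_failure "$n" 1; then
        state="keeps quorum"
    else
        state="loses quorum"
    fi
    echo "$n nodes: quorum=$(quorum_needed "$n"), one node down -> $state"
done
```

With two nodes the quorum is 2, so a single failure leaves one vote and quorum is lost. With three nodes the quorum is also 2, so the two survivors can keep running resources.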

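pe-warn-series-max, pe-input-series-max, and pe-error-series-max set the log depth, that is, how many policy engine warning, input, and error files are kept.

cluster-recheck-interval is how often the cluster re-checks its state.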

[root@controller1 ~]# pcs property set pe-warn-series-max=1000 pe-input-series-max=1000 pe-error-series-max=1000 cluster-recheck-interval=5min

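Disable stonith:

stonith relies on a physical device that can power off a node on command. This environment has no such device, and unless the option is disabled, every pcs command output includes an error message about it.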

[root@controller1 ~]# pcs property set stonith-enabled=false

When there are only two nodes, ignore loss of quorum:

[root@controller1 ~]# pcs property set no-quorum-policy=ignore

Verify the cluster configuration:

[root@controller1 ~]# crm_verify -L -V

Configure a virtual IP for the cluster:

[root@controller1 ~]# pcs resource create ClusterIP ocf:heartbeat:IPaddr2 ip="192.168.0.10" cidr_netmask=32 nic=eno16777736 op monitor interval=30s

At this point Pacemaker+corosync exist to serve haproxy, so add haproxy as a resource to the Pacemaker cluster:

[root@controller1 ~]# pcs resource create lb-haproxy systemd:haproxy --clone

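Note: this creates a clone resource, and a clone resource is started on every node. Here haproxy starts automatically on all three nodes.

View the Pacemaker resources:

[root@controller1 ~]# pcs resource
ClusterIP (ocf::heartbeat:IPaddr2): Started controller1    # the VIP resource and the node it is currently running on
Clone Set: lb-haproxy-clone [lb-haproxy]    # the haproxy clone resource
    Started: [ controller1 controller2 controller3 ]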

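Note: the two resources must be bound together here. Otherwise haproxy runs on every node and access becomes inconsistent.

Bind the two resources to the same node: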

[root@controller1 ~]# pcs constraint colocation add lb-haproxy-clone ClusterIP INFINITY

Binding succeeded:

[root@controller1 ~]# pcs resource
ClusterIP (ocf::heartbeat:IPaddr2): Started controller3
Clone Set: lb-haproxy-clone [lb-haproxy]
    Started: [ controller1 ]
    Stopped: [ controller2 controller3 ]

Configure the start order of the resources: start the VIP first and haproxy second, because haproxy listens on the VIP:

[root@controller1 ~]# pcs constraint order ClusterIP then lb-haproxy-clone

Manually assign the resources to a preferred default node. Because the two resources are bound together, moving one of them moves the other automatically.

[root@controller1 ~]# pcs constraint location ClusterIP prefers controller1
[root@controller1 ~]# pcs resource
ClusterIP (ocf::heartbeat:IPaddr2): Started controller1
Clone Set: lb-haproxy-clone [lb-haproxy]
    Started: [ controller1 ]
    Stopped: [ controller2 controller3 ]
[root@controller1 ~]# pcs resource defaults resource-stickiness=100    # set resource stickiness so that automatic failback does not destabilize the cluster

The VIP is now bound to the controller1 node:

[root@controller1 ~]# ip a | grep global
inet 192.168.0.11/24 brd 192.168.0.255 scope global eno16777736
inet 192.168.0.10/32 brd 192.168.0.255 scope global eno16777736
inet 192.168.118.11/24 brd 192.168.118.255 scope global eno33554992
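To find out which controller currently holds the VIP without reading the `ip a` output by eye, the address match can be scripted. This is a minimal sketch; `holds_vip` is a hypothetical helper that reads `ip` output on stdin, which also makes it easy to try against saved output.

```shell
#!/bin/sh
# Succeeds if the `ip a` style text on stdin lists the given address.
# Matching "inet <vip>/" avoids false hits such as 192.168.0.100.
holds_vip() {
    vip="$1"
    # Escape dots so they match literally rather than any character.
    pattern=$(printf '%s' "$vip" | sed 's/\./\\./g')
    grep -Eq "inet ${pattern}/"
}

# Intended usage on any controller:
#   ip -4 addr show | holds_vip 192.168.0.10 && echo "VIP is on $(hostname)"
```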

Try connecting to the database through the VIP:

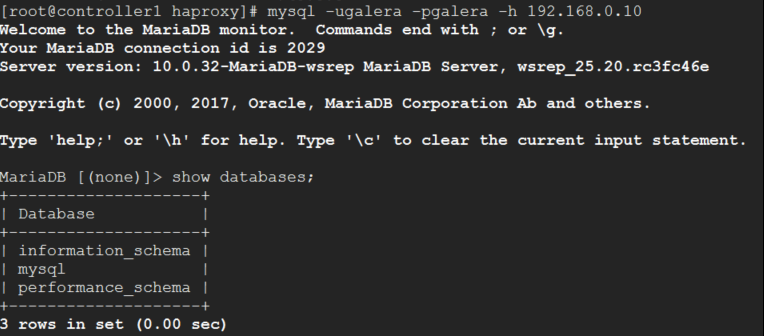

Controller1:

[root@controller1 haproxy]# mysql -ugalera -pgalera -h 192.168.0.10

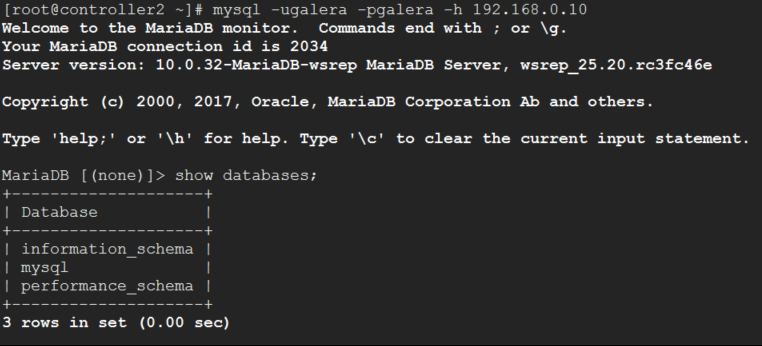

Controller2:

High availability is configured successfully.

Test that failover works

On the controller1 node, run poweroff -f directly:

[root@controller1 ~]# poweroff -f

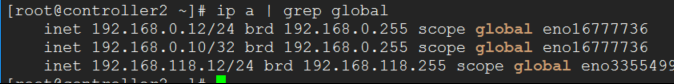

The VIP quickly moves to the controller2 node.

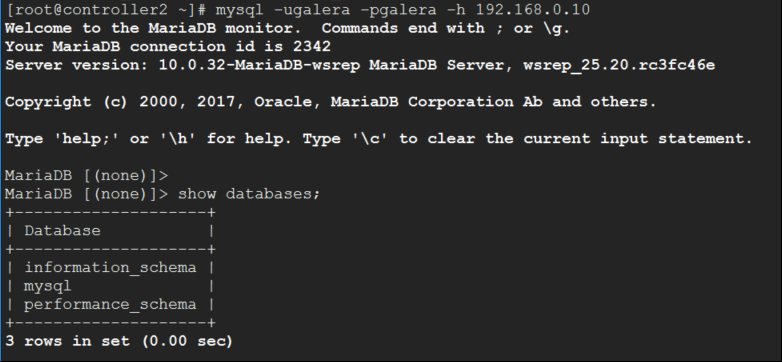

Try accessing the database again:

It works without any problem, so the test succeeded.

View the cluster status:

[root@controller2 ~]# pcs status
Cluster name: openstack-cluster
Stack: corosync
Current DC: controller3 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Thu Nov 30 23:57:28 2017
Last change: Thu Nov 30 23:54:11 2017 by root via crm_attribute on controller1
3 nodes configured
4 resources configured
Online: [ controller2 controller3 ]
OFFLINE: [ controller1 ]    # controller1 is now offline
Full list of resources:
ClusterIP (ocf::heartbeat:IPaddr2): Started controller2
Clone Set: lb-haproxy-clone [lb-haproxy]
    Started: [ controller2 ]
    Stopped: [ controller1 controller3 ]
Daemon Status:
    corosync: active/enabled
    pacemaker: active/enabled
    pcsd: active/enabled