1 Environment planning

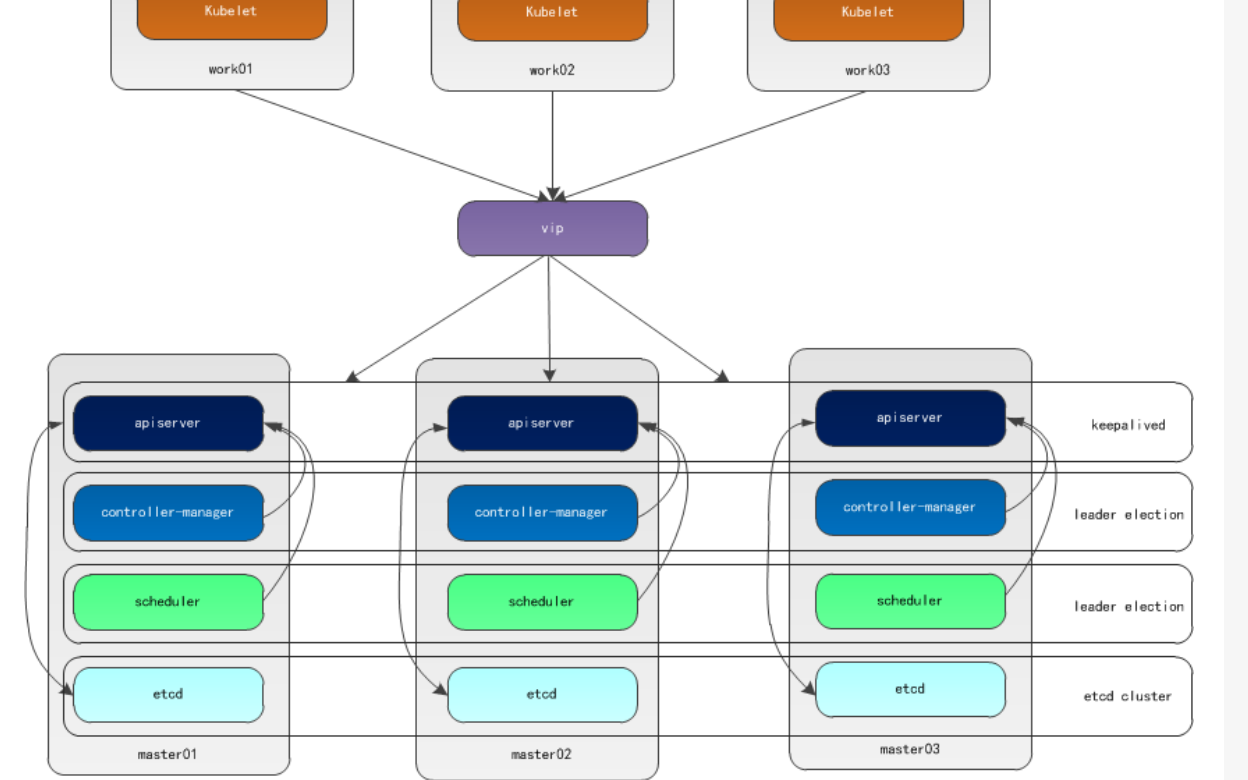

大致拓扑:

我这里是etcd和master都在同一台机器上面

2 System initialization

See https://www.cnblogs.com/huningfei/p/12697310.html

3 Install Kubernetes and Docker

See https://www.cnblogs.com/huningfei/p/12697310.html

4 Install keepalived

Install it on all three master nodes:

```bash
yum -y install keepalived
```

Configuration files

master1 (`keepalived.conf` on k8s-master01):

```
! Configuration File for keepalived
global_defs {
    router_id master01
}

vrrp_instance VI_1 {
    state MASTER                # primary
    interface ens33             # NIC name
    virtual_router_id 50
    priority 100                # priority (highest holds the VIP)
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.222           # VIP
    }
}
```

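master2 (same as master1 apart from `router_id`, `state`, `interface` and `priority`):

```
! Configuration File for keepalived
global_defs {
    router_id master02          # unique per node
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens32             # NIC name on this host
    virtual_router_id 50        # must match master1
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.222
    }
}
```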

master3:

```
! Configuration File for keepalived
global_defs {
    router_id master03          # unique per node
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens32             # NIC name on this host
    virtual_router_id 50        # must match master1
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.222
    }
}
```

Start keepalived and enable it at boot:

```bash
systemctl start keepalived
systemctl enable keepalived
```
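Once keepalived is running, master1 (priority 100) should hold the VIP. A quick way to check any node is to look for the VIP in its `ip addr` output. The helper below is a minimal sketch that is not part of the original setup: the function name `holds_vip` is hypothetical, and it assumes the VIP 192.168.1.222 from the configs above.

```bash
#!/usr/bin/env bash
# holds_vip (hypothetical helper): reads `ip -4 addr` output on stdin and
# exits 0 if the keepalived VIP is among the listed addresses.
VIP="${VIP:-192.168.1.222}"   # VIP from the keepalived configs above

holds_vip() {
  # keepalived normally adds the VIP as a /32 ("inet 192.168.1.222/32 ...");
  # matching "inet <VIP>/" keeps e.g. 192.168.1.2220 from counting as a hit.
  grep -qF "inet ${VIP}/"
}
```

For example, on master1: `ip -4 addr show dev ens33 | holds_vip && echo "this node holds the VIP"` (use ens32 on master2 and master3).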

5 Initialize the first master

Run this on one master only (any of the three):

```bash
kubeadm init --config=kubeadm-config.yaml
```

The init configuration file is:

```yaml
# kubeadm-config.yaml (in load-k8s/ on k8s-master01)
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.1
apiServer:
  certSANs:    # hostname, IP and VIP of every kube-apiserver node (listing only the VIP apparently also works)
  - k8s-master01
  - k8s-node1
  - k8s-node2
  - 192.168.1.210
  - 192.168.1.200
  - 192.168.1.211
  - 192.168.1.222
controlPlaneEndpoint: "192.168.1.222:6443"   # VIP
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
```

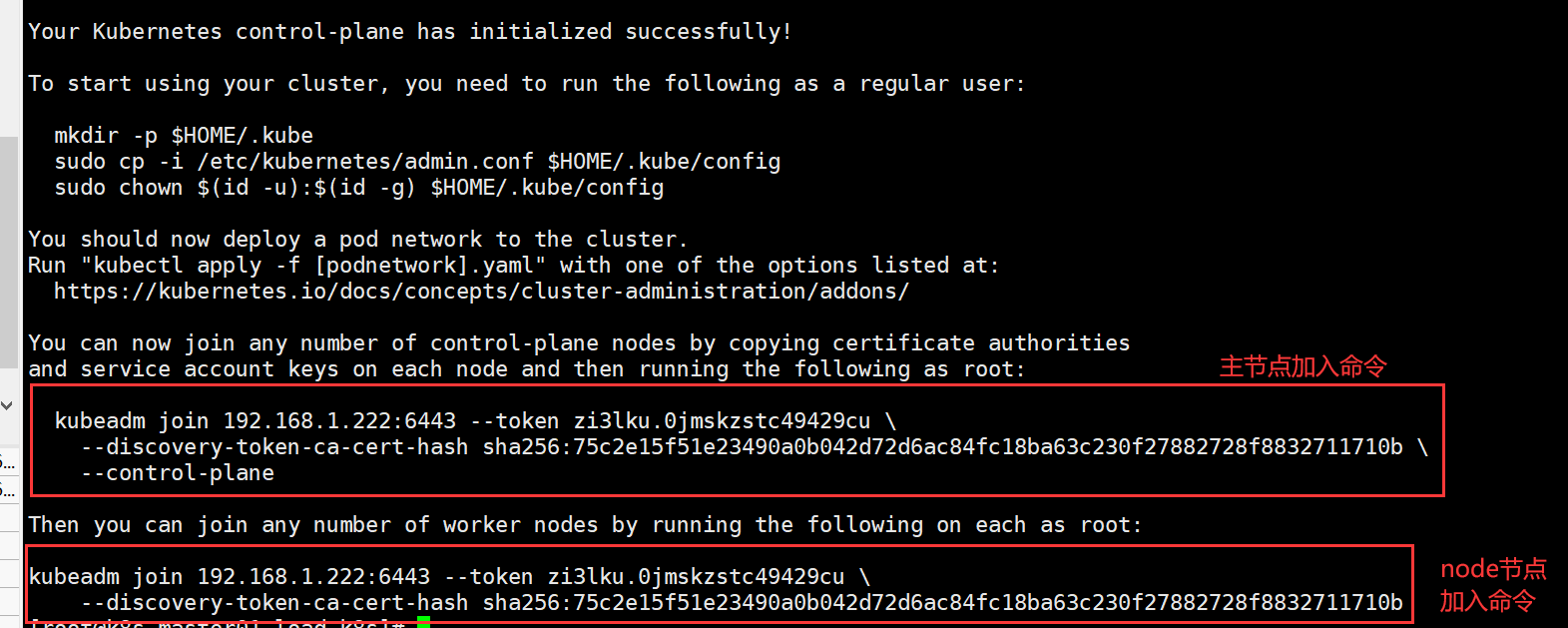

出现图中信息代表初始化成功:

然后按照提示运行命令:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
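Because `controlPlaneEndpoint` is set to the VIP, the generated admin.conf is expected to point kubectl at 192.168.1.222:6443 rather than at master01's own address, which is what lets kubectl keep working after a failover. The sketch below checks that; `api_server_url` and `uses_vip` are hypothetical helper names, and `kubectl config view --minify` only reports the cluster of the current context.

```bash
# api_server_url (hypothetical helper): prints the API server URL that the
# current kubeconfig context points to.
api_server_url() {
  kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}'
}

# uses_vip (hypothetical helper): exits 0 if that URL is the VIP endpoint,
# i.e. controlPlaneEndpoint from kubeadm-config.yaml (the default below).
uses_vip() {
  [ "$(api_server_url)" = "https://${1:-192.168.1.222:6443}" ]
}
```

Usage: `uses_vip && echo "kubectl goes through the VIP"`.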

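6 Install the flannel network plugin

```bash
kubectl apply -f kube-flannel.yml
```

The podSubnet 10.244.0.0/16 in kubeadm-config.yaml matches flannel's default pod network, so the manifest's network setting needs no change.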
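Nodes stay NotReady until the network plugin is running, so it helps to wait for the flannel pods before moving on. This is a minimal sketch with a hypothetical helper name; it assumes the flannel pods run in `kube-system` with the label `app=flannel`, as in the kube-flannel.yml manifests of the Kubernetes 1.15 era (newer manifests use a separate `kube-flannel` namespace, so adjust `FLANNEL_NS` and the label to match your file).

```bash
# flannel_ready (hypothetical helper): exits 0 if at least one flannel pod
# exists and every one of them reports STATUS "Running".
# Assumption: pods live in $FLANNEL_NS and carry the label app=flannel.
FLANNEL_NS="${FLANNEL_NS:-kube-system}"

flannel_ready() {
  # `get pods` columns: NAME READY STATUS RESTARTS AGE, so STATUS is $3.
  kubectl -n "$FLANNEL_NS" get pods -l app=flannel --no-headers 2>/dev/null |
    awk 'NF { n++; if ($3 != "Running") bad++ } END { exit (n == 0 || bad > 0) }'
}
```

Usage: `until flannel_ready; do sleep 5; done`.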

7 Copy the certificates (key step)

Copy them from master01 to the other two masters. I use a script for this (cert-master.sh on k8s-master01):

```bash
#!/usr/bin/env bash
# cert-master.sh: copy the shared CA and service-account keys to the other masters
USER=root # customizable
CONTROL_PLANE_IPS="192.168.1.200 192.168.1.211"

for host in ${CONTROL_PLANE_IPS}; do
    scp /etc/kubernetes/pki/ca.crt "${USER}"@"$host":
    scp /etc/kubernetes/pki/ca.key "${USER}"@"$host":
    scp /etc/kubernetes/pki/sa.key "${USER}"@"$host":
    scp /etc/kubernetes/pki/sa.pub "${USER}"@"$host":
    scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@"$host":
    scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@"$host":
    scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@"$host":etcd-ca.crt
    # Comment out this line if you are using external etcd
    scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@"$host":etcd-ca.key
done
```

Then, on each of the other two masters, move the certificates into /etc/kubernetes/pki. Again I use a script (mv-cert.sh, here on k8s-node1):

```bash
#!/usr/bin/env bash
# mv-cert.sh: run on each additional master after cert-master.sh
USER=root # customizable; the files were copied into this user's home (/root)

mkdir -p /etc/kubernetes/pki/etcd
mv /${USER}/ca.crt /etc/kubernetes/pki/
mv /${USER}/ca.key /etc/kubernetes/pki/
mv /${USER}/sa.pub /etc/kubernetes/pki/
mv /${USER}/sa.key /etc/kubernetes/pki/
mv /${USER}/front-proxy-ca.crt /etc/kubernetes/pki/
mv /${USER}/front-proxy-ca.key /etc/kubernetes/pki/
mv /${USER}/etcd-ca.crt /etc/kubernetes/pki/etcd/ca.crt
# Comment out this line if you are using external etcd
mv /${USER}/etcd-ca.key /etc/kubernetes/pki/etcd/ca.key
```
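Before running the control-plane join on these masters, it can be worth confirming that every shared file actually landed in place, since the join expects them to be present. The sketch below uses a hypothetical helper name; its file list simply mirrors cert-master.sh.

```bash
# check_pki (hypothetical helper): prints every shared certificate or key that
# is missing under the given pki directory and exits 1 if any is missing.
check_pki() {
  local pki="${1:-/etc/kubernetes/pki}" missing=0 f
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key \
           etcd/ca.crt etcd/ca.key; do   # drop etcd/ca.key when using external etcd
    if [ ! -f "$pki/$f" ]; then
      echo "missing: $pki/$f"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_pki && echo "all shared certificates are in place"`.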

8 Join the remaining two masters to the cluster

```bash
kubeadm join 192.168.1.222:6443 --token zi3lku.0jmskzstc49429cu \
    --discovery-token-ca-cert-hash sha256:75c2e15f51e23490a0b042d72d6ac84fc18ba63c230f27882728f8832711710b \
    --control-plane
```

Note that the IP here is the virtual IP managed by keepalived.
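The bootstrap token from `kubeadm init` expires after 24 hours by default, so joining later needs a fresh one: `kubeadm token create --print-join-command`, run on an existing master, prints a complete worker join command (append `--control-plane` for a master). The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA with the openssl pipeline from the kubeadm documentation, wrapped here in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is what kubeadm generates by default.

```bash
# ca_cert_hash (hypothetical helper): prints the value that follows "sha256:"
# in --discovery-token-ca-cert-hash, i.e. the SHA-256 of the CA certificate's
# DER-encoded public key.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'   # keep only the hex digest after "(stdin)= "
}
```

Usage on master01: `echo "sha256:$(ca_cert_hash)"`.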

Output like the following means the join succeeded:

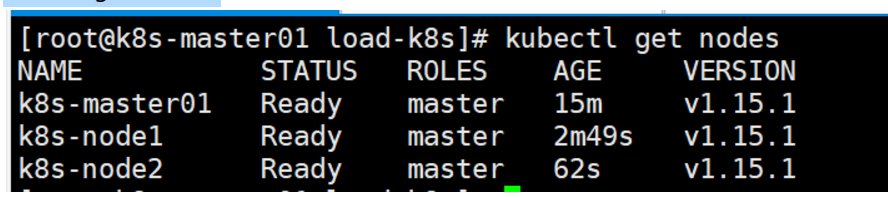

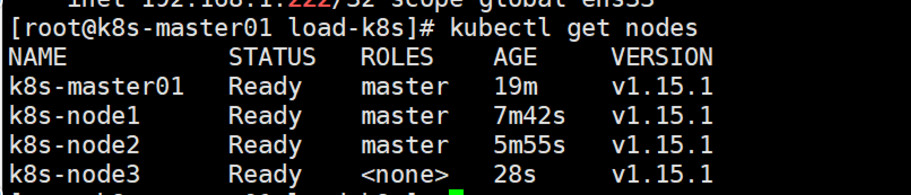

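Once the joins succeed, check on each of the three masters that all nodes are healthy:

```bash
kubectl get nodes
```

Note: to save effort I did not change the hostnames to master-style names, but all three machines are master nodes.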
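With all three masters joined, `kubectl get nodes` should list three Ready nodes with the `master` role (the role kubeadm 1.15 shows for control-plane nodes), whatever their hostnames. The hypothetical `ready_masters` helper below counts them so the check can be scripted; it assumes the default column layout NAME STATUS ROLES AGE VERSION.

```bash
# ready_masters (hypothetical helper): counts nodes whose STATUS is exactly
# "Ready" and whose ROLES column contains "master".
ready_masters() {
  kubectl get nodes --no-headers |
    awk '$2 == "Ready" && $3 ~ /master/ { n++ } END { print n + 0 }'
}
```

Usage: `[ "$(ready_masters)" -eq 3 ] && echo "all three masters are Ready"`.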

9 Join the worker node to the cluster

```bash
kubeadm join 192.168.1.222:6443 --token zi3lku.0jmskzstc49429cu \
    --discovery-token-ca-cert-hash sha256:75c2e15f51e23490a0b042d72d6ac84fc18ba63c230f27882728f8832711710b
```

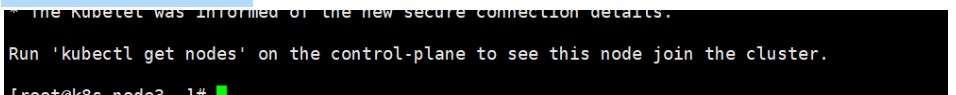

出现如下信息代表成功

查看节点状态,node3是我的node节点,其余都是主节点

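10 High-availability test

1 Shut down master01: the VIP moved to master02 and everything kept working.

2 Then shut down master02 as well: the VIP moved to master03 and existing pods kept running, but kubectl commands no longer worked.

Conclusion: the cluster keeps working when any one of the three masters fails, but its control plane becomes unusable when two are down.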
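Why the second shutdown breaks kubectl follows from etcd's majority quorum. In the stacked topology each master runs one etcd member, so n = 3; the quorum q is a strict majority, and f is the number of members that can fail while etcd keeps serving:

```latex
q(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1, \qquad
f(n) = n - q(n) = \left\lfloor \frac{n-1}{2} \right\rfloor

n = 3:\quad q(3) = 1 + 1 = 2, \qquad f(3) = 3 - 2 = 1
```

With master01 off, two of three members remain and etcd keeps its quorum. Once master02 is off too, only one member is left, etcd stops serving requests, and the API server, and with it kubectl, stops working. Containers already running on the nodes do not depend on the API server, which matches the test results.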