I. Introduction

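Supervisor is the worker node in JStorm, similar to the TaskTracker (TT) in MapReduce. It subscribes to the task assignment results stored in ZooKeeper and starts or stops Worker processes accordingly. The Supervisor also periodically writes its active port information to ZooKeeper so that Nimbus can monitor it. The Supervisor does no actual processing itself; all computation is done by Workers. Architecturally, the Supervisor sits in the middle of JStorm's three-tier management architecture, helping with task scheduling and resource management.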

II. Architecture

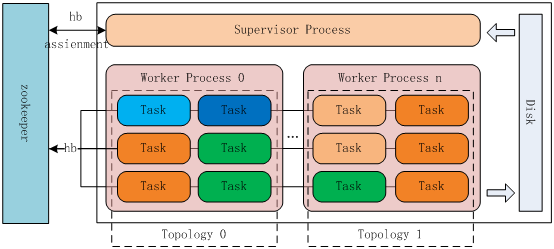

1. Supervisor

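The figure above shows the architecture of a single Supervisor node. At startup the Supervisor process is launched, and it starts or stops Worker JVM processes based on the tasks that Nimbus assigns. Each Worker process runs one or more Task threads, and all Tasks in one Worker must belong to the same Topology. A Supervisor node therefore runs several JVM processes: one Supervisor process and one or more Worker processes.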

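Each role maintains its state in a different way. A Task writes its heartbeat, containing a timestamp and the Task's statistics, directly to ZooKeeper. A Worker periodically writes its Topology id, port, set of Task ids and the current time to local disk. The Supervisor periodically writes a timestamp and its node resources (the set of ports) to ZooKeeper. It also reads the task assignment results from ZooKeeper and starts or stops Worker processes based on them.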

2. Worker

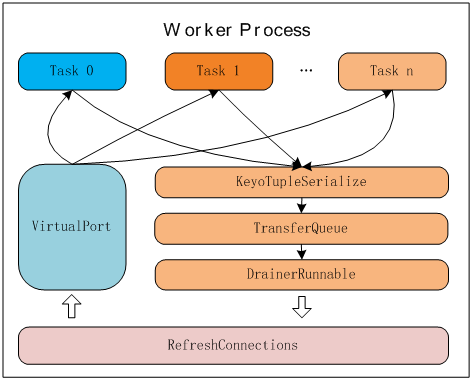

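Inside a Worker JVM process there are mutually independent Task threads. Besides these, the Task threads share process-wide resources such as data sending/receiving and inter-node connection management, as shown in the figure. These are: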

VirtualPort: data receiving thread;

KryoTupleSerialize: Tuple serialization;

TransferQueue: data sending queue;

DrainerRunnable: data sending thread;

RefreshConnections: thread that manages connections between nodes.
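TransferQueue and DrainerRunnable together form a producer-consumer pair. Task threads put outgoing Tuples on a shared queue, and a single sending thread drains that queue. The following is a minimal Java sketch of the pattern under stated assumptions. `TransferQueueSketch` and `OutgoingTuple` are hypothetical names, and the `Consumer` passed in stands in for the real network send. It is not JStorm's implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Hypothetical message: target task id plus already-serialized tuple bytes.
record OutgoingTuple(int targetTaskId, byte[] payload) {}

// Sketch of a worker-wide transfer queue shared by all task threads.
class TransferQueueSketch {
    private final BlockingQueue<OutgoingTuple> queue = new LinkedBlockingQueue<>();

    // Called by task threads: enqueue and return immediately.
    void transfer(int targetTaskId, byte[] payload) {
        queue.add(new OutgoingTuple(targetTaskId, payload));
    }

    // One non-blocking drain: send everything currently queued as one batch.
    // Returns the number of tuples handed to the sender.
    int drainOnce(Consumer<List<OutgoingTuple>> sender) {
        List<OutgoingTuple> batch = new ArrayList<>();
        queue.drainTo(batch);
        if (!batch.isEmpty()) {
            sender.accept(batch);
        }
        return batch.size();
    }

    // Drainer loop: block until at least one tuple is queued, then send the batch.
    Thread startDrainer(Consumer<List<OutgoingTuple>> sender) {
        Thread t = new Thread(() -> {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    List<OutgoingTuple> batch = new ArrayList<>();
                    batch.add(queue.take()); // blocks while the queue is empty
                    queue.drainTo(batch);    // grab whatever else is pending
                    sender.accept(batch);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // exit on shutdown
            }
        }, "drainer");
        t.setDaemon(true);
        t.start();
        return t;
    }
}
```

Batching with `drainTo` lets the single sending thread amortize per-send overhead, while the task threads never block on the network.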

III. Implementation and Code Analysis

1. Supervisor

In jstorm-0.7.1, the Supervisor daemon is implemented in the com.alipay.dw.jstorm.daemon.supervisor package under jstorm-server/src/main/java. Supervisor.java is the entry point of the Supervisor daemon. The Supervisor process mainly does the following.

Initialization

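1. Clean up the local temporary data in $jstorm-local-dir/supervisor/tmp;

2. Create the ZooKeeper operation instance;

3. Create the local state file $jstorm-local-dir/supervisor/localstate;

4. Generate a supervisor-id and write it to localstate under key="supervisor-id". If the Supervisor is restarted, it first checks whether a supervisor-id already exists and, if so, simply reads it;

5. Initialize and start the Heartbeat thread;

6. Initialize and start the SyncProcessEvent thread;

7. Initialize and start the SyncSupervisorEvent thread;

8. Register a hook in SupervisorManger that cleans up data when the main process exits.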

```java
@SuppressWarnings("rawtypes")
public SupervisorManger mkSupervisor(Map conf, MQContext sharedContext)
        throws Exception {

    LOG.info("Starting Supervisor with conf " + conf);

    active = new AtomicBoolean(true);

    /*
     * Step 1: clean up all files in /storm-local-dir/supervisor/tmp
     */
    String path = StormConfig.supervisorTmpDir(conf);
    FileUtils.cleanDirectory(new File(path));

    /*
     * Step 2: create the ZK operation instance (StormClusterState)
     */
    StormClusterState stormClusterState = Cluster
            .mk_storm_cluster_state(conf);

    /*
     * Step 3: create LocalState, a simple local KV store
     * Step 4: get supervisorId from it; if there is none, create one
     */
    LocalState localState = StormConfig.supervisorState(conf);

    String supervisorId = (String) localState.get(Common.LS_ID);
    if (supervisorId == null) {
        supervisorId = UUID.randomUUID().toString();
        localState.put(Common.LS_ID, supervisorId);
    }

    Vector threads = new Vector();

    // Step 5: create the Heartbeat thread
    // every supervisor.heartbeat.frequency.secs, write SupervisorInfo to ZK
    String myHostName = NetWorkUtils.hostname();
    int startTimeStamp = TimeUtils.current_time_secs();

    Heartbeat hb = new Heartbeat(conf, stormClusterState, supervisorId,
            myHostName, startTimeStamp, active);
    hb.update();

    AsyncLoopThread heartbeat = new AsyncLoopThread(hb, false, null,
            Thread.MIN_PRIORITY, true);
    threads.add(heartbeat);

    // Step 6: create SyncProcessEvent. It reads key=local-assignments from
    // $jstorm-local-dir/supervisor/localstate and kills/starts workers accordingly
    EventManager processEventManager = new EventManagerImp(false);
    ConcurrentHashMap workerThreadPids = new ConcurrentHashMap();

    SyncProcessEvent syncProcessEvent = new SyncProcessEvent(supervisorId,
            conf, localState, workerThreadPids, sharedContext);

    // Step 7: create and start the sync Supervisor thread; every
    // supervisor.monitor.frequency.secs it runs SyncSupervisorEvent, which compares
    // the full data under $zkroot/assignments/{topologyid} with the local
    // STORM-LOCAL-DIR/supervisor/stormdist/{topologyid} and:
    //   1. downloads from Nimbus the jar and config of topologies with tasks assigned to this node
    //   2. deletes the local jar and config of topologies that are no longer valid
    EventManager syncSupEventManager = new EventManagerImp(false);

    SyncSupervisorEvent syncSupervisorEvent = new SyncSupervisorEvent(
            supervisorId, conf, processEventManager, syncSupEventManager,
            stormClusterState, localState, syncProcessEvent);

    int syncFrequence = (Integer) conf
            .get(Config.SUPERVISOR_MONITOR_FREQUENCY_SECS);

    EventManagerPusher syncSupervisorPusher = new EventManagerPusher(
            syncSupEventManager, syncSupervisorEvent, active, syncFrequence);

    AsyncLoopThread syncSupervisorThread = new AsyncLoopThread(
            syncSupervisorPusher);
    threads.add(syncSupervisorThread);

    LOG.info("Starting supervisor with id " + supervisorId + " at host " + myHostName);

    // Step 8: SupervisorManger can shut down the supervisor and all workers
    return new SupervisorManger(conf, supervisorId, active, threads,
            syncSupEventManager, processEventManager, stormClusterState,
            workerThreadPids);
}
```
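Steps 3 and 4 amount to a get-or-create of a persistent id. Here is a minimal sketch, assuming a plain `java.util.Properties` file in place of JStorm's LocalState. The class `SupervisorIdStore` is hypothetical. The id survives restarts because it is read back from disk before a new UUID is generated.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;
import java.util.UUID;

// Hypothetical stand-in for LocalState: a Properties file on local disk.
class SupervisorIdStore {
    static final String KEY = "supervisor-id";
    private final Path file;

    SupervisorIdStore(Path file) {
        this.file = file;
    }

    // Return the persisted id, generating and saving a new one on first start.
    String getOrCreateId() throws IOException {
        Properties props = new Properties();
        if (Files.exists(file)) {
            try (InputStream in = Files.newInputStream(file)) {
                props.load(in);
            }
        }
        String id = props.getProperty(KEY);
        if (id == null) {
            id = UUID.randomUUID().toString();
            props.setProperty(KEY, id);
            Files.createDirectories(file.toAbsolutePath().getParent());
            try (OutputStream out = Files.newOutputStream(file)) {
                props.store(out, "supervisor local state (sketch)");
            }
        }
        return id;
    }
}
```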

Heartbeat thread

1. By default, every 60 s the thread reports the Supervisor's information to ZooKeeper. The report is packaged as a SupervisorInfo that includes the hostname, worker ports, current time and uptime;

```java
@SuppressWarnings("unchecked")
public void update() {
    SupervisorInfo sInfo = new SupervisorInfo(
            TimeUtils.current_time_secs(), myHostName,
            (List) conf.get(Config.SUPERVISOR_SLOTS_PORTS),
            (int) (TimeUtils.current_time_secs() - startTime));

    try {
        stormClusterState.supervisor_heartbeat(supervisorId, sInfo);
    } catch (Exception e) {
        LOG.error("Failed to update SupervisorInfo to ZK", e);
    }
}
```
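Heartbeat.update() only builds and writes one SupervisorInfo. The periodic behavior comes from the AsyncLoopThread that wraps it. A rough equivalent can be sketched with `ScheduledExecutorService`. `HeartbeatSketch` and `SupervisorInfoSketch` are hypothetical. The clock is injectable so the uptime arithmetic can be checked, and the ZooKeeper write is replaced by a `Consumer`.

```java
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.LongSupplier;

// Hypothetical mirror of the fields Heartbeat.update() packs into SupervisorInfo.
record SupervisorInfoSketch(long timeSecs, String hostName,
                            List<Integer> workerPorts, long uptimeSecs) {}

class HeartbeatSketch {
    private final String hostName;
    private final List<Integer> workerPorts;
    private final LongSupplier clockSecs;                  // injectable clock (seconds)
    private final Consumer<SupervisorInfoSketch> zkWriter; // stands in for supervisor_heartbeat
    private final long startSecs;

    HeartbeatSketch(String hostName, List<Integer> workerPorts,
                    LongSupplier clockSecs, Consumer<SupervisorInfoSketch> zkWriter) {
        this.hostName = hostName;
        this.workerPorts = workerPorts;
        this.clockSecs = clockSecs;
        this.zkWriter = zkWriter;
        this.startSecs = clockSecs.getAsLong(); // supervisor start time
    }

    // One report: current time, host, configured ports, uptime.
    void update() {
        long now = clockSecs.getAsLong();
        try {
            zkWriter.accept(new SupervisorInfoSketch(now, hostName, workerPorts, now - startSecs));
        } catch (RuntimeException e) {
            // Like the original: log and retry on the next round instead of dying.
            System.err.println("Failed to update SupervisorInfo: " + e);
        }
    }

    // Run update() immediately, then every periodSecs seconds (60 by default per the text).
    ScheduledExecutorService start(long periodSecs) {
        ScheduledExecutorService ses = Executors.newSingleThreadScheduledExecutor();
        ses.scheduleAtFixedRate(this::update, 0, periodSecs, TimeUnit.SECONDS);
        return ses;
    }
}
```

Catching the exception inside update() matters here. With scheduleAtFixedRate, a task that throws is never run again. The original code avoids this by simply logging the failure and trying again in the next round.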

SyncProcessEvent thread

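1. Periodically reads key="local-assignments" from the local file $jstorm-local-dir/supervisor/localstate. This data is written periodically by the SyncSupervisorEvent thread;

2. Reads the Worker state data in the local $jstorm-local-dir/worker/ids/heartbeat;

3. Compares local-assignments with the Worker state data and starts or kills Worker processes accordingly. Because Worker and Supervisor are separate JVM processes, the Supervisor starts a Worker with a shell command: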

```shell
nohup java -server \
    -Djava.library.path="$JAVA_LIBRARY_PATH" \
    -Dlogfile.name="$topologyid-worker-$port.log" \
    -Dlog4j.configuration=jstorm.log4j.properties \
    -Djstorm.home="$JSTORM_HOME" \
    -cp $JAVA_CLASSPATH:$JSTORM_CLASSPATH \
    com.alipay.dw.jstorm.daemon.worker.Worker topologyid supervisorid port workerid
```
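Building the Worker command in Java code, instead of as a shell string, avoids quoting problems. Below is a minimal sketch that uses the same arguments as the command above. `WorkerLauncherSketch` is hypothetical, and all paths are placeholders supplied by the caller. `prepare` only configures the `ProcessBuilder`; calling `start()` on the result would actually spawn the JVM.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

class WorkerLauncherSketch {
    static final String WORKER_MAIN = "com.alipay.dw.jstorm.daemon.worker.Worker";

    // Build the java command line for one worker; argument order follows the shell command.
    static List<String> buildCommand(String javaBin, String jstormHome, String classpath,
                                     String libraryPath, String topologyId,
                                     String supervisorId, int port, String workerId) {
        List<String> cmd = new ArrayList<>();
        cmd.add(javaBin);
        cmd.add("-server");
        cmd.add("-Djava.library.path=" + libraryPath);
        cmd.add("-Dlogfile.name=" + topologyId + "-worker-" + port + ".log");
        cmd.add("-Dlog4j.configuration=jstorm.log4j.properties");
        cmd.add("-Djstorm.home=" + jstormHome);
        cmd.add("-cp");
        cmd.add(classpath);
        cmd.add(WORKER_MAIN);
        cmd.add(topologyId);
        cmd.add(supervisorId);
        cmd.add(String.valueOf(port));
        cmd.add(workerId);
        return cmd;
    }

    // Configure (but do not start) a ProcessBuilder; stdout and stderr are appended to logFile.
    static ProcessBuilder prepare(List<String> cmd, File logFile) {
        return new ProcessBuilder(cmd)
                .redirectErrorStream(true)
                .redirectOutput(ProcessBuilder.Redirect.appendTo(logFile));
    }
}
```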

The SyncProcessEvent thread executes as follows:

```java
@SuppressWarnings("unchecked")
@Override
public void run() {
    LOG.debug("Syncing processes");
    try {
        /**
         * Step 1: get assigned tasks from LocalState
         */
        // Read key "local-assignments" from the local file
        // $jstorm-local-dir/supervisor/localstate
        Map localAssignments = null;
        try {
            localAssignments = (Map) localState
                    .get(Common.LS_LOCAL_ASSIGNMENTS);
        } catch (IOException e) {
            LOG.error("Failed to get LOCAL_ASSIGNMENTS from LocalState", e);
            throw e;
        }
        if (localAssignments == null) {
            localAssignments = new HashMap();
        }
        LOG.debug("Assigned tasks: " + localAssignments);

        /**
         * Step 2: get local WorkerStats from local_dir/worker/ids/heartbeat
         */
        // Derive each worker's state by comparing localAssignments
        // with the workers' heartbeats
        Map localWorkerStats = null;
        try {
            localWorkerStats = getLocalWorkerStats(conf, localState,
                    localAssignments);
        } catch (IOException e) {
            LOG.error("Failed to get Local worker stats", e);
            throw e;
        }
        LOG.debug("Allocated: " + localWorkerStats);

        /**
         * Step 3: kill invalid workers and remove killed workers from
         * localWorkerStats
         */
        // Kill/start workers according to their states
        Set keepPorts = killUselessWorkers(localWorkerStats);

        // start new workers
        startNewWorkers(keepPorts, localAssignments);
    } catch (Exception e) {
        LOG.error("Failed Sync Process", e);
        // throw e
    }
}
```
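killUselessWorkers and startNewWorkers boil down to a diff between the desired state (local-assignments) and the observed state (worker heartbeats). Below is a simplified sketch of that decision. It assumes each assignment is reduced to port -> topologyId and each heartbeat to a topology id plus a timestamp. `SyncProcessSketch`, `WorkerHb`, `SyncPlan` and the `hbTimeoutSecs` parameter are all hypothetical, and JStorm's own worker-state classification is richer than this.

```java
import java.util.Map;
import java.util.Objects;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical view of one running worker, as read from its local heartbeat.
record WorkerHb(String topologyId, long lastHbSecs) {}

// Which ports to kill and which ports to (re)start.
record SyncPlan(Set<Integer> toKill, Set<Integer> toStart) {}

class SyncProcessSketch {
    // assignments: port -> topologyId assigned to this supervisor (local-assignments)
    // running:     port -> heartbeat of the worker currently on that port
    static SyncPlan plan(Map<Integer, String> assignments,
                         Map<Integer, WorkerHb> running,
                         long nowSecs, long hbTimeoutSecs) {
        Set<Integer> keep = new TreeSet<>();
        Set<Integer> toKill = new TreeSet<>();
        for (Map.Entry<Integer, WorkerHb> e : running.entrySet()) {
            int port = e.getKey();
            WorkerHb hb = e.getValue();
            boolean sameTopology = Objects.equals(assignments.get(port), hb.topologyId());
            boolean alive = nowSecs - hb.lastHbSecs() <= hbTimeoutSecs;
            if (sameTopology && alive) {
                keep.add(port);
            } else {
                toKill.add(port); // reassigned, unassigned, or heartbeat timed out
            }
        }
        // Every assigned port without a healthy worker needs a new one,
        // including ports whose worker is being killed above.
        Set<Integer> toStart = new TreeSet<>(assignments.keySet());
        toStart.removeAll(keep);
        return new SyncPlan(toKill, toStart);
    }
}
```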

SyncSupervisorEvent thread

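1. Downloads all task assignment results from $zk-root/assignments/{topologyid} and filters out the tasks assigned to the current Supervisor. It then verifies that each port is assigned tasks from only a single Topology. If the check passes, it writes this task set to the local file $jstorm-local-dir/supervisor/localstate for SyncProcessEvent to read and act on;

2. Compares the assignment results with the Topologies already present locally, downloads newly assigned Topologies from Nimbus, and deletes expired ones.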

The SyncSupervisorEvent thread executes as follows:

```java
@Override
public void run() {
    LOG.debug("Synchronizing supervisor");
    try {
        RunnableCallback syncCallback = new EventManagerZkPusher(this,
                syncSupEventManager);

        /**
         * Step 1: get all assignments
         * and register a watch on /ZK-dir/assignment and on every assignment
         */
        // Get the completed assignments from ZK, (topologyid -> Assignment),
        // stored under $zkroot/assignments/{topologyid}
        Map assignments = Cluster.get_all_assignment(
                stormClusterState, syncCallback);
        LOG.debug("Get all assignments " + assignments);

        /**
         * Step 2: get the list of topologyIds from
         * STORM-LOCAL-DIR/supervisor/stormdist/
         */
        // Topologies already downloaded locally:
        // $jstorm-local-dir/supervisor/stormdist/{topologyid}
        List downloadedTopologyIds = StormConfig
                .get_supervisor_toplogy_list(conf);
        LOG.debug("Downloaded storm ids: " + downloadedTopologyIds);

        /**
         * Step 3: get this node's assignment from the ZK data
         */
        // Filter out the tasks in assignments that are assigned to this supervisor
        Map localAssignment = getLocalAssign(
                stormClusterState, supervisorId, assignments);

        /**
         * Step 4: write the local assignment to LocalState
         */
        // Write the result of step 3 to the local file
        // $jstorm-local-dir/supervisor/localstate
        try {
            LOG.debug("Writing local assignment " + localAssignment);
            localState.put(Common.LS_LOCAL_ASSIGNMENTS, localAssignment);
        } catch (IOException e) {
            LOG.error("put LS_LOCAL_ASSIGNMENTS " + localAssignment
                    + " of localState failed");
            throw e;
        }

        // Step 5: download the code of newly assigned topologies
        Map topologyCodes = getTopologyCodeLocations(assignments);
        downloadTopology(topologyCodes, downloadedTopologyIds);

        /**
         * Step 6: remove any downloaded topology that is no longer assigned
         */
        removeUselessTopology(topologyCodes, downloadedTopologyIds);

        /**
         * Step 7: push the syncProcesses event
         */
        processEventManager.add(syncProcesses);
    } catch (Exception e) {
        LOG.error("Failed to Sync Supervisor", e);
        // throw new RuntimeException(e);
    }
}
```
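Step 3 (getLocalAssign) and the one-Topology-per-port check from the description can be sketched as follows. The data model is an assumption: each topology's assignment is taken to be a map from task id to a (supervisorId, port) slot. `LocalAssignSketch`, `Slot` and `LocalAssignment` are hypothetical names, not JStorm classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical slot a task is assigned to.
record Slot(String supervisorId, int port) {}

// Hypothetical per-port result: the topology running there and its task ids.
record LocalAssignment(String topologyId, Set<Integer> taskIds) {}

class LocalAssignSketch {
    // assignments: topologyId -> (taskId -> slot), i.e. the data under $zkroot/assignments
    static Map<Integer, LocalAssignment> getLocalAssign(
            String supervisorId, Map<String, Map<Integer, Slot>> assignments) {
        Map<Integer, LocalAssignment> result = new HashMap<>();
        for (Map.Entry<String, Map<Integer, Slot>> topo : assignments.entrySet()) {
            String topologyId = topo.getKey();
            for (Map.Entry<Integer, Slot> task : topo.getValue().entrySet()) {
                Slot slot = task.getValue();
                if (!slot.supervisorId().equals(supervisorId)) {
                    continue; // task runs on another node
                }
                LocalAssignment la = result.computeIfAbsent(slot.port(),
                        p -> new LocalAssignment(topologyId, new TreeSet<>()));
                // A port may only host tasks of a single topology.
                if (!la.topologyId().equals(topologyId)) {
                    throw new IllegalStateException("Port " + slot.port()
                            + " assigned to both " + la.topologyId() + " and " + topologyId);
                }
                la.taskIds().add(task.getKey());
            }
        }
        return result;
    }
}
```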

2. Worker

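In jstorm-0.7.1, the Worker daemon is implemented in the com.alipay.dw.jstorm.daemon.worker package under jstorm-server/src/main/java, and Worker.java is its entry point. The lifecycle of a Worker process is:

1. Initialize Tuple serialization and the data sending queue;

2. Create the Tasks assigned to this Worker;

3. Initialize and start the Tuple receiving dispatcher;

4. Initialize and start RefreshConnections, the thread that maintains connections between Workers, including creating, maintaining and destroying inter-node connections;

5. Initialize and start the heartbeat thread WorkerHeartbeatRunable, which updates the local file $jstorm_local_dir/worker/{workerid}/heartbeats/{workerid};

6. Initialize and start DrainerRunable, the Tuple sending thread;

7. Register a hook that cleans up when the main thread exits.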

The Worker daemon initialization flow is as follows:

```java
public WorkerShutdown execute() throws Exception {
    // 1. Tuple serialization + sending queue (LinkedBlockingQueue)
    WorkerTransfer workerTransfer = getSendingTransfer();

    // 2. Create the task threads and keep their shutdown callbacks
    List shutdowntasks = createTasks(workerTransfer);
    workerData.setShutdownTasks(shutdowntasks);

    // 3. WorkerVirtualPort: tuple receiving dispatcher.
    // When the worker receives tuples, it dispatches them to the target
    // task according to task_id
    // (conf, supervisorId, topologyId, port, mqContext, taskids)
    WorkerVirtualPort virtual_port = new WorkerVirtualPort(workerData);
    Shutdownable virtual_port_shutdown = virtual_port.launch();

    // 4. RefreshConnections: maintain inter-node connections
    // (create new connections | maintain established ones | destroy useless ones)
    RefreshConnections refreshConn = makeRefreshConnections();
    AsyncLoopThread refreshconn = new AsyncLoopThread(refreshConn);

    // refresh ZK active status
    RefreshActive refreshZkActive = new RefreshActive(workerData);
    AsyncLoopThread refreshzk = new AsyncLoopThread(refreshZkActive);

    // 5. WorkerHeartbeatRunable: heartbeat thread; each heartbeat updates
    // the local file $LOCAL_PATH/workers/{worker-id}/Heartbeats/{worker-id}
    RunnableCallback heartbeat_fn = new WorkerHeartbeatRunable(workerData);
    AsyncLoopThread hb = new AsyncLoopThread(heartbeat_fn, false, null,
            Thread.NORM_PRIORITY, true);

    // 6. DrainerRunable: tuple sending thread
    // (transferQueue, nodeportSocket, taskNodeport)
    DrainerRunable drainer = new DrainerRunable(workerData);
    AsyncLoopThread dr = new AsyncLoopThread(drainer, false, null,
            Thread.MAX_PRIORITY, true);

    AsyncLoopThread[] threads = { refreshconn, refreshzk, hb, dr };

    // 7. Return WorkerShutdown, which cleans up when the main thread exits
    return new WorkerShutdown(workerData, shutdowntasks,
            virtual_port_shutdown, threads);
}
```
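Step 5 of the lifecycle has the Worker writing its heartbeat locally. That heartbeat carries the fields listed in the architecture section: Topology id, port, Task ids and current time. The sketch below assumes a single-line text format and a write-to-temp-then-rename strategy, so that a Supervisor reading concurrently never sees a half-written file. `WorkerHeartbeatSketch` and its format are hypothetical, not what JStorm actually serializes. The format also assumes topology ids contain no `|` character.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical heartbeat record with the fields the article lists for a worker.
record WorkerHeartbeatSketch(long timeSecs, String topologyId, int port, List<Integer> taskIds) {

    // Serialize as one line: time|topologyId|port|task1,task2,...
    String encode() {
        String tasks = taskIds.stream().map(String::valueOf).collect(Collectors.joining(","));
        return timeSecs + "|" + topologyId + "|" + port + "|" + tasks;
    }

    static WorkerHeartbeatSketch decode(String line) {
        String[] f = line.trim().split("\\|", -1);
        List<Integer> tasks = new ArrayList<>();
        if (!f[3].isEmpty()) {
            for (String s : f[3].split(",")) {
                tasks.add(Integer.parseInt(s));
            }
        }
        return new WorkerHeartbeatSketch(Long.parseLong(f[0]), f[1], Integer.parseInt(f[2]), tasks);
    }

    // Write to a temp file in the same directory, then atomically rename it into place.
    void writeTo(Path file) throws IOException {
        Files.createDirectories(file.toAbsolutePath().getParent());
        Path tmp = file.resolveSibling(file.getFileName() + ".tmp");
        Files.writeString(tmp, encode(), StandardCharsets.UTF_8);
        Files.move(tmp, file, StandardCopyOption.REPLACE_EXISTING, StandardCopyOption.ATOMIC_MOVE);
    }

    static WorkerHeartbeatSketch readFrom(Path file) throws IOException {
        return decode(Files.readString(file, StandardCharsets.UTF_8));
    }
}
```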

3. Task

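Depending on the node's role in the Topology, Tasks are divided into SpoutTasks and BoltTasks. They share some parts, such as Task heartbeats and common data initialization, but otherwise each has its own processing logic. The core implementation is in SpoutExecutors.java and BoltExecutors.java.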

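SpoutExecutors does two main things:

1. As the starting point of the DAG, it emits the original Tuples;

2. If the Topology defines Ackers, SpoutExecutors starts a thread that receives acks and decides, based on them, whether to re-emit Tuples;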

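BoltExecutors is somewhat more complex than SpoutExecutors:

1. It receives Tuples sent from upstream and processes them according to the logic defined in the Topology;

2. If the Bolt has downstream components, it emits the newly generated Tuples downstream;

3. If the Topology defines Ackers, the Bolt sends an ack value, obtained by a simple computation, back to the root Spout.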
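In Storm, the "simple computation" behind acks is an XOR. Every tuple edge gets a random 64-bit id, and the ids of the tuples a spout emits seed the running value. Each bolt acks with the id of its input XOR the ids of the tuples it anchored, and the tree is complete when the running XOR returns to 0. The sketch below assumes JStorm keeps Storm's acker design. `AckerSketch` is a hypothetical class, and timeouts and replay are omitted.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch of Storm-style XOR ack tracking for spout tuple trees.
class AckerSketch {
    // rootId (spout tuple) -> running XOR of edge ids still outstanding
    private final Map<Long, Long> pending = new HashMap<>();

    // The spout emitted a root tuple whose initial edge ids XOR to initVal.
    void init(long rootId, long initVal) {
        pending.merge(rootId, initVal, (a, b) -> a ^ b);
    }

    // A bolt acked its input edge and anchored new edges:
    // ackVal = inputEdgeId ^ (XOR of newly emitted edge ids).
    // Returns true when the whole tree is complete (running XOR back to 0).
    boolean ack(long rootId, long ackVal) {
        long v = pending.getOrDefault(rootId, 0L) ^ ackVal;
        if (v == 0L) {
            pending.remove(rootId);
            return true;
        }
        pending.put(rootId, v);
        return false;
    }

    boolean isPending(long rootId) {
        return pending.containsKey(rootId);
    }
}
```

The memory cost per spout tuple is a single long, regardless of how large the tuple tree grows. A stray zero could only appear by accident if random edge ids collided, which is very unlikely with 64-bit ids.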

IV. Conclusion

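This article described the work that Supervisor, Worker and Task perform in JStorm and their implementation logic, with a source-code walkthrough of the key flows. There are bound to be shortcomings and errors, and feedback and discussion are welcome.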
