最近对计算机中并发(concurrency)和并行(parallesim)这两个词的区别很迷惑,将搜索到的相关内容整理如下。

http://www.vaikan.com/docs/Concurrency-is-not-Parallelism/#slide-7

定义:

并发 Concurrency

将相互独立的执行过程综合到一起的编程技术。

并行 Parallelism

同时执行(通常是相关的)计算任务的编程技术。

并发 vs. 并行

并发是指同时处理很多事情。

而并行是指同时能完成很多事情。

两者不同,但相关。

一个重点是组合,一个重点是执行。

并发提供了一种方式让我们能够设计一种方案将问题(非必须的)并行的解决。

并发是一种将一个程序分解成小片段独立执行的程序设计方法。

通信是指各个独立的执行任务间的合作。

这是Go语言采用的模式,包括Erlang等其它语言都是基于这种SCP模式:

C. A. R. Hoare: Communicating Sequential Processes (CACM 1978)

第二种:

https://laike9m.com/blog/huan-zai-yi-huo-bing-fa-he-bing-xing,61/

“并发”指的是程序的结构,“并行”指的是程序运行时的状态

即使不看详细解释,也请记住这句话。下面来具体说说:

并行(parallesim)

这个概念很好理解。所谓并行,就是同时执行的意思,无需过度解读。判断程序是否处于并行的状态,就看同一时刻是否有超过一个“工作单位”在运行就好了。所以,单线程永远无法达到并行状态。

要达到并行状态,最简单的就是利用多线程和多进程。但是 Python 的多线程由于存在著名的 GIL,无法让两个线程真正“同时运行”,所以实际上是无法到达并行状态的。

并发(concurrency)

要理解“并发”这个概念,必须得清楚,并发指的是程序的“结构”。当我们说这个程序是并发的,实际上,这句话应当表述成“这个程序采用了支持并发的设计”。好,既然并发指的是人为设计的结构,那么怎样的程序结构才叫做支持并发的设计?

正确的并发设计的标准是:使多个操作可以在重叠的时间段内进行(two tasks can start, run, and complete in overlapping time periods)。

这句话的重点有两个。我们先看“(操作)在重叠的时间段内进行”这个概念。它是否就是我们前面说到的并行呢?是,也不是。并行,当然是在重叠的时间段内执行,但是另外一种执行模式,也属于在重叠时间段内进行。这就是协程。

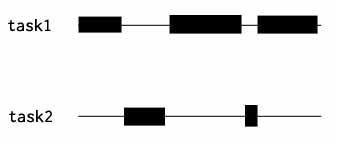

使用协程时,程序的执行看起来往往是这个样子:

task1, task2 是两段不同的代码,比如两个函数,其中黑色块代表某段代码正在执行。注意,这里从始至终,在任何一个时间点上都只有一段代码在执行,但是,由于 task1 和 task2 在重叠的时间段内执行,所以这是一个支持并发的设计。与并行不同,单核单线程能支持并发。

并发和并行的关系

Different concurrent designs enable different ways to parallelize.

这句话来自著名的talk: Concurrency is not parallelism。它足够concise,以至于不需要过多解释。但是仅仅引用别人的话总是不太好,所以我再用之前文字的总结来说明:并发设计让并发执行成为可能,而并行是并发执行的一种模式。

最后,关于Concurrency is not parallelism这个talk再多说点。自从这个talk出来,直接引爆了一堆讨论并发vs并行的文章,并且无一例外提到这个talk,甚至有的文章直接用它的slide里的图片来说明。

Although there’s a tendency to think that parallelism means multiple cores, modern computers are parallel on many different levels. The reason why individual cores have been able to get faster every year, until recently, is that they’ve been using all those extra transistors predicted by Moore’s law in parallel, both at the bit and at the instruction level.

Bit-Level Parallelism

Why is a 32-bit computer faster than an 8-bit one? Parallelism. If an 8-bit computer wants to add two 32-bit numbers, it has to do it as a sequence of 8-bit operations. By contrast, a 32-bit computer can do it in one step, handling each of the 4 bytes within the 32-bit numbers in parallel. That’s why the history of computing has seen us move from 8- to 16-, 32-, and now 64-bit architectures. The total amount of benefit we’ll see from this kind of parallelism has its limits, though, which is why we’re unlikely to see 128-bit computers soon.Instruction-Level Parallelism

Modern CPUs are highly parallel, using techniques like pipelining, out-of-order execution, and speculative execution.

As programmers, we’ve mostly been able to ignore this because, despite the fact that the processor has been doing things in parallel under our feet, it’s carefully maintained the illusion that everything is happening sequentially. This illusion is breaking down, however. Processor designers are no longer able to find ways to increase the speed of an individual core. As we move into a multicore world, we need to start worrying about the fact that instructions aren’t handled sequentially. We’ll talk about this more in Memory Visibility, on page ?.Data Parallelism

Data-parallel (sometimes called SIMD, for “single instruction, multiple data”) architectures are capable of performing the same operations on a large quantity of data in parallel. They’re not suitable for every type of problem, but they can be extremely effective in the right circumstances. One of the applications that’s most amenable to data parallelism is image processing. To increase the brightness of an image, for example, we increase the brightness of each pixel. For this reason, modern GPUs (graphics processing units) have evolved into extremely powerful data-parallel processors.Task-Level Parallelism

Finally, we reach what most people think of as parallelism—multiple processors. From a programmer’s point of view, the most important distinguishing feature of a multiprocessor architecture is the memory model, specifically whether it’s shared or distributed.

最关键的一点是,计算机在不同层次上都使用了并行技术。之前我讨论的实际上仅限于 Task-Level 这一层,在这一层上,并行无疑是并发的一个子集。但是并行并非并发的子集,因为在 Bit-Level 和 Instruction-Level 上的并行不属于并发——比如引文中举的 32 位计算机执行 32 位数加法的例子,同时处理 4 个字节显然是一种并行,但是它们都属于 32 位加法这一个任务,并不存在多个任务,也就根本没有并发。

所以,正确的说法是这样:

并行指物理上同时执行,并发指能够让多个任务在逻辑上交织执行的程序设计

按照我现在的理解,并发针对的是 Task-Level 及更高层,并行则不限。这也是它们的区别。