Spark programs are structured around RDDs: they involve reading data from stable storage into the RDD format, performing a number of computations and data transformations on the RDD, and writing the result RDD to stable storage or collecting it to the driver. Thus, most of the power of Spark comes from its transformations: operations that are defined on RDDs and return RDDs.
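The idea that a transformation takes an RDD and returns an RDD can be sketched in plain Python. This is only a toy model under stated assumptions: `MiniRDD` and its methods are hypothetical names invented for illustration, not the PySpark API, and the data lives in a local list rather than in stable storage.

```python
from typing import Callable, Iterator, List


class MiniRDD:
    """Toy stand-in for an RDD (hypothetical): holds a recipe for producing elements."""

    def __init__(self, source: Callable[[], Iterator]):
        self._source = source  # only called when an action runs

    @classmethod
    def from_list(cls, items: List) -> "MiniRDD":
        # Stands in for "reading data from stable storage".
        return cls(lambda: iter(items))

    # Transformations: each returns a new MiniRDD and does no work yet.
    def map(self, fn: Callable) -> "MiniRDD":
        return MiniRDD(lambda: (fn(x) for x in self._source()))

    def filter(self, pred: Callable) -> "MiniRDD":
        return MiniRDD(lambda: (x for x in self._source() if pred(x)))

    # Action: runs the whole chain and brings the result to the "driver".
    def collect(self) -> List:
        return list(self._source())


numbers = MiniRDD.from_list([1, 2, 3, 4, 5])
even_squares = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)
result = even_squares.collect()
print(result)
```

Because `map` and `filter` return another `MiniRDD`, they can be chained freely; only `collect` leaves the RDD world.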

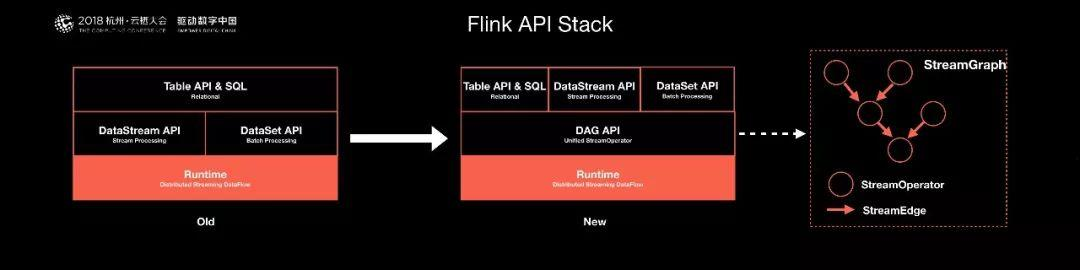

1. A core underlying layer is needed as the foundation.

2. That layer provides an API to the higher levels.

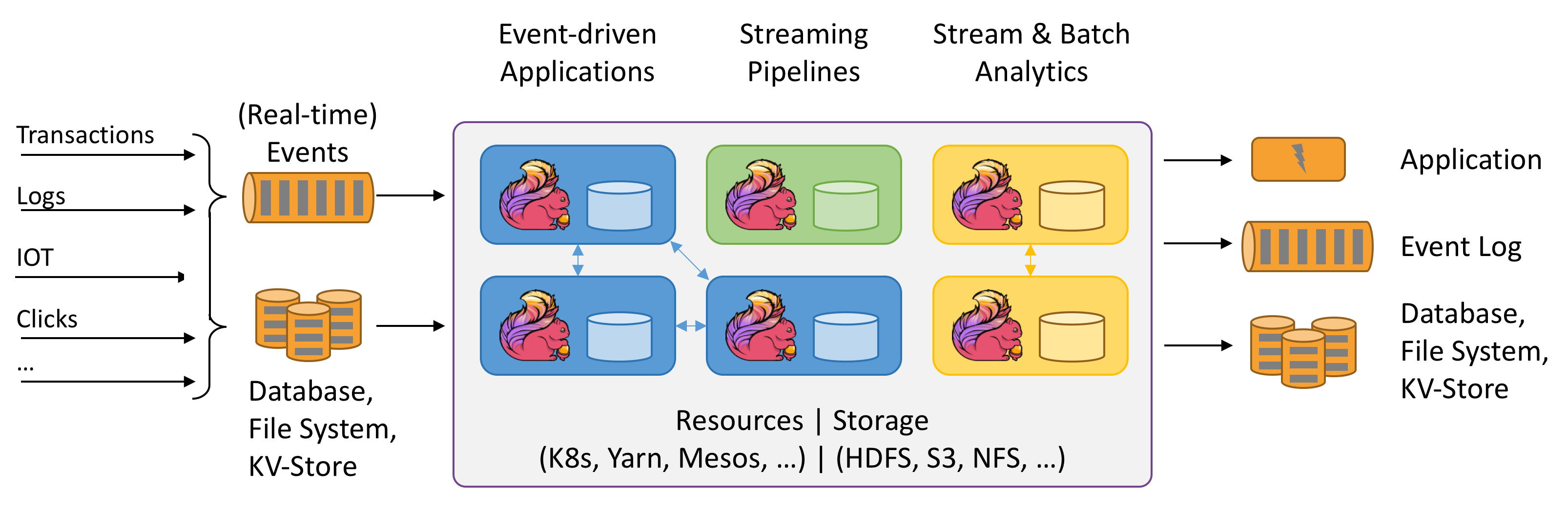

3. Stream computing = core underlying API + distributed RPC + computing templates + a cluster of executors.

4. What is computed, the order of computation, and the definition of (K, V) are entirely in the user's hands, through the computing templates they define.

5. Distributed computing can be seen as a platform that provides computing templates for operating on user data that is split and distributed across a cluster.

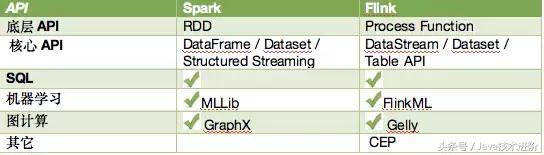

6. ML and big-data SQL frameworks alike use these stream APIs to do their jobs.

7. Remember that stream computing is a platform, or runtime, for operating on distributed data with computing templates (the transformation API).

8. Stream computing and an OS have a lot in common: both provide an API, for operating on data in stream computing and on hardware in an OS.

9. The stream computing runtime has an API of computing templates (generic computations); an OS has an API of resource operations on PC hardware.
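To make the "computing template" idea in the notes above concrete, here is a minimal sketch in plain Python. The function `run_keyed_template` and its parameters are hypothetical names chosen for illustration; the "cluster" is just a list of lists, with no RPC or executors involved. The template fixes the shape of the computation, while the user supplies the (K, V) definition and the combining logic.

```python
from collections import defaultdict
from functools import reduce
from operator import add
from typing import Any, Callable, Dict, Hashable, List, Tuple


def run_keyed_template(
    partitions: List[List[Any]],
    to_kv: Callable[[Any], Tuple[Hashable, Any]],
    combine: Callable[[Any, Any], Any],
) -> Dict[Hashable, Any]:
    """Hypothetical template: group records by key, then combine values per key."""
    grouped: Dict[Hashable, List[Any]] = defaultdict(list)
    for partition in partitions:          # each inner list models one node's data
        for record in partition:
            key, value = to_kv(record)    # the user decides what (K, V) means
            grouped[key].append(value)
    # The user also decides how two values of the same key are combined.
    return {key: reduce(combine, values) for key, values in grouped.items()}


# User data, split into two partitions.
partitions = [["apple", "bee"], ["bee", "cat", "bee"]]
word_counts = run_keyed_template(partitions, to_kv=lambda w: (w, 1), combine=add)
print(word_counts)
```

Swapping `to_kv` and `combine` changes what is computed without touching the template itself.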

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies.
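As a rough illustration of how operators compose into a dataflow, the sketch below chains generator functions in plain Python. The helper names `flat_map`, `map_op`, and `filter_op` are assumptions made for this example and are not the DataStream API; each one takes a stream (any iterable) and returns a new stream.

```python
from typing import Callable, Iterable, Iterator


def flat_map(fn: Callable, stream: Iterable) -> Iterator:
    """One input element produces zero, one, or more output elements."""
    for item in stream:
        yield from fn(item)


def map_op(fn: Callable, stream: Iterable) -> Iterator:
    """One input element produces exactly one output element."""
    for item in stream:
        yield fn(item)


def filter_op(pred: Callable, stream: Iterable) -> Iterator:
    """Keep only the elements for which the predicate is true."""
    for item in stream:
        if pred(item):
            yield item


lines = ["1 0 2", "0 3"]                                  # source
tokens = flat_map(str.split, lines)                       # split lines into tokens
numbers = map_op(int, tokens)                             # parse each token
non_zero = filter_op(lambda n: n != 0, numbers)           # drop zero values
doubled = map_op(lambda n: n * 2, non_zero)               # double each value
topology_output = list(doubled)                           # sink
print(topology_output)
```

Each step consumes the stream produced by the previous one, so the chain behaves like a small linear topology.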

| Transformation | Description |
|---|---|
| Map (DataStream → DataStream) | Takes one element and produces one element. Example: a map function that doubles the values of the input stream. |
| FlatMap (DataStream → DataStream) | Takes one element and produces zero, one, or more elements. Example: a flatmap function that splits sentences into words. |
| Filter (DataStream → DataStream) | Evaluates a boolean function for each element and retains those for which the function returns true. Example: a filter that filters out zero values. |
| KeyBy (DataStream → KeyedStream) | Logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy() is implemented with hash partitioning. There are different ways to specify keys. This transformation returns a KeyedStream, which is, among other things, required to use keyed state. Attention: a type cannot be a key if it is a POJO type that does not override hashCode() and so relies on Object.hashCode(), or if it is an array of any type. |
| Reduce (KeyedStream → DataStream) | A "rolling" reduce on a keyed data stream. Combines the current element with the last reduced value and emits the new value. Example: a reduce function that creates a stream of partial sums. |
| Fold (KeyedStream → DataStream) | A "rolling" fold on a keyed data stream with an initial value. Combines the current element with the last folded value and emits the new value. Example: a fold function that, when applied to the sequence (1,2,3,4,5), emits the sequence "start-1", "start-1-2", "start-1-2-3", ... |
| Aggregations (KeyedStream → DataStream) | Rolling aggregations on a keyed data stream. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |
| Window (KeyedStream → WindowedStream) | Windows can be defined on already partitioned KeyedStreams. Windows group the data in each key according to some characteristic (e.g., the data that arrived within the last 5 seconds). See windows for a complete description of windows. |
| WindowAll (DataStream → AllWindowedStream) | Windows can be defined on regular DataStreams. Windows group all the stream events according to some characteristic (e.g., the data that arrived within the last 5 seconds). See windows for a complete description of windows. WARNING: This is in many cases a non-parallel transformation. All records will be gathered in one task for the windowAll operator. |
| Window Apply (WindowedStream → DataStream; AllWindowedStream → DataStream) | Applies a general function to the window as a whole. Example: a function that manually sums the elements of a window. Note: if you are using a windowAll transformation, you need to use an AllWindowFunction instead. |
| Window Reduce (WindowedStream → DataStream) | Applies a functional reduce function to the window and returns the reduced value. |
| Window Fold (WindowedStream → DataStream) | Applies a functional fold function to the window and returns the folded value. Example: a function that, when applied to the sequence (1,2,3,4,5), folds the sequence into the string "start-1-2-3-4-5". |
| Aggregations on windows (WindowedStream → DataStream) | Aggregates the contents of a window. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |
| Union (DataStream* → DataStream) | Union of two or more data streams, creating a new stream containing all the elements from all the streams. Note: if you union a data stream with itself, you will get each element twice in the resulting stream. |
| Window Join (DataStream, DataStream → DataStream) | Joins two data streams on a given key and a common window. |
| Interval Join (KeyedStream, KeyedStream → DataStream) | Joins two elements e1 and e2 of two keyed streams with a common key over a given time interval, so that e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound. |
| Window CoGroup (DataStream, DataStream → DataStream) | Cogroups two data streams on a given key and a common window. |
| Connect (DataStream, DataStream → ConnectedStreams) | "Connects" two data streams, retaining their types. Connecting allows for shared state between the two streams. |
| CoMap, CoFlatMap (ConnectedStreams → DataStream) | Similar to map and flatMap, but on a connected data stream. |
| Split (DataStream → SplitStream) | Splits the stream into two or more streams according to some criterion. |
| Select (SplitStream → DataStream) | Selects one or more streams from a split stream. |
| Iterate (DataStream → IterativeStream → DataStream) | Creates a "feedback" loop in the flow by redirecting the output of one operator to some previous operator. This is especially useful for defining algorithms that continuously update a model. Example: starting with a stream, the iteration body is applied continuously; elements that are greater than 0 are sent back to the feedback channel, and the rest of the elements are forwarded downstream. See iterations for a complete description. |
| Extract Timestamps (DataStream → DataStream) | Extracts timestamps from records in order to work with windows that use event time semantics. See Event Time. |
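The "rolling" semantics of Reduce and Fold and the interval-join condition from the table can be modelled in a few lines of plain Python. This is a sketch of the semantics only: the function names, the tuple layouts, and the in-memory lists are assumptions made for illustration, and none of this is the Flink API (which also handles state, time, and parallelism).

```python
from typing import Any, Callable, Dict, Hashable, Iterable, Iterator, List, Tuple


def rolling_reduce(events: Iterable, key_fn: Callable, reduce_fn: Callable) -> Iterator:
    """Per key, combine each element with the last reduced value and emit the result."""
    last: Dict[Hashable, Any] = {}
    for event in events:
        key = key_fn(event)
        last[key] = event if key not in last else reduce_fn(last[key], event)
        yield last[key]


def rolling_fold(events: Iterable, initial: Any, fold_fn: Callable) -> Iterator:
    """Like a rolling reduce, but starting from an initial value (single key assumed)."""
    acc = initial
    for event in events:
        acc = fold_fn(acc, event)
        yield acc


def interval_join(left: List[Tuple], right: List[Tuple], lower: int, upper: int) -> Iterator:
    """Pair (key, timestamp, value) records that share a key and satisfy
    e1.timestamp + lower <= e2.timestamp <= e1.timestamp + upper."""
    for k1, t1, v1 in left:
        for k2, t2, v2 in right:
            if k1 == k2 and t1 + lower <= t2 <= t1 + upper:
                yield (k1, v1, v2)


# Rolling reduce: partial sums per key.
events = [("a", 1), ("b", 10), ("a", 2), ("a", 3), ("b", 20)]
partial_sums = list(
    rolling_reduce(events, key_fn=lambda e: e[0], reduce_fn=lambda x, y: (x[0], x[1] + y[1]))
)

# Rolling fold: the "start-1", "start-1-2", ... sequence from the table.
folded = list(rolling_fold([1, 2, 3, 4, 5], "start", lambda acc, x: f"{acc}-{x}"))

# Interval join with lowerBound = -2 and upperBound = 2.
left = [("a", 10, "L1"), ("b", 10, "L2")]
right = [("a", 8, "R1"), ("a", 12, "R2"), ("a", 20, "R3"), ("b", 11, "R4")]
joined = list(interval_join(left, right, lower=-2, upper=2))

print(partial_sums, folded, joined, sep="\n")
```

The last value emitted by `rolling_fold` is also what a Window Fold over the same five elements would return, since a window fold emits only the final folded value.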