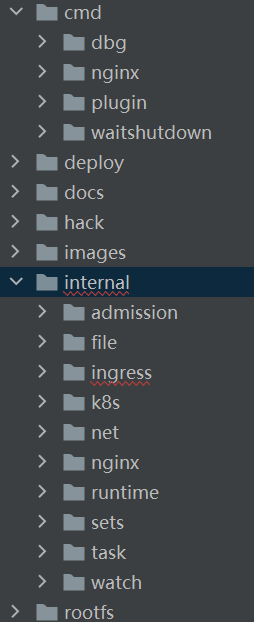

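Now that the nginx-ingress source is checked out, let's look at it from a different angle: the Go package layout. Anyone with Go experience will see at a glance that `cmd` and `internal` are the directories to focus on.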

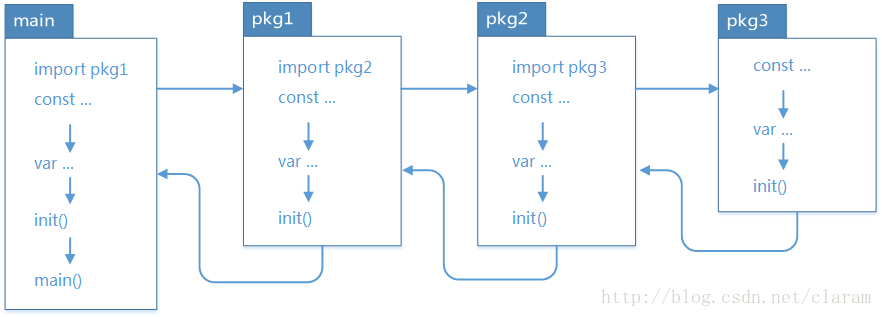

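One piece of background worth adding here is Go's execution order (taken from the web): imported packages are initialized first, then the package-level variables of the current package, then its `init` functions, and only then `main`.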
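To make that order concrete, here is a minimal sketch in a single file. The `order` slice and the `record` helper are hypothetical names invented for this illustration; they are not part of nginx-ingress.

```go
package main

import "fmt"

// order records the sequence in which initialization steps run.
// (Hypothetical helper state, only for this illustration.)
var order []string

// record appends a step name and returns it, so it can be used
// as the initializer of a package-level variable.
func record(step string) string {
	order = append(order, step)
	return step
}

// Package-level variables are initialized before any init function runs.
var _ = record("package-level variable")

// init runs after the package-level variables of this package are initialized.
func init() {
	record("init")
}

// main runs last, after every imported package (here: fmt) and this
// package itself have finished initializing.
func main() {
	record("main")
	fmt.Println(order)
}
```

The same rule applies recursively to every imported package, which is why the import list of `main` decides what gets initialized before the program does anything.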

Since imports matter this much, let's also look at what the `main` package under `cmd` pulls in. The imports fall into three clear groups. The first is the Go standard library. The second is general-purpose tooling used across the cluster, above all the Kubernetes base libraries, such as the `client-go` client library and `apimachinery` (shared API types and utilities). The third is nginx-ingress's own internal packages.

```go
import (
	"context"
	"fmt"
	"math/rand" // #nosec
	"net/http"
	"net/http/pprof"
	"os"
	"os/signal"
	"path/filepath"
	"runtime"
	"syscall"
	"time"

	"github.com/prometheus/client_golang/prometheus"
	"github.com/prometheus/client_golang/prometheus/promhttp"
	"k8s.io/apimachinery/pkg/api/errors"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/util/wait"
	discovery "k8s.io/apimachinery/pkg/version"
	"k8s.io/apiserver/pkg/server/healthz"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/clientcmd"
	certutil "k8s.io/client-go/util/cert"
	"k8s.io/klog/v2"

	"k8s.io/ingress-nginx/internal/file"
	"k8s.io/ingress-nginx/internal/ingress/controller"
	"k8s.io/ingress-nginx/internal/ingress/metric"
	"k8s.io/ingress-nginx/internal/k8s"
	"k8s.io/ingress-nginx/internal/net/ssl"
	"k8s.io/ingress-nginx/internal/nginx"
	"k8s.io/ingress-nginx/version"
)
```

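So what does it do? The `main` function first runs basic validation, then starts the monitoring endpoints, metric collectors and similar pieces according to the configuration, and finally creates and starts the controller: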

```go
ngx := controller.NewNGINXController(conf, mc)

mux := http.NewServeMux()
registerHealthz(nginx.HealthPath, ngx, mux)
registerMetrics(reg, mux)

go startHTTPServer(conf.ListenPorts.Health, mux)
go ngx.Start()
```

What does `Start` actually start?

```go
func (n *NGINXController) Start() {
	klog.InfoS("Starting NGINX Ingress controller")

	n.store.Run(n.stopCh)

	// we need to use the defined ingress class to allow multiple leaders
	// in order to update information about ingress status
	// TODO: For now, as the the IngressClass logics has changed, is up to the
	// cluster admin to create different Leader Election IDs.
	// Should revisit this in a future
	electionID := n.cfg.ElectionID

	setupLeaderElection(&leaderElectionConfig{
		Client:     n.cfg.Client,
		ElectionID: electionID,
		OnStartedLeading: func(stopCh chan struct{}) {
			if n.syncStatus != nil {
				go n.syncStatus.Run(stopCh)
			}

			n.metricCollector.OnStartedLeading(electionID)
			// manually update SSL expiration metrics
			// (to not wait for a reload)
			n.metricCollector.SetSSLExpireTime(n.runningConfig.Servers)
		},
		OnStoppedLeading: func() {
			n.metricCollector.OnStoppedLeading(electionID)
		},
	})

	cmd := n.command.ExecCommand()

	// put NGINX in another process group to prevent it
	// to receive signals meant for the controller
	cmd.SysProcAttr = &syscall.SysProcAttr{
		//Setpgid: true,
		//Pgid: 0,
	}

	if n.cfg.EnableSSLPassthrough {
		n.setupSSLProxy()
	}

	klog.InfoS("Starting NGINX process")
	n.start(cmd)

	go n.syncQueue.Run(time.Second, n.stopCh)
	// force initial sync
	n.syncQueue.EnqueueTask(task.GetDummyObject("initial-sync"))

	// In case of error the temporal configuration file will
	// be available up to five minutes after the error
	go func() {
		for {
			time.Sleep(5 * time.Minute)
			err := cleanTempNginxCfg()
			if err != nil {
				klog.ErrorS(err, "Unexpected error removing temporal configuration files")
			}
		}
	}()

	if n.validationWebhookServer != nil {
		klog.InfoS("Starting validation webhook", "address", n.validationWebhookServer.Addr,
			"certPath", n.cfg.ValidationWebhookCertPath, "keyPath", n.cfg.ValidationWebhookKeyPath)
		go func() {
			klog.ErrorS(n.validationWebhookServer.ListenAndServeTLS("", ""), "Error listening for TLS connections")
		}()
	}

	for {
		select {
		case err := <-n.ngxErrCh:
			if n.isShuttingDown {
				return
			}

			// if the nginx master process dies, the workers continue to process requests
			// until the failure of the configured livenessProbe and restart of the pod.
			if process.IsRespawnIfRequired(err) {
				return
			}

		case event := <-n.updateCh.Out():
			if n.isShuttingDown {
				break
			}

			if evt, ok := event.(store.Event); ok {
				klog.V(3).InfoS("Event received", "type", evt.Type, "object", evt.Obj)
				if evt.Type == store.ConfigurationEvent {
					// TODO: is this necessary? Consider removing this special case
					n.syncQueue.EnqueueTask(task.GetDummyObject("configmap-change"))
					continue
				}

				n.syncQueue.EnqueueSkippableTask(evt.Obj)
			} else {
				klog.Warningf("Unexpected event type received %T", event)
			}
		case <-n.stopCh:
			return
		}
	}
}
```

What `store.go` does (this is the `n.store.Run` call at the top of `Start`):

```go
func (i *Informer) Run(stopCh chan struct{}) {
	go i.Secret.Run(stopCh)
	go i.Endpoint.Run(stopCh)
	go i.IngressClass.Run(stopCh)
	go i.Service.Run(stopCh)
	go i.ConfigMap.Run(stopCh)

	// wait for all involved caches to be synced before processing items
	// from the queue
	if !cache.WaitForCacheSync(stopCh,
		i.Endpoint.HasSynced,
		i.IngressClass.HasSynced,
		i.Service.HasSynced,
		i.Secret.HasSynced,
		i.ConfigMap.HasSynced,
	) {
		runtime.HandleError(fmt.Errorf("timed out waiting for caches to sync"))
	}

	// in big clusters, deltas can keep arriving even after HasSynced
	// functions have returned 'true'
	time.Sleep(1 * time.Second)

	// we can start syncing ingress objects only after other caches are
	// ready, because ingress rules require content from other listers, and
	// 'add' events get triggered in the handlers during caches population.
	go i.Ingress.Run(stopCh)
	if !cache.WaitForCacheSync(stopCh,
		i.Ingress.HasSynced,
	) {
		runtime.HandleError(fmt.Errorf("timed out waiting for caches to sync"))
	}
}
```

The various event handlers; here is the one for Ingress objects:

```go
ingEventHandler := cache.ResourceEventHandlerFuncs{
	AddFunc: func(obj interface{}) {
		ing, _ := toIngress(obj)
		ic, err := store.GetIngressClass(ing, icConfig)
		if err != nil {
			klog.InfoS("Ignoring ingress because of error while validating ingress class", "ingress", klog.KObj(ing), "error", err)
			return
		}

		klog.InfoS("Found valid IngressClass", "ingress", klog.KObj(ing), "ingressclass", ic)

		if hasCatchAllIngressRule(ing.Spec) && disableCatchAll {
			klog.InfoS("Ignoring add for catch-all ingress because of --disable-catch-all", "ingress", klog.KObj(ing))
			return
		}

		recorder.Eventf(ing, corev1.EventTypeNormal, "Sync", "Scheduled for sync")

		store.syncIngress(ing)
		store.updateSecretIngressMap(ing)
		store.syncSecrets(ing)

		updateCh.In() <- Event{
			Type: CreateEvent,
			Obj:  obj,
		}
	},
	DeleteFunc: ingDeleteHandler,
	UpdateFunc: func(old, cur interface{}) {
		oldIng, _ := toIngress(old)
		curIng, _ := toIngress(cur)

		_, errOld := store.GetIngressClass(oldIng, icConfig)
		classCur, errCur := store.GetIngressClass(curIng, icConfig)
		if errOld != nil && errCur == nil {
			if hasCatchAllIngressRule(curIng.Spec) && disableCatchAll {
				klog.InfoS("ignoring update for catch-all ingress because of --disable-catch-all", "ingress", klog.KObj(curIng))
				return
			}

			klog.InfoS("creating ingress", "ingress", klog.KObj(curIng), "ingressclass", classCur)
			recorder.Eventf(curIng, corev1.EventTypeNormal, "Sync", "Scheduled for sync")
		} else if errOld == nil && errCur != nil {
			klog.InfoS("removing ingress because of unknown ingressclass", "ingress", klog.KObj(curIng))
			ingDeleteHandler(old)
			return
		} else if errCur == nil && !reflect.DeepEqual(old, cur) {
			if hasCatchAllIngressRule(curIng.Spec) && disableCatchAll {
				klog.InfoS("ignoring update for catch-all ingress and delete old one because of --disable-catch-all", "ingress", klog.KObj(curIng))
				ingDeleteHandler(old)
				return
			}

			recorder.Eventf(curIng, corev1.EventTypeNormal, "Sync", "Scheduled for sync")
		} else {
			klog.V(3).InfoS("No changes on ingress. Skipping update", "ingress", klog.KObj(curIng))
			return
		}

		store.syncIngress(curIng)
		store.updateSecretIngressMap(curIng)
		store.syncSecrets(curIng)

		updateCh.In() <- Event{
			Type: UpdateEvent,
			Obj:  cur,
		}
	},
}
```
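The branching in `UpdateFunc` is easier to follow as a small decision function. The sketch below is my own condensation, not code from the repository: `classifyUpdate` and the `action` constants are hypothetical names, and the `--disable-catch-all` checks are left out on purpose.

```go
package main

import "fmt"

// action names what the update handler ends up doing.
// (Hypothetical type, introduced only for this illustration.)
type action string

const (
	actionCreate action = "create" // ingress class became valid: treat as new
	actionDelete action = "delete" // ingress class became invalid: remove
	actionUpdate action = "update" // still valid and the object changed
	actionSkip   action = "skip"   // nothing relevant changed
)

// classifyUpdate mirrors the if/else chain of UpdateFunc.
// oldValid and curValid stand for errOld == nil and errCur == nil;
// changed stands for !reflect.DeepEqual(old, cur).
func classifyUpdate(oldValid, curValid, changed bool) action {
	switch {
	case !oldValid && curValid:
		return actionCreate
	case oldValid && !curValid:
		return actionDelete
	case curValid && changed:
		return actionUpdate
	default:
		return actionSkip
	}
}

func main() {
	fmt.Println(classifyUpdate(false, true, true))
	fmt.Println(classifyUpdate(true, false, true))
	fmt.Println(classifyUpdate(true, true, true))
	fmt.Println(classifyUpdate(true, true, false))
}
```

In the original handler the create and update branches both fall through to the same tail: sync the ingress and its secrets, then push an `UpdateEvent` into `updateCh`.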

controller.go

```go
func (n *NGINXController) syncIngress(interface{}) error {
	n.syncRateLimiter.Accept()

	if n.syncQueue.IsShuttingDown() {
		return nil
	}

	ings := n.store.ListIngresses()
	hosts, servers, pcfg := n.getConfiguration(ings)

	n.metricCollector.SetSSLExpireTime(servers)

	if n.runningConfig.Equal(pcfg) {
		klog.V(3).Infof("No configuration change detected, skipping backend reload")
		return nil
	}

	n.metricCollector.SetHosts(hosts)

	if !n.IsDynamicConfigurationEnough(pcfg) {
		klog.InfoS("Configuration changes detected, backend reload required")

		hash, _ := hashstructure.Hash(pcfg, &hashstructure.HashOptions{
			TagName: "json",
		})

		pcfg.ConfigurationChecksum = fmt.Sprintf("%v", hash)

		err := n.OnUpdate(*pcfg)
		if err != nil {
			n.metricCollector.IncReloadErrorCount()
			n.metricCollector.ConfigSuccess(hash, false)
			klog.Errorf("Unexpected failure reloading the backend:\n%v", err)
			n.recorder.Eventf(k8s.IngressPodDetails, apiv1.EventTypeWarning, "RELOAD", fmt.Sprintf("Error reloading NGINX: %v", err))
			return err
		}

		klog.InfoS("Backend successfully reloaded")
		n.metricCollector.ConfigSuccess(hash, true)
		n.metricCollector.IncReloadCount()

		n.recorder.Eventf(k8s.IngressPodDetails, apiv1.EventTypeNormal, "RELOAD", "NGINX reload triggered due to a change in configuration")
	}

	isFirstSync := n.runningConfig.Equal(&ingress.Configuration{})
	if isFirstSync {
		// For the initial sync it always takes some time for NGINX to start listening
		// For large configurations it might take a while so we loop and back off
		klog.InfoS("Initial sync, sleeping for 1 second")
		time.Sleep(1 * time.Second)
	}

	retry := wait.Backoff{
		Steps:    15,
		Duration: 1 * time.Second,
		Factor:   0.8,
		Jitter:   0.1,
	}

	err := wait.ExponentialBackoff(retry, func() (bool, error) {
		err := n.configureDynamically(pcfg)
		if err == nil {
			klog.V(2).Infof("Dynamic reconfiguration succeeded.")
			return true, nil
		}

		klog.Warningf("Dynamic reconfiguration failed: %v", err)
		return false, err
	})
	if err != nil {
		klog.Errorf("Unexpected failure reconfiguring NGINX:\n%v", err)
		return err
	}

	ri := getRemovedIngresses(n.runningConfig, pcfg)
	re := getRemovedHosts(n.runningConfig, pcfg)
	n.metricCollector.RemoveMetrics(ri, re)

	n.runningConfig = pcfg

	return nil
}
```

nginx.go

```go
func (n *NGINXController) configureDynamically(pcfg *ingress.Configuration) error {
	backendsChanged := !reflect.DeepEqual(n.runningConfig.Backends, pcfg.Backends)
	if backendsChanged {
		err := configureBackends(pcfg.Backends)
		if err != nil {
			return err
		}
	}

	streamConfigurationChanged := !reflect.DeepEqual(n.runningConfig.TCPEndpoints, pcfg.TCPEndpoints) || !reflect.DeepEqual(n.runningConfig.UDPEndpoints, pcfg.UDPEndpoints)
	if streamConfigurationChanged {
		err := updateStreamConfiguration(pcfg.TCPEndpoints, pcfg.UDPEndpoints)
		if err != nil {
			return err
		}
	}

	serversChanged := !reflect.DeepEqual(n.runningConfig.Servers, pcfg.Servers)
	if serversChanged {
		err := configureCertificates(pcfg.Servers)
		if err != nil {
			return err
		}
	}

	return nil
}
```

And then? Follow `configureBackends`:

```go
func configureBackends(rawBackends []*ingress.Backend) error {
	backends := make([]*ingress.Backend, len(rawBackends))

	for i, backend := range rawBackends {
		var service *apiv1.Service
		if backend.Service != nil {
			service = &apiv1.Service{Spec: backend.Service.Spec}
		}
		luaBackend := &ingress.Backend{
			Name:                 backend.Name,
			Port:                 backend.Port,
			SSLPassthrough:       backend.SSLPassthrough,
			SessionAffinity:      backend.SessionAffinity,
			UpstreamHashBy:       backend.UpstreamHashBy,
			LoadBalancing:        backend.LoadBalancing,
			Service:              service,
			NoServer:             backend.NoServer,
			TrafficShapingPolicy: backend.TrafficShapingPolicy,
			AlternativeBackends:  backend.AlternativeBackends,
		}

		var endpoints []ingress.Endpoint
		for _, endpoint := range backend.Endpoints {
			endpoints = append(endpoints, ingress.Endpoint{
				Address: endpoint.Address,
				Port:    endpoint.Port,
			})
		}

		luaBackend.Endpoints = endpoints
		backends[i] = luaBackend
	}

	statusCode, _, err := nginx.NewPostStatusRequest("/configuration/backends", "application/json", backends)
	if err != nil {
		return err
	}

	if statusCode != http.StatusCreated {
		return fmt.Errorf("unexpected error code: %d", statusCode)
	}

	return nil
}
```

Jumping one level further, into `NewPostStatusRequest`:

```go
// NewPostStatusRequest creates a new POST request to the internal NGINX status server
func NewPostStatusRequest(path, contentType string, data interface{}) (int, []byte, error) {
	url := fmt.Sprintf("http://127.0.0.1:%v%v", StatusPort, path)

	buf, err := json.Marshal(data)
	if err != nil {
		return 0, nil, err
	}

	client := http.Client{}
	res, err := client.Post(url, contentType, bytes.NewReader(buf))
	if err != nil {
		return 0, nil, err
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		return 0, nil, err
	}

	return res.StatusCode, body, nil
}
```

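So the Go side talks to the Lua code running inside NGINX through HTTP POST requests. Where does Lua receive them, then? A quick search turns it up in `configuration.lua`: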

```lua
function _M.call()
  if ngx.var.request_method ~= "POST" and ngx.var.request_method ~= "GET" then
    ngx.status = ngx.HTTP_BAD_REQUEST
    ngx.print("Only POST and GET requests are allowed!")
    return
  end

  if ngx.var.request_uri == "/configuration/servers" then
    handle_servers()
    return
  end

  if ngx.var.request_uri == "/configuration/general" then
    handle_general()
    return
  end

  if ngx.var.uri == "/configuration/certs" then
    handle_certs()
    return
  end

  if ngx.var.request_uri == "/configuration/backends" then
    handle_backends()
    return
  end

  ngx.status = ngx.HTTP_NOT_FOUND
  ngx.print("Not found!")
end
```