#=======================[VM, binary installation]

# Installation environment

# OS System = CentOS-7.4 X64

# JDK = jdk-12.0.2

# zookeeper = zookeeper-3.6.1-x64

# zkui = zkui-2.0. Note: main.java in the fork below includes a fix for a bug in how the config.cfg path is resolved.

# https://github.com/tiandong19860806/zkui

# https://github.com/DeemOpen/zkui/issues/81
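
Step 1 of the installation script sizes swap from RAM with a three-tier rule (1.5 times RAM between 1 GB and 2 GB, equal to RAM between 2 GB and 16 GB, capped at 16 GB above that). As a rough sketch of that arithmetic, the helpers below compute the swap size and the matching `dd` block count for `bs=1024`. The function names `recommended_swap_mb` and `swap_dd_count` are hypothetical, and treating exactly 2 GB (and anything below 1 GB) as the 1.5 times tier is an assumption, since the rule leaves those cases open.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of the original script.

# Recommended swap size in MB for a RAM size given in MB.
# Assumption: RAM <= 2048 MB (including values below 1 GB) uses the 1.5x tier.
recommended_swap_mb() {
    local ram_mb=$1
    if (( ram_mb <= 2048 )); then
        # Between 1 GB and 2 GB: 1.5 times the RAM
        echo $(( ram_mb * 3 / 2 ))
    elif (( ram_mb <= 16384 )); then
        # Between 2 GB and 16 GB: equal to the RAM
        echo "${ram_mb}"
    else
        # More than 16 GB: fixed at 16 GB
        echo 16384
    fi
}

# Number of 1024-byte blocks dd needs (bs=1024) for a swap file of the given size in MB.
swap_dd_count() {
    local swap_mb=$1
    echo $(( swap_mb * 1024 ))
}

# Example with 4 GB of RAM, which gives the count used by the dd command in step 1.
ram_mb=4096
swap_mb=$(recommended_swap_mb "${ram_mb}")
echo "swap=${swap_mb}MB dd_count=$(swap_dd_count "${swap_mb}")"   # prints: swap=4096MB dd_count=4194304
```

On a real host the RAM size could be read from the `MemTotal` line of `/proc/meminfo` (reported in kB) instead of being hard-coded.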

#========================install zookeeper========================================================================
# step 1: Set the system swap size, using the following rule:
#   RAM                      / Swap Space
#   Between 1 GB and 2 GB    / 1.5 times the size of the RAM
#   Between 2 GB and 16 GB   / Equal to the size of the RAM
#   More than 16 GB          / 16 GB
# Run the following commands.
# First check whether the swap line in /etc/fstab is commented out; if it is, uncomment it
cat /etc/fstab
# Show the total swap size:
free -m
# Show swap usage per file/partition:
swapon -s
# Show the free space on the target path
df -h /home
# Turn off all swap areas
swapoff -a
# Create the new swap file. bs is the block size (1024 bytes) and count is the number of blocks,
# so the total size is bs * count = 4 GiB
dd if=/dev/zero of=/home/system-swap bs=1024 count=4194304
# Output:
# 4194304+0 records in
# 4194304+0 records out
# 4294967296 bytes (4.3 GB) copied, 29.991 s, 143 MB/s
# Restrict the swap file permissions to 600
chmod 600 /home/system-swap
# Format the new file as swap
/sbin/mkswap /home/system-swap
# Output:
# Setting up swapspace version 1, size = 4194300 KiB
# no label, UUID=941e36a8-d389-4400-ad7d-07387e1da776
# Activate the new swap file.
# Note: this does not survive a reboot; only the /etc/fstab change below makes it permanent.
/sbin/swapon /home/system-swap
# Keep the swap file active after a reboot:
# edit /etc/fstab, comment out the old swap entry and add an entry for /home/system-swap
vi /etc/fstab
#   old entry (comment it out): /dev/mapper/centos-swap swap swap defaults 0 0
#   new entry:                  /home/system-swap swap swap defaults 0 0
# Disable SELinux by setting SELINUX=disabled (leave SELINUXTYPE=targeted unchanged); this takes effect after a reboot
vi /etc/selinux/config
# Change the parameter as follows:
#   # SELINUX=enforcing
#   SELINUX=disabled

# =============================================================================================================
# step 2: Install the system dependencies
# Optionally switch yum to a mirror in China. Domestic mirrors are sometimes incomplete; in that case switch back to the default repo.
# cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup-linux &&
# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo &&
yum clean all && yum makecache
# Clean stale repo metadata
yum --enablerepo=base clean metadata
# Install the dependencies
yum install -y binutils compat-libstdc++-33 gcc gcc-c++ glibc glibc-devel libgcc libstdc++ \
    libstdc++-devel libaio libaio-devel libXext libXtst libX11 libXau libxcb libXi make sysstat \
    zlib-devel elfutils-libelf-devel
# Check which of the packages are installed
rpm -q --queryformat "%{name}-%{version}-%{release}-%{arch}\n" compat-libstdc++-33 glibc-kernheaders glibc-headers libaio libgcc glibc-devel xorg-x11-deprecated-libs
# Output; packages reported as "not installed" could not be downloaded or installed:
# package compat-libstdc++-33 is not installed
# package glibc-kernheaders is not installed
# package glibc-headers is not installed
# libaio-0.3.109-13.el7-x86_64
# libgcc-4.8.5-16.el7-x86_64
# package glibc-devel is not installed
# package xorg-x11-deprecated-libs is not installed
# If some packages cannot be downloaded from the aliyun mirror, switch yum.repo back and retry
# cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup-aliyun &&
# cp /etc/yum.repos.d/CentOS-Base.repo.backup-linux /etc/yum.repos.d/CentOS-Base.repo &&
yum clean all && yum makecache &&
yum install -y 'compat-libstdc++*' 'glibc-kernheaders*' 'glibc-headers*' 'libaio-*' 'libgcc-*' 'glibc-devel*' 'xorg-x11-deprecated-libs*'
# Make sure libaio (0.3.106 or later) is installed; asynchronous I/O is enabled by default.
# Check whether asynchronous I/O (AIO) is in use on this system
grep kio /proc/slabinfo
# /proc/slabinfo is a read-only kernel view and cannot be edited. When AIO is in use, the output contains lines like:
# kioctx 51 120 320 12 1 : tunables 54 27 8 : slabdata 10 10 0
# kiocb 30 30 256 15 1 : tunables 120 60 8 : slabdata 2 2 0

# =============================================================================================================
# step 3: Create the ZooKeeper directories
mkdir -p /opt/soft/{jdk,zookeeper}
# Then upload the JDK and ZooKeeper binary archives to the software directories created above
# Create the ZooKeeper installation root
mkdir -p /app/zookeeper
# Create the ZooKeeper data root
mkdir -p /data/zookeeper
# Create the ZooKeeper log root
mkdir -p /log/zookeeper

# =============================================================================================================
# step 4: Create the ZooKeeper installation user and groups
# Run the following as root:
# Create the ops_install group
groupadd -g 5000 ops_install
# Create the ops_admin group
groupadd -g 501 ops_admin
# Create the zookeeper user
useradd -g ops_install -G ops_admin zookeeper
# Set the zookeeper user's password
echo 'password' | passwd --stdin zookeeper
# To delete the user together with its home directory and files:
# userdel -r zookeeper
# Check that the zookeeper user's groups are correct; the expected output is:
# id zookeeper
# [root@CNT7XZKPD02 ~]# id zookeeper
# uid=1001(zookeeper) gid=5000(ops_install) groups=5000(ops_install),501(ops_admin)

# =============================================================================================================
# step 5: Set up the profile for the installation user
# Edit /etc/profile and add the following content
vi /etc/profile
# -----------------------java env-----------------------------------------------------------------
# JDK 9 and later no longer ship jre/, dt.jar or tools.jar, so only bin/ is added to PATH and no CLASSPATH is set
export JAVA_HOME=/env/jdk/jdk-12.0.2
export PATH=$JAVA_HOME/bin:$PATH
# -----------------------java env-----------------------------------------------------------------
# -----------------------zookeeper env---------------------------------------------------------------
export ZOOKEEPER_HOME=/app/zookeeper/zookeeper-1
export PATH=$ZOOKEEPER_HOME/bin:$PATH
# -----------------------zookeeper env---------------------------------------------------------------
# Apply the environment variables
source /etc/profile
# Check that the environment variables are set
env | grep ZOOKEEPER
env | grep JAVA

# =============================================================================================================
# step 6: Install JDK 12
# Create the JDK software directory and installation directory:
mkdir -p /opt/soft/jdk/ && mkdir -p /env/jdk/
# Then copy the JDK 12 binary tar archive to the software directory, for example with WinSCP
ls -al /opt/soft/jdk/jdk-12.0.2_linux-x64_bin.tar.gz
# Extract the JDK into the installation directory
tar -zxvf /opt/soft/jdk/jdk-12.0.2_linux-x64_bin.tar.gz -C /env/jdk/

# =============================================================================================================
# Pseudo-cluster, nodes 1/2/3
# step 7: Create the per-node directories (installation, data, log)
# for loop - begin
V_NODE_NUM=3
for ((i=1;i<=${V_NODE_NUM};i++))
do
    mkdir -p /app/zookeeper/zookeeper-${i} &&
    mkdir -p /data/zookeeper/zookeeper-${i} &&
    mkdir -p /log/zookeeper/zookeeper-${i}
done
# for loop - end
ls -al /app/zookeeper
# Output:
# [root@CNT7XZKPD02 ~]# ls -al /app/zookeeper
# total 0
# drwxr-xr-x 5 root root 63 Jun 29 14:15 .
# drwxr-xr-x 3 root root 23 Jun 29 14:15 ..
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-1
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-2
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-3
ls -al /data/zookeeper
# Output:
# [root@CNT7XZKPD02 ~]# ls -al /data/zookeeper/
# total 0
# drwxr-xr-x 5 root root 63 Jun 29 14:15 .
# drwxr-xr-x 3 root root 23 Jun 29 14:15 ..
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-1
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-2
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-3
ls -al /log/zookeeper
# Output:
# [root@CNT7XZKPD02 ~]# ls -al /log/zookeeper
# total 0
# drwxr-xr-x 5 root root 63 Jun 29 14:15 .
# drwxr-xr-x 3 root root 23 Jun 29 14:15 ..
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-1
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-2
# drwxrwxr-x 2 zookeeper ops_install 6 Jun 29 14:15 zookeeper-3
# Extract ZooKeeper into the installation root; it is later copied into the three pseudo-node directories
tar -zxvf /opt/soft/zookeeper/apache-zookeeper-3.6.1-bin.tar.gz -C /app/zookeeper/
# List the extracted ZooKeeper files
ls -al /app/zookeeper/apache-zookeeper-3.6.1-bin
# After extraction the directory contains the following files:
# [root@CNT7XZKPD02 ~]# ls -al /app/zookeeper/apache-zookeeper-3.6.1-bin
# total 32
# drwxr-xr-x 6 root root 134 Jun 29 11:06 .
# drwxrwxr-x 3 zookeeper ops_install 29 Jun 29 11:08 ..
# drwxr-xr-x 2 root root 232 May 4 21:26 bin
# drwxr-xr-x 2 root root 77 May 4 21:26 conf
# drwxr-xr-x 5 root root 4096 May 4 23:07 docs
# drwxr-xr-x 2 root root 4096 Jun 29 11:06 lib
# -rw-r--r-- 1 root root 11358 May 4 21:26 LICENSE.txt
# -rw-r--r-- 1 root root 432 May 4 22:22 NOTICE.txt
# -rw-r--r-- 1 root root 1560 May 4 21:26 README.md
# -rw-r--r-- 1 root root 1347 May 4 21:26 README_packaging.txt
# Rename the directory to zookeeper-3.6.1
mv /app/zookeeper/apache-zookeeper-3.6.1-bin /app/zookeeper/zookeeper-3.6.1
# Pseudo-cluster: copy the installation into the three node directories (myid=1/2/3)
# and create each node's zoo.cfg from the sample file
for ((i=1;i<=${V_NODE_NUM};i++))
do
    cp -rf /app/zookeeper/zookeeper-3.6.1/* /app/zookeeper/zookeeper-${i}/
    cp /app/zookeeper/zookeeper-${i}/conf/zoo_sample.cfg /app/zookeeper/zookeeper-${i}/conf/zoo.cfg
done
# for loop - end
# Grant the zookeeper user access to the directories and files
V_NODE_NUM=3
for ((i=1;i<=${V_NODE_NUM};i++))
do
    chmod -R 775 /app/zookeeper/zookeeper-${i} &&
    chown -R zookeeper:ops_install /app/zookeeper/zookeeper-${i} &&
    chmod -R 775 /data/zookeeper/zookeeper-${i} &&
    chown -R zookeeper:ops_install /data/zookeeper/zookeeper-${i} &&
    chmod -R 775 /log/zookeeper/zookeeper-${i} &&
    chown -R zookeeper:ops_install /log/zookeeper/zookeeper-${i}
done
# for loop - end

# =============================================================================================================
# Configure the pseudo-cluster
# step 10: Configure ZooKeeper's zoo.cfg file
# --------------------------------------------------
# Node 1
# First edit the configuration file
# Note: the file must be named zoo.cfg
# cp /app/zookeeper/zookeeper-1/conf/zoo_sample.cfg /app/zookeeper/zookeeper-1/conf/zoo.cfg
vi /app/zookeeper/zookeeper-1/conf/zoo.cfg
# Change the configuration as follows:
# Parameter 1: data directory and transaction log directory
dataDir=/data/zookeeper/zookeeper-1
dataLogDir=/log/zookeeper/zookeeper-1
# Parameter 2: server entries, which define the cluster nodes
# Note: for a pseudo-cluster (all nodes on the same VM), give every server.x entry different ports
# Format: server.x={IP or HOSTNAME}:{quorum port, default 2888}:{leader election port, default 3888}
server.1=CNT7XZKPD02:2881:3881
server.2=CNT7XZKPD02:2882:3882
server.3=CNT7XZKPD02:2883:3883
# Parameter 3: client port
clientPort=2181
# --------------------------------------------------
# Node 2
# First edit the configuration file
# Note: the file must be named zoo.cfg
# cp /app/zookeeper/zookeeper-2/conf/zoo_sample.cfg /app/zookeeper/zookeeper-2/conf/zoo.cfg
vi /app/zookeeper/zookeeper-2/conf/zoo.cfg
# Change the configuration as follows:
# Parameter 1: data directory and transaction log directory
dataDir=/data/zookeeper/zookeeper-2
dataLogDir=/log/zookeeper/zookeeper-2
# Parameter 2: server entries, which define the cluster nodes
# Note: for a pseudo-cluster (all nodes on the same VM), give every server.x entry different ports
# Format: server.x={IP or HOSTNAME}:{quorum port, default 2888}:{leader election port, default 3888}
server.1=CNT7XZKPD02:2881:3881
server.2=CNT7XZKPD02:2882:3882
server.3=CNT7XZKPD02:2883:3883
# Parameter 3: client port
clientPort=2182
# --------------------------------------------------
# Node 3
# First edit the configuration file
# Note: the file must be named zoo.cfg
# cp /app/zookeeper/zookeeper-3/conf/zoo_sample.cfg /app/zookeeper/zookeeper-3/conf/zoo.cfg
vi /app/zookeeper/zookeeper-3/conf/zoo.cfg
# Change the configuration as follows:
# Parameter 1: data directory and transaction log directory
dataDir=/data/zookeeper/zookeeper-3
dataLogDir=/log/zookeeper/zookeeper-3
# Parameter 2: server entries, which define the cluster nodes
# Note: for a pseudo-cluster (all nodes on the same VM), give every server.x entry different ports
# Format: server.x={IP or HOSTNAME}:{quorum port, default 2888}:{leader election port, default 3888}
server.1=CNT7XZKPD02:2881:3881
server.2=CNT7XZKPD02:2882:3882
server.3=CNT7XZKPD02:2883:3883
# Parameter 3: client port
clientPort=2183

# =============================================================================================================
# Configure the pseudo-cluster
# step 11: Create ZooKeeper's myid file
# Nodes 1/2/3
# Write myid=1/2/3 into the data directory of each node
for ((i=1;i<=${V_NODE_NUM};i++))
do
    cat > /data/zookeeper/zookeeper-${i}/myid << EOF
${i}
EOF
done
# for loop - end

# ==================================================================================================================================
# step 12: Start ZooKeeper
# Start the service: nodes 1/2/3
/app/zookeeper/zookeeper-1/bin/zkServer.sh start /app/zookeeper/zookeeper-1/conf/zoo.cfg
/app/zookeeper/zookeeper-2/bin/zkServer.sh start /app/zookeeper/zookeeper-2/conf/zoo.cfg
/app/zookeeper/zookeeper-3/bin/zkServer.sh start /app/zookeeper/zookeeper-3/conf/zoo.cfg
# Show the role (leader/follower) of each node: nodes 1/2/3
/app/zookeeper/zookeeper-1/bin/zkServer.sh status /app/zookeeper/zookeeper-1/conf/zoo.cfg
/app/zookeeper/zookeeper-2/bin/zkServer.sh status /app/zookeeper/zookeeper-2/conf/zoo.cfg
/app/zookeeper/zookeeper-3/bin/zkServer.sh status /app/zookeeper/zookeeper-3/conf/zoo.cfg
# Stop the service: nodes 1/2/3
/app/zookeeper/zookeeper-1/bin/zkServer.sh stop /app/zookeeper/zookeeper-1/conf/zoo.cfg
/app/zookeeper/zookeeper-2/bin/zkServer.sh stop /app/zookeeper/zookeeper-2/conf/zoo.cfg
/app/zookeeper/zookeeper-3/bin/zkServer.sh stop /app/zookeeper/zookeeper-3/conf/zoo.cfg
# Ports of the three nodes while ZooKeeper is running:
# client_port = 2181 / 2182 / 2183
# server_port = 2881:3881 / 2882:3882 / 2883:3883
# Only the current leader (node 2 here) listens on its quorum port, so 2881 and 2883 do not appear
netstat -nltp | grep java
# Output:
# [root@CNT7XZKPD02 ~]# netstat -nltp | grep java
# tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 2533/java
# tcp 0 0 0.0.0.0:35581 0.0.0.0:* LISTEN 2533/java
# tcp 0 0 192.168.16.32:2882 0.0.0.0:* LISTEN 2595/java
# tcp 0 0 0.0.0.0:2181 0.0.0.0:* LISTEN 2533/java
# tcp 0 0 0.0.0.0:2182 0.0.0.0:* LISTEN 2595/java
# tcp 0 0 0.0.0.0:45062 0.0.0.0:* LISTEN 2595/java
# tcp 0 0 0.0.0.0:2183 0.0.0.0:* LISTEN 2663/java
# tcp 0 0 0.0.0.0:34312 0.0.0.0:* LISTEN 2663/java
# tcp 0 0 192.168.16.32:3881 0.0.0.0:* LISTEN 2533/java
# tcp 0 0 192.168.16.32:3882 0.0.0.0:* LISTEN 2595/java
# tcp 0 0 192.168.16.32:3883 0.0.0.0:* LISTEN 2663/java
# --------------------------------------------------------------------------------------------------
# Using the ZooKeeper command-line client
# Connect to a server: zkCli.sh -server {server_zookeeper_ip}:{server_client_port}
zkCli.sh -server 127.0.0.1:2181
zkCli.sh -server 127.0.0.1:2182
zkCli.sh -server 127.0.0.1:2183
# or
zkCli.sh -server 192.168.16.32:2181
zkCli.sh -server 192.168.16.32:2182
zkCli.sh -server 192.168.16.32:2183
# Then enter the following commands at the ZooKeeper prompt:
# Create data, path = "/data-test", value = "zookeeper"
# [zk: 127.0.0.1:2182(CONNECTED) 0] create "/data-test" "zookeeper"
# Read the data
# [zk: 127.0.0.1:2181(CONNECTED) 15] get "/data-test"
# zookeeper
# Update the data, path = /data-test, value = "hello zookeeper"
# [zk: 127.0.0.1:2182(CONNECTED) 0] set "/data-test" "hello zookeeper"
# Read the data
# [zk: 127.0.0.1:2182(CONNECTED) 5] get "/data-test"
# hello zookeeper
# Add a child node, path = /data-test/sub-key-01, value = "sub-value-01"
# [zk: 192.168.16.32:2183(CONNECTED) 2] create "/data-test/sub-key-01" "sub-value-01"
# Created /data-test/sub-key-01
# Read the data
# [zk: 192.168.16.32:2183(CONNECTED) 3] get "/data-test/sub-key-01"
# sub-value-01
# [zk: 192.168.16.32:2183(CONNECTED) 4] get "/data-test"
# hello zookeeper
# [zk: 192.168.16.32:2183(CONNECTED) 5] get /data-test
# hello zookeeper
# Or read the same data through another node
# [zk: 127.0.0.1:2181(CONNECTED) 21] get "/data-test/sub-key-01"
# sub-value-01
# [zk: 127.0.0.1:2181(CONNECTED) 22] get "/data-test"
# hello zookeeper
# [zk: 127.0.0.1:2181(CONNECTED) 23] get /data-test
# hello zookeeper
# List the nodes
# [zk: 192.168.16.32:2183(CONNECTED) 6] ls /
# [data-test, zookeeper]
# Add a child node, path = /data-test/sub-key-02, value = "sub-value-02"
# [zk: 192.168.16.32:2183(CONNECTED) 9] create "/data-test/sub-key-02" "sub-value-02"
# Created /data-test/sub-key-02
# [zk: 192.168.16.32:2183(CONNECTED) 10] ls "/data-test"
# [sub-key-01, sub-key-02]
# Delete a single node
# [zk: 127.0.0.1:2181(CONNECTED) 21] delete "/data-test/sub-key-02"
# [zk: 192.168.16.32:2183(CONNECTED) 14] ls "/data-test"
# [sub-key-01]
# Delete a node together with all of its children
# rmr = command in older versions
# [zk: 127.0.0.1:2181(CONNECTED) 21] rmr "/data-test"
# or deleteall = command in newer versions
# [zk: 127.0.0.1:2181(CONNECTED) 21] deleteall "/data-test"
# Check the result: /data-test and its children no longer exist
# [zk: 192.168.16.32:2183(CONNECTED) 25] ls /data-test
# Node does not exist: /data-test

# ====================================================================================================================================================
# step 13: Start ZooKeeper automatically at boot
# Create the zookeeper-${i}.service files as follows
# Switch to the root account
su root
# Nodes 1/2/3
# Pseudo-cluster: create one service file per node (myid=1/2/3)
V_NODE_NUM=3
for ((i=1;i<=${V_NODE_NUM};i++))
do
    echo "${i}, begin the service register : zookeeper-${i}, ...."
    cat > /etc/systemd/system/zookeeper-${i}.service <<EOF
[Unit]
Description=zookeeper-${i} service
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
User=zookeeper
Type=forking
TimeoutSec=0
Environment="JAVA_HOME=/env/jdk/jdk-12.0.2"
ExecStart=/app/zookeeper/zookeeper-${i}/bin/zkServer.sh start /app/zookeeper/zookeeper-${i}/conf/zoo.cfg
# ExecStop=/app/zookeeper/zookeeper-${i}/bin/zkServer.sh stop /app/zookeeper/zookeeper-${i}/conf/zoo.cfg
RestartSec=5
LimitNOFILE=1000000

[Install]
WantedBy=multi-user.target
EOF
    # register service (reload systemd first so it sees the new unit file)
    systemctl daemon-reload
    systemctl enable zookeeper-${i}
    # start service
    systemctl start zookeeper-${i}
    # check service; netstat shows the process as "java", so filter by the node's client port (2181/2182/2183)
    systemctl status zookeeper-${i}
    ps -ef | grep zookeeper-${i}
    netstat -nltp | grep ":218${i}"
    echo "${i}, finish the service register : zookeeper-${i}, ...."
done
# for loop - end

# ====================================================================================================================================================
# step 14: Install zkui, a web UI for ZooKeeper
# 1. Download the source code from the git URL below, then build it with Maven (for example from Eclipse) to get the jar
# Version = zkui-2.0-SNAPSHOT
# SOURCE = https://github.com/DeemOpen/zkui.git
# git clone https://github.com/DeemOpen/zkui.git
# 2. Create the zkui installation directory on the Linux server
mkdir -p /app/zkui/zkui-2.0
# Copy zkui-2.0-SNAPSHOT-jar-with-dependencies.jar into this directory
ls -al /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar
# 3. Create the zkui configuration file as follows
# Note: zkui does not have to run on the same server as ZooKeeper.
cat > /app/zkui/zkui-2.0/config.cfg <<'EOF'
#Server Port
serverPort=19090
#Comma separated list of all the zookeeper servers
zkServer=CNT7XZKPD02:2181,CNT7XZKPD02:2182,CNT7XZKPD02:2183
#Http path of the repository. Ignore if you don't intend to upload files from repository.
scmRepo=http://CNT7XZKPD02:2181/@rev1=
#Path appended to the repo url. Ignore if you don't intend to upload files from repository.
scmRepoPath=//appconfig.txt
#If set to true then ldap authentication is used, else userSet is used for authentication.
ldapAuth=false
ldapDomain=mycompany,mydomain
#ldap authentication url. Ignore if using file based authentication.
ldapUrl=ldap://<ldap_host>:<ldap_port>/dc=mycom,dc=com
#Specific roles for ldap authenticated users. Ignore if using file based authentication.
ldapRoleSet={"users": [{ "username":"domain\user1" , "role": "ADMIN" }]}
userSet = {"users": [{ "username":"admin" , "password":"password","role": "ADMIN" },{ "username":"appconfig" , "password":"password#123","role": "USER" }]}
#Set to prod in production and dev in local. Setting to dev will clear history each time.
env=prod
jdbcClass=org.h2.Driver
jdbcUrl=jdbc:h2:zkui
jdbcUser=root
jdbcPwd=password
#If you want to use mysql db to store history then comment the h2 db section.
#jdbcClass=com.mysql.jdbc.Driver
#jdbcUrl=jdbc:mysql://localhost:3306/zkui
#jdbcUser=root
#jdbcPwd=password
loginMessage=Please login using admin/manager or appconfig/appconfig.
#session timeout 5 mins/300 secs.
sessionTimeout=300
#Default 5 seconds to keep short lived zk sessions. If you have large data then the read will take more than 30 seconds so increase this accordingly.
#A bigger zkSessionTimeout means the connection will be held longer and resource consumption will be high.
zkSessionTimeout=5
#Block PWD exposure over rest call.
blockPwdOverRest=false
#ignore rest of the props below if https=false.
https=false
keystoreFile=/home/user/keystore.jks
keystorePwd=password
keystoreManagerPwd=password
# The default ACL to use for all creation of nodes. If left blank, then all nodes will be universally accessible
# Permissions are based on single character flags: c (Create), r (read), w (write), d (delete), a (admin), * (all)
# For example defaultAcl={"acls": [{"scheme":"ip", "id":"192.168.1.192", "perms":"*"}, {"scheme":"ip", "id":"192.168.1.0/24", "perms":"r"}]}
defaultAcl=
# Set X-Forwarded-For to true if zkui is behind a proxy
X-Forwarded-For=false
EOF
# 4. Grant the zookeeper account access to the installation directory
ls -al /app/zkui/zkui-2.0/ &&
chmod -R 775 /app/zkui/zkui-2.0/ &&
chown -R zookeeper:ops_install /app/zkui/zkui-2.0/ &&
ls -al /app/zkui/zkui-2.0/
# 5. Start zkui (it runs in the foreground). zkui normally reads config.cfg from the working directory,
# so start it from the installation directory
cd /app/zkui/zkui-2.0 &&
java -Xms128m -Xmx512m -XX:MaxMetaspaceSize=256m -jar /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar
# 6. Start zkui automatically at boot
# java -jar stays in the foreground, so the unit uses Type=simple; with Type=forking and TimeoutSec=0,
# "systemctl start" would wait forever for a fork that never happens
cat > /etc/systemd/system/zkui.service <<'EOF'
[Unit]
Description=zkui-2.0 service
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
User=zookeeper
Type=simple
TimeoutSec=0
WorkingDirectory=/app/zkui/zkui-2.0
Environment="ZKUI_HOME=/app/zkui/zkui-2.0/"
ExecStart=/env/jdk/jdk-12.0.2/bin/java -Xms128m -Xmx512m -XX:MaxMetaspaceSize=256m -jar /app/zkui/zkui-2.0/zkui-2.0-SNAPSHOT-jar-with-dependencies.jar
RestartSec=5
LimitNOFILE=1000000

[Install]
WantedBy=multi-user.target
EOF
# Register the service
systemctl daemon-reload
systemctl enable zkui
# Start the service
systemctl start zkui
# Check the service
systemctl status zkui
netstat -nltp | grep 19090
ps -ef | grep zkui
# ====================================================================================================================================================
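
Step 10 edits the three zoo.cfg files by hand, and the files differ only in the node number. As a minimal sketch, the same settings could be generated in a loop. The function name `render_zoo_cfg` is hypothetical; the host name, paths and port scheme (quorum 288x, election 388x, client 218x) are taken from the walkthrough above. It prints only the settings that step 10 changes, so the remaining values (tickTime, initLimit, syncLimit) still come from zoo_sample.cfg.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of the original script.
# Prints the zoo.cfg settings for one pseudo-cluster node.
#   $1 = node number, $2 = total number of nodes, $3 = host name (optional)
render_zoo_cfg() {
    local node=$1 total=$2 host=${3:-CNT7XZKPD02}
    local n
    echo "dataDir=/data/zookeeper/zookeeper-${node}"
    echo "dataLogDir=/log/zookeeper/zookeeper-${node}"
    # Every node lists all servers; on a single host each server needs its own port pair
    for ((n = 1; n <= total; n++)); do
        echo "server.${n}=${host}:$((2880 + n)):$((3880 + n))"
    done
    # Client ports are 2181, 2182, 2183, ...
    echo "clientPort=$((2180 + node))"
}

# Show the settings for node 2 of a 3-node pseudo-cluster
render_zoo_cfg 2 3
```

Comparing the printed lines with the hand-edited file of each node is a quick way to catch a wrong clientPort or a dataDir that points at another node's directory.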

Finally, the screenshots are shown below.

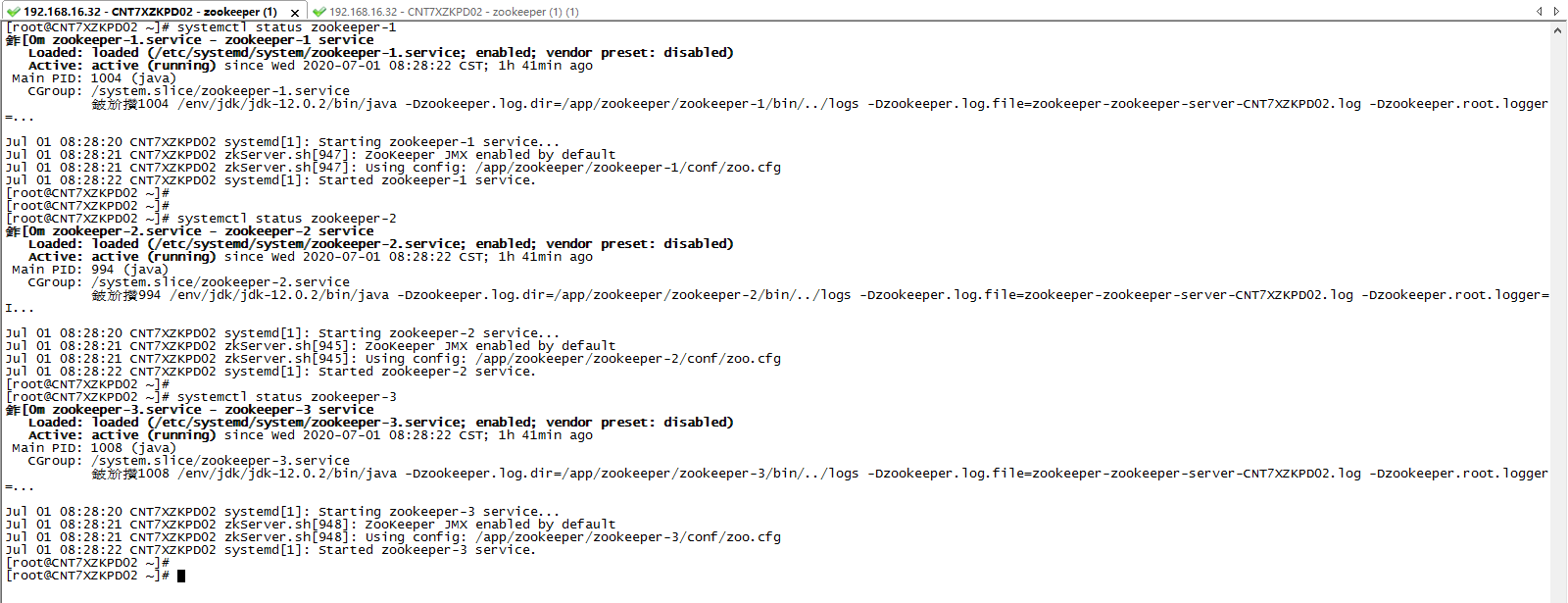

1. ZooKeeper, run results:

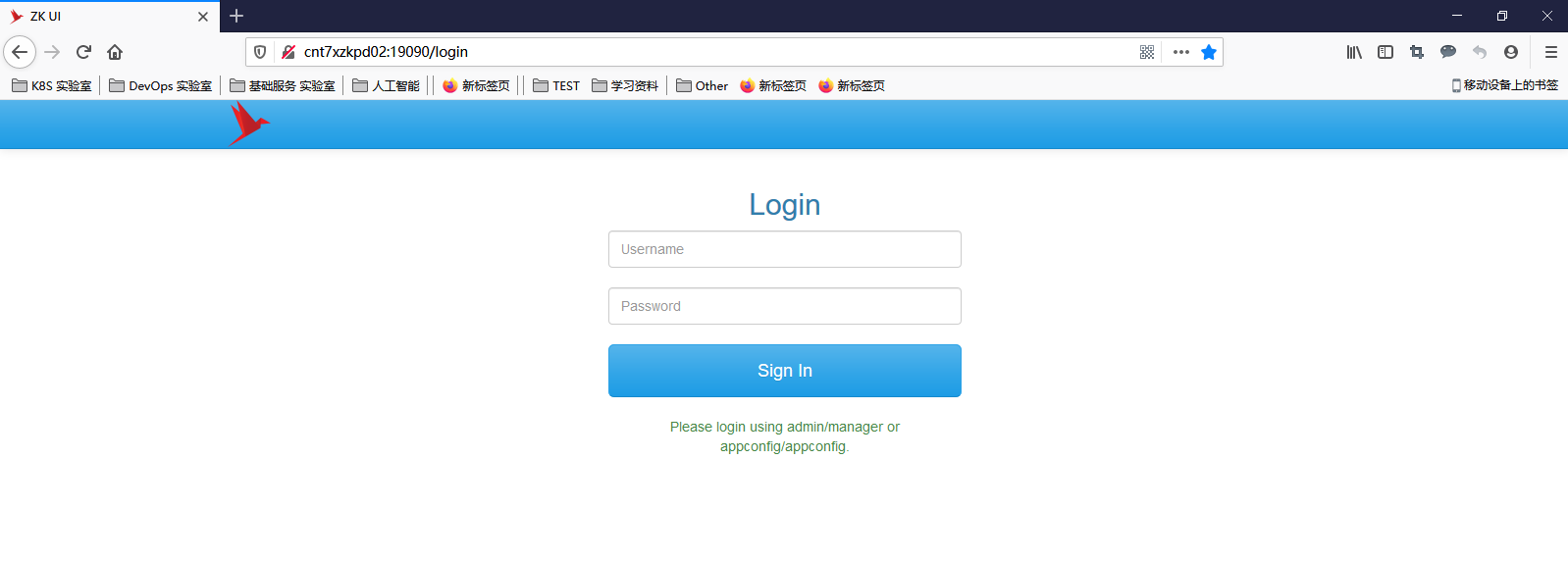

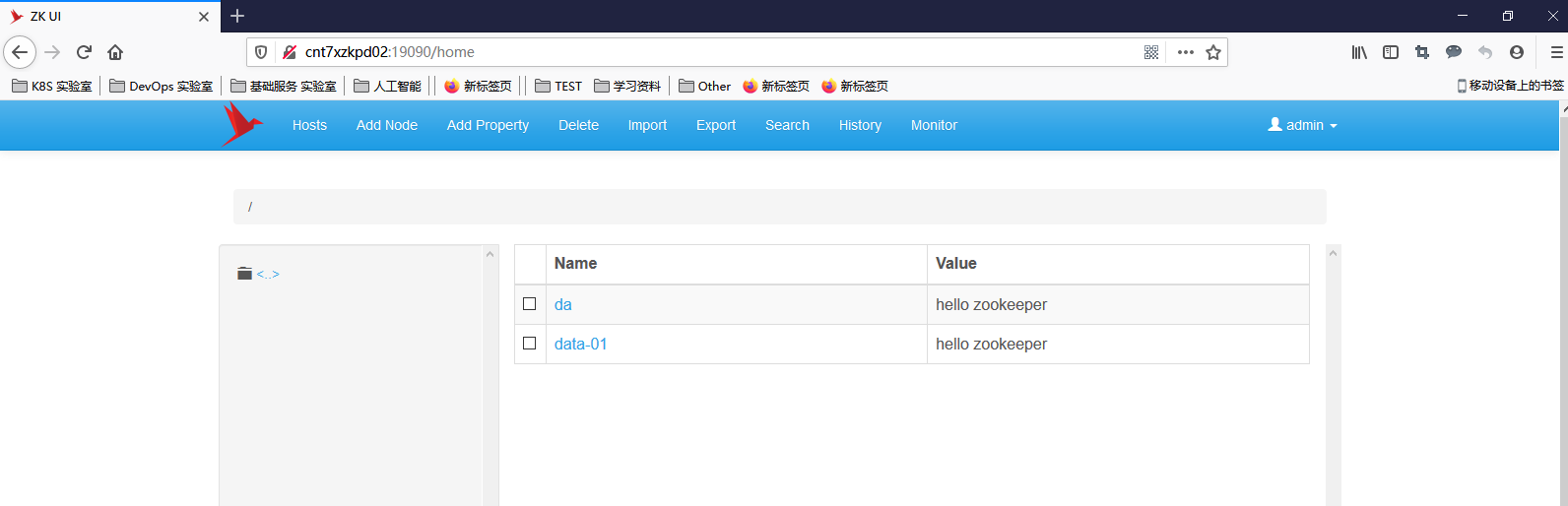

2. zkui, run results: